Retrieval-augmented generation (RAG) enhances language models by integrating external knowledge sources at generation time, improving the accuracy and relevance of the output. Parameter-efficient tuning adapts a pre-trained model by training only a small subset of its parameters, or a small number of added ones, which significantly reduces computational cost and training time. The sections below compare the two approaches and explain how they can be combined.

Why it is important

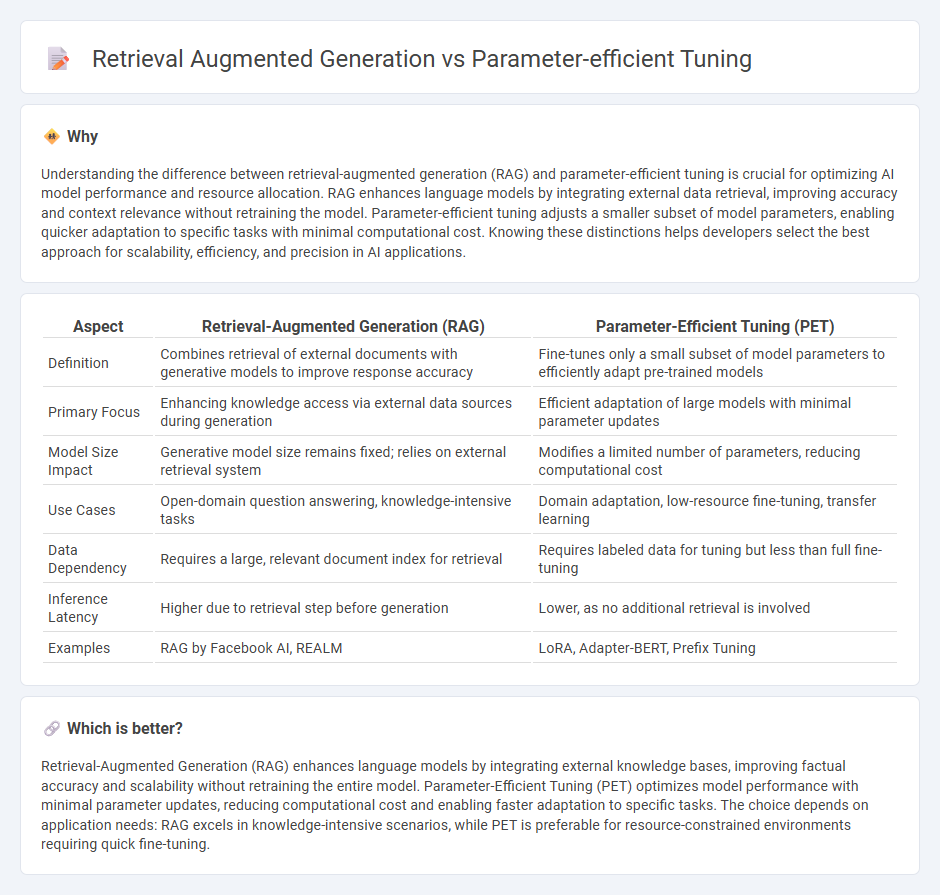

Understanding the difference between retrieval-augmented generation (RAG) and parameter-efficient tuning is crucial for optimizing AI model performance and resource allocation. RAG enhances language models by retrieving external data at inference time, improving accuracy and context relevance without retraining the model. Parameter-efficient tuning updates only a small subset of model parameters, enabling quicker adaptation to specific tasks at low computational cost. Knowing these distinctions helps developers select the best approach for scalability, efficiency, and precision in AI applications.

Comparison Table

| Aspect | Retrieval-Augmented Generation (RAG) | Parameter-Efficient Tuning (PET) |
|---|---|---|
| Definition | Combines retrieval of external documents with generative models to improve response accuracy | Fine-tunes only a small subset of model parameters to efficiently adapt pre-trained models |
| Primary Focus | Enhancing knowledge access via external data sources during generation | Efficient adaptation of large models with minimal parameter updates |
| Model Size Impact | Generative model size remains fixed; relies on external retrieval system | Modifies a limited number of parameters, reducing computational cost |
| Use Cases | Open-domain question answering, knowledge-intensive tasks | Domain adaptation, low-resource fine-tuning, transfer learning |
| Data Dependency | Requires a large, relevant document index for retrieval | Requires labeled data for tuning but less than full fine-tuning |
| Inference Latency | Higher due to retrieval step before generation | Lower, as no additional retrieval is involved |
| Examples | RAG by Facebook AI, REALM | LoRA, Adapter-BERT, Prefix Tuning |

Which is better?

Retrieval-Augmented Generation (RAG) enhances language models by integrating external knowledge bases, improving factual accuracy and scalability without retraining the entire model. Parameter-Efficient Tuning (PET) optimizes model performance with minimal parameter updates, reducing computational cost and enabling faster adaptation to specific tasks. The choice depends on application needs: RAG excels in knowledge-intensive scenarios, while PET is preferable for resource-constrained environments requiring quick fine-tuning.

Connection

Retrieval-augmented generation (RAG) enhances language models by integrating external knowledge bases during the generation process, improving accuracy and relevance. Parameter-efficient tuning techniques, such as adapters or LoRA, fine-tune pre-trained models with minimal parameter updates, preserving base model knowledge while adapting to specific tasks. Combining RAG with parameter-efficient tuning enables scalable and adaptable natural language processing systems that leverage extensive external data without exhaustive retraining.
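To make "minimal parameter updates" concrete, the following is a minimal sketch of the LoRA idea in plain Python. It assumes a single frozen weight matrix `W` of shape d x k and two small trainable factors `B` (d x r) and `A` (r x k). The helper names, the `alpha` scaling factor, and the example sizes are illustrative assumptions, not the API of any particular library.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha, r):
    """Return W + (alpha / r) * (B @ A).

    w is the frozen d x k base weight; b (d x r) and a (r x k) are the
    only matrices that would be trained. The base weight is never modified.
    """
    delta = matmul(b, a)          # low-rank update, shape d x k
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def full_params(d, k):
    """Parameters updated when fine-tuning the whole d x k matrix."""
    return d * k


def lora_trainable_params(d, k, r):
    """Parameters updated by the low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)
```

Under these assumptions, a 4096 x 4096 matrix has 16,777,216 parameters, while rank-8 factors add only 65,536 trainable ones (about 0.39%). Because the base matrix is left untouched, the same frozen model can still sit behind a retrieval step, which is what makes the two techniques straightforward to combine.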

Key Terms

Adapter Layers

Adapter layers enable parameter-efficient tuning by inserting small, trainable modules into a pretrained model whose original weights stay frozen, significantly reducing the number of parameters updated during fine-tuning. Retrieval-augmented generation (RAG) instead incorporates external knowledge through a retrieval mechanism. It does not require updating the model's weights, but it adds integration complexity in the form of a document index and a retrieval step. Adapter layers can also be used to tune the generator of a retrieval-augmented architecture at low cost.
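The bottleneck idea behind adapter layers can be sketched as follows. This is a simplified illustration in plain Python, assuming a hidden vector of size d, a down-projection to a smaller size m, a ReLU, an up-projection back to d, and a residual connection. Biases are omitted for brevity, and all function names are hypothetical.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]


def adapter_forward(h, w_down, w_up):
    """Apply a bottleneck adapter: h + W_up @ relu(W_down @ h).

    h is a hidden vector of size d, w_down is m x d, w_up is d x m.
    Only w_down and w_up would be trained; the surrounding model is frozen.
    """
    z = relu(matvec(w_down, h))    # project down to m dimensions
    up = matvec(w_up, z)           # project back up to d dimensions
    return [h_i + u_i for h_i, u_i in zip(h, up)]  # residual connection


def adapter_param_count(d, m):
    """Trainable weights in one adapter (two projections, no biases)."""
    return 2 * d * m
```

With a hidden size of 768 and a bottleneck of 64 (both assumed values), one adapter adds 98,304 trainable weights. If the up-projection starts at zero, the adapter initially passes the hidden vector through unchanged, so the pretrained behavior is preserved at the start of tuning.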

Prompt Tuning

Prompt tuning is a parameter-efficient technique that trains only a small set of continuous prompt vectors, prepended to the model's input, while the weights of the large language model stay frozen. This minimizes computational overhead and memory usage when adapting a model to a specific task. In contrast, retrieval-augmented generation (RAG) enhances model outputs by dynamically retrieving relevant external documents, improving accuracy and factuality without modifying model weights.
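As a rough sketch of how prompt tuning works, the snippet below prepends a handful of trainable "virtual token" vectors to the frozen token embeddings and applies a gradient step to those vectors only. It is plain Python with hypothetical function names. The gradients are assumed to come from a training loop that is not shown.

```python
def prepend_soft_prompt(soft_prompt, token_embeddings):
    """Return the model input: trainable prompt vectors followed by
    the (frozen) embeddings of the real input tokens."""
    return [list(v) for v in soft_prompt] + [list(v) for v in token_embeddings]


def sgd_step(soft_prompt, grads, lr):
    """Update only the soft prompt; no model weight is touched."""
    return [
        [p - lr * g for p, g in zip(p_row, g_row)]
        for p_row, g_row in zip(soft_prompt, grads)
    ]


def soft_prompt_param_count(num_virtual_tokens, d_model):
    """Number of trainable values in the soft prompt."""
    return num_virtual_tokens * d_model
```

For example, 20 virtual tokens with an embedding size of 768 (assumed values) give 15,360 trainable values, regardless of how large the frozen model is.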

External Knowledge Retrieval

Parameter-efficient tuning minimizes model updates by adjusting a small subset of parameters, enhancing adaptability without full retraining, while retrieval-augmented generation (RAG) integrates external knowledge sources dynamically during inference to improve response accuracy and relevance. RAG systems leverage large-scale document corpora or databases, retrieving pertinent information in real time to complement the language model's generation capabilities, crucial for tasks requiring up-to-date or specialized knowledge. Explore how combining parameter-efficient tuning with advanced retrieval methods can optimize external knowledge integration and boost NLP system performance.
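The retrieve-then-generate flow described above can be sketched in a few lines. This example is a toy, assuming a tiny in-memory corpus and bag-of-words cosine similarity in place of the dense retriever and vector index a production RAG system would typically use. The corpus, the function names, and the prompt layout are all illustrative, and the final call to a language model is left out.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, corpus, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True
    )
    return ranked[:k]


def build_prompt(query, corpus, k=2):
    """Place the retrieved documents ahead of the question; the resulting
    string would be passed to an unmodified language model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


CORPUS = [
    "LoRA adds low-rank matrices to frozen weights.",
    "RAG retrieves documents before generation.",
    "Prefix tuning prepends trainable vectors to each layer.",
]
```

Calling `build_prompt("What does RAG do before generation?", CORPUS, k=1)` places the second corpus entry in the context. Updating the system's knowledge then means editing `CORPUS`, not retraining the model.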

