Federated learning trains models directly on the devices that hold the data, so raw data stays local and only model updates are sent to a coordinating server. Traditional cloud computing instead moves data to centralized servers for storage and analysis. Keeping data on the device reduces the volume of raw data transferred and limits the exposure of sensitive information, while the shared model aims to stay close in accuracy to one trained centrally. Both approaches shape how data is managed across industries.

Why it is important

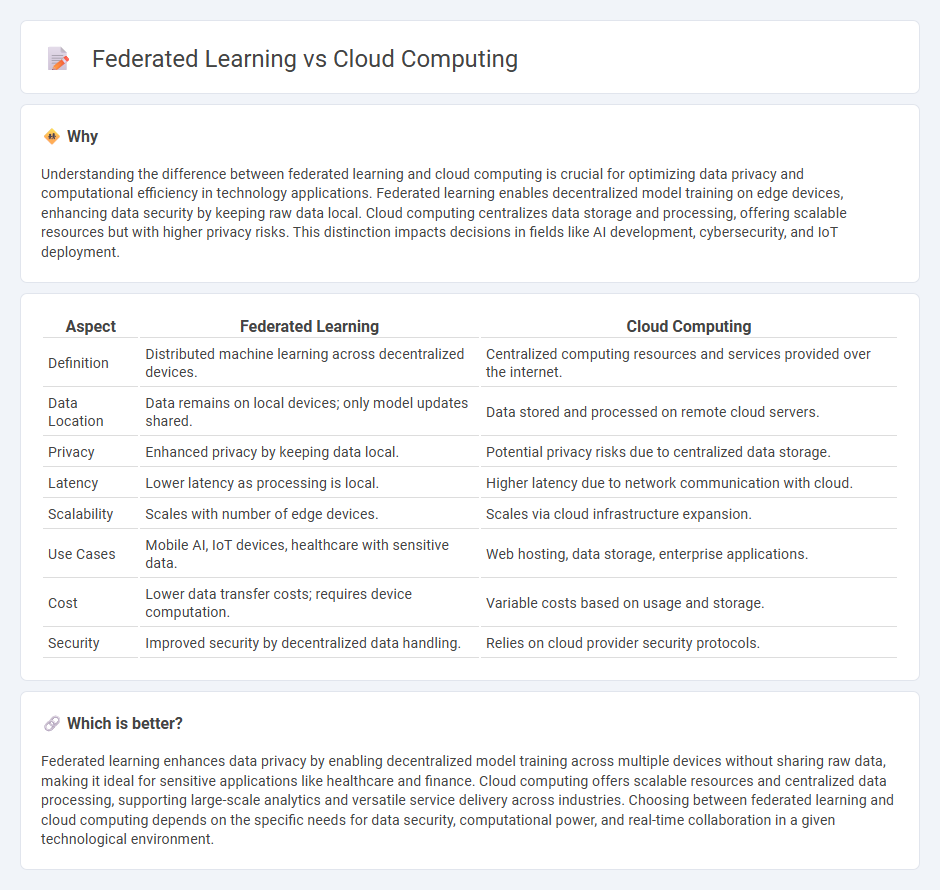

Understanding the difference between federated learning and cloud computing is crucial for optimizing data privacy and computational efficiency in technology applications. Federated learning enables decentralized model training on edge devices, enhancing data security by keeping raw data local. Cloud computing centralizes data storage and processing, offering scalable resources but with higher privacy risks. This distinction impacts decisions in fields like AI development, cybersecurity, and IoT deployment.

Comparison Table

| Aspect | Federated Learning | Cloud Computing |
|---|---|---|
| Definition | Distributed machine learning across decentralized devices. | Centralized computing resources and services provided over the internet. |
| Data Location | Data remains on local devices; only model updates shared. | Data stored and processed on remote cloud servers. |
| Privacy | Enhanced privacy by keeping data local. | Potential privacy risks due to centralized data storage. |
| Latency | Lower latency as processing is local. | Higher latency due to network communication with cloud. |
| Scalability | Scales with number of edge devices. | Scales via cloud infrastructure expansion. |
| Use Cases | Mobile AI, IoT devices, healthcare with sensitive data. | Web hosting, data storage, enterprise applications. |
| Cost | Lower data transfer costs; requires device computation. | Variable costs based on usage and storage. |
| Security | Improved security by decentralized data handling. | Relies on cloud provider security protocols. |
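The data transfer entry in the Cost row can be made concrete with some rough arithmetic. The sketch below is only an illustration: the device count, data size, model size, and number of rounds are hypothetical figures, and it counts uploads only, ignoring the download of the global model in each round.

```python
def centralized_upload_bytes(num_devices: int, raw_bytes_per_device: int) -> int:
    """Cloud approach: every device uploads its raw data once."""
    return num_devices * raw_bytes_per_device


def federated_upload_bytes(num_devices: int, model_bytes: int, rounds: int) -> int:
    """Federated approach: every device uploads one model update per round."""
    return num_devices * model_bytes * rounds


# Hypothetical figures, chosen only for illustration.
MIB = 1024 ** 2
devices = 1_000
raw_per_device = 500 * MIB   # raw data held on each device
model_size = 5 * MIB         # size of one model update
rounds = 20                  # number of training rounds

central = centralized_upload_bytes(devices, raw_per_device)
federated = federated_upload_bytes(devices, model_size, rounds)

print(f"centralized: {central / 1024 ** 3:.1f} GiB uploaded")
print(f"federated:   {federated / 1024 ** 3:.1f} GiB uploaded")
```

With these assumed numbers the federated total is smaller, but the result flips when the model is large relative to the raw data or when many rounds are needed, so the saving is not guaranteed.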

Which is better?

Federated learning enhances data privacy by enabling decentralized model training across multiple devices without sharing raw data, making it ideal for sensitive applications like healthcare and finance. Cloud computing offers scalable resources and centralized data processing, supporting large-scale analytics and versatile service delivery across industries. Choosing between federated learning and cloud computing depends on the specific needs for data security, computational power, and real-time collaboration in a given technological environment.

Connection

Federated learning leverages cloud computing infrastructure to enable decentralized model training across multiple devices while preserving data privacy. Cloud platforms provide scalable storage and computational resources, facilitating efficient aggregation of local models into a global model. This synergy enhances data security and reduces latency in AI applications by minimizing the need for centralized data transfer.
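The aggregation of local models into a global model can be sketched as a weighted average. The example assumes one common scheme, federated averaging, in which each client's weights count in proportion to the number of samples it trained on; the function name `federated_average` and the sample data are hypothetical, and real deployments typically work with tensors rather than plain lists.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Combine client weight vectors into one global vector.

    Each update is (weights, num_samples); clients with more local
    samples contribute proportionally more to the average.
    """
    if not updates:
        raise ValueError("need at least one client update")
    total_samples = sum(n for _, n in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_samples
    return global_weights


# Two hypothetical clients: the second trained on three times as much data.
client_updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_model = federated_average(client_updates)
print(global_model)
```

Only the weight vectors and sample counts reach the aggregator here; the training data behind them never appears in the function's inputs.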

Key Terms

Data Privacy

Cloud computing centralizes data storage and processing, which concentrates sensitive information in one place and raises concerns about exposure to unauthorized parties. Federated learning trains models on local devices and shares only model updates, so raw data does not leave its source.

Distributed Architecture

Cloud computing centralizes data processing in large-scale data centers, enabling efficient resource management but raising concerns about data privacy and bandwidth limitations. Federated learning employs a distributed architecture in which multiple edge devices each train a machine learning model on their own data and contribute the result to a shared model, minimizing data transfer and keeping sensitive data decentralized.
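The local training that each edge device performs can be sketched as follows. This is a minimal illustration that assumes a linear model trained by plain gradient descent on mean squared error; the function `local_update`, its parameters, and the sample data are hypothetical stand-ins for whatever model and optimizer a real system would use.

```python
from typing import List, Sequence, Tuple


def local_update(
    global_weights: Sequence[float],
    features: Sequence[Sequence[float]],
    targets: Sequence[float],
    lr: float = 0.1,
    epochs: int = 5,
) -> Tuple[List[float], int]:
    """Train on one device's data and return (new_weights, num_samples).

    Only the returned weights and the sample count would be sent onward;
    `features` and `targets` never leave this function.
    """
    n = len(features)
    if n == 0:
        raise ValueError("device has no local data")

    w = list(global_weights)  # copy so the caller's weights are not mutated
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(features, targets):
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2 * error * xj / n  # gradient of mean squared error
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w, n


# Hypothetical on-device data following y = 2x.
device_features = [[1.0], [2.0], [3.0]]
device_targets = [2.0, 4.0, 6.0]
new_weights, sample_count = local_update([0.0], device_features, device_targets)
print(new_weights, sample_count)
```

The sample count is returned alongside the weights because an aggregator commonly uses it to decide how much each device's result should count.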

Model Aggregation

In a conventional cloud workflow there is little to aggregate: raw data is collected on centralized servers and a single model is trained there, which yields a robust global model but raises concerns about data privacy and bandwidth. In federated learning, training happens locally on edge devices, and a coordinating server, often hosted in the cloud, aggregates only the resulting model parameters into a global model. Raw data stays on-device, and secure aggregation protocols can further limit what the server learns from any individual update.
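One way updates can be combined without revealing any single device's contribution is pairwise additive masking, sketched below. This is an assumed example of a secure protocol, not one the text names. It assumes updates have already been quantized to non-negative integers whose sum stays below `MODULUS`, uses a single seeded generator as a stand-in for secrets that each pair of clients would agree on privately, and ignores clients dropping out mid-round.

```python
import random
from typing import Dict, List

MODULUS = 2 ** 32  # all arithmetic is done modulo this value


def pairwise_masks(client_ids: List[int], dim: int, seed: int) -> Dict[int, List[int]]:
    """Build one mask per client so that all masks sum to zero mod MODULUS."""
    rng = random.Random(seed)  # stand-in for pairwise shared secrets
    masks = {cid: [0] * dim for cid in client_ids}
    for idx, a in enumerate(client_ids):
        for b in client_ids[idx + 1:]:
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                # Client a adds the shared value, client b subtracts it.
                masks[a][k] = (masks[a][k] + shared[k]) % MODULUS
                masks[b][k] = (masks[b][k] - shared[k]) % MODULUS
    return masks


def mask_update(update: List[int], mask: List[int]) -> List[int]:
    """What a client sends: its update hidden behind its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Sum the masked updates; the masks cancel, leaving the true sum."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]


# Three hypothetical clients with quantized two-element updates.
updates = {1: [3, 10], 2: [5, 20], 3: [7, 30]}
masks = pairwise_masks(list(updates), dim=2, seed=0)
masked = [mask_update(updates[c], masks[c]) for c in updates]
total = aggregate(masked)
print(total)  # [15, 60], the element-wise sum of the raw updates
```

Each masked vector looks random on its own, yet their sum equals the sum of the raw updates because every shared value is added once and subtracted once.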
