Edge inference runs trained models directly on devices, enabling real-time analytics and reducing latency while enhancing privacy by minimizing data transmission to central servers. Federated learning trains machine learning models across many decentralized devices and aggregates their model updates without sharing raw data, preserving user privacy and improving model robustness. The sections below compare the two approaches and explain how they fit together in modern AI applications.

Why it is important

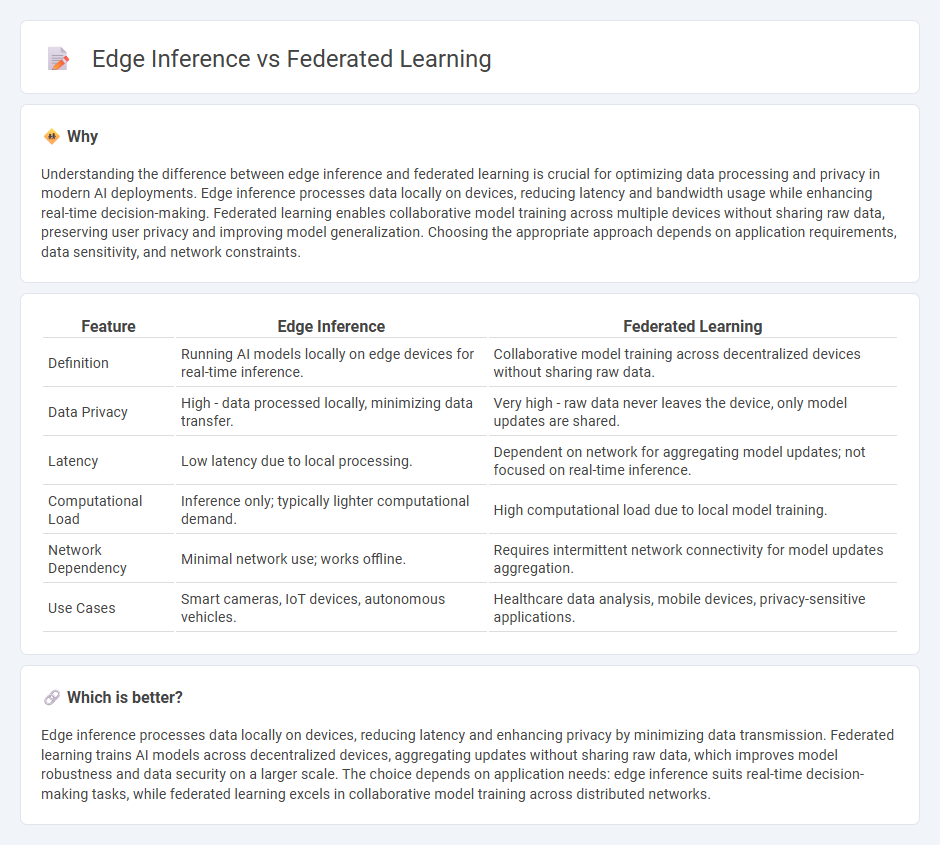

Understanding the difference between edge inference and federated learning is crucial for optimizing data processing and privacy in modern AI deployments. Edge inference processes data locally on devices, reducing latency and bandwidth usage while enhancing real-time decision-making. Federated learning enables collaborative model training across multiple devices without sharing raw data, preserving user privacy and improving model generalization. Choosing the appropriate approach depends on application requirements, data sensitivity, and network constraints.

Comparison Table

| Feature | Edge Inference | Federated Learning |
|---|---|---|
| Definition | Running trained AI models locally on edge devices for real-time inference. | Collaborative model training across decentralized devices without sharing raw data. |
| Data Privacy | High: data is processed locally, minimizing data transfer. | Very high: raw data never leaves the device; only model updates are shared. |
| Latency | Low, because processing happens on the device. | Depends on the network for aggregating model updates; not aimed at real-time inference. |
| Computational Load | Inference only; typically a lighter computational demand. | Higher, because each device trains the model locally. |
| Network Dependency | Minimal network use; can work offline. | Requires intermittent connectivity to send model updates for aggregation and receive the updated global model. |
| Use Cases | Smart cameras, IoT devices, autonomous vehicles. | Healthcare data analysis, mobile devices, privacy-sensitive applications. |

Which is better?

Neither approach is universally better, because they solve different problems. Edge inference runs models locally on devices, reducing latency and enhancing privacy by minimizing data transmission. Federated learning trains AI models across decentralized devices and aggregates their updates without sharing raw data, so a model can learn from many users' data while that data stays on each device. The choice depends on application needs: edge inference suits real-time decision-making, while federated learning suits collaborative model training across distributed networks.

Connection

Edge inference enables real-time data processing directly on devices, minimizing latency and preserving user privacy by avoiding cloud transmission. Federated learning complements this by training AI models across multiple edge devices without sharing raw data, enhancing data security and scalability. In a typical combination, federated learning produces the shared global model and edge inference runs that model on each device. Together, they form a decentralized AI approach that relies on local computation for faster, privacy-conscious applications.
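To make that division of labor concrete, here is a minimal sketch. It assumes a tiny logistic-regression model whose weights have already been produced by federated training. The weight values, the JSON payload, and the names `load_global_model` and `predict_on_device` are hypothetical. The device only downloads model parameters and classifies its own readings locally, so no raw data is sent anywhere.

```python
import json
import math

# Hypothetical payload a server might publish after federated training:
# it contains only model parameters, never any client's raw data.
GLOBAL_MODEL_JSON = '{"weights": [0.8, -0.5], "bias": -0.1}'


def load_global_model(payload: str) -> tuple[list[float], float]:
    """Parse the downloaded global model into (weights, bias)."""
    model = json.loads(payload)
    return model["weights"], model["bias"]


def predict_on_device(weights: list[float], bias: float, features: list[float]) -> float:
    """Edge inference: a logistic-regression forward pass computed locally."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    weights, bias = load_global_model(GLOBAL_MODEL_JSON)
    # Sensor readings stay on the device; predictions are used locally.
    local_readings = [[1.0, 0.2], [0.1, 1.5]]
    for reading in local_readings:
        p = predict_on_device(weights, bias, reading)
        print(f"{reading} -> p={p:.3f}")
```

Inference here is a single forward pass with fixed weights. The device never needs a network connection after the model is downloaded.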

Key Terms

Federated Learning

Federated learning enables decentralized model training across multiple edge devices and preserves data privacy by keeping raw data local. It contrasts with edge inference, where pre-trained models are deployed on edge devices to make immediate predictions without further training.

Decentralized Training

Federated learning enables decentralized training. Multiple edge devices collaboratively train a shared model without exchanging raw data, which preserves privacy and requires less communication than uploading entire datasets. Edge inference deploys pre-trained models locally for real-time decision-making. Federated learning, by contrast, updates the shared model over repeated rounds of training across distributed nodes, improving accuracy and adaptability as new local data arrives.
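Here is a minimal sketch of one client's role in decentralized training. It assumes a one-feature linear model y ≈ w·x + b fitted by plain gradient descent on mean squared error. The `Client` class and its `local_update` method are hypothetical illustrations, not any framework's API. The private dataset stays inside the client. The only things `local_update` returns are the updated parameters and the local sample count, which is what a server needs for aggregation.

```python
from dataclasses import dataclass


@dataclass
class Client:
    """Hypothetical device holding private (x, y) samples that are never returned."""
    data: list[tuple[float, float]]

    def local_update(self, w: float, b: float, lr: float = 0.1,
                     epochs: int = 50) -> tuple[float, float, int]:
        """Start from the global (w, b), run full-batch gradient descent on local
        data, and return only (new_w, new_b, num_samples); raw data stays here."""
        n = len(self.data)
        for _ in range(epochs):
            # Gradients of the loss (1 / (2n)) * sum((w*x + b - y)**2).
            grad_w = sum((w * x + b - y) * x for x, y in self.data) / n
            grad_b = sum((w * x + b - y) for x, y in self.data) / n
            w -= lr * grad_w
            b -= lr * grad_b
        return w, b, n


if __name__ == "__main__":
    # Two devices whose private data both follow y = 2x + 1.
    clients = [Client([(0.0, 1.0), (1.0, 3.0)]),
               Client([(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)])]
    global_w, global_b = 0.0, 0.0
    for c in clients:
        w, b, n = c.local_update(global_w, global_b)
        print(f"update from {n} samples: w={w:.3f}, b={b:.3f}")
```

Each client starts from the same global parameters. The resulting `(w, b, n)` tuples are what a server would average in the next step of a federated round.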

Model Aggregation

Federated learning relies on model aggregation. A server collects locally trained models from distributed devices and averages them, often weighting each by its number of training samples, to produce a global model while the raw data stays on the devices. Edge inference, by contrast, processes data on local devices without centralized model updates, emphasizing real-time decision-making over collaborative training. The choice of aggregation technique directly affects how well a decentralized AI system performs.
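Here is a sketch of the sample-weighted averaging used by Federated Averaging (FedAvg), one common aggregation rule. Each client's parameter vector counts in proportion to its local sample count n_k, so the global parameters are the sum over clients of (n_k / n) · w_k, where n is the total number of samples. The function name `fed_avg` and the plain-list parameter representation are assumptions for illustration. Production systems aggregate full model tensors and may use other rules.

```python
def fed_avg(updates: list[tuple[list[float], int]]) -> list[float]:
    """Federated Averaging: combine client parameter vectors, weighting each
    by the number of samples it was trained on."""
    if not updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    if any(len(params) != dim for params, _ in updates):
        raise ValueError("all parameter vectors must have the same length")
    # Coordinate-wise weighted mean across clients.
    return [sum(params[i] * n for params, n in updates) / total
            for i in range(dim)]


if __name__ == "__main__":
    # Hypothetical updates: (parameters, local sample count) from three devices.
    updates = [([1.0, 0.0], 100), ([3.0, 2.0], 300), ([2.0, 4.0], 100)]
    print(fed_avg(updates))  # prints [2.4, 2.0]
```

Weighting by sample count stops a device with only a few samples from pulling the global model as hard as one with thousands. When all counts are equal, the rule reduces to a plain mean.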

dowidth.com