Edge intelligence runs AI workloads directly on edge devices, enabling real-time data processing and lower latency for applications such as autonomous vehicles and smart sensors. Neural Processing Units (NPUs) are specialized hardware designed to accelerate deep learning and other neural network computations, delivering higher performance at lower power consumption than general-purpose processors on AI tasks. Together, edge intelligence and NPUs improve speed, efficiency, and local data analysis.

Why it is important

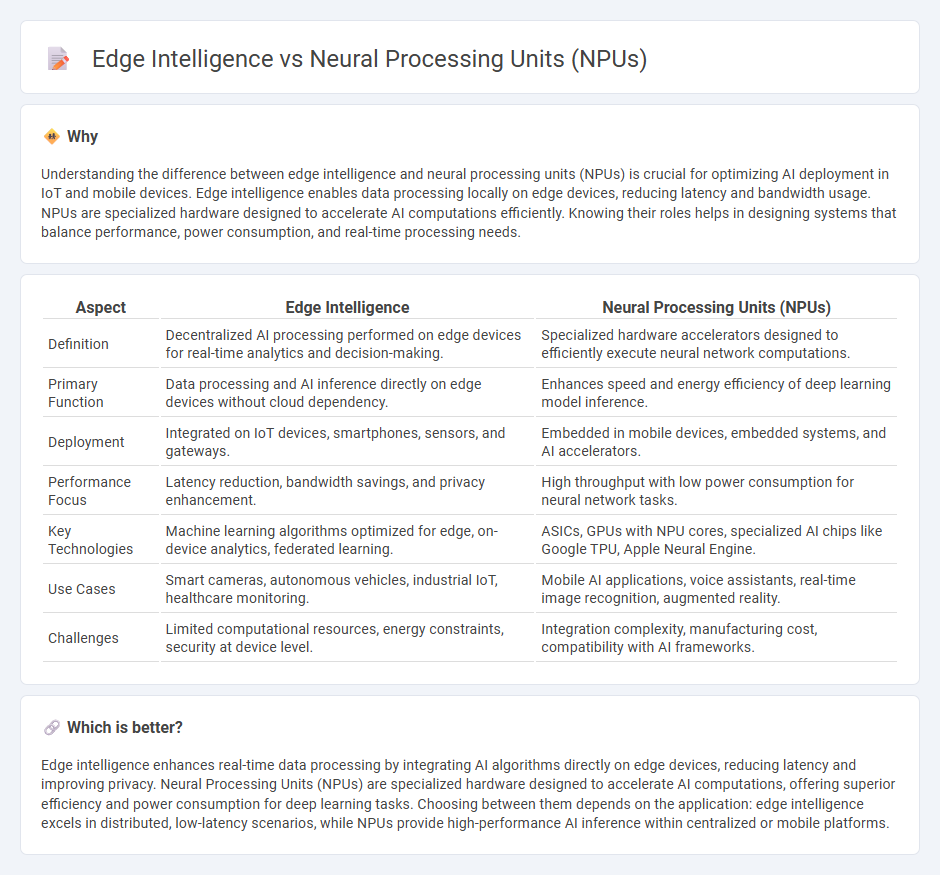

Understanding the difference between edge intelligence and neural processing units (NPUs) is crucial for optimizing AI deployment in IoT and mobile devices. Edge intelligence enables data processing locally on edge devices, reducing latency and bandwidth usage. NPUs are specialized hardware designed to accelerate AI computations efficiently. Knowing their roles helps in designing systems that balance performance, power consumption, and real-time processing needs.
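The bandwidth point can be made concrete with a back-of-the-envelope estimate. The sketch below compares the uplink traffic of a device that uploads raw data for cloud inference with one that runs inference locally and sends only results. The camera resolution, frame rate, and 64-byte result size are hypothetical values chosen for illustration, not measurements.

```python
def uplink_bytes_per_second(payload_bytes: int, messages_per_second: int) -> int:
    """Average uplink traffic generated by one device."""
    return payload_bytes * messages_per_second


# Hypothetical camera: uncompressed 640x480 RGB frames at 10 frames per second.
FRAME_BYTES = 640 * 480 * 3
FPS = 10

# Cloud-only design: every raw frame is uploaded for remote inference.
cloud_traffic = uplink_bytes_per_second(FRAME_BYTES, FPS)

# Edge design: inference runs on the device, so only a small result
# (assumed here to be a 64-byte detection message) is sent per frame.
RESULT_BYTES = 64
edge_traffic = uplink_bytes_per_second(RESULT_BYTES, FPS)

reduction = cloud_traffic / edge_traffic
print(f"cloud: {cloud_traffic / 1e6:.2f} MB/s, "
      f"edge: {edge_traffic} B/s, reduction: {reduction:.0f}x")
```

Real systems usually compress video before upload, so the actual gap would be smaller than this uncompressed comparison suggests; the sketch only shows the shape of the calculation.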

Comparison Table

| Aspect | Edge Intelligence | Neural Processing Units (NPUs) |
|---|---|---|
| Definition | Decentralized AI processing performed on edge devices for real-time analytics and decision-making. | Specialized hardware accelerators designed to efficiently execute neural network computations. |
| Primary Function | Data processing and AI inference directly on edge devices without cloud dependency. | Enhances speed and energy efficiency of deep learning model inference. |
| Deployment | Integrated on IoT devices, smartphones, sensors, and gateways. | Embedded in mobile devices, embedded systems, and AI accelerators. |
| Performance Focus | Latency reduction, bandwidth savings, and privacy enhancement. | High throughput with low power consumption for neural network tasks. |
| Key Technologies | Machine learning algorithms optimized for edge, on-device analytics, federated learning. | ASICs, GPUs with NPU cores, specialized AI chips like Google TPU, Apple Neural Engine. |
| Use Cases | Smart cameras, autonomous vehicles, industrial IoT, healthcare monitoring. | Mobile AI applications, voice assistants, real-time image recognition, augmented reality. |
| Challenges | Limited computational resources, energy constraints, security at device level. | Integration complexity, manufacturing cost, compatibility with AI frameworks. |

Which is better?

Edge intelligence enhances real-time data processing by running AI algorithms directly on edge devices, reducing latency and improving privacy. Neural Processing Units (NPUs) are specialized hardware designed to accelerate AI computations, offering higher efficiency and lower power consumption for deep learning tasks. The two are not direct alternatives: edge intelligence is a deployment approach, while an NPU is a hardware component that can serve it. Which one to focus on depends on the application: edge intelligence matters most in distributed, low-latency scenarios, while NPUs matter most when a centralized or mobile platform needs high-performance AI inference.

Connection

Edge intelligence leverages Neural Processing Units (NPUs) to enable real-time data processing and decision-making at the source, reducing latency and bandwidth usage. NPUs are specialized hardware designed to accelerate AI computations, making them essential for deploying sophisticated machine learning models in edge devices. This synergy enhances applications such as autonomous vehicles, smart cameras, and IoT systems by providing efficient, low-power AI inference directly on the device.

Key Terms

Parallel Computing

Neural Processing Units (NPUs) are specialized hardware designed to accelerate the highly parallel arithmetic behind neural networks, such as simultaneous matrix operations, enabling efficient on-device data processing for edge intelligence applications. Edge intelligence leverages NPUs to execute complex neural network models locally, reducing latency and bandwidth usage compared to cloud-based computation.
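To see why neural network workloads parallelize well, consider a single fully connected layer: each output value is an independent chain of multiply-accumulate (MAC) operations, so the outputs can be computed in any order or all at once. The following is a minimal sketch in plain Python, not a model of any particular NPU. The function names are illustrative, and the thread pool only demonstrates that the rows are independent; it is not expected to run faster than the sequential version.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, x):
    """One output neuron: a chain of multiply-accumulate (MAC) operations."""
    acc = 0.0
    for w, v in zip(row, x):
        acc += w * v
    return acc


def dense_sequential(weights, x):
    """Compute every output neuron one after another."""
    return [dot(row, x) for row in weights]


def dense_parallel(weights, x, workers=4):
    """Compute the same outputs by handing independent rows to workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so results line up with rows.
        return list(pool.map(lambda row: dot(row, x), weights))


def mac_count(weights):
    """Total MAC operations needed for one pass through the layer."""
    return sum(len(row) for row in weights)


weights = [[1.0, 2.0, 3.0],
           [0.5, 0.0, -1.0]]
x = [2.0, 1.0, 0.5]

print(dense_sequential(weights, x))  # [5.5, 0.5]
print(dense_parallel(weights, x) == dense_sequential(weights, x))  # True
print(mac_count(weights))  # 6
```

A dedicated accelerator exploits the same independence in hardware by performing many of these MAC operations in the same clock cycle.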

On-device Inference

Neural Processing Units (NPUs) are specialized hardware accelerators designed to enhance on-device inference by executing deep learning models with low latency and low energy consumption. Edge intelligence combines NPUs with edge computing architectures to enable real-time data processing and decision-making directly on devices, reducing reliance on cloud connectivity and improving privacy.
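The link between latency and energy can be sketched with simple arithmetic: the energy of one inference is average power multiplied by the time the inference takes. The example below is a rough estimate under stated assumptions. The power draw, latency, and battery capacity figures are hypothetical placeholders, not benchmarks of any real chip.

```python
def energy_per_inference_mj(avg_power_mw: float, latency_ms: float) -> float:
    """Energy in millijoules: power in mW times duration in seconds."""
    return avg_power_mw * (latency_ms / 1000.0)


def inferences_per_charge(battery_mwh: float, energy_mj: float) -> float:
    """How many inferences a battery could fund, ignoring all other loads."""
    # 1 mWh = 1 mW sustained for 3600 s = 3600 mJ
    return battery_mwh * 3600.0 / energy_mj


# Hypothetical figures for the same model on two processors.
cpu_mj = energy_per_inference_mj(avg_power_mw=2000.0, latency_ms=50.0)
npu_mj = energy_per_inference_mj(avg_power_mw=500.0, latency_ms=10.0)

BATTERY_MWH = 1000.0  # assumed small wearable-class battery

print(f"CPU: {cpu_mj:.1f} mJ per inference, "
      f"{inferences_per_charge(BATTERY_MWH, cpu_mj):,.0f} per charge")
print(f"NPU: {npu_mj:.1f} mJ per inference, "
      f"{inferences_per_charge(BATTERY_MWH, npu_mj):,.0f} per charge")
```

Because energy scales with both power and time, a processor that is faster and draws less power gains on both factors at once.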

Latency Optimization

Neural Processing Units (NPUs) reduce latency by executing AI computations directly on edge devices, which avoids the data transmission delays of cloud-based solutions. Edge intelligence leverages these NPUs to process data locally, enabling the real-time decision-making that applications like autonomous vehicles and IoT devices depend on.
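A simple latency budget shows how removing the network round trip can matter more than raw compute speed. The sketch below is illustrative only: the `LatencyBudget` type, the 33 ms deadline (roughly one frame at 30 frames per second), and all timing values are assumptions rather than measured figures.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    capture_ms: float             # time to acquire the sensor data
    network_round_trip_ms: float  # 0 when inference runs on the device
    inference_ms: float           # time to run the model

    def total_ms(self) -> float:
        return self.capture_ms + self.network_round_trip_ms + self.inference_ms


def meets_deadline(budget: LatencyBudget, deadline_ms: float) -> bool:
    """True if the end-to-end latency fits within the deadline."""
    return budget.total_ms() <= deadline_ms


# Hypothetical numbers: the cloud server runs the model faster,
# but the request must first cross the network and come back.
cloud = LatencyBudget(capture_ms=5.0, network_round_trip_ms=80.0, inference_ms=10.0)
edge = LatencyBudget(capture_ms=5.0, network_round_trip_ms=0.0, inference_ms=20.0)

DEADLINE_MS = 33.0  # assumed: about one frame period at 30 frames per second

for name, budget in (("cloud", cloud), ("edge", edge)):
    print(f"{name}: {budget.total_ms():.0f} ms, "
          f"meets deadline: {meets_deadline(budget, DEADLINE_MS)}")
```

In this sketch the on-device path meets the deadline even though its inference step is slower, because it pays no network cost.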

Source and External Links

What is a Neural Processing Unit (NPU)? - IBM - A neural processing unit (NPU) is a specialized microprocessor designed to accelerate AI, machine learning, and deep learning tasks by efficiently processing neural network layers, often integrated into consumer devices for real-time, low-power AI applications.

NPU (Neural Processing Units) | Samsung Semiconductor Global - NPUs mimic the parallel signal processing of the human brain, enabling simultaneous matrix operations and self-learning for deep learning applications, making them distinct from general-purpose CPUs which handle a broader range of tasks.

What is an NPU: the new AI chips explained - TechRadar - NPUs are dedicated processors optimized for accelerating neural network operations and AI workloads, often integrated into system-on-chips (SoCs) in consumer devices to efficiently handle tasks like image recognition, speech processing, and video editing.
