Algorithmic bias auditing identifies and mitigates unfair outcomes in AI models by analyzing data inputs and decision processes to check for equitable performance across diverse populations. Responsible AI governance establishes the ethical frameworks, policies, and accountability measures that guide the development and deployment of AI technologies in line with societal values and legal standards. Integrating both approaches enhances the trustworthiness and effectiveness of AI systems.

Why it is important

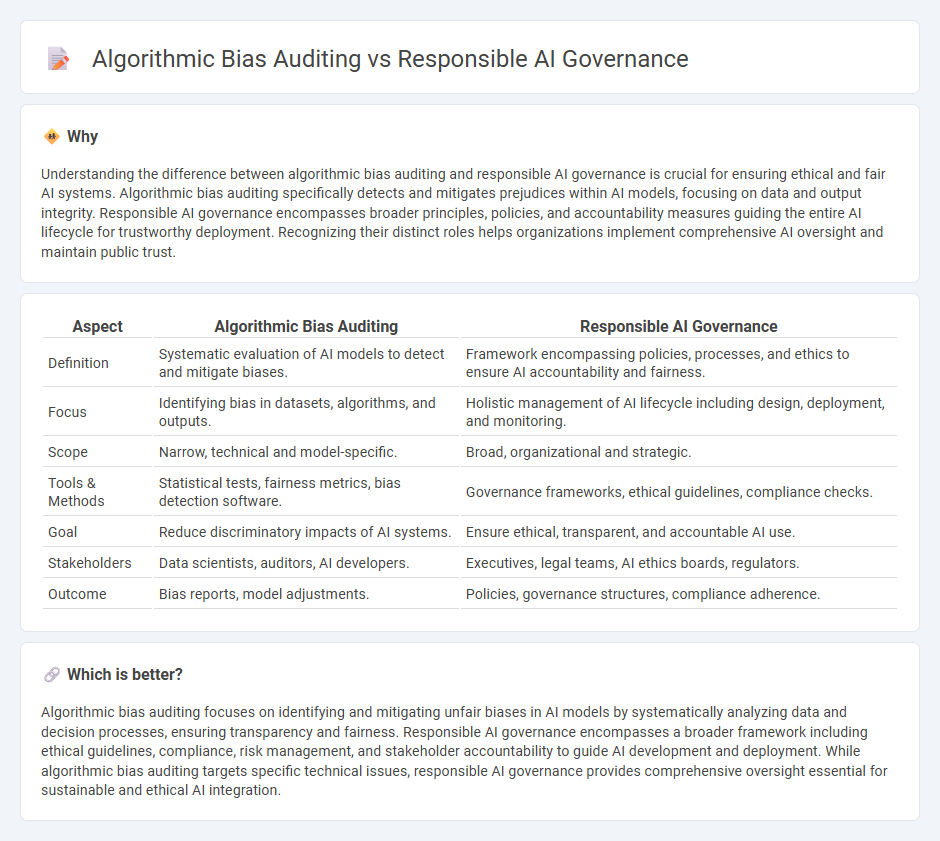

Understanding the difference between algorithmic bias auditing and responsible AI governance is crucial for building ethical and fair AI systems. Algorithmic bias auditing specifically detects and mitigates biases within AI models by examining the integrity of their data and outputs. Responsible AI governance encompasses the broader principles, policies, and accountability measures that guide the entire AI lifecycle toward trustworthy deployment. Recognizing their distinct roles helps organizations implement comprehensive AI oversight and maintain public trust.

Comparison Table

| Aspect | Algorithmic Bias Auditing | Responsible AI Governance |
|---|---|---|
| Definition | Systematic evaluation of AI models to detect and mitigate biases. | Framework encompassing policies, processes, and ethics to ensure AI accountability and fairness. |
| Focus | Identifying bias in datasets, algorithms, and outputs. | Holistic management of the AI lifecycle, including design, deployment, and monitoring. |
| Scope | Narrow, technical and model-specific. | Broad, organizational and strategic. |
| Tools & Methods | Statistical tests, fairness metrics, bias detection software. | Governance frameworks, ethical guidelines, compliance checks. |
| Goal | Reduce discriminatory impacts of AI systems. | Ensure ethical, transparent, and accountable AI use. |
| Stakeholders | Data scientists, auditors, AI developers. | Executives, legal teams, AI ethics boards, regulators. |
| Outcome | Bias reports, model adjustments. | Policies, governance structures, compliance adherence. |
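To make the "fairness metrics" entry under Tools & Methods more concrete, here is a minimal sketch of two common group-fairness measures for a binary classifier: the demographic parity difference (the gap between the highest and lowest group selection rates) and the disparate impact ratio (the lowest selection rate divided by the highest). It assumes predictions are 0/1 integers paired with one group label per example. The function names are hypothetical, and the default threshold of 0.8 borrows the four-fifths rule of thumb from US employment-selection guidance rather than anything this comparison prescribes.

```python
from collections import defaultdict


# Hypothetical audit helpers: names and the 0.8 threshold are illustrative assumptions.
def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_summary(predictions, groups, threshold=0.8):
    """Summarize demographic parity for binary predictions across groups."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    lowest = min(rates.values())
    return {
        "selection_rates": rates,
        # Gap between the most- and least-selected groups (0.0 means parity).
        "demographic_parity_difference": highest - lowest,
        # Ratio of lowest to highest selection rate (1.0 means parity).
        "disparate_impact_ratio": lowest / highest if highest > 0 else 1.0,
        # Flag the model when the ratio falls below the chosen threshold.
        "flagged": highest > 0 and lowest / highest < threshold,
    }


if __name__ == "__main__":
    preds = [1, 1, 0, 1, 0, 1, 0, 0, 0, 1]
    groups = ["A"] * 5 + ["B"] * 5
    print(fairness_summary(preds, groups))
```

With this toy data, group A is selected at a rate of 0.6 and group B at 0.4, giving a disparate impact ratio of about 0.67, so the summary flags the model. A real audit would run such checks on representative evaluation data and pair them with statistical tests before drawing conclusions.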

Which is better?

Algorithmic bias auditing focuses on identifying and mitigating unfair biases in AI models by systematically analyzing data and decision processes, supporting transparency and fairness. Responsible AI governance is a broader framework that includes ethical guidelines, compliance, risk management, and stakeholder accountability to guide AI development and deployment. Neither replaces the other: algorithmic bias auditing targets specific technical issues, while responsible AI governance provides the comprehensive oversight essential for sustainable and ethical AI integration.

Connection

Algorithmic bias auditing identifies and mitigates discriminatory patterns in AI models, ensuring fairness and transparency within technological systems. Responsible AI governance establishes ethical frameworks and compliance standards that guide the development and deployment of unbiased algorithms. Together, these practices promote accountability and trustworthiness in AI-driven technologies across industries.
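Mitigation can take several forms once an audit finds a problem. As one illustration, the sketch below implements reweighing, a pre-processing technique described by Kamiran and Calders that assigns each training example the weight P(group) × P(label) / P(group, label), so that group membership and outcome label become statistically independent in the weighted training data. The function name and toy data are hypothetical, and this is one example technique, not a method the text prescribes.

```python
from collections import Counter


# Sketch of reweighing; the function name is hypothetical.
def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Under these weights (assuming every group/label combination occurs),
    group membership and label are independent in the training data.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Expected count under independence divided by the observed joint count.
    weight_for = {
        (g, y): group_counts[g] * label_counts[y] / (n * n_gy)
        for (g, y), n_gy in joint_counts.items()
    }
    return [weight_for[(g, y)] for g, y in zip(groups, labels)]


if __name__ == "__main__":
    groups = ["A"] * 4 + ["B"] * 4
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    weights = reweighing_weights(groups, labels)
    # Weighted positive-label rate per group; both come out at roughly 0.5.
    for grp in ("A", "B"):
        pos = sum(w for w, g, y in zip(weights, groups, labels) if g == grp and y == 1)
        tot = sum(w for w, g in zip(weights, groups) if g == grp)
        print(grp, pos / tot)
```

In this toy data, group A has a positive-label rate of 0.75 and group B 0.25 before weighting. Under-represented combinations (A with label 0, B with label 1) receive weight 2.0 and over-represented ones about 0.67, which equalizes the weighted rates. The weights would then be passed to a learner that accepts per-sample weights.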

Key Terms

**Responsible AI Governance:**

Responsible AI governance establishes comprehensive frameworks to ensure ethical AI development and deployment and compliance with legal standards, emphasizing transparency, accountability, and inclusivity. It integrates continuous risk management, stakeholder engagement, and adaptive policy controls to mitigate potential harms and promote fairness across AI systems. Robust governance structures thereby lay the groundwork for ethical innovation in AI technology.

Accountability

Responsible AI governance ensures accountability by establishing transparent policies and ethical standards for AI deployment, promoting fairness and trustworthiness. Algorithmic bias auditing specifically targets the identification and mitigation of biased outcomes within AI models to uphold equitable decision-making. Integrating both approaches strengthens AI accountability frameworks and supports ethical innovation.

Transparency

Responsible AI governance prioritizes transparency by establishing clear policies, ethical guidelines, and stakeholder accountability so that AI systems operate fairly and openly. Algorithmic bias auditing enhances transparency through rigorous evaluation of AI models, identifying and mitigating biases embedded in data and decision-making processes. Transparency is therefore where governance and auditing meet in ethical AI deployment.
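One way an audit can make model behavior transparent is to report error rates per group rather than a single overall accuracy. The sketch below assumes binary ground-truth labels and binary predictions and computes each group's true positive rate, an equal-opportunity style check. The function names and the 0.1 tolerance are hypothetical choices for illustration, not an established standard.

```python
# Hypothetical equal-opportunity check; the 0.1 tolerance is an illustrative assumption.
def true_positive_rates(y_true, y_pred, groups):
    """Per-group share of actual positives that the model predicted as positive.

    Groups with no actual positives are omitted, since their rate is undefined.
    """
    counts = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            hits, positives = counts.get(group, (0, 0))
            counts[group] = (hits + pred, positives + 1)
    return {g: hits / positives for g, (hits, positives) in counts.items()}


def equal_opportunity_report(y_true, y_pred, groups, tolerance=0.1):
    """Compare true positive rates across groups and report the largest gap."""
    tpr = true_positive_rates(y_true, y_pred, groups)
    gap = max(tpr.values()) - min(tpr.values())
    return {
        "true_positive_rates": tpr,
        "tpr_gap": gap,
        "within_tolerance": gap <= tolerance,
    }


if __name__ == "__main__":
    y_true = [1, 1, 1, 0, 1, 1, 1, 0]
    y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
    groups = ["A"] * 4 + ["B"] * 4
    print(equal_opportunity_report(y_true, y_pred, groups))
```

Here the model catches two of three actual positives in group A but only one of three in group B, a gap of about 0.33 that exceeds the tolerance. A report of this kind gives governance bodies concrete evidence to act on.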


dowidth.com