Edge computing in manufacturing means processing data near its source to reduce latency and support real-time decision-making. AI at the edge goes a step further by running machine learning models directly on those local devices, enabling autonomous operations and predictive maintenance without depending on a constant cloud connection. This article compares the two and shows how they work together on the factory floor.

Why it is important

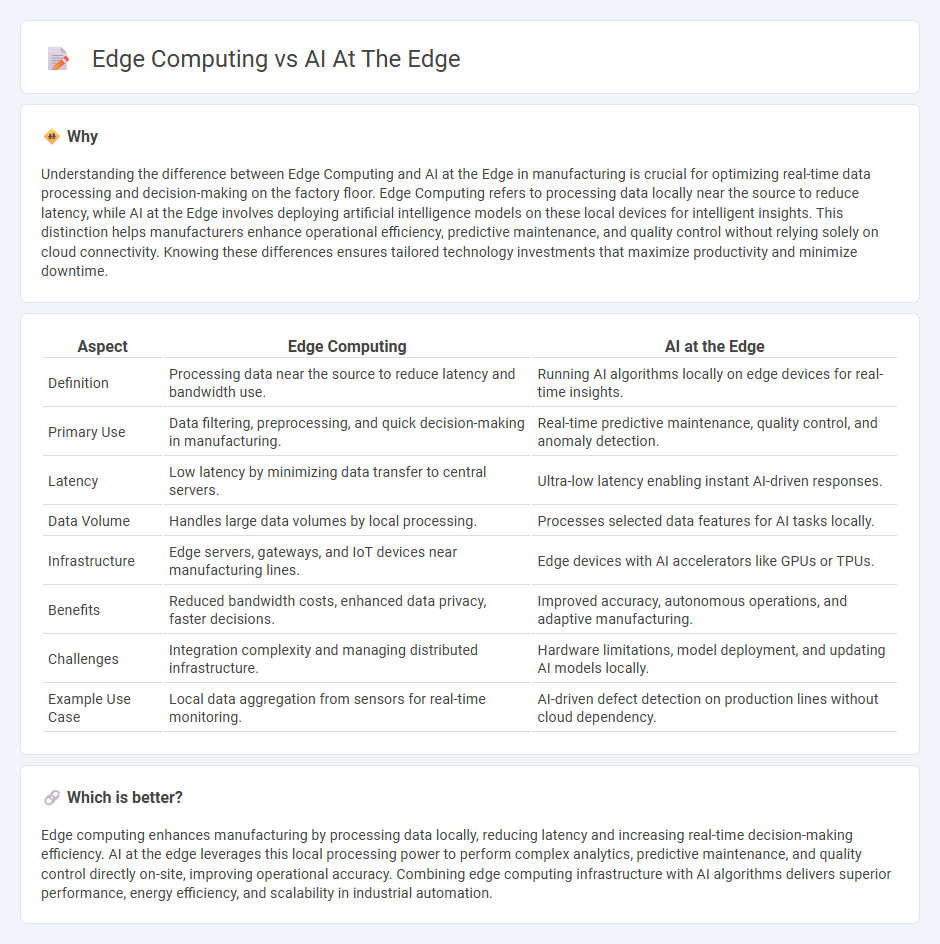

Understanding the difference between Edge Computing and AI at the Edge helps manufacturers decide how to handle real-time data processing and decision-making on the factory floor. Edge Computing refers to processing data locally, near the source, to reduce latency. AI at the Edge refers to deploying artificial intelligence models on those local devices so that they can produce insights, not just processed data. The distinction matters for operational efficiency, predictive maintenance, and quality control, none of which should rely solely on cloud connectivity. It also helps target technology investments: some workloads only need local filtering and aggregation, while others justify hardware capable of running models.

Comparison Table

| Aspect | Edge Computing | AI at the Edge |
|---|---|---|
| Definition | Processing data near the source to reduce latency and bandwidth use. | Running AI algorithms locally on edge devices for real-time insights. |
| Primary Use | Data filtering, preprocessing, and quick decision-making in manufacturing. | Real-time predictive maintenance, quality control, and anomaly detection. |
| Latency | Low latency by minimizing data transfer to central servers. | Ultra-low latency enabling instant AI-driven responses. |
| Data Volume | Handles large data volumes by local processing. | Processes selected data features for AI tasks locally. |
| Infrastructure | Edge servers, gateways, and IoT devices near manufacturing lines. | Edge devices with AI accelerators like GPUs or TPUs. |
| Benefits | Reduced bandwidth costs, enhanced data privacy, faster decisions. | Improved accuracy, autonomous operations, and adaptive manufacturing. |
| Challenges | Integration complexity and managing distributed infrastructure. | Hardware limitations, model deployment, and updating AI models locally. |
| Example Use Case | Local data aggregation from sensors for real-time monitoring. | AI-driven defect detection on production lines without cloud dependency. |
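
To make the "Edge Computing" column concrete, the following is a minimal Python sketch of local aggregation and rule-based filtering. The function names, the window size, the 2.0 limit, and the sample vibration values are all illustrative assumptions, not figures from a real production line.

```python
from statistics import mean

def aggregate_windows(readings, window=5):
    """Edge computing step: reduce raw samples to one mean per window."""
    return [mean(readings[i:i + window]) for i in range(0, len(readings), window)]

def flag_over_limit(window_means, limit):
    """Simple local rule: return indices of windows whose mean exceeds the limit."""
    return [i for i, value in enumerate(window_means) if value > limit]

# Hypothetical vibration amplitudes sampled from one machine.
raw_vibration = [0.9, 1.0, 1.1, 1.0, 1.0,
                 1.1, 0.9, 1.0, 1.0, 1.0,
                 2.9, 3.1, 3.0, 3.2, 2.8]

window_means = aggregate_windows(raw_vibration, window=5)
alerts = flag_over_limit(window_means, limit=2.0)

print(f"{len(raw_vibration)} raw samples reduced to {len(window_means)} summaries")
print("windows over limit:", alerts)
```

Only the per-window summaries and the alert indices would need to leave the device, which is where the bandwidth saving in the table comes from. An AI-at-the-edge deployment would replace the fixed limit with a trained model.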

Which is better?

Neither is better in isolation, because the two are layers of the same stack rather than competing options. Edge computing processes data locally, which reduces latency and makes real-time decisions practical. AI at the edge uses that local processing power to run analytics, predictive maintenance, and quality control directly on-site, which improves the accuracy of those decisions. The practical question for a manufacturer is therefore which workloads need a model on the device and which are served well enough by local filtering and rules.

Connection

Edge computing supplies the local compute that lets manufacturing devices process data in real time, reducing latency. AI at the edge runs on top of that infrastructure and uses the localized data for immediate analytics, predictive maintenance, and quality control, without relying on cloud connectivity for each decision. Together they shorten the path from sensor reading to action and let automation adapt as conditions on the line change.
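
One way to picture this connection is an edge node that decides locally and only reports to the cloud when a link happens to be available. The sketch below is a simplified illustration under stated assumptions: `EdgeNode`, its methods, and the threshold "model" are hypothetical names invented for this example, and a real deployment would use a trained model and a proper message queue.

```python
from collections import deque

class EdgeNode:
    """Hypothetical edge node: decides locally, reports upstream when possible."""

    def __init__(self, model, max_pending=1000):
        self.model = model                        # any callable: sample -> decision
        self.pending = deque(maxlen=max_pending)  # bounded buffer of unsent results

    def handle(self, sample):
        """Make the decision on the device; no network call is involved."""
        decision = self.model(sample)
        self.pending.append({"sample": sample, "decision": decision})
        return decision

    def flush(self, send, online):
        """Upload buffered results if a connection exists; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            send(self.pending[0])   # send first, so a failure keeps the record
            self.pending.popleft()
            sent += 1
        return sent

# Stand-in for a trained model: reject parts whose measurement exceeds 1.0.
node = EdgeNode(model=lambda x: "reject" if x > 1.0 else "pass")
decisions = [node.handle(x) for x in (0.5, 1.5)]

uploaded = []
sent_offline = node.flush(uploaded.append, online=False)
sent_online = node.flush(uploaded.append, online=True)
print(decisions, sent_offline, sent_online)
```

The decisions are available immediately in both cases; connectivity only affects when the results are reported.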

Key Terms

Real-time processing

AI at the edge supports real-time processing by deploying machine learning models directly on local devices, which removes the network round trip from each decision and reduces dependency on cloud connectivity. Edge computing provides the underlying infrastructure for processing data close to the source, improving speed and reliability for time-sensitive applications such as autonomous vehicles and industrial automation.
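
Whether a deployment counts as "real-time" depends on a concrete deadline. The sketch below works through a hypothetical case: a camera inspects parts on a conveyor and a rejector sits a short distance downstream, so the decision must arrive before the part does. Every number here (distance, belt speed, inference, network, and actuation times) is an illustrative assumption.

```python
def time_budget_ms(distance_m, belt_speed_m_per_s):
    """Time available between inspection and rejection, in milliseconds."""
    return distance_m / belt_speed_m_per_s * 1000.0

def total_latency_ms(inference_ms, network_round_trip_ms=0.0, actuation_ms=0.0):
    """End-to-end delay from image capture to actuator movement."""
    return inference_ms + network_round_trip_ms + actuation_ms

# Assumed layout: rejector 0.5 m after the camera, belt moving at 2 m/s.
budget = time_budget_ms(distance_m=0.5, belt_speed_m_per_s=2.0)

# Assumed timings: slower inference on the device, faster in the cloud,
# but the cloud path adds a network round trip.
edge_latency = total_latency_ms(inference_ms=40, actuation_ms=20)
cloud_latency = total_latency_ms(inference_ms=15, network_round_trip_ms=250,
                                 actuation_ms=20)

print(f"budget: {budget:.0f} ms")
print(f"edge:   {edge_latency:.0f} ms, meets deadline: {edge_latency <= budget}")
print(f"cloud:  {cloud_latency:.0f} ms, meets deadline: {cloud_latency <= budget}")
```

Under these assumed figures the on-device path fits the budget even though its inference step is slower, because the network round trip dominates the cloud path.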

Data localization

AI at the edge processes data directly on local devices, which supports real-time decision-making and helps protect data privacy because less raw data has to leave the site. Edge computing covers the broader infrastructure: storage, processing, and analytics placed close to data sources, which improves efficiency and can help an organization comply with data localization laws that restrict where data may be stored or processed.
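
A common pattern for keeping data local is to retain raw records on-site and export only aggregates that carry no sensitive fields. The following is a minimal sketch of that idea; the `Reading` fields, the choice of `operator_id` as the sensitive field, and the sample values are assumptions for illustration, and real compliance requirements depend on the applicable regulation.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Reading:
    machine_id: str
    operator_id: str      # treated as sensitive in this example; never exported
    temperature_c: float

def summarize_for_export(readings):
    """Build per-machine aggregates that omit operator identifiers and raw samples."""
    by_machine = {}
    for r in readings:
        by_machine.setdefault(r.machine_id, []).append(r.temperature_c)
    return {
        machine: {
            "count": len(temps),
            "mean_temperature_c": round(mean(temps), 2),
            "max_temperature_c": max(temps),
        }
        for machine, temps in by_machine.items()
    }

# Hypothetical raw records that stay on the local device.
local_readings = [
    Reading("press-1", "op-17", 71.5),
    Reading("press-1", "op-17", 73.5),
    Reading("lathe-2", "op-04", 55.0),
]

export = summarize_for_export(local_readings)
print(export)
```

Only `export` would be transmitted off-site; `local_readings` never leaves the device in this sketch.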

Machine learning inference

Machine learning inference at the edge means running trained AI models directly on edge devices. This differs from edge computing in the general sense, which primarily handles data processing and storage close to data sources without necessarily involving a model. Running inference locally reduces latency, improves data privacy, and cuts bandwidth consumption because raw data does not have to be transferred to central servers. In exchange, models must be sized to fit the limited compute and memory of edge hardware.
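
The sketch below illustrates on-device inference with a tiny logistic model, plus a simple symmetric 8-bit weight quantization of the kind often used to fit models onto constrained hardware. The weights, bias, and feature values are made-up assumptions standing in for a model trained elsewhere, and the quantization shown is a simplified illustration, not the scheme of any particular framework.

```python
import math

# Hypothetical parameters of a model trained offline and shipped to the device.
WEIGHTS = [1.8, 0.9, -0.4]
BIAS = -3.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_fault_probability(features, weights=WEIGHTS, bias=BIAS):
    """Run inference locally: weighted sum of features passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    scale = max(abs(w) for w in weights) / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

# Assumed feature vectors: (vibration, temperature rise, load), already normalized.
healthy = [0.2, 0.1, 0.5]
faulty = [2.5, 1.5, 0.2]

p_healthy = predict_fault_probability(healthy)
p_faulty = predict_fault_probability(faulty)

q_weights, scale = quantize_int8(WEIGHTS)
p_faulty_quantized = predict_fault_probability(faulty, dequantize(q_weights, scale))

print(f"healthy: {p_healthy:.2f}, faulty: {p_faulty:.2f}")
print(f"faulty with 8-bit weights: {p_faulty_quantized:.2f}")
```

Only the resulting probability or alert needs to be sent upstream, and the quantized weights take less memory at the cost of a small change in the output.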


dowidth.com
