Federated learning trains a shared model across many devices while the data stays on each device, so sensitive records are never centralized. Secure multi-party computation (SMPC) lets several parties jointly compute a function over their inputs so that no party reveals its private data to the others during the process. Both are privacy-preserving technologies for secure data collaboration, but they protect different things and carry different costs.

Why it is important

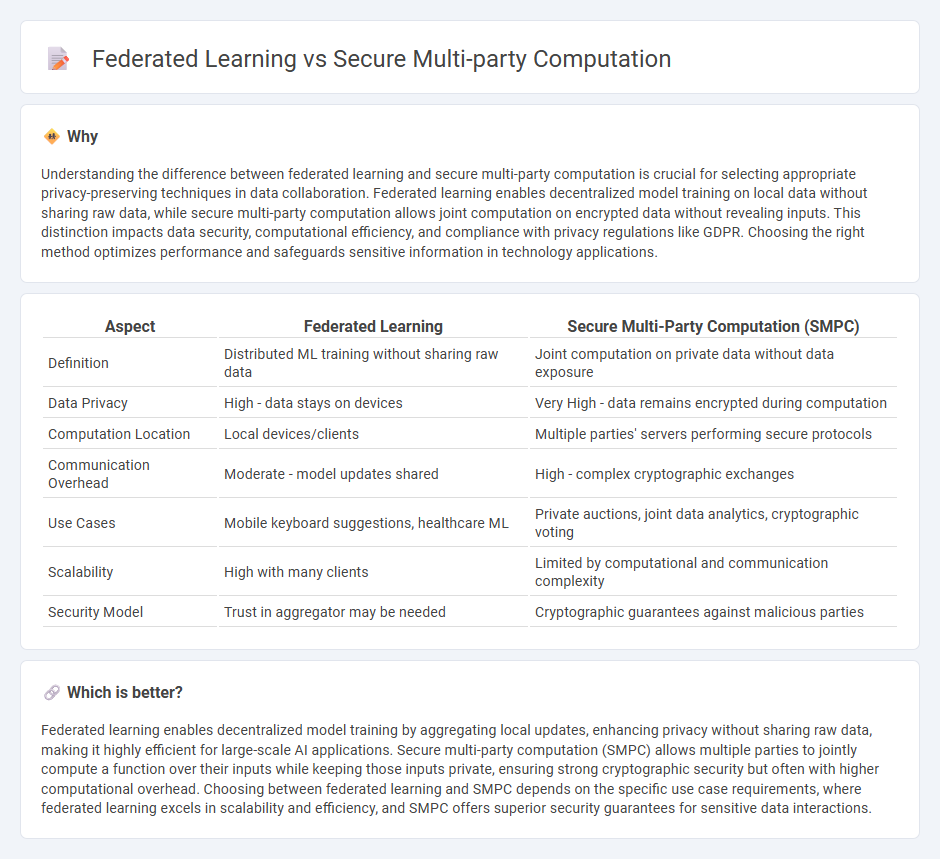

Understanding the difference between federated learning and secure multi-party computation is crucial for selecting an appropriate privacy-preserving technique for data collaboration. Federated learning trains a model on local data and shares only model updates, never the raw data, while secure multi-party computation lets parties compute jointly on secret-shared or encrypted inputs without revealing them. This distinction affects data security, computational efficiency, and compliance with privacy regulations such as GDPR. Choosing the right method balances performance against how strongly sensitive information must be protected.

Comparison Table

| Aspect | Federated Learning | Secure Multi-Party Computation (SMPC) |
|---|---|---|
| Definition | Distributed ML training without sharing raw data | Joint computation on private data without data exposure |
| Data Privacy | High - data stays on devices | Very High - data remains encrypted during computation |
| Computation Location | Local devices/clients | Multiple parties' servers performing secure protocols |
| Communication Overhead | Moderate - model updates shared | High - complex cryptographic exchanges |
| Use Cases | Mobile keyboard suggestions, healthcare ML | Private auctions, joint data analytics, cryptographic voting |
| Scalability | High with many clients | Limited by computational and communication complexity |
| Security Model | Trust in aggregator may be needed | Cryptographic guarantees against malicious parties |

Which is better?

Neither is better in general; the choice depends on the use case. Federated learning trains a model by aggregating local updates rather than raw data, which keeps communication and computation costs low enough for large-scale AI applications with many clients. Secure multi-party computation (SMPC) lets multiple parties jointly compute a function over their inputs while keeping those inputs private, which gives strong cryptographic security but usually at a higher computational and communication cost. Federated learning is the stronger option when scalability and efficiency matter most, and SMPC is the stronger option when sensitive data needs formal security guarantees.
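
To make "aggregating local updates" concrete, here is a minimal sketch of one common aggregation rule, federated averaging, written in plain Python. It assumes a toy one-dimensional linear model and one gradient step per client per round; the function names (`local_update`, `federated_average`, `train`) and the learning rate are illustrative choices, not part of any particular framework.

```python
from typing import List, Sequence, Tuple

Dataset = Sequence[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(weights: Sequence[float], data: Dataset, lr: float) -> List[float]:
    """One gradient step for y ~ w*x + b, computed only on this client's data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return [w - lr * grad_w, b - lr * grad_b]


def federated_average(updates: Sequence[Sequence[float]], sizes: Sequence[int]) -> List[float]:
    """Average client weights, weighting each client by its number of examples."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total for i in range(dim)]


def train(clients: Sequence[Dataset], rounds: int = 500, lr: float = 0.05) -> List[float]:
    """Run several rounds; the server only ever sees weights, never raw (x, y) pairs."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data, lr) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


if __name__ == "__main__":
    # Two clients whose private data both follow y = 2x + 1.
    clients = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]]
    print(train(clients))
```

In this sketch the server still sees each client's individual weights, which is why the comparison table notes that trust in the aggregator may be needed.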

Connection

Federated learning and secure multi-party computation (SMPC) both enhance privacy-preserving data analysis by enabling collaborative computations without directly sharing raw data. Federated learning distributes model training across decentralized devices, while SMPC allows multiple parties to jointly compute functions over their inputs while keeping those inputs private. Combining these technologies strengthens data security in applications such as healthcare, finance, and IoT, where sensitive information requires collaborative data insights without compromising confidentiality.
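
One way the two approaches are combined is secure aggregation, where clients mask their model updates so that the server can recover only the sum. The sketch below simulates the pairwise-masking idea in a single process. It makes several simplifying assumptions: the masks are generated centrally in `pairwise_masks` rather than agreed between clients through a key exchange, no client drops out, and updates are encoded as fixed-point integers with the illustrative constants `MODULUS` and `SCALE`.

```python
import secrets
from typing import Dict, List, Sequence, Tuple

MODULUS = 2 ** 32   # all arithmetic is done modulo this value (assumed parameter)
SCALE = 10 ** 4     # fixed-point scale for encoding floats (assumed parameter)


def encode(x: float) -> int:
    """Map a float to a fixed-point integer modulo MODULUS."""
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    """Map a residue back to a signed float."""
    if v >= MODULUS // 2:
        v -= MODULUS
    return v / SCALE


def pairwise_masks(num_clients: int, dim: int) -> Dict[Tuple[int, int], List[int]]:
    """One random mask vector per pair (i, j) with i < j, known only to that pair."""
    return {
        (i, j): [secrets.randbelow(MODULUS) for _ in range(dim)]
        for i in range(num_clients)
        for j in range(i + 1, num_clients)
    }


def mask_update(client: int, update: Sequence[float],
                masks: Dict[Tuple[int, int], List[int]], num_clients: int) -> List[int]:
    """Add the mask shared with higher-numbered peers, subtract it for lower-numbered ones."""
    masked = [encode(x) for x in update]
    for other in range(num_clients):
        if other == client:
            continue
        pair = (min(client, other), max(client, other))
        sign = 1 if client < other else -1
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, masks[pair])]
    return masked


def aggregate(masked_updates: Sequence[Sequence[int]]) -> List[float]:
    """Server-side sum: every mask appears once with + and once with -, so it cancels."""
    dim = len(masked_updates[0])
    return [decode(sum(u[i] for u in masked_updates) % MODULUS) for i in range(dim)]


if __name__ == "__main__":
    updates = [[0.5, -1.25], [1.5, 2.0], [-0.25, 0.75]]
    masks = pairwise_masks(len(updates), dim=2)
    masked = [mask_update(c, u, masks, len(updates)) for c, u in enumerate(updates)]
    print(aggregate(masked))  # sum of the three updates, without exposing any single one
```

Each masked vector looks uniformly random on its own; only the total is meaningful to the server.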

Key Terms

Data Privacy

Secure multi-party computation enables multiple parties to jointly compute a function over their inputs while keeping those inputs private, ensuring no participant can access others' raw data. Federated learning allows decentralized model training across diverse datasets without transferring raw data, enhancing privacy through data locality and aggregation of model updates.

Decentralization

Secure multi-party computation (SMPC) enables multiple parties to jointly compute a function over their inputs while keeping those inputs private, and it achieves strong decentralization by eliminating the need for a trusted central authority. Federated learning distributes model training across decentralized devices but often relies on a central server to aggregate local updates, which introduces partial centralization.

Cryptographic Protocols

Secure multi-party computation (SMPC) is built from cryptographic protocols that enable multiple parties to jointly compute a function over their inputs while keeping those inputs private, relying on techniques like secret sharing, homomorphic encryption, and oblivious transfer. Federated learning, in contrast, uses cryptography mainly to protect model updates during distributed training, typically through secure aggregation protocols, and is often paired with differential privacy, which is a statistical noise-based technique rather than a cryptographic one.
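
As an illustration of secret sharing, the simplest of the techniques named above, the following sketch computes a sum over private integers with additive shares. It is a single-process simulation under a semi-honest assumption (all parties follow the protocol); the prime `PRIME` and the helper names `share`, `reconstruct`, and `secure_sum` are chosen for this example only.

```python
import secrets
from typing import List, Sequence

PRIME = 2 ** 61 - 1  # field size; inputs and their sum must stay below this (assumed)


def share(secret: int, num_parties: int) -> List[int]:
    """Split a value into random shares that add up to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(num_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: Sequence[int]) -> int:
    """Recombine shares; any strict subset of them reveals nothing about the secret."""
    return sum(shares) % PRIME


def secure_sum(private_inputs: Sequence[int]) -> int:
    """Each party shares its input, sums the shares it receives, and publishes that partial sum."""
    n = len(private_inputs)
    # distributed[i][j] is the share of party i's input that is sent to party j.
    distributed = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it holds; this partial sum is safe to reveal.
    partial_sums = [sum(distributed[i][j] for i in range(n)) % PRIME for j in range(n)]
    return reconstruct(partial_sums)


if __name__ == "__main__":
    print(secure_sum([12, 30, 58]))  # 100
```

Addition works on shares directly, which is why sums are cheap in SMPC; multiplication and comparison need extra interaction between the parties, and that is where much of the communication overhead comes from.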

Source and External Links

What is Secure Multiparty Computation? - Secure multiparty computation (SMPC) enables different organizations to jointly compute with their private data without revealing the underlying data or relying on a trusted third party, preserving privacy and providing accurate, quantum-safe results but at the cost of higher computational and communication overhead.

Secure multi-party computation - Secure multi-party computation is a cryptographic method that allows multiple parties to jointly compute a function over their private inputs, maintaining the confidentiality of each party's data throughout the process without revealing it to the others.

Secure Multi-Party Computation - SMPC ensures enhanced security, regulatory compliance, and privacy by enabling collaborative computation over encrypted data from multiple sources, allowing precise results for sensitive applications while protecting against both classical and quantum threats.

dowidth.com
