Graph Neural Networks (GNNs) process data with relational structure by passing information along the edges that connect nodes, while Recurrent Neural Networks (RNNs) process sequential data such as time series and text by carrying a hidden state from one step to the next. GNNs use message passing and neighborhood aggregation to model interactions in social networks, molecules, and recommendation systems, whereas RNNs update their hidden state at every step to capture patterns over sequences such as text or speech. The sections below compare the architectures and applications of GNNs and RNNs and show where the two complement each other.

Why it is important

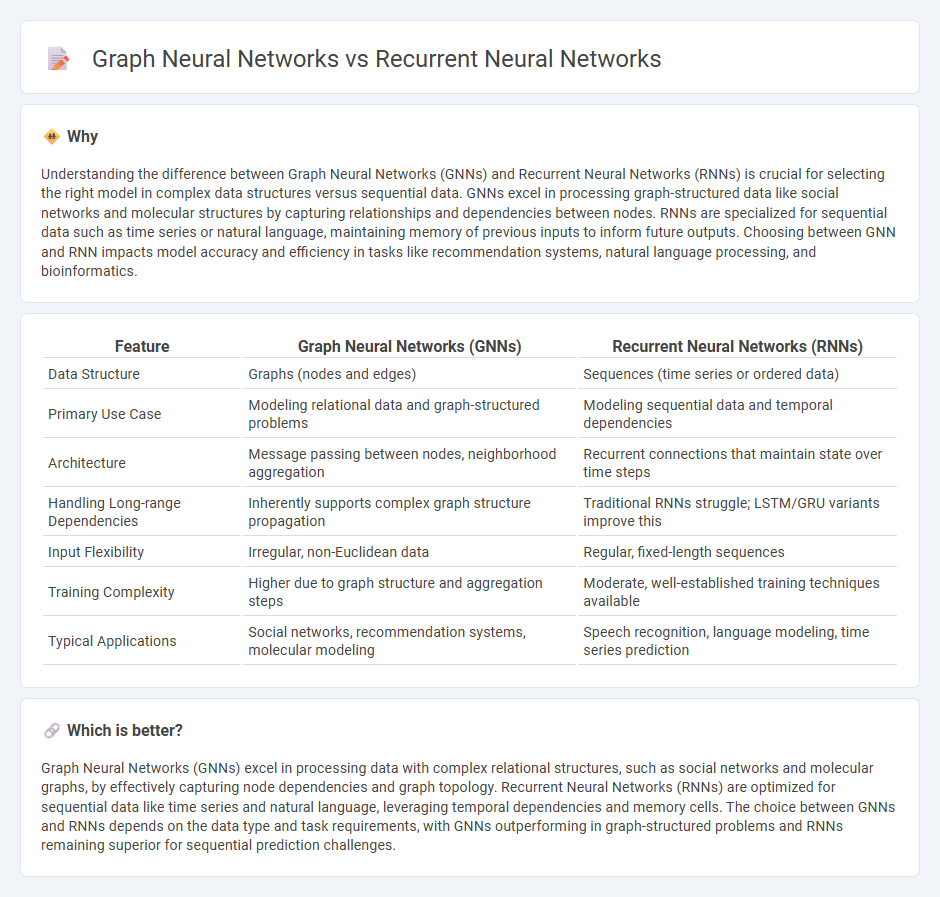

Understanding the difference between Graph Neural Networks (GNNs) and Recurrent Neural Networks (RNNs) is crucial for matching the model to the data: graph-structured data on one side, sequential data on the other. GNNs process graph-structured data such as social networks and molecular structures by capturing relationships and dependencies between nodes. RNNs are specialized for sequential data such as time series or natural language, keeping a memory of previous inputs to inform later outputs. The choice between a GNN and an RNN affects both accuracy and efficiency in tasks such as recommendation, natural language processing, and bioinformatics.

Comparison Table

| Feature | Graph Neural Networks (GNNs) | Recurrent Neural Networks (RNNs) |
|---|---|---|
| Data Structure | Graphs (nodes and edges) | Sequences (time series or other ordered data) |
| Primary Use Case | Modeling relational data and graph-structured problems | Modeling sequential data and temporal dependencies |
| Architecture | Message passing between nodes, neighborhood aggregation | Recurrent connections that maintain state over time steps |
| Handling Long-range Dependencies | Each layer propagates information one hop, so distant nodes need deep stacks, which can suffer from over-smoothing | Traditional RNNs struggle (vanishing gradients); LSTM/GRU variants improve this |
| Input Flexibility | Irregular, non-Euclidean data with variable numbers of nodes and edges | Ordered sequences of variable length |
| Training Complexity | Often higher: irregular neighborhoods complicate batching, and large graphs usually require sampling | Moderate: well-established training techniques, though computation is sequential across time steps |
| Typical Applications | Social networks, recommendation systems, molecular modeling | Speech recognition, language modeling, time series prediction |
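
The long-range dependency row can be made concrete for the GNN side. The sketch below is a toy illustration, not a real GNN layer: it assumes an unweighted path graph and plain sum aggregation with no learned weights, and the names `propagate`, `path`, and `reach` are hypothetical. It shows that after k rounds of message passing, a signal placed on one node has reached only nodes within k hops.

```python
def propagate(adjacency, signal, rounds):
    """Run `rounds` of sum aggregation: each node adds its neighbors' values to its own."""
    for _ in range(rounds):
        signal = [
            signal[v] + sum(signal[u] for u in adjacency[v])
            for v in range(len(adjacency))
        ]
    return signal


# Hypothetical toy graph: the path 0 - 1 - 2 - 3 - 4, stored as an adjacency list.
path = [[1], [0, 2], [1, 3], [2, 4], [3]]
one_hot = [1, 0, 0, 0, 0]  # only node 0 carries a signal at the start

# For each number of rounds k, list the nodes the signal has reached.
reach = {
    k: [v for v, s in enumerate(propagate(path, one_hot, k)) if s != 0]
    for k in range(5)
}

for k, nodes in reach.items():
    print(k, nodes)
```

In this toy setting, node 4 sees the signal from node 0 only after four rounds, which is why information from distant nodes requires correspondingly many message-passing layers.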

Which is better?

Neither architecture is better in general. Graph Neural Networks (GNNs) are the stronger fit for data with relational structure, such as social networks and molecular graphs, because they capture node dependencies and graph topology directly. Recurrent Neural Networks (RNNs) are designed for sequential data such as time series and natural language, carrying information across time steps in a hidden state (LSTM variants add explicit memory cells). The choice depends on the data type and task requirements: GNNs for graph-structured problems, RNNs for sequential prediction.

Connection

Graph Neural Networks (GNNs) and Recurrent Neural Networks (RNNs) are connected by a shared idea: both repeatedly update a learned state by combining it with incoming information. A GNN propagates information across the nodes of a graph, while an RNN propagates it across the time steps of a sequence by maintaining a hidden state. Combining the two, for example by applying a graph layer to each snapshot of a changing graph and a recurrent unit across snapshots, enables learning on dynamic graphs and time-evolving networks, with applications in social network analysis, recommendation systems, and natural language processing.
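
One common way to combine the two ideas on a dynamic graph can be sketched as follows. This is a minimal illustration under stated assumptions, not a specific published architecture: node inputs and hidden states are scalars, aggregation is a plain mean over a node and its current neighbors, and the fixed weights `w_msg` and `w_rec` as well as the function name `recurrent_graph_step` are hypothetical stand-ins for learned parameters.

```python
import math


def recurrent_graph_step(adjacency, inputs, hidden, w_msg=0.5, w_rec=0.5):
    """Graph step then recurrent step for every node.

    Graph part: average the current inputs of the node and its neighbors.
    Recurrent part: mix that message with the node's previous hidden state.
    """
    new_hidden = []
    for node, neighbors in enumerate(adjacency):
        group = [node] + neighbors
        message = sum(inputs[u] for u in group) / len(group)
        new_hidden.append(math.tanh(w_msg * message + w_rec * hidden[node]))
    return new_hidden


# Two snapshots of a hypothetical 3-node graph: (adjacency list, node inputs).
# At t=0 only the edge 0-1 exists; at t=1 the edge 1-2 appears as well.
snapshots = [
    ([[1], [0], []], [1.0, 0.0, 0.0]),
    ([[1], [0, 2], [1]], [0.0, 0.0, 1.0]),
]

hidden = [0.0, 0.0, 0.0]
history = []
for adjacency, inputs in snapshots:
    hidden = recurrent_graph_step(adjacency, inputs, hidden)
    history.append(hidden)

print(history)
```

At the second snapshot node 0 receives a zero message, yet its hidden state stays positive because the recurrent term remembers the first snapshot. That carried-over state is what the recurrent unit adds on top of per-snapshot aggregation.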

Key Terms

Sequence Modeling

Recurrent Neural Networks (RNNs) specialize in sequence modeling: they process data one step at a time and capture temporal dependencies in time-series and language tasks, with applications such as speech recognition and machine translation. Graph Neural Networks (GNNs) are not sequence models as such. A sequence can be viewed as a simple chain-shaped graph, but GNNs target data whose relations go beyond a single ordering, such as social networks, molecules, and recommendation systems.
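
The step-by-step processing described above can be sketched with a scalar Elman-style recurrence. This is a minimal illustration: the fixed weights `w_in`, `w_rec`, and `bias` are hypothetical stand-ins for parameters that would normally be learned, and real RNNs use vectors and weight matrices instead of scalars.

```python
import math


def rnn_forward(sequence, w_in=0.5, w_rec=0.8, bias=0.0):
    """Scalar recurrence: h_t = tanh(w_in * x_t + w_rec * h_{t-1} + bias)."""
    hidden = 0.0  # initial hidden state h_0
    states = []
    for x in sequence:
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states


forward = rnn_forward([1.0, 0.0, -1.0])
backward = rnn_forward([-1.0, 0.0, 1.0])  # same values, reversed order
short = rnn_forward([1.0])                # a shorter sequence needs no padding

print(forward[-1], backward[-1], short)
```

The two three-step inputs contain the same values, but their final hidden states differ because the recurrence is order-sensitive. The same function also accepts a sequence of any length.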

Node Representation

Recurrent Neural Networks (RNNs) capture sequential dependencies by processing data step by step, which is effective for time-series and language tasks but less suited to graphs, whose nodes have no canonical ordering. Graph Neural Networks (GNNs) build each node's representation by aggregating features from the node's neighbors, which preserves graph topology and supports tasks such as node classification and link prediction. For relational data, this makes GNNs the more natural choice.
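
Neighbor aggregation can be sketched in a few lines. The example below is a simplified illustration that assumes mean aggregation over a node and its neighbors and omits the learned weight matrix and nonlinearity that a real GNN layer would apply afterwards. The graph, the feature values, and the name `mean_aggregate` are hypothetical.

```python
def mean_aggregate(adjacency, features):
    """One round of mean aggregation over each node and its neighbors."""
    updated = []
    for node, neighbors in enumerate(adjacency):
        group = [node] + neighbors
        dim = len(features[node])
        updated.append(
            [sum(features[u][d] for u in group) / len(group) for d in range(dim)]
        )
    return updated


# Hypothetical graph: triangle 0-1-2 with an extra node 3 attached to node 2.
adjacency = [[1, 2], [0, 2], [0, 1, 3], [2]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [4.0, 0.0]]

embeddings = mean_aggregate(adjacency, features)

# Listing each node's neighbors in a different order gives the same result.
shuffled = mean_aggregate([[2, 1], [2, 0], [3, 1, 0], [2]], features)

print(embeddings)
```

Because the mean does not depend on the order in which neighbors are listed, the representation respects the graph's structure without imposing an ordering on nodes, which is the property a step-by-step sequence model lacks.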

Temporal Dependence

Recurrent Neural Networks (RNNs) model temporal dependence by processing sequential data through hidden states that carry information forward in time, which makes them well suited to time-series and natural language tasks. Graph Neural Networks (GNNs) are primarily designed to encode relational and structural information in graph data, but they can incorporate temporal dynamics when extended with temporal graph layers or recurrent units, which enables spatiotemporal learning. In short, an RNN captures temporal dependence by design, while a GNN captures it only through such extensions.
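
The two ways of capturing temporal dependence can be written side by side. The notation here is an assumption introduced for illustration: $x_t$ is the input at time $t$, $h_t$ the hidden state, $W_x$, $W_h$, $W_m$ and $b$ are learned parameters, and $\mathcal{N}_t(v)$ is the set containing node $v$ and its neighbors at time $t$. The second line is one simple recurrent extension of a GNN with mean aggregation, not the only possible one.

```latex
\begin{align}
  \text{RNN:} \quad
  h_t &= \tanh\!\left(W_x x_t + W_h h_{t-1} + b\right) \\
  \text{Recurrent GNN:} \quad
  h_v^{(t)} &= \tanh\!\left(
      W_m \cdot \frac{1}{|\mathcal{N}_t(v)|} \sum_{u \in \mathcal{N}_t(v)} x_u^{(t)}
      + W_h h_v^{(t-1)} + b
  \right)
\end{align}
```

In both rules the term $W_h h_{t-1}$ (or $W_h h_v^{(t-1)}$) carries the past forward. The recurrent GNN differs only in that its input at each step is an aggregate over the node's current neighborhood.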

