Generative fill uses generative AI models to synthesize new image content for a selected region, conditioned on the surrounding pixels so that the result blends with the rest of the image. Inpainting reconstructs missing or damaged parts of an image from the surrounding context to keep the result visually coherent. This article compares the two techniques.

Why it is important

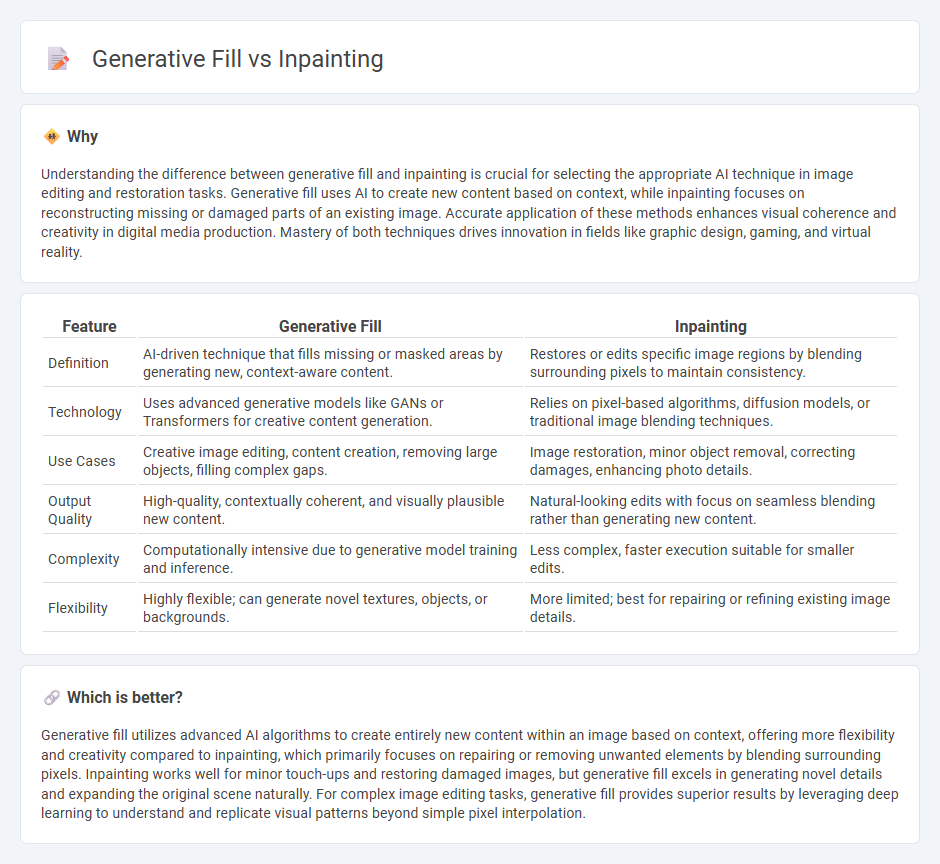

Knowing the difference between generative fill and inpainting helps you pick the right technique for an image editing or restoration task. Generative fill creates new content based on context, while inpainting reconstructs missing or damaged parts of an existing image. Choosing the method that matches the task improves visual coherence in the final image. Both techniques are used in fields such as graphic design, gaming, and virtual reality.

Comparison Table

| Feature | Generative Fill | Inpainting |
|---|---|---|
| Definition | AI-driven technique that fills missing or masked areas by generating new, context-aware content. | Restores or edits specific image regions by blending surrounding pixels to maintain consistency. |
| Technology | Uses advanced generative models like GANs or Transformers for creative content generation. | Relies on pixel-based algorithms, diffusion models, or traditional image blending techniques. |
| Use Cases | Creative image editing, content creation, removing large objects, filling complex gaps. | Image restoration, minor object removal, correcting damages, enhancing photo details. |
| Output Quality | High-quality, contextually coherent, and visually plausible new content. | Natural-looking edits with focus on seamless blending rather than generating new content. |
| Complexity | Computationally intensive due to generative model training and inference. | Less complex, faster execution suitable for smaller edits. |
| Flexibility | Highly flexible; can generate novel textures, objects, or backgrounds. | More limited; best for repairing or refining existing image details. |

Which is better?

Neither technique is better in every case; the right choice depends on the edit. Generative fill creates new content within an image based on context, which gives it more flexibility than inpainting, whose main job is repairing damage or removing unwanted elements by blending surrounding pixels. Inpainting works well for minor touch-ups and for restoring damaged images, and it usually runs faster. Generative fill is the stronger option when the edit needs novel detail or an extension of the original scene, because its models learn visual patterns that go beyond simple pixel interpolation.

Connection

Generative fill and inpainting share the same basic task: reconstructing or replacing a missing or unwanted region of an image. Both analyze the surrounding pixels and textures to produce content that blends with the original image, and modern implementations of both rely on deep learning. Both enable automated, context-aware edits that would otherwise require manual retouching.

Key Terms

Masking

A mask marks which pixels of an image are to be replaced. Inpainting uses the mask to restore or reconstruct only the missing or damaged areas, analyzing surrounding pixels and textures so that the original context is preserved. Generative fill interprets the same mask together with image patterns learned by a neural network, producing new content that integrates with the existing image while allowing greater creative flexibility.
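As a minimal sketch of how a mask controls an edit, the snippet below composites newly produced pixels into an image only where the mask is set. The function name `composite` and the tiny test arrays are hypothetical, chosen for illustration; real tools may feather, dilate, or otherwise preprocess the mask before blending.

```python
import numpy as np

def composite(original, generated, mask):
    """Take `generated` where mask is 1, `original` where mask is 0.

    Mask values between 0 and 1 produce a proportional blend.
    """
    mask = mask.astype(np.float32)
    if mask.ndim == original.ndim - 1:
        mask = mask[..., None]  # broadcast a 2-D mask over colour channels
    return mask * generated + (1.0 - mask) * original

# A 4x4 grey image with a 2x2 region marked for replacement.
original = np.full((4, 4), 0.5, dtype=np.float32)
generated = np.ones((4, 4), dtype=np.float32)  # stand-in for model output
mask = np.zeros((4, 4), dtype=np.uint8)
mask[1:3, 1:3] = 1

result = composite(original, generated, mask)
```

Pixels outside the mask come back unchanged, which is why both inpainting and generative fill can leave the rest of the image untouched.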

Context-aware synthesis

Inpainting relies on context-aware synthesis to restore missing or damaged areas of an image: it analyzes the surrounding pixels and textures so that the filled region fits the rest of the picture. Generative fill extends this process with AI models such as GANs or diffusion models, generating realistic content that matches the context while introducing new elements that suit the scene.
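The simplest form of filling from surrounding pixels can be sketched with a classical method that repeatedly replaces each masked pixel with the average of its four neighbours. This is a stand-in to illustrate the idea, not what GAN or diffusion models do internally; "diffusion" here would mean heat-style smoothing, which is unrelated to diffusion models. The function name `diffuse_inpaint` and the iteration count are assumptions made for this example.

```python
import numpy as np

def diffuse_inpaint(image, mask, iterations=500):
    """Fill masked pixels by repeatedly averaging their 4 neighbours.

    Known pixels are never modified, so information flows from the
    border of the hole inward.
    """
    hole = mask.astype(bool)
    out = image.astype(np.float64).copy()
    out[hole] = out[~hole].mean()  # neutral starting guess for the hole
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="edge")
        neighbour_mean = (
            padded[:-2, 1:-1] + padded[2:, 1:-1]
            + padded[1:-1, :-2] + padded[1:-1, 2:]
        ) / 4.0
        out[hole] = neighbour_mean[hole]
    return out

# Horizontal gradient with a 4x4 hole cut out of the middle.
image = np.tile(np.linspace(0.0, 1.0, 8), (8, 1))
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True
damaged = np.where(mask, 0.0, image)

restored = diffuse_inpaint(damaged, mask)
```

A smooth gradient is recovered well by this kind of averaging, but texture, edges, and objects are not, which is the gap that learned models are meant to close.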

Deep learning

Deep-learning inpainting uses models such as convolutional neural networks (CNNs) to restore missing or corrupted image regions by predicting plausible content from the surrounding pixels. Generative fill uses generative adversarial networks (GANs) or diffusion models to synthesize entirely new, contextually relevant image parts, often producing more creative and higher-fidelity results.
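One common way to train such a model is to hide a region of a complete image and ask the network to predict it, so the original image serves as its own ground truth. The sketch below builds one training sample under that assumption; the helper names, the square hole, and the extra mask channel are illustrative choices, and specific architectures differ in the details.

```python
import numpy as np

def make_training_sample(image, rng, hole_size=8):
    """Return (model_input, target, mask) for one self-supervised sample."""
    h, w, _ = image.shape
    top = rng.integers(0, h - hole_size + 1)
    left = rng.integers(0, w - hole_size + 1)
    mask = np.zeros((h, w, 1), dtype=np.float32)
    mask[top:top + hole_size, left:left + hole_size] = 1.0
    masked = image * (1.0 - mask)  # zero out the hidden region
    # The network sees the damaged image plus a channel marking the hole.
    model_input = np.concatenate([masked, mask], axis=-1)
    return model_input, image, mask

def hole_l1_loss(prediction, target, mask):
    """Mean absolute error measured only inside the hole."""
    channels = target.shape[-1]
    return float(np.sum(np.abs(prediction - target) * mask)
                 / (np.sum(mask) * channels))

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3), dtype=np.float32)  # stand-in for a photo
model_input, target, mask = make_training_sample(image, rng)
```

A perfect prediction gives a loss of zero, while returning the masked image unchanged does not, so the loss rewards the model only for what it fills in.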

dowidth.com