Multimodal AI integrates data from sources such as text, images, and audio to improve machine understanding and decision-making, which makes it well suited to applications like autonomous vehicles and advanced healthcare diagnostics. Explainable AI, on the other hand, focuses on transparency and interpretability: it enables users to understand how AI models arrive at specific decisions, which is essential for trust and regulatory compliance. This article compares the distinct advantages and challenges of multimodal AI and explainable AI and their impact on the future of intelligent systems.

Why it is important

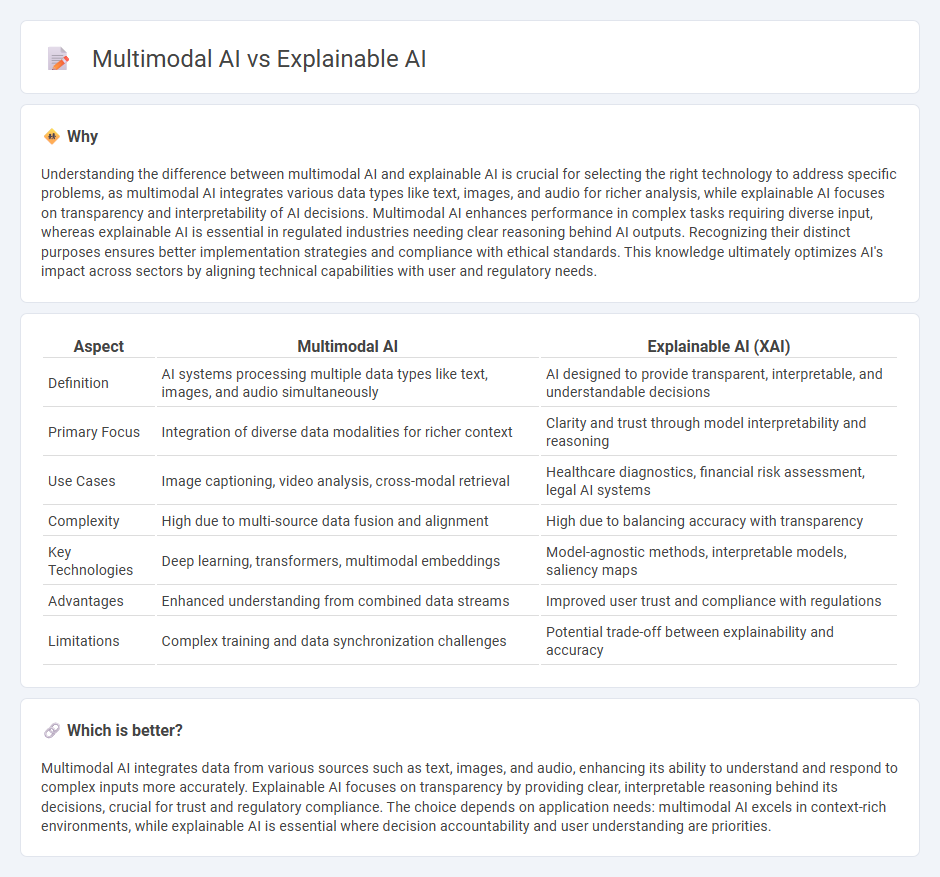

Multimodal AI and explainable AI solve different problems, so distinguishing them is the first step in selecting the right technology for a specific problem. Multimodal AI integrates data types such as text, images, and audio for richer analysis, which improves performance on complex tasks that depend on diverse inputs. Explainable AI focuses on the transparency and interpretability of AI decisions, which is essential in regulated industries that need clear reasoning behind AI outputs. Recognizing these distinct purposes supports better implementation strategies and compliance with ethical standards, and helps align technical capabilities with user and regulatory needs.

Comparison Table

| Aspect | Multimodal AI | Explainable AI (XAI) |
|---|---|---|
| Definition | AI systems processing multiple data types like text, images, and audio simultaneously | AI designed to provide transparent, interpretable, and understandable decisions |
| Primary Focus | Integration of diverse data modalities for richer context | Clarity and trust through model interpretability and reasoning |
| Use Cases | Image captioning, video analysis, cross-modal retrieval | Healthcare diagnostics, financial risk assessment, legal AI systems |
| Complexity | High due to multi-source data fusion and alignment | High due to balancing accuracy with transparency |
| Key Technologies | Deep learning, transformers, multimodal embeddings | Model-agnostic methods, interpretable models, saliency maps |
| Advantages | Enhanced understanding from combined data streams | Improved user trust and compliance with regulations |
| Limitations | Complex training and data synchronization challenges | Potential trade-off between explainability and accuracy |

Which is better?

Neither is better in general, because the two pursue different goals. Multimodal AI integrates data from sources such as text, images, and audio, which helps it understand and respond to complex inputs more accurately. Explainable AI focuses on transparency by providing clear, interpretable reasoning behind its decisions, which is crucial for trust and regulatory compliance. The choice depends on application needs: multimodal AI excels in context-rich environments, while explainable AI is essential where decision accountability and user understanding are priorities.

Connection

Multimodal AI integrates diverse data types such as text, image, and audio, enabling comprehensive understanding and decision-making processes. Explainable AI enhances this by providing transparent reasoning behind multimodal model outputs, facilitating trust and interpretability. Together, they address complexity in AI systems by combining rich data fusion with clear, human-understandable explanations.
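
One simple way to explain a multimodal model's output is to ask how much each modality contributed to it. The sketch below is a minimal illustration under stated assumptions, not a production method: the weights, bias, and feature values are hypothetical, and the "model" is a toy logistic classifier over hand-written features. It scores each modality by how far the prediction falls when that modality's features are zeroed out (an ablation-style attribution).

```python
import math

# Hypothetical weights of a toy late-fusion classifier (illustrative values only).
WEIGHTS = {"text": [0.8, -0.2], "image": [1.5], "audio": [0.1, 0.3]}
BIAS = -0.5


def predict(inputs):
    """Return a probability from a logistic model over all modality features."""
    z = BIAS
    for modality, weights in WEIGHTS.items():
        z += sum(w * x for w, x in zip(weights, inputs[modality]))
    return 1.0 / (1.0 + math.exp(-z))


def modality_attribution(inputs):
    """Score each modality by the drop in prediction when it is zeroed out."""
    baseline = predict(inputs)
    scores = {}
    for modality in inputs:
        ablated = dict(inputs)  # shallow copy; only one modality is replaced
        ablated[modality] = [0.0] * len(inputs[modality])
        scores[modality] = baseline - predict(ablated)
    return scores


# Hypothetical per-modality features for a single example.
example = {"text": [1.0, 0.5], "image": [1.0], "audio": [0.2, 0.1]}
scores = modality_attribution(example)
most_influential = max(scores, key=lambda m: abs(scores[m]))
print(most_influential, scores)
```

Zeroing a modality is only one possible baseline; replacing it with an average or neutral input is a common alternative, and the choice can change the resulting scores.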

Key Terms

Interpretability

Interpretability is the degree to which a person can follow how a model reached a decision. Explainable AI (XAI) prioritizes it by providing clear, human-understandable reasoning behind model decisions. Multimodal AI integrates and processes diverse data types such as text, images, and audio, which often increases complexity and makes straightforward interpretation harder. Advances in interpretability therefore affect how effectively both paradigms can be used.
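
To make "human-understandable reasoning" concrete, consider an inherently interpretable model. The sketch below assumes a hypothetical linear risk score; the feature names, coefficients, and intercept are invented for illustration and do not come from any real clinical model. Because the score is a plain sum, each feature's contribution can be read off directly, and that list of contributions is the explanation.

```python
# Hypothetical coefficients for a linear risk score (illustrative values only).
COEFFICIENTS = {"age": 0.03, "blood_pressure": 0.02, "smoker": 0.9}
INTERCEPT = -4.0


def explain(features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {
        name: COEFFICIENTS[name] * value for name, value in features.items()
    }
    score = INTERCEPT + sum(contributions.values())
    return score, contributions


score, contributions = explain({"age": 50, "blood_pressure": 130, "smoker": 1})

# List features from most to least influential for this one prediction.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

Deep multimodal models do not decompose this cleanly, which is why they usually rely on post-hoc techniques such as saliency maps or model-agnostic methods instead.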

Cross-modal Integration

Cross-modal integration is how multimodal AI combines diverse data types such as text, images, and audio, improving context comprehension and decision accuracy. Explainable AI complements it by providing human-understandable justifications for machine decisions, which is crucial in sectors that demand accountability. Pairing combined data streams with such justifications can make AI systems both more robust and more interpretable.
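
As a rough illustration of cross-modal integration, the sketch below shows one of the simplest fusion strategies: normalize each modality's embedding and concatenate the results into a single vector. The embeddings here are hypothetical hand-written numbers; in a real system they would come from per-modality encoders, and more sophisticated fusion (for example, attention across modalities) is common.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse(embeddings):
    """Concatenate normalized embeddings in a fixed (alphabetical) modality order."""
    fused = []
    for modality in sorted(embeddings):
        fused.extend(l2_normalize(embeddings[modality]))
    return fused


# Hypothetical embeddings standing in for the outputs of real encoders.
sample = {
    "text": [3.0, 4.0],
    "image": [0.0, 2.0, 0.0],
    "audio": [1.0, 0.0],
}
fused = fuse(sample)
print(len(fused), fused)
```

Fixing the modality order keeps each position in the fused vector tied to the same modality across examples, which also makes later per-modality explanations easier to compute.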

Transparency

Explainable AI (XAI) emphasizes transparency by making AI decision processes understandable and interpretable to humans, enhancing trust and accountability. In contrast, multimodal AI integrates diverse data types such as text, images, and audio, which often increases system complexity and makes transparency harder to achieve. Balancing model transparency against multimodal data integration remains a central trade-off.

