Neuromorphic chips are modeled on the brain's neural architecture and aim to improve energy efficiency and latency, especially in tasks such as pattern recognition and sensory processing. GPUs excel at massively parallel processing, which makes them well suited to graphics rendering and large-scale machine learning workloads. This article compares the two architectures and the applications each one suits.

Why it is important

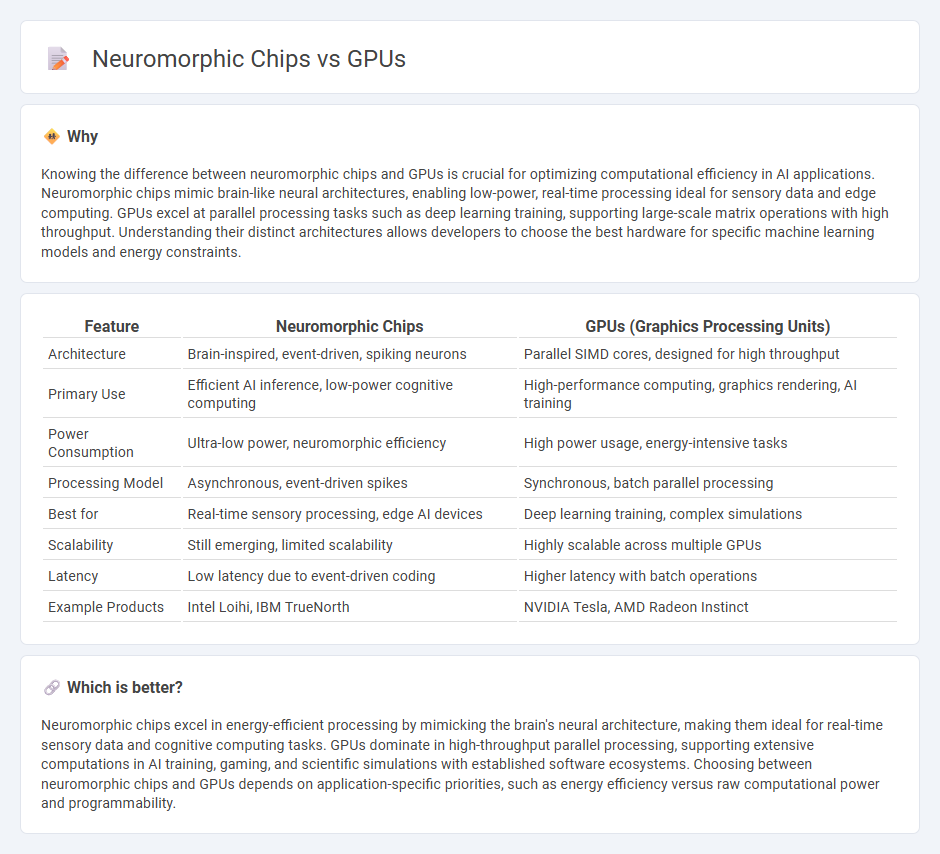

Knowing the difference between neuromorphic chips and GPUs is crucial for optimizing computational efficiency in AI applications. Neuromorphic chips use brain-inspired neural architectures that enable low-power, real-time processing suited to sensory data and edge computing. GPUs excel at parallel workloads such as deep learning training, performing large-scale matrix operations with high throughput. Understanding these architectural differences helps developers match hardware to a given machine learning model and energy budget.

Comparison Table

| Feature | Neuromorphic Chips | GPUs (Graphics Processing Units) |
|---|---|---|
| Architecture | Brain-inspired, event-driven, spiking neurons | Massively parallel SIMD/SIMT cores, designed for high throughput |
| Primary Use | Efficient AI inference, low-power cognitive computing | High-performance computing, graphics rendering, AI training |
| Power Consumption | Ultra-low power; energy is spent mainly when spikes occur | High power draw for energy-intensive workloads |
| Processing Model | Asynchronous, event-driven spikes | Synchronous, batched parallel processing |
| Best For | Real-time sensory processing, edge AI devices | Deep learning training, complex simulations |
| Scalability | Still emerging; limited scalability | Highly scalable across multiple GPUs |
| Latency | Low latency due to event-driven processing | Higher per-input latency when work is batched |
| Example Products | Intel Loihi, IBM TrueNorth | NVIDIA Tesla, AMD Radeon Instinct |
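The latency row in the table can be made concrete with a little arithmetic. The sketch below is a simplified model, not a measurement: it assumes inputs arrive at a fixed interval, that the accelerator waits until a full batch is collected before computing, and it ignores compute time. The function name `batch_fill_delay` and the 10 ms interval are hypothetical.

```python
def batch_fill_delay(batch_size: int, arrival_interval_s: float) -> float:
    """Seconds the first input waits before its batch is full (hypothetical model).

    Assumes one input arrives every `arrival_interval_s` seconds and that
    computation starts only after `batch_size` inputs have been collected.
    Compute time itself is ignored.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # The first input waits for the remaining (batch_size - 1) arrivals.
    return (batch_size - 1) * arrival_interval_s


if __name__ == "__main__":
    interval_s = 0.010  # hypothetical sensor: one sample every 10 ms
    for batch in (1, 8, 32):
        wait_ms = batch_fill_delay(batch, interval_s) * 1000
        print(f"batch={batch:>2}: first sample waits {wait_ms:.0f} ms")
```

Under these assumptions the first sample in a batch of 32 waits about 310 ms, while a pipeline that handles each input as it arrives adds no fill delay. A GPU can also run with a batch size of 1; the trade-off is lower throughput, which is why batching is common.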

Which is better?

Neuromorphic chips excel at energy-efficient processing by mimicking the brain's neural architecture, making them well suited to real-time sensory data and cognitive computing tasks. GPUs dominate high-throughput parallel processing, supporting demanding computations in AI training, gaming, and scientific simulations, and they benefit from mature software ecosystems such as CUDA. Choosing between neuromorphic chips and GPUs depends on application-specific priorities, such as energy efficiency versus raw computational power and programmability.

Connection

Neuromorphic chips and GPUs share a common goal of improving computational efficiency through parallel processing: neuromorphic chips do so by mimicking the brain's neural architecture, and GPUs by handling massively parallel workloads. Both are specialized hardware for accelerating machine learning and artificial intelligence tasks, enabling faster data processing and better energy efficiency than general-purpose processors for those workloads. The two fields also overlap in practice: GPUs are widely used to simulate and train spiking neural networks, and applying neuromorphic principles such as sparse, event-driven computation is an active research direction for neural network simulation and real-time cognitive computing.

Key Terms

Parallel Processing

GPUs achieve parallelism with thousands of cores that apply the same operations to large blocks of data at once, which makes them well suited to AI and deep learning workloads. Neuromorphic chips instead offer event-driven parallelism: many simple neuron units operate concurrently and do work only when they receive spikes, an approach optimized for real-time sensory processing and adaptive learning.
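The contrast between the two kinds of parallelism can be sketched in a few lines of Python. This is a software analogy under simplifying assumptions, not a model of how any particular GPU or neuromorphic chip is built: inputs are binary spikes, and the function names `dense_update` and `event_driven_update` are hypothetical. The dense version does the same work on every step, the way a batched matrix operation touches every weight; the event-driven version does work only for inputs that spiked.

```python
def dense_update(weights: list[list[float]], spikes: list[int]) -> tuple[list[float], int]:
    """Clock-driven style: evaluate every connection on every step.

    Returns the summed input to each output neuron and the number of
    multiply-accumulate operations performed.
    """
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, s in enumerate(spikes):
        for j in range(n_out):
            out[j] += weights[i][j] * s  # work is done even when s == 0
            ops += 1
    return out, ops


def event_driven_update(weights: list[list[float]], spikes: list[int]) -> tuple[list[float], int]:
    """Event-driven style: only inputs that spiked touch their outgoing weights."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, s in enumerate(spikes):
        if not s:
            continue  # a silent input triggers no work at all
        for j in range(n_out):
            out[j] += weights[i][j]  # binary spike: add the weight, no multiply
            ops += 1
    return out, ops


if __name__ == "__main__":
    # Hypothetical 4-input, 2-output layer; only inputs 0 and 3 spike this step.
    weights = [[0.5, -0.2], [0.1, 0.4], [0.3, 0.3], [-0.6, 0.2]]
    spikes = [1, 0, 0, 1]
    dense_out, dense_ops = dense_update(weights, spikes)
    event_out, event_ops = event_driven_update(weights, spikes)
    assert dense_out == event_out  # same result either way
    print(dense_ops, event_ops)  # → 8 4
```

Both versions produce the same output, but the event-driven one performs work proportional to the number of spikes rather than the number of connections. GPU software can also exploit sparsity, so this illustrates the programming model rather than a hard hardware limit.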

Spiking Neural Networks

GPUs excel at parallel processing with floating-point precision, making them ideal for conventional deep learning models, while neuromorphic chips mimic brain architecture using Spiking Neural Networks (SNNs) for energy-efficient, event-driven computation. SNNs process information through discrete spikes, which lets neuromorphic hardware perform real-time sensory data analysis with lower power consumption than GPUs. This combination of SNNs and neuromorphic hardware is especially relevant to AI at the edge.
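Spike-based computation is often illustrated with the leaky integrate-and-fire (LIF) neuron, one common SNN neuron model. The sketch below assumes a forward-Euler update of dv/dt = (-v + I) / tau with hypothetical parameter values; it is not the neuron model of any specific chip. The membrane potential accumulates input, leaks toward zero, and emits a discrete spike when it crosses a threshold.

```python
def simulate_lif(
    input_current: list[float],
    tau: float = 10.0,
    dt: float = 1.0,
    v_threshold: float = 1.0,
    v_reset: float = 0.0,
) -> list[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Forward-Euler integration of dv/dt = (-v + I) / tau; all default
    parameter values are illustrative assumptions.
    """
    v = v_reset
    spike_times: list[int] = []
    for t, current in enumerate(input_current):
        v += dt * (-v + current) / tau  # leak toward 0 while integrating input
        if v >= v_threshold:
            spike_times.append(t)  # emit a discrete spike...
            v = v_reset            # ...and reset the membrane potential
    return spike_times


if __name__ == "__main__":
    print(simulate_lif([1.5] * 50))  # → [10, 21, 32, 43]
    print(simulate_lif([0.5] * 50))  # → []
```

With a constant input of 1.5 the potential climbs toward 1.5, so it repeatedly crosses the threshold of 1.0 and the neuron fires every 11 steps. An input of 0.5 never reaches threshold and produces no spikes at all; that silence is what event-driven hardware can exploit.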

Energy Efficiency

Neuromorphic chips mimic the human brain's architecture, enabling event-driven processing that drastically lowers power consumption compared to traditional GPUs. GPUs, which are designed primarily for high-throughput tasks, draw substantial power because they keep thousands of cores busy processing large-scale data in parallel. This energy advantage is a central motivation for neuromorphic technology.
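The energy argument can be reduced to first-order arithmetic: energy is roughly the number of operations times the energy per operation. The sketch below assumes the same energy per synaptic operation on both sides and ignores static (leakage) power, memory traffic, and other overheads that matter in real measurements, so it shows only how activity level drives the estimate. The function name `layer_energy_j`, the layer sizes, and the per-operation energy are hypothetical placeholders, not figures for any real chip.

```python
def layer_energy_j(
    n_inputs: int,
    fan_out: int,
    spike_rate: float,
    energy_per_op_j: float,
) -> tuple[float, float]:
    """First-order energy estimate for one layer over one time step.

    Returns (dense_energy, event_energy):
      dense: every one of n_inputs * fan_out connections is evaluated.
      event: only connections from inputs that spike are evaluated, where
             spike_rate is the expected fraction of inputs spiking per step.
    """
    if not 0.0 <= spike_rate <= 1.0:
        raise ValueError("spike_rate must be between 0 and 1")
    connections = n_inputs * fan_out
    dense_energy = connections * energy_per_op_j
    event_energy = spike_rate * connections * energy_per_op_j
    return dense_energy, event_energy


if __name__ == "__main__":
    # Hypothetical layer: 1,000 inputs, 100 outputs each, 2% of inputs spiking.
    dense, event = layer_energy_j(1000, 100, 0.02, energy_per_op_j=1e-12)
    print(f"dense: {dense:.2e} J, event-driven: {event:.2e} J, ratio: {event / dense:.2f}")
```

Under these assumptions the event-driven estimate is the dense estimate scaled by the spike rate, so the advantage shrinks as activity rises and disappears when every input spikes on every step.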

Source and External Links

What is a GPU? - A GPU is an electronic circuit designed for rapid processing of graphics and images, featuring hundreds or thousands of cores that enable parallel computing for fast rendering in gaming, video editing, and AI tasks.

What Is a GPU? Graphics Processing Units Defined - Originally developed to accelerate 3D graphics for video games, modern GPUs are now highly programmable and used for a wide range of applications, including content creation, scientific computing, and machine learning.

What is a GPU? - Graphics Processing Unit Explained - GPUs are powerful processors that handle complex mathematical calculations at high speed, essential for gaming, professional visualization, and accelerating machine learning workloads.

dowidth.com
