Diffusion models generate data by learning to reverse a gradual noising process. Starting from random noise, a trained network removes noise step by step until a realistic sample emerges, which makes these models effective for image, audio, and video generation. (The same name is also used for network diffusion models, which simulate how information or influence spreads through interconnected nodes; those are a separate technique from the generative models compared here.) Ensemble methods combine multiple machine learning models to improve predictive accuracy and robustness in tasks such as classification and regression. The sections below compare the two families and describe where each one applies.

Why it is important

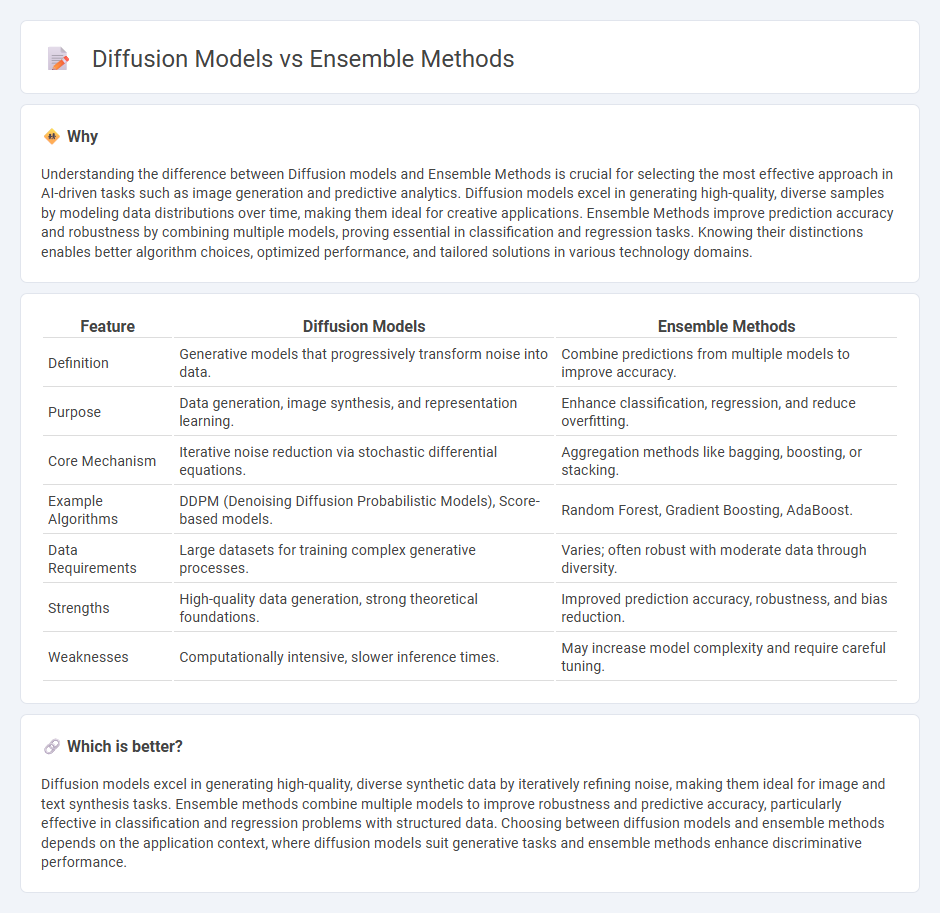

Understanding the difference between diffusion models and ensemble methods helps in choosing the right approach for a given AI task, such as image generation or predictive analytics. Diffusion models produce high-quality, diverse samples by learning how data is corrupted by noise over a sequence of steps and then reversing that process, which suits generative applications. Ensemble methods improve prediction accuracy and robustness by combining several models, which makes them a standard tool for classification and regression. Knowing how the two differ leads to better algorithm choices and better-performing solutions.

Comparison Table

| Aspect | Diffusion Models | Ensemble Methods |
|---|---|---|
| Definition | Generative models that learn to transform random noise into data through a sequence of denoising steps. | Techniques that combine predictions from multiple models to improve accuracy. |
| Purpose | Data generation, image synthesis, and representation learning. | Better classification and regression performance, with less overfitting. |
| Core Mechanism | Iterative denoising, formulated as a discrete Markov chain (DDPM) or as a reverse-time stochastic differential equation (score-based models). | Aggregation strategies such as bagging, boosting, or stacking. |
| Example Algorithms | DDPM (Denoising Diffusion Probabilistic Models), score-based generative models. | Random Forest, Gradient Boosting, AdaBoost. |
| Data Requirements | Large datasets to learn complex data distributions. | Varies; often works well with moderate amounts of data because member diversity limits overfitting. |
| Strengths | High-quality, diverse samples; strong theoretical foundations. | Higher prediction accuracy and robustness; bagging mainly reduces variance, boosting mainly reduces bias. |
| Weaknesses | Computationally intensive training; slow sampling, because inference requires many sequential denoising steps. | Larger, less interpretable models that need more compute and careful tuning. |
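The "Core Mechanism" row can be made concrete with a toy example. The sketch below runs a DDPM-style reverse chain that turns pure Gaussian noise into samples from a one-dimensional target distribution. It assumes the data follow a Gaussian N(mu, sigma^2), so the score (the gradient of the log-density) of every noised distribution is known in closed form; a real diffusion model instead trains a neural network to estimate that score or, equivalently, the added noise. The function names, the target parameters mu = 3.0 and sigma = 0.5, and the sample count are illustrative assumptions; the linear schedule (1e-4 to 0.02 over 1000 steps) follows the DDPM paper's defaults.

```python
import math
import random
import statistics


def linear_betas(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly (DDPM-paper defaults)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def sample_toy_diffusion(mu=3.0, sigma=0.5, n_samples=1000, T=1000, seed=0):
    """Turn pure noise into samples from N(mu, sigma^2) with a DDPM-style reverse chain.

    Assumption: the data are 1-D Gaussian, so the score of each noised marginal
    is known exactly. A real diffusion model learns it with a neural network.
    """
    rng = random.Random(seed)
    betas = linear_betas(T)
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta  # alpha_bar_t = prod_{s<=t} (1 - beta_s)
        alpha_bars.append(prod)

    def score(x, t):
        # Noised marginal: x_t ~ N(sqrt(alpha_bar_t) * mu, alpha_bar_t * sigma^2 + 1 - alpha_bar_t)
        abar = alpha_bars[t]
        mean = math.sqrt(abar) * mu
        var = abar * sigma ** 2 + 1.0 - abar
        return -(x - mean) / var  # gradient of the Gaussian log-density

    samples = []
    for _ in range(n_samples):
        x = rng.gauss(0.0, 1.0)  # start from pure noise x_T ~ N(0, 1)
        for t in reversed(range(T)):
            beta = betas[t]
            # Reverse step, with the predicted noise rewritten via the score:
            # x_{t-1} = (x_t + beta_t * score(x_t)) / sqrt(1 - beta_t) + sqrt(beta_t) * z
            x = (x + beta * score(x, t)) / math.sqrt(1.0 - beta)
            if t > 0:  # the final step adds no fresh noise
                x += math.sqrt(beta) * rng.gauss(0.0, 1.0)
        samples.append(x)
    return samples


samples = sample_toy_diffusion()
print(f"mean={statistics.mean(samples):.2f}  std={statistics.stdev(samples):.2f}")
```

With an exact score, the printed mean and standard deviation should land close to 3.0 and 0.5; with a learned score, sample quality depends on how well the network approximates it. The 1000 sequential steps per sample also illustrate why diffusion sampling is slow.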

Which is better?

Neither approach is better in general, because they solve different problems. Diffusion models excel at generating high-quality, diverse synthetic data by iteratively refining noise, which makes them a strong choice for image, audio, and video synthesis. Ensemble methods combine multiple models to improve robustness and predictive accuracy, and they are particularly effective for classification and regression on structured (tabular) data. The choice therefore depends on the task: diffusion models for generative tasks, ensemble methods for discriminative ones.

Connection

The two families are complementary rather than competing. Synthetic samples drawn from a trained diffusion model can augment the training set of an ensemble, which can help when labeled data is scarce or classes are imbalanced. Both techniques also use randomness to improve robustness and reduce overfitting: diffusion models inject and then remove noise, while bagging trains each member on a random bootstrap sample of the data (and random forests additionally sample random feature subsets). Ensembling ideas also carry over to generative models; for example, some text-to-image systems use several denoising networks, each specialized for a different range of noise levels.

Key Terms

Model Aggregation

Ensemble methods aggregate several predictive models, typically by averaging their outputs (regression) or taking a majority vote (classification). Averaging independently trained models mainly reduces variance, while sequential schemes such as boosting mainly reduce bias. Diffusion models do not aggregate separate models in this sense; instead, a single network is applied repeatedly, progressively denoising random noise into structured output, which lets them represent complex data distributions.
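A minimal sketch of aggregation by bagging: each member is a one-dimensional decision stump trained on a bootstrap sample (drawn with replacement), and the ensemble predicts by majority vote. The stump learner, the helper names `fit_stump`, `bagging`, and `majority_vote`, and the synthetic data with deliberately flipped labels are all hypothetical choices made for illustration, not a specific library's API.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump for 0/1 labels by trying every threshold."""
    best = None
    for thr in sorted(set(xs)):
        for label_above in (0, 1):
            errors = sum((label_above if x >= thr else 1 - label_above) != y
                         for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, thr, label_above)
    _, thr, label_above = best
    return lambda x: label_above if x >= thr else 1 - label_above


def bagging(xs, ys, n_models=25, seed=0):
    """Train each stump on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def majority_vote(models, x):
    """Aggregate the members' class predictions by majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


# Hypothetical data: label 1 when x >= 5, with every tenth label flipped as noise.
xs = [i / 10 for i in range(100)]
ys = [int(x >= 5) for x in xs]
for i in range(3, 100, 10):
    ys[i] = 1 - ys[i]

models = bagging(xs, ys)
print([majority_vote(models, x) for x in (1.0, 4.0, 6.0, 9.0)])
```

For regression, the vote would be replaced by the mean of the members' predictions, which is the averaging step that reduces variance.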

Noise Scheduling

Noise scheduling is specific to diffusion models; ensemble methods improve robustness by combining multiple predictions and have no direct counterpart to it. The noise schedule fixes how much noise is added at each step of the forward process, and therefore the signal-to-noise ratio the denoising network sees at each step, which directly affects sample quality and diversity. Common choices include the linear schedule used in the original DDPM paper and the cosine schedule, which destroys information more gradually in the middle of the process.
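The effect of a schedule can be inspected numerically. In the standard DDPM formulation, a noisy sample at step t is x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, where alpha_bar_t is the cumulative product of (1 - beta_s) and eps is standard Gaussian noise, so the signal-to-noise ratio is SNR_t = alpha_bar_t / (1 - alpha_bar_t). The sketch below computes alpha_bar_t for the linear schedule and for the cosine schedule of Nichol and Dhariwal (offset s = 0.008). One simplification is assumed: the published cosine schedule clips each per-step beta at 0.999, which this sketch skips by computing alpha_bar_t directly. The helper names are illustrative.

```python
import math


def linear_alpha_bars(T=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for linearly increasing betas."""
    alpha_bars, prod = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def cosine_alpha_bars(T=1000, s=0.008):
    """Cosine schedule: alpha_bar_t = f(t) / f(0), f(t) = cos^2(((t/T + s) / (1 + s)) * pi/2).

    Simplification: the published version clips per-step betas at 0.999; omitted here.
    """
    def f(t):
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2
    return [f(t) / f(0) for t in range(1, T + 1)]


def snr(alpha_bar):
    """Signal-to-noise ratio of x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps."""
    return alpha_bar / (1.0 - alpha_bar)


def noisy_sample(x0, alpha_bar, eps):
    """Closed-form forward process: jump straight from clean x0 to step t."""
    return math.sqrt(alpha_bar) * x0 + math.sqrt(1.0 - alpha_bar) * eps


lin_ab, cos_ab = linear_alpha_bars(), cosine_alpha_bars()
for t in (1, 250, 500, 750, 1000):
    i = t - 1  # list index for step t
    print(f"t={t:4d}  linear SNR={snr(lin_ab[i]):.3g}  cosine SNR={snr(cos_ab[i]):.3g}")
```

Both schedules drive the SNR from very high to nearly zero, but at the midpoint the cosine schedule keeps much more of the signal than the linear one, so fewer steps are spent on almost pure noise.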

Predictive Uncertainty

Ensemble methods yield predictive uncertainty estimates as well as better point predictions: the average of the members' outputs serves as the prediction, and the spread (disagreement) among members indicates how confident the ensemble is, typically growing for inputs far from the training data. Diffusion models represent uncertainty differently: because they learn a full data distribution, drawing many samples reveals the range of plausible outputs, which is useful for complex, high-dimensional generative tasks.
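A minimal sketch of ensemble-based uncertainty, under simple assumptions: 30 straight-line least-squares models, each fit to a bootstrap resample of hypothetical data generated from y = 2x + 1 plus Gaussian noise for x in [0, 1]. The mean of the members' predictions is the point estimate and their standard deviation is the uncertainty estimate. The data, the linear model, and the helper names are illustrative choices.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def bootstrap_ensemble(xs, ys, n_models=30, seed=0):
    """Fit each member on a resample drawn with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    members = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        members.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return members


def predict_with_uncertainty(members, x):
    """Mean of member predictions = estimate; their standard deviation = uncertainty."""
    preds = [a + b * x for a, b in members]
    return statistics.fmean(preds), statistics.stdev(preds)


# Hypothetical training data: y = 2x + 1 plus Gaussian noise, with x in [0, 1].
rng = random.Random(42)
xs = [i / 49 for i in range(50)]
ys = [2 * x + 1 + rng.gauss(0.0, 0.3) for x in xs]

members = bootstrap_ensemble(xs, ys)
for x in (0.5, 5.0):
    mean, std = predict_with_uncertainty(members, x)
    print(f"x={x}: prediction={mean:.2f} +/- {std:.2f}")
```

The spread is small near the middle of the training range and grows when extrapolating to x = 5, which is the behavior that makes ensemble disagreement useful as a confidence signal.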

dowidth.com
