Differentiable programming integrates gradient-based optimization directly into general-purpose programming. Any program built from differentiable operations can have its parameters tuned by gradients that are computed automatically, so the program can learn from data. Neural networks are a narrower concept: models made of interconnected layers of units loosely inspired by biological neurons. In practice, neural networks are one class of model within differentiable programming frameworks and are used mainly for pattern recognition, while differentiable programming extends to broader algorithmic applications. The sections below explore how these paradigms are reshaping machine learning and artificial intelligence development.

Why it is important

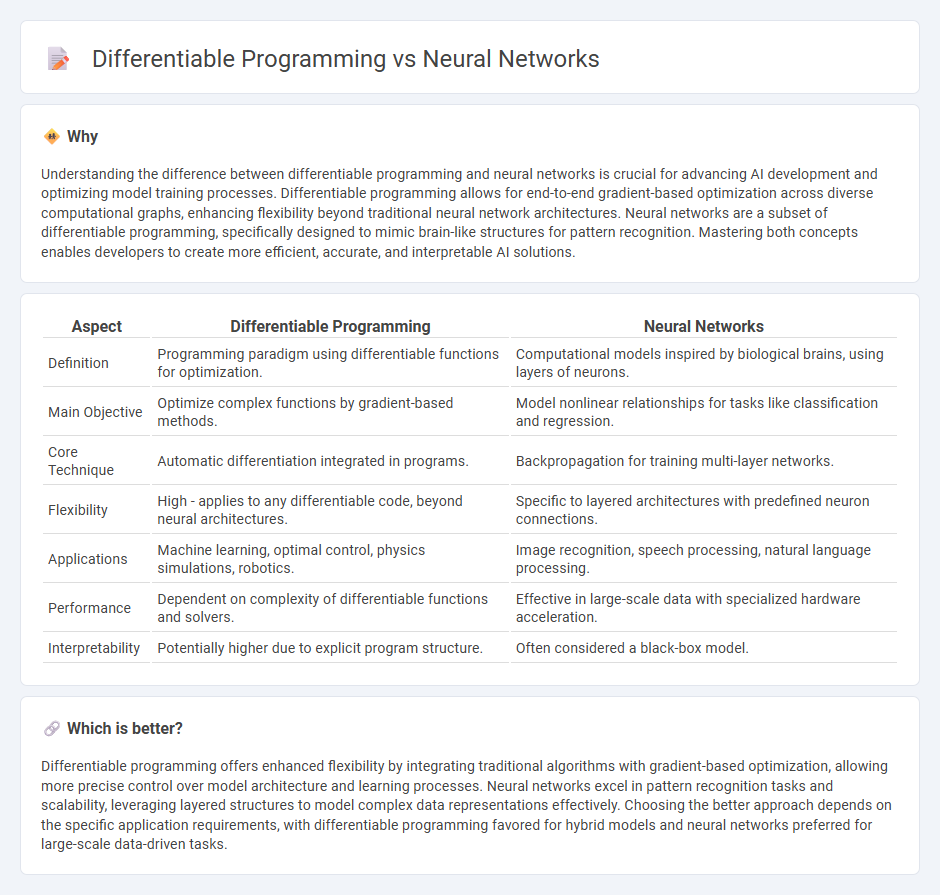

Understanding the difference between differentiable programming and neural networks is important for advancing AI development and optimizing how models are trained. Differentiable programming allows end-to-end gradient-based optimization through arbitrary computations, including loops, branches, and numerical solvers, which makes it more flexible than traditional neural network architectures alone. Neural networks can be viewed as one family of differentiable programs, built from layers loosely modeled on biological neurons and specialized for pattern recognition. Understanding both concepts helps developers build AI solutions that are more efficient, accurate, and interpretable.

Comparison Table

| Aspect | Differentiable Programming | Neural Networks |
|---|---|---|
| Definition | Programming paradigm using differentiable functions for optimization. | Computational models inspired by biological brains, using layers of neurons. |
| Main Objective | Optimize complex functions by gradient-based methods. | Model nonlinear relationships for tasks like classification and regression. |
| Core Technique | Automatic differentiation integrated in programs. | Backpropagation for training multi-layer networks. |
| Flexibility | High: applies to any differentiable code, beyond neural architectures. | Specific to layered architectures with predefined neuron connections. |
| Applications | Machine learning, optimal control, physics simulations, robotics. | Image recognition, speech processing, natural language processing. |
| Performance | Dependent on the complexity of the differentiable functions and solvers. | Effective on large-scale data with specialized hardware acceleration. |
| Interpretability | Potentially higher due to explicit program structure. | Often considered a black-box model. |

Which is better?

Differentiable programming offers enhanced flexibility by integrating traditional algorithms with gradient-based optimization, allowing more precise control over model architecture and learning processes. Neural networks excel in pattern recognition tasks and scalability, leveraging layered structures to model complex data representations effectively. Choosing the better approach depends on the specific application requirements, with differentiable programming favored for hybrid models and neural networks preferred for large-scale data-driven tasks.
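
As a minimal sketch of the hybrid style, the Python below fits the rate constant k of a conventional Euler simulation of dx/dt = -kx by gradient descent. The model, step size, learning rate, and the names `simulate_with_sensitivity` and `fit_rate` are hypothetical illustrations, not taken from any library. The derivative dx/dk is carried through the simulation loop by hand using the chain rule, which is exactly the bookkeeping a forward-mode automatic differentiation tool would generate for you.

```python
def simulate_with_sensitivity(k, x0=1.0, dt=0.1, steps=20):
    """Explicit Euler integration of dx/dt = -k * x that also returns dx_final/dk.

    Hypothetical example: the derivative update is the chain rule applied to the
    state update, i.e. what forward-mode AD would generate automatically.
    """
    x, dx_dk = x0, 0.0
    for _ in range(steps):
        # x_new = x - dt*k*x  =>  dx_new/dk = dx/dk - dt*(x + k*dx/dk)
        x, dx_dk = x - dt * k * x, dx_dk - dt * (x + k * dx_dk)
    return x, dx_dk


def fit_rate(observed, k=0.1, lr=0.5, iters=200):
    """Gradient descent on loss(k) = (x_final(k) - observed)**2."""
    for _ in range(iters):
        x_final, dx_dk = simulate_with_sensitivity(k)
        k -= lr * 2.0 * (x_final - observed) * dx_dk  # dloss/dk via the chain rule
    return k


# Synthetic "measurement" generated with a true rate of 0.5, then recovered.
observed, _ = simulate_with_sensitivity(0.5)
k_hat = fit_rate(observed)
print(round(k_hat, 4))  # 0.5
```

The simulator itself stays ordinary code; only its parameter is learned. A differentiable programming framework would derive the `dx_dk` line automatically, which is what makes this pattern practical for larger simulators.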

Connection

Differentiable programming supplies the gradient machinery that neural network training depends on. Backpropagation is reverse-mode automatic differentiation applied to a network's loss function: it computes the gradient of the loss with respect to every parameter. Neural networks use these gradients to adjust their parameters efficiently, improving performance on tasks like image recognition and natural language processing. Applying the same techniques beyond neural networks lets developers combine learned components with conventional code, making models more flexible.

Key Terms

Backpropagation

Neural networks rely heavily on backpropagation, which computes the gradient of the loss with respect to every weight so that gradient descent can update the weights. This enables efficient learning from large datasets. Differentiable programming extends the idea by computing gradients through entire programs, not just neural network layers, which adds flexibility and makes it easier to integrate learning with other algorithms. Tracing how backpropagation evolves within differentiable programming shows how it continues to advance machine learning capabilities.
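
A minimal sketch of backpropagation follows. It assumes a hypothetical toy network with one tanh hidden unit and one linear output; the weights, data point, and function names are illustrative only. The backward pass applies the chain rule from the loss toward the weights. A central finite-difference check confirms the resulting gradients, and one gradient-descent step then uses them. Frameworks automate this same bookkeeping for networks with millions of weights.

```python
import math


def forward(params, x):
    """Toy network: one tanh hidden unit feeding one linear output unit."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)  # hidden activation
    y = w2 * h + b2             # network output
    return h, y


def loss_and_grads(params, x, target):
    """Squared-error loss plus its gradient from a hand-written backward pass."""
    w2 = params[2]
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    # Backward pass: apply the chain rule from the loss back toward the weights.
    dy = y - target             # dL/dy
    dw2, db2 = dy * h, dy       # output-layer gradients
    dh = dy * w2                # gradient reaching the hidden unit
    dz = dh * (1.0 - h * h)     # tanh'(z) = 1 - tanh(z)**2
    dw1, db1 = dz * x, dz       # hidden-layer gradients
    return loss, [dw1, db1, dw2, db2]


def numerical_grads(params, x, target, eps=1e-6):
    """Central finite differences, used only to check the backward pass."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        loss_up = 0.5 * (forward(up, x)[1] - target) ** 2
        loss_down = 0.5 * (forward(down, x)[1] - target) ** 2
        grads.append((loss_up - loss_down) / (2 * eps))
    return grads


params = [0.5, -0.1, 0.8, 0.2]  # arbitrary initial [w1, b1, w2, b2]
x, target, lr = 1.5, 0.3, 0.1

loss, grads = loss_and_grads(params, x, target)
max_err = max(abs(g - n) for g, n in zip(grads, numerical_grads(params, x, target)))
print(max_err)  # should be far below 1e-6

new_params = [p - lr * g for p, g in zip(params, grads)]  # one gradient-descent step
print(loss, loss_and_grads(new_params, x, target)[0])      # loss should decrease
```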

Computational Graphs

Neural networks rely on computational graphs to represent the functions computed by their layers of interconnected neurons. Each node records an operation and its inputs, which is what makes automatic differentiation and backpropagation possible during training. Differentiable programming extends this concept: any program built from differentiable operations, not just a neural network, can be recorded as a computational graph, enabling gradient-based optimization across diverse models and algorithms. Computational graphs are therefore the common foundation that unifies these paradigms in advanced AI development.
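
The sketch below records a computational graph as a Python program runs, then walks it backward to obtain gradients (reverse-mode AD). The `Node` class is a minimal, hypothetical illustration that supports only addition and multiplication. It is written in the spirit of small teaching autograd engines rather than any framework's API. The ordinary `if` and `for` in `program` execute normally, and only the operations actually executed end up in the graph.

```python
class Node:
    """Scalar value that records how it was computed (a computational-graph node)."""

    def __init__(self, value, parents=(), local_grads=()):
        self.value = value
        self.parents = parents          # nodes this value was computed from
        self.local_grads = local_grads  # d(self)/d(parent), one per parent
        self.grad = 0.0                 # d(output)/d(self), filled in by backward()

    def __add__(self, other):
        other = other if isinstance(other, Node) else Node(other)
        return Node(self.value + other.value, (self, other), (1.0, 1.0))

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Node) else Node(other)
        return Node(self.value * other.value, (self, other), (other.value, self.value))

    __rmul__ = __mul__

    def backward(self):
        """Reverse-mode AD: propagate gradients in reverse topological order."""
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node.parents:
                    visit(parent)
                order.append(node)  # appended only after all of its inputs

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node.parents, node.local_grads):
                parent.grad += local * node.grad  # chain rule, summed over all uses


def program(x, y):
    """Ordinary Python control flow; only the executed operations enter the graph."""
    if x.value > 0:
        out = x * y
    else:
        out = x + y
    for _ in range(2):
        out = out * x
    return out


x, y = Node(2.0), Node(3.0)
out = program(x, y)  # for this input the recorded graph computes x**3 * y
out.backward()
print(out.value, x.grad, y.grad)  # 24.0 36.0 8.0
```

Because the graph is built while the program runs, a different input (for example a negative `x`) would record a different graph. Frameworks with this design are often described as "define-by-run".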

Automatic Differentiation

Neural networks use automatic differentiation (AD) to compute the gradients that backpropagation needs. These gradients are exact up to floating-point rounding and cost only a small constant multiple of evaluating the network itself, which enables precise parameter updates during training. Differentiable programming generalizes this approach by embedding AD into ordinary programming constructs such as loops, branches, and function calls, allowing gradient-based optimization beyond traditional neural network models. Automatic differentiation is the bridge between these paradigms in modern machine learning.
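
To make AD inside ordinary programming constructs concrete, here is a minimal, hypothetical `Dual` number class (forward-mode AD) that carries a value together with its derivative. Running an unmodified Newton's-method square root on a `Dual` input, including its data-dependent loop exit, yields d sqrt(a)/da alongside sqrt(a). The function names and tolerances are illustrative assumptions. Reverse mode, the form used by backpropagation, computes the same derivatives by sweeping the recorded operations backward instead.

```python
import math


class Dual:
    """Forward-mode AD number: a value plus its derivative w.r.t. one chosen input."""

    def __init__(self, val, der=0.0):
        self.val = val
        self.der = der

    @staticmethod
    def lift(x):
        """Treat plain numbers as constants (derivative 0)."""
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        other = Dual.lift(other)
        # Quotient rule: (u/v)' = (u'v - uv') / v**2
        return Dual(self.val / other.val,
                    (self.der * other.val - self.val * other.der) / other.val ** 2)


def newton_sqrt(a, tol=1e-12, max_iter=50):
    """Newton's method for sqrt(a), a > 0; works unchanged on floats or Duals."""
    x = a  # initial guess
    for _ in range(max_iter):
        new_x = 0.5 * (x + a / x)
        # Data-dependent exit, decided on the value part only.
        if abs(Dual.lift(new_x).val - Dual.lift(x).val) < tol:
            return new_x
        x = new_x
    return x


r = newton_sqrt(Dual(2.0, 1.0))  # seed da/da = 1
print(r.val, math.sqrt(2.0))                # should agree
print(r.der, 1.0 / (2.0 * math.sqrt(2.0)))  # d sqrt(a)/da = 1 / (2 sqrt(a))
```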

dowidth.com