Self-supervised learning leverages unlabeled data by creating proxy (pretext) tasks whose labels come from the data itself, learning useful representations that improve model performance without extensive labeled datasets. Meta-learning, often referred to as "learning to learn," improves an algorithm's ability to learn new tasks quickly by drawing on prior knowledge gathered across many related tasks. The sections below compare the two paradigms, their applications, and their distinct advantages in AI development.

Why it is important

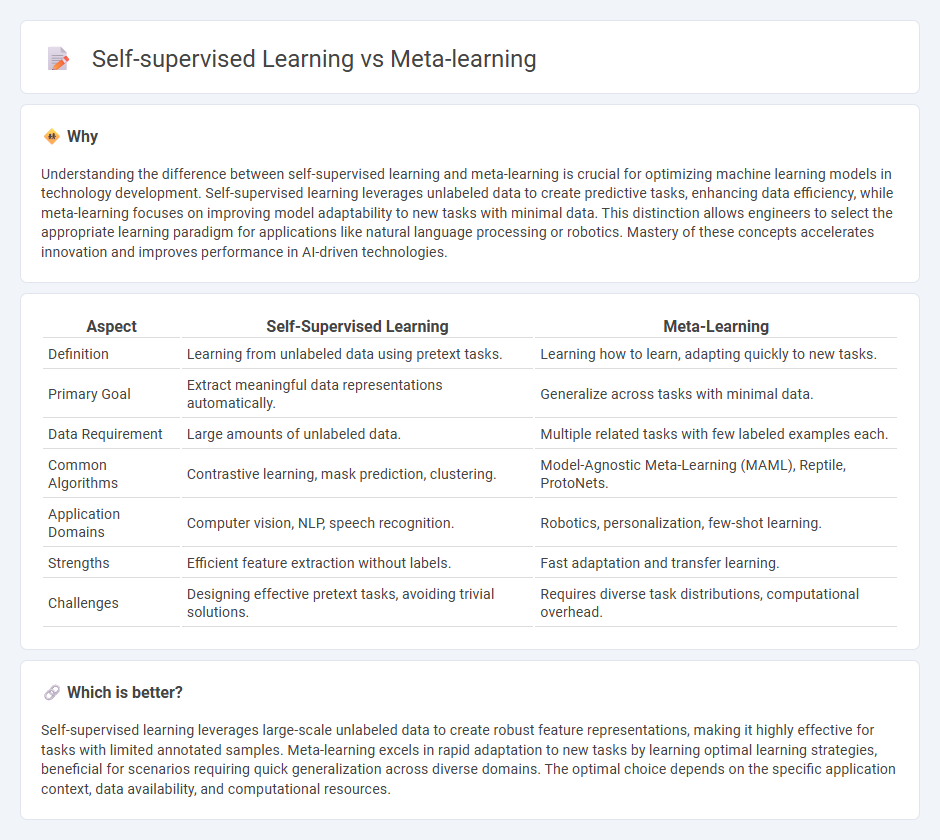

Understanding the difference between self-supervised learning and meta-learning is crucial for choosing and optimizing machine learning models. Self-supervised learning turns unlabeled data into predictive tasks, improving data efficiency, while meta-learning improves a model's ability to adapt to new tasks from minimal data. This distinction lets engineers select the appropriate learning paradigm for applications such as natural language processing or robotics, and build systems that need less labeled data and adapt faster.

Comparison Table

| Aspect | Self-Supervised Learning | Meta-Learning |
|---|---|---|
| Definition | Learning from unlabeled data using pretext tasks. | Learning how to learn, adapting quickly to new tasks. |
| Primary Goal | Extract meaningful data representations automatically. | Generalize across tasks with minimal data. |
| Data Requirement | Large amounts of unlabeled data. | Multiple related tasks, each with few labeled examples. |
| Common Algorithms | Contrastive learning, masked prediction, clustering. | Model-Agnostic Meta-Learning (MAML), Reptile, Prototypical Networks (ProtoNets). |
| Application Domains | Computer vision, NLP, speech recognition. | Robotics, personalization, few-shot learning. |
| Strengths | Efficient feature extraction without labels. | Fast adaptation and transfer learning. |
| Challenges | Designing effective pretext tasks; avoiding trivial solutions. | Requires a diverse task distribution; computational overhead (e.g., second-order gradients in MAML). |
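
Contrastive learning, listed in the table above, can be made concrete with a small sketch. The functions below compute an InfoNCE-style loss, one widely used contrastive objective: two augmented views of the same unlabeled example should be more similar to each other than to views of other examples in the batch. This is a pure-Python illustration under simplifying assumptions: the embeddings are given rather than produced by a trained encoder, and the names `cosine`, `info_nce`, and the value `temperature=0.1` are illustrative choices. Real implementations work on batched tensors in a deep-learning framework.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE-style loss (illustrative sketch).

    anchors[i] and positives[i] are embeddings of two views of the same
    unlabeled example; every positives[j] with j != i acts as a negative
    for anchors[i]. Returns the mean -log softmax probability of the
    correct pairing.
    """
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / temperature for p in positives]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]
    return total / len(anchors)
```

For example, with `anchors = [[1.0, 0.0], [0.0, 1.0]]`, the matching views `[[0.9, 0.1], [0.1, 0.9]]` give a loss close to zero, while swapping the views gives a much larger loss, because each anchor is then most similar to the wrong partner.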

Which is better?

Self-supervised learning leverages large-scale unlabeled data to create robust feature representations, making it highly effective for tasks with limited annotated samples. Meta-learning excels in rapid adaptation to new tasks by learning optimal learning strategies, beneficial for scenarios requiring quick generalization across diverse domains. The optimal choice depends on the specific application context, data availability, and computational resources.

Connection

Self-supervised learning trains predictive models on supervision signals generated from unlabeled data itself, which yields strong feature representations without explicit labels. Meta-learning trains models to adapt quickly to new tasks from minimal data by learning how to learn, and it often builds on rich representations like those produced by self-supervised methods. Combining the two can improve generalization and adaptability across applications such as natural language processing and computer vision.

Key Terms

Adaptation

Meta-learning enables rapid adaptation by training a model on many diverse tasks so that it learns how to learn; gradient-based methods such as MAML and Reptile do this by optimizing a parameter initialization from which a few gradient steps on a small dataset are enough. Self-supervised learning supports adaptation differently: it uses vast amounts of unlabeled data to learn robust representations that generalize across tasks without explicit labels, so a new task often needs only modest fine-tuning.
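
Reptile, one of the algorithms in the comparison table, shows how optimizing an initialization for fast adaptation can work. The sketch below is a toy, first-order version on one-dimensional linear regression: the inner loop runs a few SGD steps on one task's five examples, and the outer loop moves the shared initialization a fraction `epsilon` toward the adapted weights. The task family (`y = a*x + c` with random `a` and `c`), the helper names, and all hyperparameters are hypothetical choices for illustration. MAML differs in that it differentiates through the inner loop instead of using this simple interpolation.

```python
import random


def mse_and_grads(w, b, xs, ys):
    """Mean squared error of the model y = w*x + b and its gradients."""
    n = len(xs)
    errs = [w * x + b - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errs) / n
    grad_w = 2 * sum(e * x for e, x in zip(errs, xs)) / n
    grad_b = 2 * sum(errs) / n
    return loss, grad_w, grad_b


def adapt(w, b, xs, ys, steps=5, lr=0.1):
    """Inner loop: a few plain gradient steps on one task's few examples."""
    for _ in range(steps):
        _, gw, gb = mse_and_grads(w, b, xs, ys)
        w, b = w - lr * gw, b - lr * gb
    return w, b


def sample_task(rng, shots=5):
    """Hypothetical task family: y = a*x + c with task-specific a and c."""
    a, c = rng.uniform(1.0, 3.0), rng.uniform(-1.0, 1.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(shots)]
    return xs, [a * x + c for x in xs]


def reptile(meta_steps=2000, epsilon=0.1, seed=0):
    """Outer loop: nudge the shared initialization toward each task's adapted weights."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(meta_steps):
        xs, ys = sample_task(rng)
        w_task, b_task = adapt(w, b, xs, ys)
        # First-order Reptile update: theta <- theta + epsilon * (phi - theta).
        w += epsilon * (w_task - w)
        b += epsilon * (b_task - b)
    return w, b
```

After meta-training, the initialization lands near the center of the task family (`w` near 2, `b` near 0), so five gradient steps on a new task from this family reach a much lower loss than the same five steps started from `w = b = 0`.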

Representation learning

Meta-learning shapes representation learning by training models across many tasks so that the learned features support rapid adaptation to new ones; Prototypical Networks, for example, learn an embedding in which each class can be summarized by the mean of a few examples. Self-supervised learning uses pretext tasks to learn robust, generalizable feature representations without labeled data. Both approaches aim at representations that serve complex AI applications beyond the task they were trained on.
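
Prototypical Networks (ProtoNets) illustrate how a learned representation turns into few-shot adaptation: each class is represented by the mean embedding of its few labeled support examples, and a query receives the label of the nearest prototype. In the sketch below, `embed` is a hypothetical identity placeholder standing in for a trained encoder, and the helper names are likewise illustrative; only the prototype-and-nearest-neighbor logic reflects the technique.

```python
from collections import defaultdict


def embed(x):
    """Hypothetical placeholder encoder. In a real Prototypical Network this
    is a trained neural network; here it is the identity map."""
    return list(x)


def prototypes(support):
    """Mean embedding of each class's support examples: one prototype per class."""
    grouped = defaultdict(list)
    for x, label in support:
        grouped[label].append(embed(x))
    return {
        label: [sum(dim) / len(vecs) for dim in zip(*vecs)]
        for label, vecs in grouped.items()
    }


def classify(query, protos):
    """Return the label of the nearest prototype (squared Euclidean distance)."""
    q = embed(query)

    def sq_dist(p):
        return sum((a - b) ** 2 for a, b in zip(q, p))

    return min(protos, key=lambda label: sq_dist(protos[label]))
```

With the support set `[((0.0, 0.1), "cat"), ((0.2, 0.0), "cat"), ((1.0, 0.9), "dog"), ((0.8, 1.1), "dog")]`, the prototypes are roughly `(0.1, 0.05)` for "cat" and `(0.9, 1.0)` for "dog", so the query `(0.1, 0.2)` is labeled "cat". Supporting a new class only requires a few labeled examples to form its prototype; no weights are retrained.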

Data labeling

Meta-learning reduces dependence on extensive data labeling by using prior knowledge to adapt quickly to new tasks from a handful of labeled examples, whereas self-supervised learning reduces labeled-data requirements by generating supervisory signals automatically from the data itself. Both methods aim to learn efficiently when labeled data is scarce, but they approach the labeling problem from different directions: meta-learning through rapid adaptation, and self-supervised learning by exploiting the intrinsic structure of the data.
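
Generating supervisory signals from the data itself can be shown with a masked-prediction pretext task: hide some tokens of an unlabeled sentence and use the hidden tokens as the labels. This is a simplified sketch; the `[MASK]` token, `mask_prob=0.15`, whitespace tokenization, and the function name are assumptions, and practical masked-language-model recipes add further details, such as sometimes substituting a random token instead of the mask.

```python
import random

# Placeholder mask token; the exact string is an assumption, not tied to any tokenizer.
MASK = "[MASK]"


def make_masked_examples(sentences, mask_prob=0.15, seed=0):
    """Turn unlabeled sentences into (masked_tokens, targets) training pairs.

    Each token is hidden with probability mask_prob; targets maps each hidden
    position to its original token, so the labels come from the data itself.
    """
    rng = random.Random(seed)
    examples = []
    for sentence in sentences:
        tokens = sentence.split()  # naive whitespace tokenization
        masked, targets = [], {}
        for i, tok in enumerate(tokens):
            if rng.random() < mask_prob:
                masked.append(MASK)
                targets[i] = tok  # label derived from the raw text
            else:
                masked.append(tok)
        if targets:  # skip sentences where nothing happened to be masked
            examples.append((masked, targets))
    return examples
```

Each returned pair holds the masked token list and a `{position: original token}` dictionary; a model trained to fill in the masks learns from raw text alone, with no human annotation.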
