Graph Neural Networks (GNNs) are designed to capture relationships and structure in graph-structured data, which makes them a natural fit for social networks, molecular analysis, and recommendation systems. Transformers were developed for sequence modeling. They are now standard in natural language processing and widely used in image recognition, where self-attention lets them capture long-range dependencies. The sections below compare the two architectures, their capabilities, and their typical use cases.

Why it is important

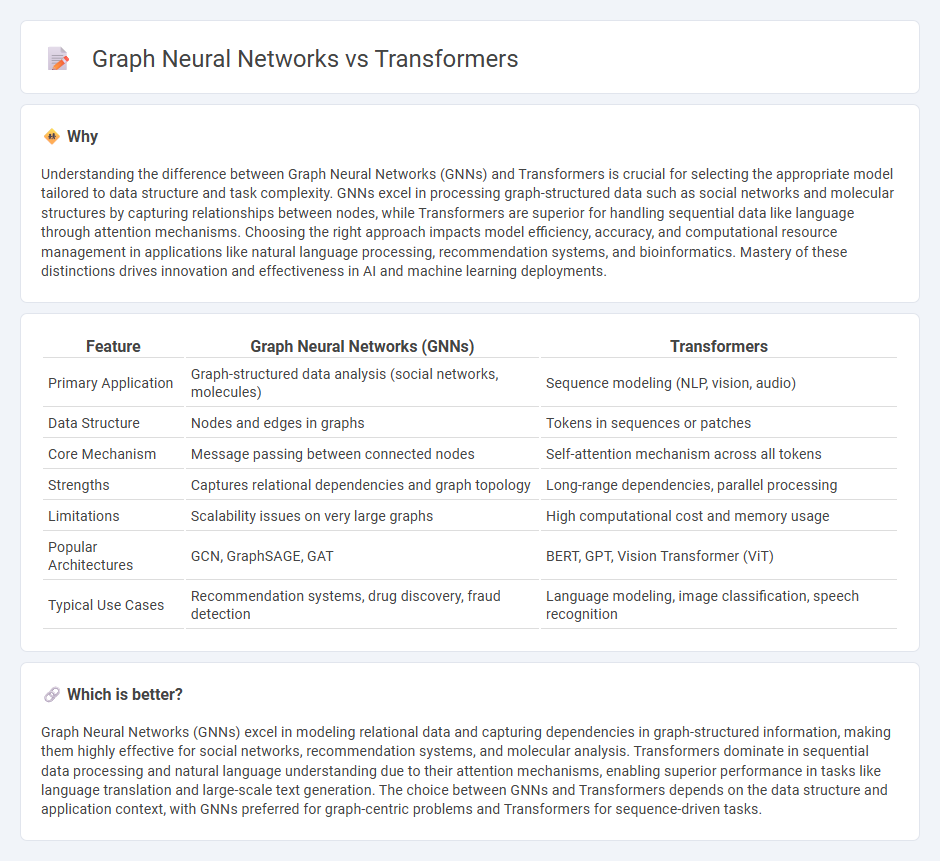

Understanding the difference between Graph Neural Networks (GNNs) and Transformers helps in selecting a model that fits the structure of the data and the complexity of the task. GNNs process graph-structured data, such as social networks and molecular structures, by explicitly modeling the relationships between nodes. Transformers are better suited to sequential data such as language, which they process with attention mechanisms. The choice affects model efficiency, accuracy, and computational cost in applications like natural language processing, recommendation systems, and bioinformatics.

Comparison Table

| Feature | Graph Neural Networks (GNNs) | Transformers |
|---|---|---|
| Primary Application | Graph-structured data analysis (social networks, molecules) | Sequence modeling (NLP, vision, audio) |
| Data Structure | Nodes and edges of a graph | Tokens in a sequence (or image/audio patches) |
| Core Mechanism | Message passing between connected nodes | Self-attention across all tokens |
| Strengths | Captures relational dependencies and graph topology | Captures long-range dependencies; processes all tokens in parallel |
| Limitations | Scalability issues on very large graphs | Compute and memory grow quadratically with sequence length |
| Popular Architectures | GCN, GraphSAGE, GAT | BERT, GPT, Vision Transformer (ViT) |
| Typical Use Cases | Recommendation systems, drug discovery, fraud detection | Language modeling, image classification, speech recognition |
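
To make the "Core Mechanism" row concrete, here is a minimal NumPy sketch contrasting one GCN-style message-passing layer with single-head scaled dot-product self-attention. The function names, the toy 4-node path graph, and the random weights are illustrative assumptions, not a reference implementation. The GCN layer uses the common symmetric normalization `D^-1/2 (A + I) D^-1/2`.

```python
import numpy as np

def gcn_layer(A, X, W):
    """One GCN-style message-passing layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    A_hat = A + np.eye(A.shape[0])            # self-loops so each node keeps its own features
    deg = A_hat.sum(axis=1)                   # node degrees, including the self-loop
    D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))  # symmetric degree normalization
    return np.maximum(0.0, D_inv_sqrt @ A_hat @ D_inv_sqrt @ X @ W)

def softmax(scores, axis=-1):
    scores = scores - scores.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(scores)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over all tokens."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    weights = softmax(Q @ K.T / np.sqrt(K.shape[1]))  # (n, n): every token attends to every token
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d = 4, 3
X = rng.normal(size=(n, d))
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)  # path graph 0-1-2-3
H_gnn = gcn_layer(A, X, rng.normal(size=(d, d)))
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))
H_tf, attn = self_attention(X, Wq, Wk, Wv)
print(H_gnn.shape, H_tf.shape, attn.shape)  # (4, 3) (4, 3) (4, 4)
```

In the GCN layer, node 0's output depends only on itself and its neighbor (node 1). The attention weight matrix, by contrast, is dense, so every token's output depends on every other token. That dense n × n matrix is also why standard attention cost grows quadratically with sequence length.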

Which is better?

Graph Neural Networks (GNNs) excel at modeling relational data and capturing dependencies in graph-structured information, which makes them effective for social networks, recommendation systems, and molecular analysis. Transformers dominate sequential data processing and natural language understanding thanks to their attention mechanisms, which underpin their strong performance in tasks like machine translation and large-scale text generation. Neither is better in general. The choice depends on the data structure and the application: GNNs are preferred for graph-centric problems and Transformers for sequence-driven tasks.

Connection

Graph neural networks (GNNs) and Transformers are closely related. Self-attention can be viewed as message passing over a fully connected graph in which every token is linked to every other token. GNNs restrict this exchange to the edges of an explicit graph, which emphasizes local structure, while Transformers model global context. Graph attention layers, inspired by Transformer-style attention, use learned weights to decide how much each neighbor's message contributes, improving message passing and node representation learning. This combination has been applied to molecule classification, social network analysis, and recommendation systems.
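
The relationship between the two can be sketched by running the same attention computation under two masks. A complete-graph mask reproduces ordinary Transformer self-attention. A mask built from the adjacency matrix (plus self-loops) limits each node to its neighbors, as a GNN does. This is a simplified sketch: it assumes Q = K = V = X (no learned projections), and `masked_attention` is a hypothetical helper.

```python
import numpy as np

def masked_attention(X, mask):
    """Scaled dot-product attention where row i may attend to column j only if mask[i, j].

    Simplification: queries, keys, and values are all X (no learned projections).
    Every row of mask must contain at least one True entry.
    """
    scores = X @ X.T / np.sqrt(X.shape[1])
    scores = np.where(mask, scores, -np.inf)           # forbid attention along non-edges
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)                           # exp(-inf) = 0 for masked pairs
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ X, weights

rng = np.random.default_rng(1)
X = rng.normal(size=(4, 2))
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=bool)            # path graph 0-1-2-3
graph_mask = A | np.eye(4, dtype=bool)              # neighbors plus self: GNN-style locality
full_mask = np.ones((4, 4), dtype=bool)             # complete graph: standard self-attention

_, w_graph = masked_attention(X, graph_mask)
_, w_full = masked_attention(X, full_mask)
print(np.round(w_graph, 2))  # zero weight wherever two nodes are not adjacent
```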

Key Terms

Attention Mechanism

Transformers use self-attention to capture long-range dependencies. Each token assigns a weight to every other token based on its query and key vectors. Because the weights for all positions are computed at once, the computation parallelizes well across a sequence, which suits natural language processing tasks. Graph Neural Networks (GNNs) use attention in variants such as Graph Attention Networks (GATs), where each node weights its neighbors by learned importance when aggregating their features. The key difference is scope: Transformer self-attention covers all tokens, while graph attention is restricted to a node's neighborhood in the graph.
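
A single GAT-style attention head can be sketched as follows. It uses the scoring rule from the original GAT paper, `e_ij = LeakyReLU(a^T [W h_i || W h_j])`, normalized with a softmax over each node's neighbors (including itself). Unlike Transformer attention, the score comes from a learned vector `a` applied to concatenated features rather than a query-key dot product, and non-neighbors are masked out. The helper names, the 0.2 LeakyReLU slope (the value used in the GAT paper), and the omission of the output nonlinearity and multi-head concatenation are simplifying assumptions.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_layer(A, H, W, a):
    """Single-head GAT-style layer (sketch; output nonlinearity omitted).

    A: (n, n) 0/1 adjacency, H: (n, f) node features,
    W: (f, f_out) shared projection, a: (2 * f_out,) attention vector.
    """
    n = A.shape[0]
    Z = H @ W                                         # project every node
    f_out = Z.shape[1]
    # a^T [z_i || z_j] splits into a_left . z_i + a_right . z_j
    src = Z @ a[:f_out]                               # (n,) term for the attending node i
    dst = Z @ a[f_out:]                               # (n,) term for the neighbor j
    e = leaky_relu(src[:, None] + dst[None, :])       # (n, n) raw scores e_ij
    neighbors = (A > 0) | np.eye(n, dtype=bool)       # attend over N(i) plus i itself
    e = np.where(neighbors, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)  # softmax over each neighborhood
    return alpha @ Z, alpha                           # weighted sum of neighbor projections

rng = np.random.default_rng(2)
A = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 0, 0]], dtype=float)               # star graph centered on node 0
H = rng.normal(size=(3, 4))
out, alpha = gat_layer(A, H, rng.normal(size=(4, 2)), rng.normal(size=4))
print(np.round(alpha, 2))  # nodes 1 and 2 are not adjacent, so they never attend to each other
```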

Node Embeddings

Transformers adapted to graphs can use self-attention to capture long-range dependencies between nodes, producing context-aware node embeddings that are not limited to a node's immediate neighborhood. Graph Neural Networks (GNNs) instead aggregate information from each node's neighbors, so their embeddings encode local structural and feature-based properties of the graph; stacking k layers widens this view to the k-hop neighborhood. These local embeddings are critical for tasks like node classification and link prediction. Hybrid architectures aim to combine both strengths to improve embedding quality on complex graph datasets.
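
Neighborhood aggregation can be sketched with a GraphSAGE-style mean aggregator. Each node concatenates its own features with the mean of its neighbors' features, applies a linear map and ReLU, then L2-normalizes the result. Stacking two layers gives each embedding a two-hop receptive field. The function name, weight shapes, and the choice to give isolated nodes a zero neighbor mean are assumptions of this sketch.

```python
import numpy as np

def sage_mean_layer(A, H, W):
    """GraphSAGE-style layer with a mean aggregator (sketch).

    A: (n, n) 0/1 adjacency, H: (n, f) features, W: (2 * f, f_out) weights.
    """
    deg = A.sum(axis=1, keepdims=True)
    # Mean of neighbor features; isolated nodes (degree 0) get a zero vector instead of NaN
    neigh_mean = np.divide(A @ H, deg, out=np.zeros_like(H, dtype=float), where=deg > 0)
    Z = np.maximum(0.0, np.concatenate([H, neigh_mean], axis=1) @ W)  # ReLU(W [h_v || mean])
    norms = np.linalg.norm(Z, axis=1, keepdims=True)
    return np.divide(Z, norms, out=np.zeros_like(Z), where=norms > 0)  # L2-normalize rows

rng = np.random.default_rng(3)
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 0],
              [0, 0, 0, 0]], dtype=float)   # path 0-1-2 plus isolated node 3
H = rng.normal(size=(4, 3))
W1, W2 = rng.normal(size=(6, 8)), rng.normal(size=(16, 8))
emb = sage_mean_layer(A, sage_mean_layer(A, H, W1), W2)  # two layers: 2-hop receptive field
print(emb.shape)  # (4, 8)
```

Because node 3 has no neighbors, its embedding depends only on its own features: changing any other node's features leaves it unchanged, which illustrates how GNN embeddings are bounded by graph locality.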

Graph Structure

Graph Neural Networks (GNNs) use explicit graph structure directly, modeling node relationships and edge attributes, which lets them learn efficiently from complex relational data. Transformers capture long-range dependencies well but have no built-in notion of graph structure, so applying them to graphs usually requires adaptations such as graph-aware positional encodings. Weighing these trade-offs helps in choosing the right architecture for a given graph problem.
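
One common graph-aware positional encoding uses eigenvectors of the symmetric normalized graph Laplacian `L = I - D^-1/2 A D^-1/2`. The eigenvectors with the smallest nonzero eigenvalues vary smoothly over the graph and can be concatenated to node features before they enter a Transformer. The sketch below assumes a connected, undirected graph with no isolated nodes; `laplacian_pe` is a hypothetical helper name.

```python
import numpy as np

def laplacian_pe(A, k):
    """k-dimensional Laplacian eigenvector positional encodings (sketch).

    Assumes a connected, undirected graph in which every node has degree > 0.
    """
    deg = A.sum(axis=1)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    L = np.eye(A.shape[0]) - D_inv_sqrt @ A @ D_inv_sqrt  # symmetric normalized Laplacian
    eigvals, eigvecs = np.linalg.eigh(L)                  # eigenvalues in ascending order
    return eigvecs[:, 1:k + 1]                            # skip the trivial eigenvalue-0 vector

n = 6
A = np.zeros((n, n))
for i in range(n):                         # 6-node cycle graph
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0
pe = laplacian_pe(A, k=2)
X = np.random.default_rng(4).normal(size=(n, 3))
tokens = np.concatenate([X, pe], axis=1)   # node features + structural position for a Transformer
print(tokens.shape)  # (6, 5)
```

Eigenvector signs are arbitrary, and for repeated eigenvalues (as in this cycle graph) the basis itself is not unique, so a model consuming these encodings has to tolerate that ambiguity.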
