Privacy Sandbox and Differential Privacy are two approaches to protecting user data. Privacy Sandbox is a set of browser-based proposals that aims to support targeted advertising while minimizing cross-site tracking, whereas Differential Privacy is a mathematical framework that adds calibrated statistical noise to the results of data analysis so that individual information remains confidential. The sections below compare their goals, methods, and applications.

Why it is important

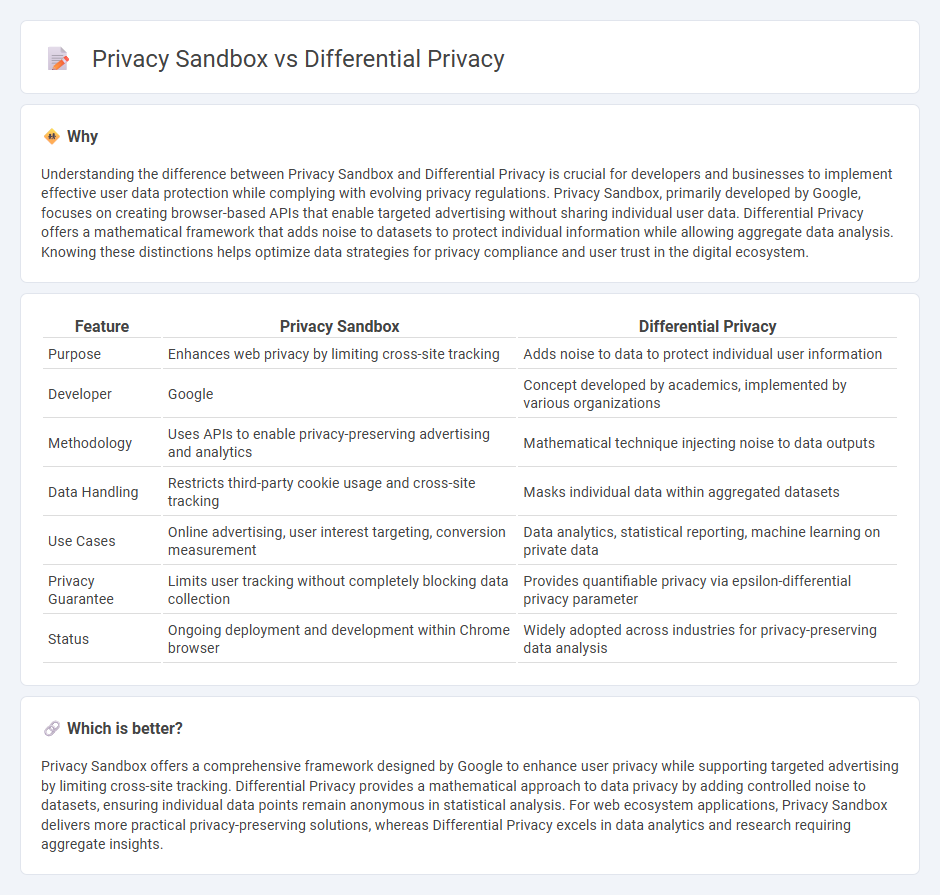

Understanding the difference between Privacy Sandbox and Differential Privacy helps developers and businesses protect user data effectively while complying with evolving privacy regulations. Privacy Sandbox, primarily developed by Google, focuses on browser-based APIs that enable targeted advertising without exposing individual users' cross-site activity. Differential Privacy is a mathematical framework that adds noise to the results of data analysis, protecting individual information while still allowing aggregate analysis. Knowing these distinctions helps teams choose the right tool for privacy compliance and user trust.

Comparison Table

| Feature | Privacy Sandbox | Differential Privacy |
|---|---|---|
| Purpose | Enhances web privacy by limiting cross-site tracking | Adds noise to data to protect individual user information |
| Developer | Primarily Google | Concept developed by academics, implemented by various organizations |
| Methodology | Uses APIs to enable privacy-preserving advertising and analytics | Mathematical technique injecting noise into data outputs |
| Data Handling | Restricts third-party cookie usage and cross-site tracking | Masks individual data within aggregated datasets |
| Use Cases | Online advertising, user interest targeting, conversion measurement | Data analytics, statistical reporting, machine learning on private data |
| Privacy Guarantee | Limits user tracking without completely blocking data collection | Provides a quantifiable guarantee via the privacy parameter epsilon |
| Status | Ongoing deployment and development within the Chrome browser | Widely adopted across industries for privacy-preserving data analysis |

Which is better?

Neither is better in general, because they solve different problems. Privacy Sandbox is a framework designed by Google to improve user privacy on the web while still supporting targeted advertising by limiting cross-site tracking. Differential Privacy is a mathematical approach that adds controlled noise to the results of statistical analysis, so that no individual's data can be singled out. For web advertising use cases, Privacy Sandbox offers the more directly applicable tools, whereas Differential Privacy excels in data analytics and research that need aggregate insights.
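
The quantifiable guarantee behind Differential Privacy can be stated compactly. The following is the standard textbook definition rather than anything specific to this comparison; the symbols M (a randomized analysis), D and D' (two datasets that differ in one individual's data), and S (any set of possible outputs) are notation introduced here for illustration.

$$
\Pr[\,M(D) \in S\,] \;\le\; e^{\varepsilon} \cdot \Pr[\,M(D') \in S\,]
$$

A smaller epsilon forces the two probabilities closer together, which means stronger privacy but usually noisier results.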

Connection

Privacy Sandbox and Differential Privacy are connected by a shared goal: letting useful aggregate information flow while protecting individual users. Privacy Sandbox, developed by Google, applies Differential Privacy-style techniques in some of its APIs, for example by adding noise to aggregated measurement reports, which reduces the risk of personal identification. This combination allows advertisers to gather aggregated insights without compromising individual user confidentiality.

Key Terms

Noise Injection

Differential Privacy protects data by adding carefully calibrated random noise to the results of computations, so that the output changes very little whether or not any one person's data is included, while overall data utility is retained. The amount of noise depends on the privacy parameter epsilon and on how much a single individual can change the result (the sensitivity). Privacy Sandbox applies noise within some of its browser-based APIs, for example in aggregated measurement reports, to limit what can be learned about any one user while still supporting advertising needs.
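
As a rough illustration of noise injection, the sketch below applies the Laplace mechanism, a common Differential Privacy technique, to a simple count. The function names `laplace_noise` and `private_count` are hypothetical, and the sketch assumes each person changes the count by at most 1 (sensitivity 1). It is not hardened for production use; plain floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Return a noisy count using the Laplace mechanism.

    Assumption: adding or removing one person changes the count by at
    most `sensitivity`, so the noise scale is sensitivity / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only to make the demo repeatable
print(f"noisy count: {private_count(100, epsilon=0.5, rng=rng):.2f}")
```

Lowering `epsilon` widens the noise, which trades accuracy for stronger privacy.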

Federated Learning

In Federated Learning, devices train a shared model locally and send only model updates, not raw data, to a central server. Differential Privacy is often combined with it: each update is bounded and noise is added, so that the trained model reveals minimal information about any specific user. Privacy Sandbox, by contrast, is not a federated learning system; it focuses on limiting cross-site tracking and enhancing user privacy in the browser. Its main link to the term is the now-retired FLoC proposal (Federated Learning of Cohorts), which was replaced by the Topics API.
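
One common way to combine the two ideas is to clip each client's model update and add Gaussian noise to the sum before averaging. The sketch below shows only that aggregation step; `clip_update`, `private_average`, `clip_norm`, and `noise_multiplier` are hypothetical names chosen for illustration, and the actual privacy level achieved depends on accounting (number of rounds, client sampling) that is not computed here.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale an update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= clip_norm:
        return list(update)
    factor = clip_norm / norm
    return [x * factor for x in update]


def private_average(updates, clip_norm, noise_multiplier, rng):
    """Average client updates after clipping and adding Gaussian noise.

    Clipping bounds how much one client can move the sum; the noise
    standard deviation is noise_multiplier * clip_norm per coordinate.
    """
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(clipped[0])
    total = [sum(u[i] for u in clipped) for i in range(dim)]
    sigma = noise_multiplier * clip_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(updates) for v in noisy]


rng = random.Random(0)  # seeded only to make the demo repeatable
client_updates = [[3.0, 4.0], [0.3, 0.4], [-0.2, 0.1]]
print(private_average(client_updates, clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

With only three clients the noise dominates the result; in practice the average is taken over many clients so the noise per client becomes small.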

Aggregation

Differential Privacy supports aggregation by adding controlled noise, either to each individual's contribution before it is collected (the local model) or to the aggregated result (the central model), so that statistics stay accurate enough to be useful while user privacy is preserved. Privacy Sandbox also works in aggregate: it relies on cohort- or topic-based advertising signals and summary-level measurement rather than exposing individual identities. Both approaches trade some data utility for user protection in large-scale data aggregation.
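
A simple way to see noise on individual data points followed by aggregation is randomized response, a classic local Differential Privacy technique. In the sketch below, each user reports a yes/no answer truthfully only with a probability set by epsilon, and the aggregator corrects for the known flip rate. The function names are hypothetical, and the simulated population is made up purely for the demonstration.

```python
import math
import random


def truth_probability(epsilon):
    """Probability of reporting the true bit under randomized response."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomized_response(true_bit, epsilon, rng):
    """Report the true bit with probability p, otherwise the opposite."""
    if rng.random() < truth_probability(epsilon):
        return true_bit
    return not true_bit


def estimate_rate(reports, epsilon):
    """Debias the observed 'yes' fraction to estimate the true rate.

    The raw estimate can fall slightly outside [0, 1] for small samples.
    """
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


rng = random.Random(0)  # seeded only to make the demo repeatable
true_bits = [rng.random() < 0.3 for _ in range(20000)]  # simulated users
reports = [randomized_response(b, epsilon=1.0, rng=rng) for b in true_bits]
print(f"estimated rate: {estimate_rate(reports, epsilon=1.0):.3f}")
```

No single report can be trusted on its own, yet the aggregate estimate stays close to the true rate when many users contribute.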

