Diffusion models generate data by starting from random noise and refining it through a series of denoising steps, which yields high-quality, diverse outputs. Recurrent Neural Networks (RNNs) process sequential data one element at a time and carry information forward in a hidden state, which suits tasks such as language modeling and time series prediction. Diffusion models are built on stochastic processes and have gained prominence in image and audio synthesis. RNNs apply a deterministic state update at each step and often suffer from vanishing gradients on long sequences. The sections that follow cover the fundamental differences between the two and where each is applied.

Why it is important

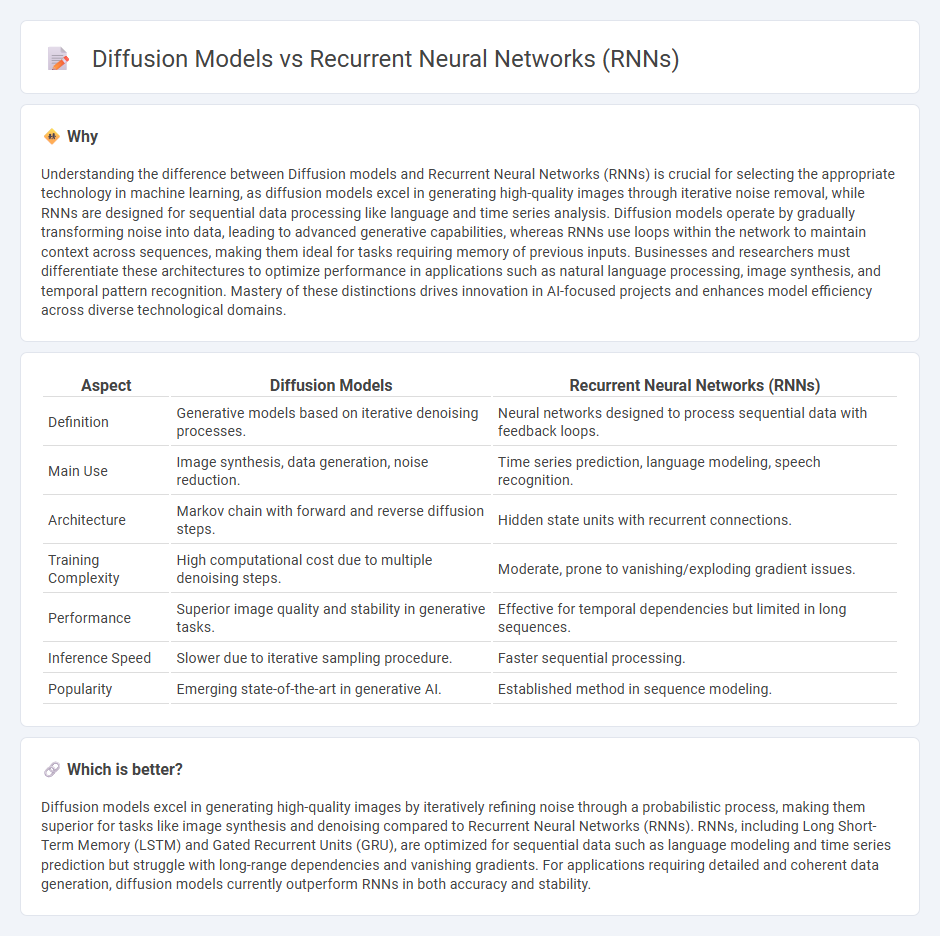

Understanding the difference between diffusion models and Recurrent Neural Networks (RNNs) matters when choosing a machine learning approach, because the two solve different problems. Diffusion models generate data, most notably high-quality images, by gradually transforming noise into a sample. RNNs process sequential data such as language and time series by looping their hidden state back into the network, so that each step has access to a summary of the previous inputs. Businesses and researchers who distinguish the two correctly can match the architecture to the application, whether that is natural language processing, image synthesis, or temporal pattern recognition.
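The loop that lets an RNN keep context can be sketched in a few lines. This is a minimal scalar illustration, not a production layer: the function names, the weights `w_xh` and `w_hh`, and the input values are all assumptions chosen for the example.

```python
import math

def rnn_step(x, h, w_xh, w_hh, b):
    """One Elman-style update: h_new = tanh(w_xh * x + w_hh * h + b)."""
    return math.tanh(w_xh * x + w_hh * h + b)

def run_rnn(sequence, w_xh=0.8, w_hh=0.5, b=0.0):
    """Feed a sequence through the recurrence and return every hidden state."""
    h = 0.0  # initial hidden state: no context yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)  # previous state feeds the next step
        states.append(h)
    return states

# Same inputs, different order (illustrative values only).
forward = run_rnn([1.0, 0.0, -1.0])
reversed_order = run_rnn([-1.0, 0.0, 1.0])
print(forward[-1], reversed_order[-1])
```

The two final states differ even though both sequences contain the same values, which is the sense in which the hidden state remembers the order of previous inputs.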

Comparison Table

| Aspect | Diffusion Models | Recurrent Neural Networks (RNNs) |
|---|---|---|
| Definition | Generative models based on iterative denoising processes. | Neural networks designed to process sequential data with feedback loops. |
| Main Use | Image synthesis, data generation, noise reduction. | Time series prediction, language modeling, speech recognition. |
| Architecture | Markov chain with forward (noising) and reverse (denoising) diffusion steps. | Hidden state units with recurrent connections. |
| Training Complexity | High computational cost: the denoiser must learn to handle many noise levels. | Moderate, but prone to vanishing/exploding gradient issues. |
| Performance | Superior image quality and stability in generative tasks. | Effective for temporal dependencies but limited on long sequences. |
| Inference Speed | Slower: each sample requires many sequential denoising steps. | Faster: one update per sequence element. |
| Popularity | Emerging state of the art in generative AI. | Established method in sequence modeling. |

Which is better?

The answer depends on the task. Diffusion models excel at generating high-quality images by iteratively refining noise through a probabilistic process, which makes them the stronger choice for image synthesis and denoising. RNNs are built for sequential data such as language modeling and time series prediction. Plain RNNs struggle with long-range dependencies because of vanishing gradients; gated variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) reduce the problem but do not remove it. For applications that require detailed and coherent data generation, diffusion models currently produce higher-quality samples and train more stably than RNN-based generators.
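The vanishing gradient problem can be seen numerically in a toy case. The sketch below assumes a scalar tanh RNN with a constant input; the function name, the weight `w_hh = 0.9`, and the input `x = 0.5` are arbitrary choices for illustration, and real networks use weight matrices rather than a single number.

```python
import math

def gradient_through_time(steps, w_hh=0.9, x=0.5):
    """Return |d h_T / d h_0| for the scalar RNN h_t = tanh(w_hh * h_{t-1} + x)."""
    h = 0.0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w_hh * h + x)
        # Chain rule: each step multiplies the gradient by w_hh * (1 - h_t^2).
        grad *= w_hh * (1.0 - h * h)
    return abs(grad)

for steps in (1, 10, 50):
    print(steps, gradient_through_time(steps))
```

Every factor in the product is smaller than 1 here, so the influence of the initial state on the final state shrinks geometrically with sequence length. This is the effect that gating in LSTM and GRU cells is designed to counteract.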

Connection

Diffusion models and Recurrent Neural Networks (RNNs) are connected by their step-by-step structure: both apply the same network repeatedly. An RNN steps along the positions of a sequence and maintains a hidden state that captures context across time steps. A diffusion model steps along noise levels and gradually turns noise into data. The word "diffusion" here refers to this noising and denoising process, not to the spread of information over a graph. The two approaches can also be combined. For example, an RNN can summarize the history of a time series into a hidden state, and that state can condition a diffusion model that generates the next values, pairing temporal sequence modeling with the sample quality of diffusion.

Key Terms

Sequence Modeling

Recurrent Neural Networks (RNNs) model sequential data by maintaining hidden states that capture temporal dependencies, which makes them effective for tasks like language modeling and time series prediction. Diffusion models generate data by iteratively refining noise through a Markov chain: a forward process adds a small amount of Gaussian noise at each step until the data is indistinguishable from noise, and a learned reverse process undoes those steps. When applied to sequences, diffusion models offer strong generative quality and stable training dynamics.
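The forward (noising) half of that Markov chain has a convenient closed form, sketched below for a single scalar value. The linear schedule from 1e-4 to 0.02 over 1000 steps is a commonly used default and is an assumption here, as are the helper names `linear_betas`, `cumulative_alphas`, and `noise_sample`.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the surviving signal fraction."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def noise_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t)*x0 + sqrt(1-alpha_bar_t)*eps."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * eps

betas = linear_betas(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
for t in (0, 499, 999):
    # Print the step, the weight on the original value, and one noised sample.
    print(t, math.sqrt(alpha_bars[t]), noise_sample(2.0, t, alpha_bars, rng))
```

The weight on the original value starts close to 1 and decays toward 0, so by the last step the sample is almost pure Gaussian noise. Training a diffusion model amounts to teaching a network to predict the noise that was added at a randomly chosen step.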

Iterative Generation

Both architectures generate iteratively, but they iterate over different things. RNNs generate sequences one element at a time, updating a hidden state after each element so that later outputs depend on earlier ones. Diffusion models start from a full-sized sample of pure noise and refine all of it at every step, following a stepwise reverse diffusion process. This enables high-quality generative tasks such as image synthesis and audio generation, at the cost of many network evaluations per sample.
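The reverse diffusion loop can be sketched on a toy problem where no training is needed. The example below assumes the "dataset" is a one-dimensional Gaussian with mean `MU` and standard deviation `SIGMA`, for which the ideal noise prediction has an exact formula; `predict_noise` therefore stands in for a trained neural network. The schedule, the constants, and the function names are all assumptions, and the update rule is the standard DDPM-style ancestral sampling step.

```python
import math
import random

MU, SIGMA = 3.0, 0.5   # hypothetical 1-D "dataset": x0 ~ N(MU, SIGMA^2)
T = 300                # number of denoising steps (assumed)
BETAS = [1e-4 + i * (0.05 - 1e-4) / (T - 1) for i in range(T)]
ALPHA_BARS = []
_prod = 1.0
for _beta in BETAS:
    _prod *= 1.0 - _beta
    ALPHA_BARS.append(_prod)

def predict_noise(x_t, t):
    """Exact E[eps | x_t] for Gaussian data; stands in for a trained network."""
    ab = ALPHA_BARS[t]
    var_t = ab * SIGMA ** 2 + (1.0 - ab)  # variance of x_t under the forward process
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * MU) / var_t

def sample(rng):
    """Run the reverse chain from pure noise down to a data sample."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t)
        # Remove the predicted noise and rescale (mean of the reverse step).
        mean = (x - BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t]) * eps_hat) / math.sqrt(1.0 - BETAS[t])
        # Re-inject a little fresh noise, except on the final step.
        noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + math.sqrt(BETAS[t]) * noise
    return x

rng = random.Random(0)
samples = [sample(rng) for _ in range(500)]
sample_mean = sum(samples) / len(samples)
print(sample_mean)
```

Each sample costs `T` evaluations of the noise predictor, which is why diffusion sampling is slow compared with a single pass through an RNN. The generated values should cluster around `MU`, showing that the loop turns standard normal noise into draws from the target distribution.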

Temporal Dependency

Recurrent Neural Networks (RNNs) specialize in capturing temporal dependencies through sequential processing and internal memory states, making them effective for time-series analysis and language modeling. Diffusion models are primarily designed for generative tasks, and their "time steps" index noise levels rather than positions in the data. A diffusion model can still capture temporal dynamics, but only through the network that performs the denoising or through conditioning on past observations.

