Multimodal AI integrates data from multiple sources, such as text, images, and audio, to improve machine understanding and decision-making across diverse applications. Transfer learning reuses models pre-trained on large datasets and adapts them to new, specific tasks with little additional data, which improves efficiency and often accuracy. The sections below compare the two approaches and explain how they connect.

Why it is important

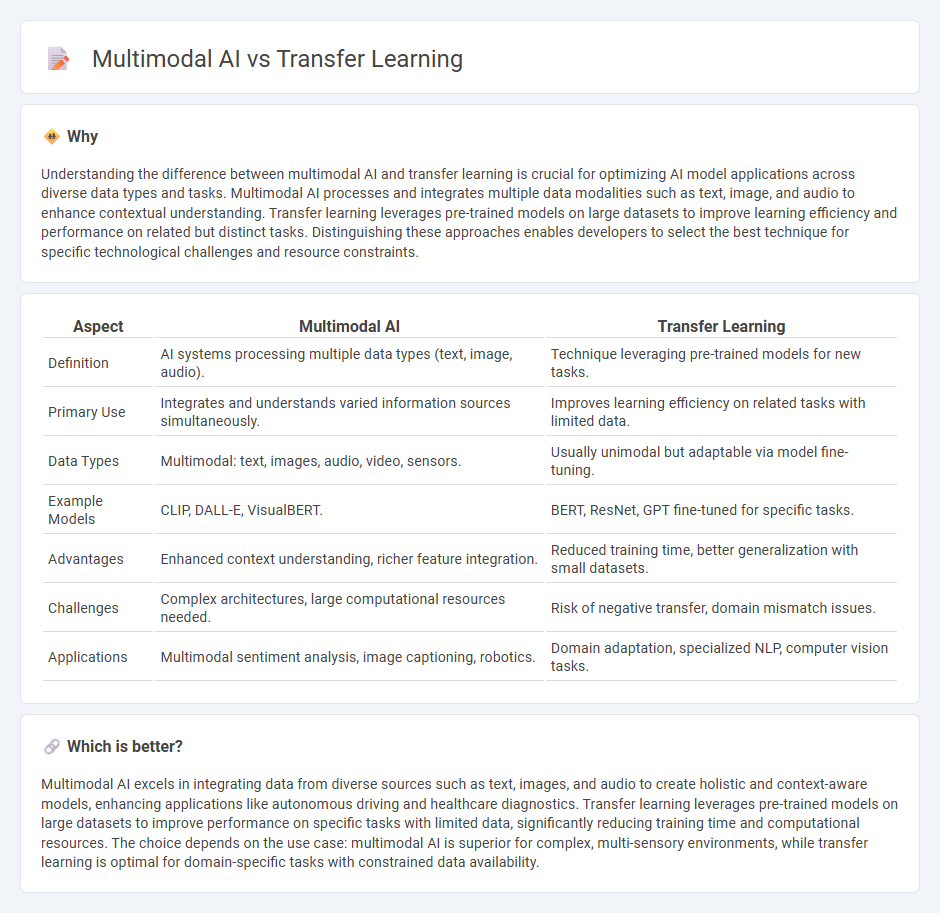

Understanding the difference between multimodal AI and transfer learning helps in choosing the right approach for a given mix of data types and tasks. Multimodal AI processes and integrates multiple data modalities, such as text, images, and audio, to enhance contextual understanding. Transfer learning reuses models pre-trained on large datasets to improve learning efficiency and performance on related but distinct tasks. Distinguishing the two lets developers select the technique that fits their technical challenge and resource constraints.

Comparison Table

| Aspect | Multimodal AI | Transfer Learning |
|---|---|---|
| Definition | AI systems processing multiple data types (text, image, audio). | Technique leveraging pre-trained models for new tasks. |
| Primary Use | Integrates and understands varied information sources simultaneously. | Improves learning efficiency on related tasks with limited data. |
| Data Types | Multimodal: text, images, audio, video, sensors. | Usually unimodal but adaptable via model fine-tuning. |
| Example Models | CLIP, DALL-E, VisualBERT. | BERT, ResNet, GPT fine-tuned for specific tasks. |
| Advantages | Enhanced context understanding, richer feature integration. | Reduced training time, better generalization with small datasets. |
| Challenges | Complex architectures, large computational resources needed. | Risk of negative transfer, domain mismatch issues. |
| Applications | Multimodal sentiment analysis, image captioning, robotics. | Domain adaptation, specialized NLP, computer vision tasks. |

Which is better?

Multimodal AI excels at integrating data from diverse sources such as text, images, and audio to build holistic, context-aware models for applications like autonomous driving and healthcare diagnostics. Transfer learning reuses models pre-trained on large datasets to improve performance on specific tasks with limited data, which can significantly reduce training time and computational cost. Neither is better in general, and the two are not mutually exclusive: multimodal AI suits complex, multi-sensory environments, while transfer learning suits domain-specific tasks where labeled data is scarce.

Connection

Multimodal AI often builds on transfer learning: encoders pretrained on a single modality, such as text or images, are reused as components of a multimodal system, and representations learned in one modality can help interpret another, such as images or audio. Transfer learning thus enables efficient adaptation and integration of diverse data types, enhancing the ability of AI systems to process and generate rich, context-aware outputs. This synergy supports progress in natural language processing, computer vision, and speech recognition by sharing knowledge across modalities.
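One common way to combine reused per-modality encoders is late fusion: each modality is encoded separately and the resulting vectors are concatenated for a downstream model. The sketch below is only an illustration of that idea. The encoders `encode_text` and `encode_image`, the word list, and the scoring weights are all hypothetical toy stand-ins; in a real system each encoder would be a pretrained model.

```python
POSITIVE_WORDS = {"great", "sunny", "happy"}  # toy vocabulary (assumption)


def encode_text(text):
    """Hypothetical text encoder: [scaled length, share of positive words]."""
    words = text.lower().split()
    positive = sum(1 for w in words if w in POSITIVE_WORDS)
    return [len(words) / 10.0, positive / max(len(words), 1)]


def encode_image(pixels):
    """Hypothetical image encoder over grayscale pixels in [0, 1]:
    [mean brightness, contrast]."""
    return [sum(pixels) / len(pixels), max(pixels) - min(pixels)]


def fuse(text_vec, image_vec):
    """Late fusion by concatenation: one joint vector for a downstream model."""
    return list(text_vec) + list(image_vec)


def score(joint_vec, weights, bias=0.0):
    """Linear scorer over the fused representation (weights are made up)."""
    return sum(w * v for w, v in zip(weights, joint_vec)) + bias


joint = fuse(encode_text("a great sunny day"), encode_image([0.2, 0.8, 0.6, 0.4]))
print(len(joint), score(joint, weights=[0.1, 1.0, 0.5, 0.2]))
```

Concatenation is the simplest fusion choice; it keeps each encoder independent, so either one can be swapped for a stronger pretrained model without changing the other.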

Key Terms

Domain adaptation

Transfer learning leverages pretrained models to adapt knowledge from a source domain to improve performance in a related but different target domain, making domain adaptation crucial for handling distribution mismatches. Multimodal AI integrates information from diverse data types such as text, images, and audio, requiring sophisticated domain adaptation techniques to align heterogeneous feature spaces across modalities. Explore how advanced domain adaptation methods enhance transfer learning and multimodal AI for robust, cross-domain applications.
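A distribution mismatch can be made concrete with a very simple form of statistics matching: rescale a feature from the target domain so that its mean and standard deviation match those seen in the source domain. This is a minimal one-dimensional sketch, not one of the advanced methods mentioned above; the function name `match_moments` and the sample values are assumptions made for illustration.

```python
import statistics


def match_moments(target_values, source_values):
    """Rescale target features so their mean and standard deviation
    match those of the source features."""
    s_mean = statistics.mean(source_values)
    s_std = statistics.pstdev(source_values)
    t_mean = statistics.mean(target_values)
    t_std = statistics.pstdev(target_values)
    if t_std == 0:
        raise ValueError("target feature has zero variance")
    return [(v - t_mean) / t_std * s_std + s_mean for v in target_values]


source = [10.0, 12.0, 14.0]             # feature values seen in training (made up)
target = [100.0, 110.0, 120.0, 130.0]   # same feature in a shifted domain (made up)
aligned = match_moments(target, source)
print(aligned)
```

After alignment, a model trained on the source feature sees target inputs on a familiar scale, while the relative ordering of the target values is preserved.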

Cross-modal integration

Transfer learning enhances cross-modal integration by leveraging pre-trained models from one modality to improve performance in another, enabling efficient knowledge transfer across domains. Multimodal AI focuses on simultaneously processing and integrating information from multiple modalities such as text, images, and audio to create comprehensive and context-aware representations. Explore how combining transfer learning and multimodal AI drives innovations in cross-modal integration for advanced applications.
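Cross-modal integration is often implemented by mapping different modalities into a shared embedding space and comparing vectors there. The sketch below assumes such embeddings already exist and picks the caption whose vector is closest to an image vector by cosine similarity. The embedding values are hand-written for illustration and do not come from any real model; `best_caption` is a hypothetical helper name.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vec, caption_vecs):
    """Return the caption whose embedding is most similar to the image embedding."""
    return max(caption_vecs, key=lambda caption: cosine(image_vec, caption_vecs[caption]))


# Hand-written embeddings in an assumed shared space (not real model outputs).
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a car": [0.1, 0.9, 0.3],
}
print(best_caption(image_embedding, caption_embeddings))
```

The same comparison works in the other direction, ranking images for a given text query, because both modalities live in one vector space.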

Pre-trained models

In transfer learning, a model is first pre-trained on a vast dataset and then fine-tuned for a specific task, which improves performance with fewer labeled examples. Multimodal AI integrates diverse data types, such as text, images, and audio, often using pre-trained models specialized for each modality to create more comprehensive representations. Explore the latest advancements to understand how these approaches reshape AI applications and improve accuracy.
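To illustrate adapting with few labeled examples, the sketch below keeps a feature extractor frozen and trains only a small classification head on four labeled points. This is the feature-extraction flavor of transfer learning, shown under strong simplifications: `pretrained_features` is a hypothetical stand-in with hand-picked weights rather than a real pre-trained model, and the data and hyperparameters are made up.

```python
import math


def pretrained_features(x):
    """Hypothetical stand-in for a frozen, pre-trained feature extractor.

    In practice these weights would come from training on a large dataset;
    here they are fixed by hand purely for illustration.
    """
    return [math.tanh(x[0] + x[1]), math.tanh(x[0] - x[1])]


def train_head(examples, epochs=200, lr=0.5):
    """Train only a logistic-regression head; the extractor is never updated."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in examples:
            feats = pretrained_features(x)
            score = sum(w * f for w, f in zip(weights, feats)) + bias
            prob = 1.0 / (1.0 + math.exp(-score))
            error = prob - label  # gradient of the log loss w.r.t. the score
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias


def predict(head, x):
    """Classify x with the frozen extractor plus the trained head."""
    weights, bias = head
    feats = pretrained_features(x)
    score = sum(w * f for w, f in zip(weights, feats)) + bias
    return 1 if score > 0 else 0


# Tiny labeled target dataset (made up): label is 1 when x[0] + x[1] > 0.
target_data = [([1.0, 0.5], 1), ([0.2, 0.9], 1), ([-1.0, -0.3], 0), ([-0.4, -0.8], 0)]
head = train_head(target_data)
print(predict(head, [0.8, 0.7]), predict(head, [-0.9, -0.6]))
```

Only three numbers are learned here (two weights and a bias), which is why a handful of labeled examples is enough; full fine-tuning would also update the extractor's own weights.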

dowidth.com
