Generative Adversarial Networks (GANs) utilize two neural networks competing against each other to generate realistic data, excelling in image synthesis and data augmentation tasks. Self-Supervised Learning leverages unlabeled data by creating surrogate tasks, enabling models to learn robust representations without extensive human annotation. Explore the differences in architecture, applications, and advantages to understand their impact on modern AI development.

Why it is important

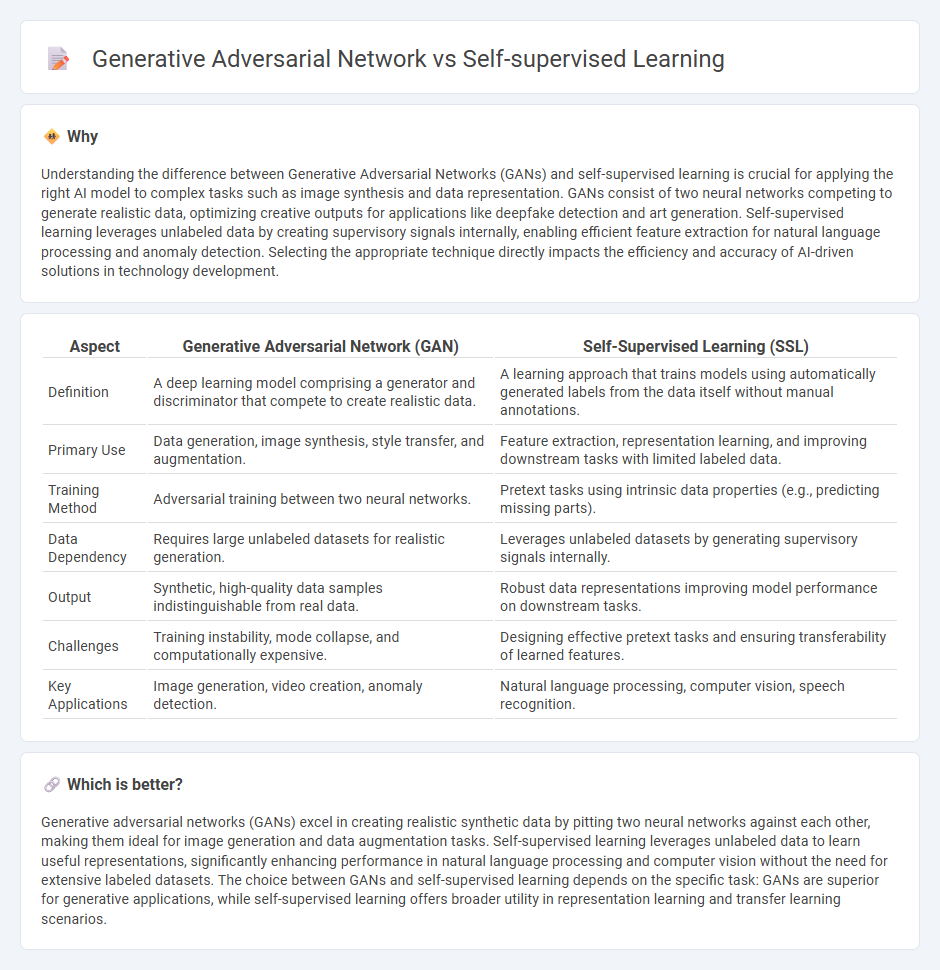

Understanding the difference between Generative Adversarial Networks (GANs) and self-supervised learning is crucial for applying the right AI model to complex tasks such as image synthesis and data representation. GANs consist of two neural networks competing to generate realistic data, optimizing creative outputs for applications like deepfake detection and art generation. Self-supervised learning leverages unlabeled data by creating supervisory signals internally, enabling efficient feature extraction for natural language processing and anomaly detection. Selecting the appropriate technique directly impacts the efficiency and accuracy of AI-driven solutions in technology development.

Comparison Table

| Aspect | Generative Adversarial Network (GAN) | Self-Supervised Learning (SSL) |

|---|---|---|

| Definition | A deep learning model comprising a generator and discriminator that compete to create realistic data. | A learning approach that trains models using automatically generated labels from the data itself without manual annotations. |

| Primary Use | Data generation, image synthesis, style transfer, and augmentation. | Feature extraction, representation learning, and improving downstream tasks with limited labeled data. |

| Training Method | Adversarial training between two neural networks. | Pretext tasks using intrinsic data properties (e.g., predicting missing parts). |

| Data Dependency | Requires large unlabeled datasets for realistic generation. | Leverages unlabeled datasets by generating supervisory signals internally. |

| Output | Synthetic, high-quality data samples indistinguishable from real data. | Robust data representations improving model performance on downstream tasks. |

| Challenges | Training instability, mode collapse, and computationally expensive. | Designing effective pretext tasks and ensuring transferability of learned features. |

| Key Applications | Image generation, video creation, anomaly detection. | Natural language processing, computer vision, speech recognition. |

Which is better?

Generative adversarial networks (GANs) excel in creating realistic synthetic data by pitting two neural networks against each other, making them ideal for image generation and data augmentation tasks. Self-supervised learning leverages unlabeled data to learn useful representations, significantly enhancing performance in natural language processing and computer vision without the need for extensive labeled datasets. The choice between GANs and self-supervised learning depends on the specific task: GANs are superior for generative applications, while self-supervised learning offers broader utility in representation learning and transfer learning scenarios.

Connection

Generative adversarial networks (GANs) and self-supervised learning share a common goal of improving machine learning models by leveraging unlabeled data. GANs utilize a generator and discriminator in a competitive framework to create realistic data samples, indirectly learning data features without explicit labels. Self-supervised learning designs pretext tasks to generate supervisory signals from the data itself, often benefiting from representations similar to those learned by GANs for enhanced feature extraction and downstream performance.

Key Terms

**Self-supervised learning:**

Self-supervised learning leverages unlabeled data by generating pseudo-labels from the data itself, enabling models to learn meaningful representations without human annotation. This approach has shown significant success in natural language processing and computer vision tasks, reducing dependency on large labeled datasets and improving transfer learning performance. Explore how self-supervised learning techniques can transform your AI projects with efficient, scalable model training.

Pretext Task

Self-supervised learning leverages pretext tasks such as predicting image rotations or colorization to generate supervisory signals directly from unlabeled data, enabling models to learn useful feature representations without explicit annotations. In contrast, generative adversarial networks (GANs) utilize a min-max game between a generator and discriminator to produce realistic synthetic data, which can indirectly aid representation learning but do not rely primarily on pretext tasks. Explore how pretext tasks drive representation learning efficiency in self-supervised methods compared to generative frameworks.

Representation Learning

Self-supervised learning excels in representation learning by leveraging unlabeled data through pretext tasks, enabling models to learn robust feature embeddings without explicit annotations. Generative Adversarial Networks (GANs) contribute by producing high-quality synthetic data that enrich training sets and enhance feature extraction capabilities, especially in image domains. Explore deeper insights into how these techniques revolutionize representation learning efficiency and accuracy.

Source and External Links

What Is Self-Supervised Learning? - IBM - Self-supervised learning is a technique where models generate implicit labels from unlabeled data to learn meaningful representations through pretext tasks, which can then be applied to downstream tasks without requiring manually labeled datasets, making it cost-effective especially in computer vision and natural language processing.

Breaking Down Self-Supervised Learning: Concepts, Comparisons ... - Self-supervised learning works by creating artificial tasks from the input data itself, which the model solves by learning to predict hidden or altered parts of the data, then transferring the learned features to downstream tasks like classification or detection.

Self-Supervised Learning and Its Applications - neptune.ai - Self-supervised learning transforms an unsupervised problem into a supervised one by auto-generating labels from the data itself to train models to predict missing parts of the input, distinct from unsupervised learning by providing supervisory signals to guide training.

dowidth.com

dowidth.com