Generative fill uses deep learning to create new image content that blends with the existing scene, enabling precise, context-aware editing of masked or missing regions. Texture synthesis replicates patterns and surface detail, producing larger textures from small samples while keeping them consistent and realistic. This article compares the two techniques and where each is used.

Why it is important

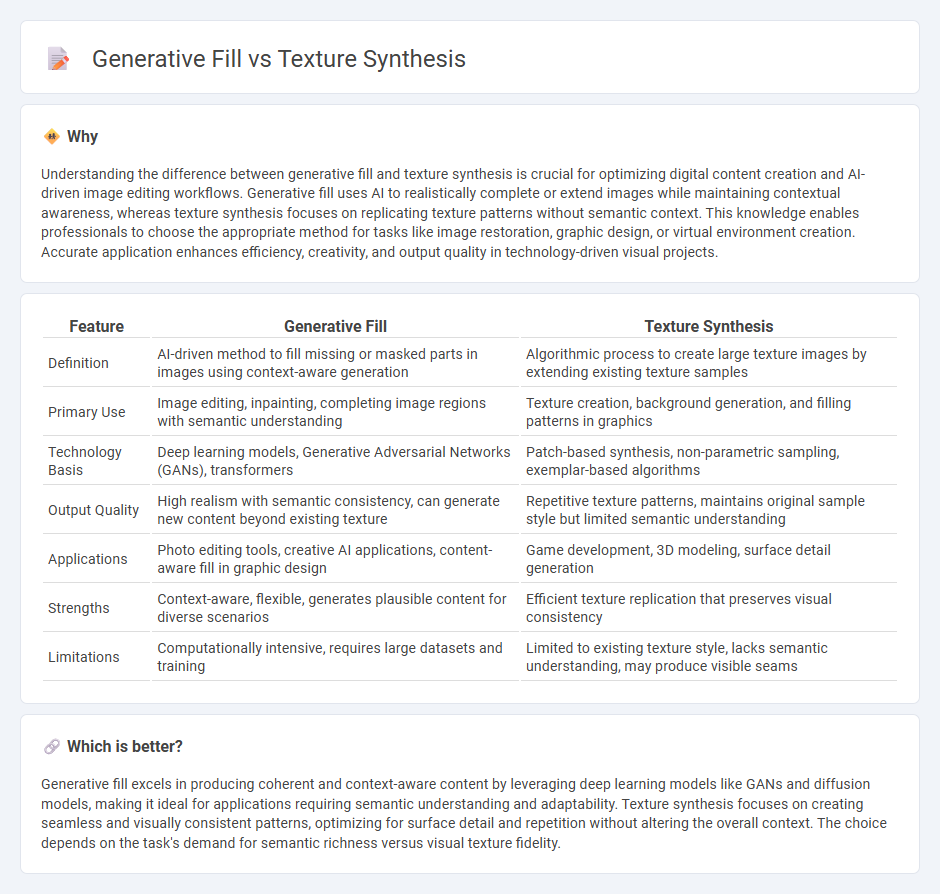

Understanding the difference between generative fill and texture synthesis helps optimize digital content creation and AI-driven image editing workflows. Generative fill uses AI to complete or extend images realistically while taking the scene's content into account, whereas texture synthesis replicates texture patterns without semantic context. Knowing the difference lets professionals choose the right method for tasks such as image restoration, graphic design, or building virtual environments, which improves efficiency and output quality.

Comparison Table

| Feature | Generative Fill | Texture Synthesis |
|---|---|---|
| Definition | AI-driven method that fills missing or masked image regions using context-aware generation | Algorithmic process that creates large texture images by extending small texture samples |
| Primary Use | Image editing and inpainting: completing image regions with semantic understanding | Texture creation, background generation, and pattern filling in graphics |
| Technology Basis | Deep learning models: Generative Adversarial Networks (GANs), diffusion models, transformers | Patch-based synthesis, non-parametric sampling, exemplar-based algorithms (neural variants also exist) |
| Output Quality | High realism with semantic consistency; can generate content not present in the original image | Reproduces the sample's style and surface detail; may show visible repetition and has no semantic understanding |
| Applications | Photo editing tools, creative AI applications, content-aware fill in graphic design | Game development, 3D modeling, surface detail generation |
| Strengths | Context-aware and flexible; generates plausible content for diverse scenarios | Efficient texture replication that preserves visual consistency |
| Limitations | Computationally intensive; requires models trained on large datasets | Limited to the style of the existing texture; lacks semantic understanding; may produce visible seams |

Which is better?

Neither technique is better in general. Generative fill excels at producing coherent, context-aware content by leveraging deep learning models such as GANs and diffusion models, which makes it the better fit when a task requires semantic understanding and adaptability. Texture synthesis produces seamless, visually consistent patterns, optimizing for surface detail and natural-looking repetition without changing what the image depicts, which makes it the better fit for backgrounds and material surfaces. The choice depends on whether the task demands semantic richness or texture fidelity.

Connection

Generative fill and texture synthesis share a common goal: predicting missing or extended image regions from the surrounding pixels so that the result blends in seamlessly. Both analyze local texture, color, and structure, but they do so differently. Classical texture synthesis samples and copies pixels or patches from an exemplar without any learned model, while generative fill relies on neural networks trained on large datasets; neural texture synthesis methods also exist and sit between the two. Combining the approaches improves content creation tools by enabling more realistic image completion and texture generation in fields such as graphic design, gaming, and virtual reality.

Key Terms

Pattern replication vs. semantic generation

Texture synthesis excels at pattern replication: it analyzes an existing texture and generates visually consistent continuations of it, reproducing surface detail with high fidelity. Generative fill relies on semantic understanding learned by AI models to create contextually relevant content, so it can generate new image regions that fit the overall scene rather than merely repeating patterns already present in the image.
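To make "pattern replication" concrete, here is a minimal exemplar-based hole-filling sketch in Python. It is an illustration under stated assumptions, not a method taken from this article's sources: the image is a small grayscale grid stored as nested lists, missing pixels are marked `None`, at least one pixel is known, and the function name `fill_hole` and neighborhood radius `k` are hypothetical. Each missing pixel is filled by copying the value of the known pixel whose surrounding neighborhood best matches, so the result can extend a pattern but can never contain a value that was not already in the image.

```python
def fill_hole(img, k=1):
    """Fill None pixels by copying from the best-matching known pixel.

    Hypothetical exemplar-based sketch: for each missing pixel (raster
    order), compare its (2k+1)x(2k+1) neighborhood with the neighborhood of
    every originally known pixel, using only positions known on both sides,
    and copy the center value of the lowest mean-squared-difference match.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    # Candidate sources: pixels that were known in the original image.
    sources = [(y, x) for y in range(h) for x in range(w) if img[y][x] is not None]

    def cost(y, x, sy, sx):
        total, used = 0, 0
        for dy in range(-k, k + 1):
            for dx in range(-k, k + 1):
                if dy == 0 and dx == 0:
                    continue  # the center is what we are predicting
                ay, ax, by, bx = y + dy, x + dx, sy + dy, sx + dx
                if 0 <= ay < h and 0 <= ax < w and 0 <= by < h and 0 <= bx < w:
                    a, b = out[ay][ax], img[by][bx]
                    if a is not None and b is not None:
                        total += (a - b) ** 2
                        used += 1
        # Average so candidates with fewer comparable neighbors are not favored.
        return total / used if used else float("inf")

    for y in range(h):
        for x in range(w):
            if out[y][x] is None:
                sy, sx = min(sources, key=lambda s: cost(y, x, *s))
                out[y][x] = img[sy][sx]  # copy, never invent, a value
    return out
```

For example, `fill_hole([[10, 20, None, 20]])` fills the gap with 10, continuing the alternating pattern, because the only evidence available is the pattern itself.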

Pixel-based algorithms vs. deep learning models

Pixel-based algorithms in texture synthesis rely on sampling and copying local pixel neighborhoods to create new textures that blend seamlessly with existing ones, emphasizing spatial coherence and fine detail replication. In contrast, generative fill techniques leverage deep learning models, such as GANs or diffusion models, to understand and generate contextual content beyond local pixel correlations, enabling more semantically meaningful and adaptive image completion.
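The following Python sketch illustrates pixel-based neighborhood sampling in the spirit of Efros and Leung's non-parametric method, simplified to a raster scan. Assumptions: grayscale images stored as nested lists, a causal neighborhood of radius `k` (pixels already synthesized above and to the left), squared-difference matching, and a tolerance `tol` for choosing randomly among near-best matches. The function name `synthesize` and its parameters are illustrative, not taken from any library.

```python
import random


def synthesize(sample, out_h, out_w, k=1, tol=0.1, seed=0):
    """Grow an out_h x out_w texture from a small grayscale sample.

    Simplified raster-scan variant of non-parametric neighborhood sampling:
    each output pixel is copied from a sample pixel whose causal
    neighborhood best matches the output synthesized so far.
    """
    rng = random.Random(seed)
    sh, sw = len(sample), len(sample[0])
    if sh < k + 1 or sw < 2 * k + 1:
        raise ValueError("sample is too small for neighborhood radius k")
    out = [[None] * out_w for _ in range(out_h)]
    # Causal offsets: rows above, plus pixels to the left in the current row.
    offsets = [(dy, dx) for dy in range(-k, 1) for dx in range(-k, k + 1)
               if dy < 0 or dx < 0]
    for y in range(out_h):
        for x in range(out_w):
            scored = []
            # Only sample positions whose whole causal neighborhood fits in
            # the sample, so every candidate is compared on the same offsets.
            for sy in range(k, sh):
                for sx in range(k, sw - k):
                    cost = 0
                    for dy, dx in offsets:
                        oy, ox = y + dy, x + dx
                        if oy >= 0 and 0 <= ox < out_w:  # already synthesized
                            d = out[oy][ox] - sample[sy + dy][sx + dx]
                            cost += d * d
                    scored.append((cost, sample[sy][sx]))
            best = min(c for c, _ in scored)
            # Choose randomly among near-best matches to avoid verbatim copies.
            pool = [v for c, v in scored if c <= best * (1 + tol)]
            out[y][x] = rng.choice(pool)
    return out
```

For a sample of alternating 0/1 columns, `synthesize(sample, 5, 8)` continues the stripes across the whole output. The loop over output pixels, sample pixels, and neighborhood offsets is deliberately naive; practical implementations accelerate the nearest-neighborhood search.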

Continuity mapping vs. context-aware filling

Texture synthesis relies on continuity mapping: it extends patterns by matching neighborhood structures so that texture flows consistently across boundaries. Generative fill employs context-aware filling, using deep learning models to understand and reproduce semantic content, thereby producing coherent and contextually relevant image regions.
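One concrete form of this boundary matching, borrowed here from patch-based "image quilting" (Efros and Freeman), chooses each new patch so that the strip where it overlaps its neighbor matches as closely as possible. The Python sketch below is a simplified, one-row illustration under stated assumptions: grayscale images as nested lists, hypothetical helper names (`overlap_error`, `extract_patches`, `quilt_row`), and no seam cut, so each new patch simply overwrites the shared columns.

```python
def overlap_error(left, right, overlap):
    """Sum of squared differences between the last `overlap` columns of
    `left` and the first `overlap` columns of `right` (lists of rows)."""
    return sum((a[len(a) - overlap + i] - b[i]) ** 2
               for a, b in zip(left, right)
               for i in range(overlap))


def extract_patches(sample, size):
    """All size x size patches of a grayscale sample, in raster order."""
    h, w = len(sample), len(sample[0])
    return [[row[x:x + size] for row in sample[y:y + size]]
            for y in range(h - size + 1)
            for x in range(w - size + 1)]


def quilt_row(sample, size, overlap, n_patches):
    """Lay n_patches patches left to right, each chosen to minimize the
    overlap error with its left neighbor."""
    patches = extract_patches(sample, size)
    chosen = [patches[0]]
    for _ in range(n_patches - 1):
        prev = chosen[-1]
        # First patch with the smallest mismatch across the shared boundary.
        chosen.append(min(patches, key=lambda p: overlap_error(prev, p, overlap)))
    step = size - overlap
    out = [[None] * (size + step * (n_patches - 1)) for _ in range(size)]
    for i, patch in enumerate(chosen):
        for y in range(size):
            for x in range(size):
                out[y][i * step + x] = patch[y][x]  # overwrite the overlap
    return out
```

Full image quilting also matches the overlap above each patch, picks randomly among near-best candidates instead of always taking the first minimum, and cuts a minimum-error seam through the overlap; those steps are omitted here to keep the sketch short.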
