Algorithmic bias auditing identifies and mitigates unfair treatment in machine learning models so that outcomes are equitable across population groups. Adversarial robustness testing evaluates a model's resilience to input perturbations crafted to deceive or manipulate its predictions. The two methodologies are complementary: one addresses fairness, the other security, and together they improve the reliability of AI systems.

Why it is important

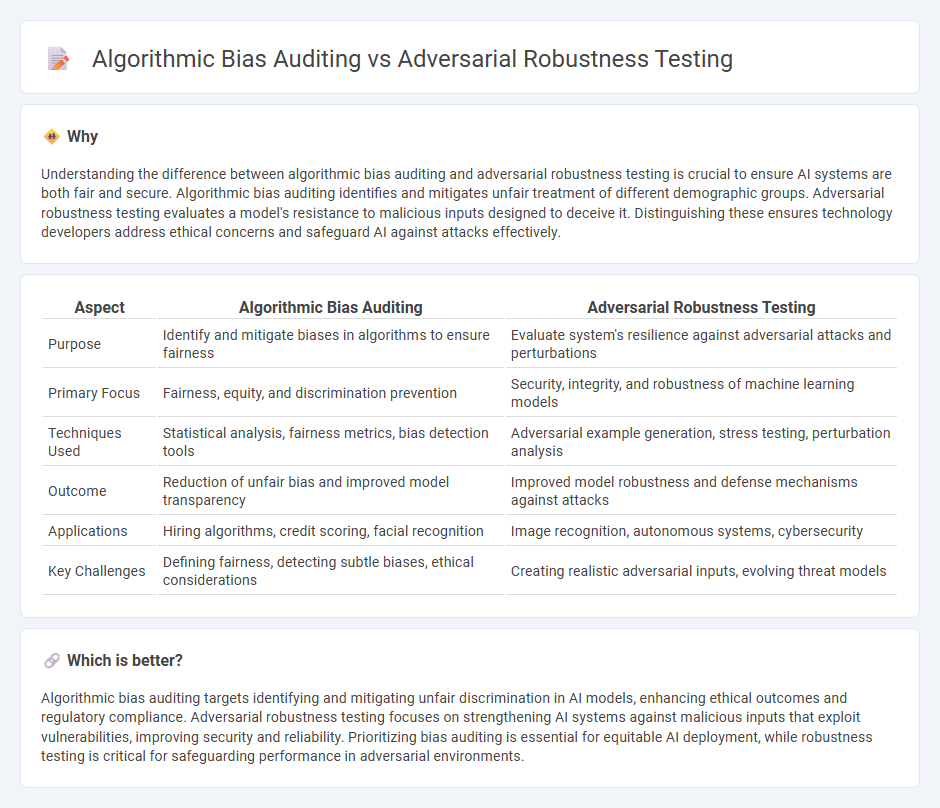

Understanding the difference between algorithmic bias auditing and adversarial robustness testing matters because fairness and security are separate failure modes. A model can pass a bias audit and still be fooled by crafted inputs, and a model that resists attacks can still treat demographic groups unequally. Bias auditing targets the first problem; adversarial robustness testing targets the second. Keeping the two distinct helps developers address ethical concerns and defend against attacks without assuming that one check covers the other.

Comparison Table

| Aspect | Algorithmic Bias Auditing | Adversarial Robustness Testing |
|---|---|---|
| Purpose | Identify and mitigate biases in algorithms to ensure fairness | Evaluate a system's resilience against adversarial attacks and perturbations |
| Primary Focus | Fairness, equity, and discrimination prevention | Security, integrity, and robustness of machine learning models |
| Techniques Used | Statistical analysis, fairness metrics, bias detection tools | Adversarial example generation, stress testing, perturbation analysis |
| Outcome | Reduction of unfair bias and improved model transparency | Improved model robustness and defense mechanisms against attacks |
| Applications | Hiring algorithms, credit scoring, facial recognition | Image recognition, autonomous systems, cybersecurity |
| Key Challenges | Defining fairness, detecting subtle biases, ethical considerations | Creating realistic adversarial inputs, evolving threat models |
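
The "fairness metrics" row can be made concrete with a small sketch. The example below computes per-group selection rates and two common summary statistics, the demographic parity difference and the disparate impact ratio. The function names and the toy data are assumptions made for illustration, not part of any particular auditing tool.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else float("nan")

# Hypothetical toy data: binary decisions for two groups "a" and "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
print(rates)
print(demographic_parity_difference(rates))
print(disparate_impact_ratio(rates))
```

Which metric is appropriate depends on the fairness definition chosen for the application; these two only compare how often each group receives a positive decision.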

Which is better?

Neither is better in general, because they address different risks. Algorithmic bias auditing identifies and mitigates unfair discrimination in AI models, which supports ethical outcomes and regulatory compliance. Adversarial robustness testing hardens AI systems against malicious inputs that exploit vulnerabilities, which improves security and reliability. Bias auditing takes priority where decisions affect people unequally, and robustness testing takes priority where a model operates in an adversarial environment; many deployed systems need both.

Connection

Algorithmic bias auditing identifies discriminatory patterns within machine learning models, including weaknesses that can be exploited deliberately or reinforced unintentionally. Adversarial robustness testing evaluates a model's resistance to malicious inputs designed to deceive or manipulate it. The two overlap when robustness differs between groups: a model may be harder to fool on inputs from one population than from another, and a bias audit can point to where that gap is likely. Integrating both processes improves AI fairness and security by checking that models perform reliably across diverse and potentially adversarial scenarios.
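
One way to combine the two processes is to report accuracy under attack separately for each group. The sketch below assumes the clean and adversarial predictions have already been produced by some model and attack; the labels, predictions, and group names are hypothetical toy values.

```python
from collections import defaultdict

def accuracy_by_group(labels, predictions, groups):
    """Accuracy computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for label, pred, group in zip(labels, predictions, groups):
        total[group] += 1
        correct[group] += int(label == pred)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical toy data: predictions before and after an adversarial attack.
labels      = [1, 0, 1, 1, 0, 1, 0, 1]
groups      = ["a", "a", "a", "a", "b", "b", "b", "b"]
clean_preds = [1, 0, 1, 1, 0, 1, 0, 0]
adv_preds   = [1, 0, 1, 0, 1, 0, 0, 0]

clean_acc = accuracy_by_group(labels, clean_preds, groups)
adv_acc = accuracy_by_group(labels, adv_preds, groups)

# How much accuracy each group loses under attack.
robustness_drop = {g: clean_acc[g] - adv_acc[g] for g in clean_acc}
print(clean_acc, adv_acc, robustness_drop)
```

In this toy data, group "b" loses more accuracy under attack than group "a", which is the kind of disparity that neither a bias audit on clean data nor an aggregate robustness score would reveal on its own.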

Key Terms

Adversarial Robustness Testing

Adversarial robustness testing evaluates a machine learning model's resilience against attacks that manipulate predictions through subtle input perturbations, with the goal of keeping the model reliable under adversarial conditions. Typical techniques include gradient-based attacks, adversarial example generation, and robustness benchmarking across datasets, all used to identify vulnerabilities and guide defense mechanisms.
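
A gradient-based attack can be illustrated with the fast gradient sign method (FGSM) applied to a logistic regression classifier. The sketch below is a minimal pure-Python version; the weights, bias, input, and epsilon are hypothetical values chosen so that the effect is visible, and real testing would run against a trained model with an established library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm(weights, bias, x, y, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(d loss / d x)."""
    p = predict_proba(weights, bias, x)
    # For logistic regression with cross-entropy loss,
    # the gradient of the loss with respect to x_i is (p - y) * w_i.
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model parameters and input (true label y = 1).
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.1], 1

x_adv = fgsm(weights, bias, x, y, epsilon=0.3)
print(predict_proba(weights, bias, x))      # above 0.5: classified as 1
print(predict_proba(weights, bias, x_adv))  # below 0.5: classified as 0
```

Each feature moves by at most epsilon, yet the predicted class flips. A robustness test repeats this over a dataset and a range of epsilon values to measure how quickly accuracy degrades.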

Perturbation

In adversarial robustness testing, a perturbation is a small, intentional change to the input data, applied to check whether the model keeps its accuracy under malicious manipulation. Algorithmic bias auditing uses perturbations differently: it changes a demographic or other sensitive feature while holding everything else fixed, then checks whether the model's output changes, which would indicate unfair or discriminatory treatment.
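
The bias-auditing use of perturbation can be sketched as a counterfactual flip test: swap only the sensitive attribute and count how often the decision changes. The `toy_model` below is a hypothetical, deliberately biased scoring rule, and the records and attribute names are made up for illustration.

```python
def counterfactual_flip_rate(model, records, attribute, values):
    """Share of records whose decision changes when only `attribute` is swapped."""
    flipped = 0
    for record in records:
        # Evaluate the same record once per possible attribute value.
        decisions = {model({**record, attribute: v}) for v in values}
        flipped += int(len(decisions) > 1)
    return flipped / len(records)

def toy_model(record):
    """Hypothetical biased rule: group "a" receives a 5-point bonus."""
    score = record["income"] / 1000 + (5 if record["group"] == "a" else 0)
    return int(score >= 50)

# Hypothetical applicants.
records = [
    {"income": 60000, "group": "a"},
    {"income": 47000, "group": "b"},
    {"income": 30000, "group": "a"},
    {"income": 49000, "group": "a"},
]

rate = counterfactual_flip_rate(toy_model, records, "group", ["a", "b"])
print(rate)
```

A nonzero flip rate shows that the sensitive attribute directly influences decisions. A rate of zero does not prove fairness, since other features can act as proxies for the attribute.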

Attack Vectors

Adversarial robustness testing probes attack vectors by simulating deliberate adversarial inputs and measuring the model's resilience to manipulation, using input perturbations, evasion tactics, and gradient-based attacks such as the fast gradient sign method (FGSM). Algorithmic bias auditing looks at a different kind of entry point: the ways unfairness gets into a model, such as skewed training datasets or biased feature selection, with the aim of equitable outcomes across demographics. These are sources of bias rather than attacks, but an attacker can exploit them, so both views are needed for full coverage of security and fairness.
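
A skewed training dataset can often be detected before any model is trained by comparing group shares in the data against a reference population. The sketch below assumes the reference shares are known; both the training groups and the reference values are hypothetical.

```python
from collections import Counter

def representation_gap(train_groups, reference_shares):
    """Share of each group in the training data minus its reference share."""
    counts = Counter(train_groups)
    n = len(train_groups)
    return {g: counts.get(g, 0) / n - share for g, share in reference_shares.items()}

# Hypothetical data: group "a" is heavily over-represented.
train_groups = ["a"] * 8 + ["b"] * 2
reference_shares = {"a": 0.5, "b": 0.5}

gaps = representation_gap(train_groups, reference_shares)
print(gaps)  # positive: over-represented, negative: under-represented
```

Large gaps do not guarantee a biased model, but they indicate which groups deserve closer attention in later fairness metrics.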


dowidth.com