Neural Radiance Fields (NeRF) leverage deep learning to reconstruct high-fidelity 3D scenes from multiple 2D images by modeling volumetric radiance and view-dependent effects, enabling photorealistic rendering of complex environments. In contrast, Simultaneous Localization and Mapping (SLAM) uses data from sensors such as LiDAR or cameras to build maps in real time while tracking an agent's position within them, which makes it essential for autonomous navigation and robotics. Explore the technical distinctions and applications of NeRF and SLAM to understand their impact on 3D scene reconstruction and spatial awareness.

Why it is important

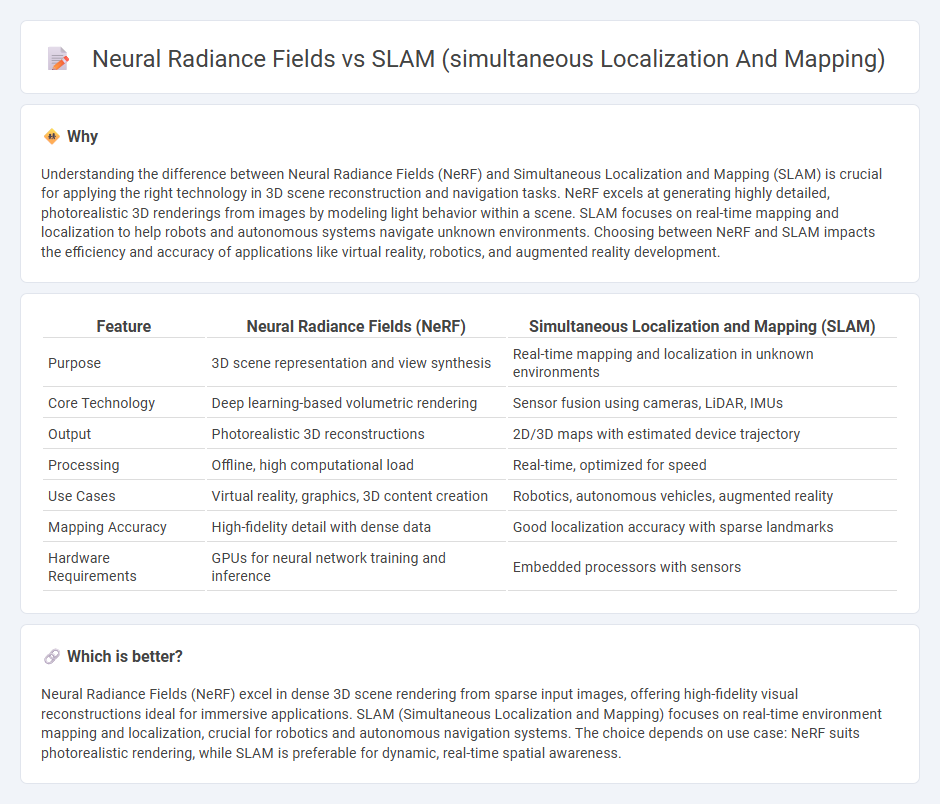

Understanding the difference between Neural Radiance Fields (NeRF) and Simultaneous Localization and Mapping (SLAM) is crucial for applying the right technology to 3D scene reconstruction and navigation tasks. NeRF excels at generating highly detailed, photorealistic 3D renderings from images by learning how light is emitted and absorbed throughout a scene. SLAM focuses on real-time mapping and localization, helping robots and autonomous systems navigate unknown environments. Choosing between NeRF and SLAM affects the efficiency and accuracy of applications such as virtual reality, robotics, and augmented reality.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Simultaneous Localization and Mapping (SLAM) |
|---|---|---|
| Purpose | 3D scene representation and novel view synthesis | Real-time mapping and localization in unknown environments |
| Core Technology | Deep learning-based volumetric rendering | Sensor fusion and state estimation using cameras, LiDAR, and IMUs |
| Output | Photorealistic 3D reconstructions rendered as novel views | 2D/3D maps with the estimated device trajectory |
| Processing | Typically offline, with a high computational load | Real-time, optimized for speed |
| Use Cases | Virtual reality, graphics, 3D content creation | Robotics, autonomous vehicles, augmented reality |
| Mapping Accuracy | High-fidelity detail given dense image coverage | Good localization accuracy; maps range from sparse landmarks to dense reconstructions |
| Hardware Requirements | GPUs for neural network training and inference | Embedded processors paired with sensors |

Which is better?

Neural Radiance Fields (NeRF) excel at dense 3D scene rendering from a collection of posed input images, producing high-fidelity visual reconstructions well suited to immersive applications. SLAM (Simultaneous Localization and Mapping) focuses on real-time environment mapping and localization, which is crucial for robotics and autonomous navigation systems. The choice depends on the use case: NeRF suits photorealistic rendering, while SLAM is preferable when an agent needs real-time spatial awareness in a changing environment.

Connection

Neural Radiance Fields (NeRF) can complement Simultaneous Localization and Mapping (SLAM) by providing dense 3D scene representations learned from camera images, improving environmental understanding and reconstruction accuracy. In the other direction, SLAM can supply the camera poses that NeRF training requires. SLAM systems can also draw on NeRF's ability to model complex lighting and geometry to support more robust localization in unstructured environments. This integration advances augmented reality and robotics by delivering higher-fidelity spatial maps with detailed visual and geometric cues.
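One concrete point of contact between the two pipelines is the camera pose: a SLAM system estimates a trajectory of poses, and NeRF needs a pose for every training image so it can cast a ray through each pixel. The sketch below turns one 4x4 camera-to-world pose into per-pixel rays. It assumes a pinhole camera with the principal point at the image centre and the "camera looks along -z" convention used in the original NeRF code; the function name `camera_rays` is hypothetical.

```python
import numpy as np


def camera_rays(c2w: np.ndarray, width: int, height: int, focal: float):
    """Return (origins, directions), each of shape (height, width, 3), in world coordinates.

    Assumptions: pinhole camera, principal point at the image centre, focal
    length in pixels, camera looking along -z. `c2w` is a 4x4 camera-to-world
    pose, for example one pose from a trajectory estimated by SLAM.
    """
    # Pixel coordinates: i runs along columns (x), j along rows (y).
    i, j = np.meshgrid(np.arange(width, dtype=float),
                       np.arange(height, dtype=float), indexing="xy")
    # Ray directions in the camera frame (+x right, +y up, -z forward).
    dirs = np.stack([(i - 0.5 * width) / focal,
                     -(j - 0.5 * height) / focal,
                     -np.ones_like(i)], axis=-1)
    # Rotate into the world frame and normalise to unit length.
    rays_d = dirs @ c2w[:3, :3].T
    rays_d /= np.linalg.norm(rays_d, axis=-1, keepdims=True)
    # All rays start at the camera centre (translation part of the pose).
    rays_o = np.broadcast_to(c2w[:3, 3], rays_d.shape)
    return rays_o, rays_d


# Example: a camera 2 units along +z, axis-aligned with the world frame.
pose = np.eye(4)
pose[:3, 3] = [0.0, 0.0, 2.0]
origins, directions = camera_rays(pose, width=4, height=2, focal=2.0)
```

Because the pose only enters through its rotation and translation, any pose source (SLAM, structure-from-motion, or a motion-capture rig) can feed the same ray generator.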

Key Terms

Sensor Fusion

SLAM systems often integrate data from multiple sensors, such as LiDAR, cameras, and IMUs, to build accurate maps in real time and localize the device within them; robust sensor fusion algorithms are needed to handle sensor noise and dynamic changes. Neural Radiance Fields (NeRF) rely primarily on visual data to generate photorealistic 3D scene representations and lack the built-in multi-sensor fusion that robust localization in dynamic environments requires. Explore further to understand how sensor fusion can strengthen the synergy between SLAM and NeRF applications.
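As a minimal illustration of sensor fusion, the sketch below uses a scalar Kalman filter, one of the estimators commonly used in SLAM. It assumes a hypothetical setup: a motion sensor (for example wheel odometry or an integrated IMU) reports a displacement `u` with variance `q`, and an absolute position sensor (for example a LiDAR landmark fix) reports a position `z` with variance `r`. Real SLAM systems estimate full 3D poses and map state, so this only shows the fusion principle.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter tracking one position coordinate (illustrative only)."""
    x: float  # current position estimate
    p: float  # variance of that estimate

    def predict(self, u: float, q: float) -> None:
        # Motion update: shift the estimate by the measured displacement
        # and grow the uncertainty by the motion sensor's noise.
        self.x += u
        self.p += q

    def update(self, z: float, r: float) -> None:
        # Measurement update: blend prediction and measurement, trusting
        # whichever has the smaller variance more.
        k = self.p / (self.p + r)  # Kalman gain, between 0 and 1
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


kf = Kalman1D(x=0.0, p=1.0)
kf.predict(u=1.0, q=1.0)  # x = 1.0, p = 2.0
kf.update(z=1.5, r=2.0)   # gain 0.5, so x = 1.25, p = 1.0
```

With equal variances for the prediction and the measurement, the gain is 0.5 and the fused estimate sits halfway between them, with lower variance than either source alone.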

Scene Representation

SLAM (Simultaneous Localization and Mapping) excels at creating geometric maps in real time by incrementally building a sparse or dense representation of the environment, which is essential for autonomous navigation and robotics. Neural Radiance Fields (NeRF) offer a different kind of scene representation: a continuous function, learned by a neural network, that maps a 3D position and viewing direction to volume density and emitted color. This enables photorealistic rendering from novel viewpoints, but NeRF typically lacks real-time localization capabilities. Explore the trade-offs and recent advances in scene representation across SLAM and NeRF technologies.
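To make the idea of a continuous radiance field concrete, here is a toy, untrained sketch in NumPy. It follows the structure of the original NeRF design (sinusoidal positional encoding, density predicted from position only, color also conditioned on viewing direction), but the class name, layer sizes, and random weights are hypothetical choices for illustration; the real model is a much deeper MLP trained on images.

```python
import numpy as np


def positional_encoding(x: np.ndarray, num_freqs: int) -> np.ndarray:
    """Concatenate x with sin/cos of x at frequencies 2^k * pi, k = 0..num_freqs-1."""
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi                    # (F,)
    scaled = x[..., None] * freqs                                     # (..., D, F)
    enc = np.concatenate([np.sin(scaled), np.cos(scaled)], axis=-1)  # (..., D, 2F)
    return np.concatenate([x, enc.reshape(*x.shape[:-1], -1)], axis=-1)


class TinyRadianceField:
    """Untrained toy field mapping (position, view direction) -> (density, RGB)."""

    def __init__(self, hidden: int = 64, pos_freqs: int = 10,
                 dir_freqs: int = 4, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.pos_freqs, self.dir_freqs = pos_freqs, dir_freqs
        pos_dim = 3 + 6 * pos_freqs  # encoded size of a 3D position
        dir_dim = 3 + 6 * dir_freqs  # encoded size of a 3D direction
        self.w_hidden = rng.normal(0.0, 0.1, (pos_dim, hidden))
        self.w_sigma = rng.normal(0.0, 0.1, (hidden, 1))
        self.w_rgb = rng.normal(0.0, 0.1, (hidden + dir_dim, 3))

    def __call__(self, xyz: np.ndarray, view_dir: np.ndarray):
        # Hidden features depend on position only (ReLU activation).
        h = np.maximum(0.0, positional_encoding(xyz, self.pos_freqs) @ self.w_hidden)
        # Density is non-negative and independent of viewing direction.
        sigma = np.maximum(0.0, h @ self.w_sigma)[..., 0]
        # Color also sees the encoded view direction (view-dependent effects).
        feat = np.concatenate([h, positional_encoding(view_dir, self.dir_freqs)], axis=-1)
        rgb = 1.0 / (1.0 + np.exp(-(feat @ self.w_rgb)))  # sigmoid keeps RGB in (0, 1)
        return sigma, rgb


field = TinyRadianceField()
pts = np.array([[0.1, -0.2, 0.3], [0.5, 0.5, 0.5]])
dirs = np.array([[0.0, 0.0, -1.0], [0.0, 0.0, -1.0]])
sigma, rgb = field(pts, dirs)  # sigma has shape (2,), rgb has shape (2, 3)
```

Unlike a voxel grid or a landmark map, the field can be queried at any real-valued 3D point; the scene lives in the network weights rather than in a discrete data structure.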

Neural Rendering

Neural Radiance Fields (NeRF) create highly detailed 3D scenes by optimizing a volumetric scene representation directly from posed images: each pixel's color is rendered by accumulating density and color samples along a camera ray, and the network is trained so that rendered pixels match the input photos. This enables photorealistic neural rendering with complex lighting and view-dependent effects. SLAM (Simultaneous Localization and Mapping) focuses on real-time environment mapping and robot localization, typically using geometric features for sparse or semi-dense 3D reconstruction without photorealism. Read more about these technologies to see how neural rendering supports immersive virtual experiences beyond SLAM's spatial mapping capabilities.
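The rendering step NeRF optimizes through can be written as a short function. The sketch below implements the standard discrete volume-rendering sum used by NeRF, C = sum_i T_i (1 - exp(-sigma_i delta_i)) c_i with transmittance T_i = exp(-sum_{j<i} sigma_j delta_j), for a single ray. Treating the last interval as very long (1e10) follows a common convention; the function name `render_ray` is hypothetical.

```python
import numpy as np


def render_ray(sigmas: np.ndarray, colors: np.ndarray, t_vals: np.ndarray) -> np.ndarray:
    """Composite N samples along one ray into a pixel color.

    sigmas: (N,) volume densities; colors: (N, 3) RGB values;
    t_vals: (N,) sample depths along the ray, sorted ascending.
    """
    # Distance between adjacent samples; the last interval is treated as very long.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity of each segment.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: fraction of light reaching sample i without being absorbed earlier.
    trans = np.exp(-np.concatenate([[0.0], np.cumsum(sigmas * deltas)[:-1]]))
    weights = trans * alphas
    return (weights[:, None] * colors).sum(axis=0)


t = np.linspace(2.0, 6.0, 4)
palette = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]])
empty = render_ray(np.zeros(4), palette, t)                        # empty space: all zeros
opaque = render_ray(np.array([50.0, 0.0, 0.0, 0.0]), palette, t)   # dense first sample: close to red
```

Every operation here is differentiable, which is what lets NeRF adjust densities and colors by gradient descent on the difference between rendered and observed pixels.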

Source and External Links

Understanding SLAM in Robotics and Autonomous Vehicles - SLAM allows robots and autonomous vehicles to build a map and localize themselves simultaneously by identifying landmarks and calculating their position relative to these markers, continuously updating the map as they explore an unknown environment.

Simultaneous localization and mapping - Wikipedia - SLAM is the computational problem of constructing or updating a map of an unknown environment while simultaneously tracking an agent's location, solved by algorithms like particle filters and Kalman filters, and used in robots, autonomous vehicles, and more.

Introduction to SLAM (Simultaneous Localization and Mapping) - SLAM helps robots estimate their pose (position and orientation) on a map while creating the map of the environment, using sensors like lidar or cameras to record surroundings and extract features for accurate localization and mapping.

dowidth.com
