Retrieval-augmented generation (RAG) connects a language model to external knowledge sources: relevant documents are retrieved at query time and supplied to the model, which improves the accuracy and contextual relevance of its responses. Prompt engineering is the practice of crafting precise inputs to guide model outputs, improving results without modifying the underlying model. The sections below compare the two techniques and describe how they work together.

Why it is important

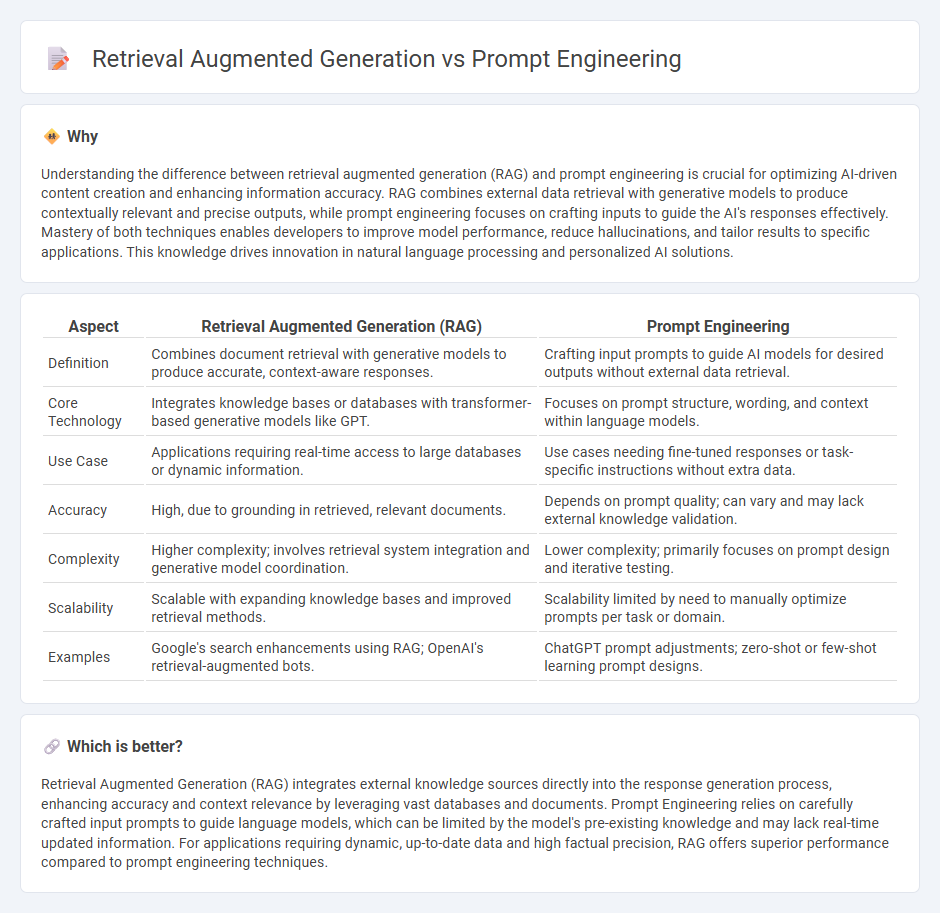

Understanding the difference between retrieval-augmented generation (RAG) and prompt engineering helps developers choose the right tool for a given problem. RAG pairs external data retrieval with a generative model so that outputs are grounded in specific documents, while prompt engineering shapes the input so the model uses what it already knows more effectively. Developers who are comfortable with both techniques can improve model performance, reduce hallucinations, and tailor results to specific applications.

Comparison Table

| Aspect | Retrieval Augmented Generation (RAG) | Prompt Engineering |
|---|---|---|
| Definition | Combines document retrieval with generative models to produce accurate, context-aware responses. | Crafting input prompts to guide AI models toward desired outputs without external data retrieval. |
| Core Technology | Integrates knowledge bases or databases with transformer-based generative models like GPT. | Focuses on prompt structure, wording, and context within language models. |
| Use Case | Applications requiring real-time access to large databases or dynamic information. | Use cases needing tailored responses or task-specific instructions without extra data. |
| Accuracy | Typically higher on factual questions, because answers are grounded in retrieved documents; depends on retrieval quality. | Depends on prompt quality; can vary and may lack external knowledge validation. |
| Complexity | Higher complexity; involves retrieval system integration and generative model coordination. | Lower complexity; primarily focuses on prompt design and iterative testing. |
| Scalability | Scalable with expanding knowledge bases and improved retrieval methods. | Scalability limited by the need to manually optimize prompts per task or domain. |
| Examples | Google's search enhancements using RAG; OpenAI's retrieval-augmented bots. | ChatGPT prompt adjustments; zero-shot or few-shot learning prompt designs. |

Which is better?

Neither technique is better in every case; the right choice depends on where the needed knowledge lives. RAG feeds external knowledge sources directly into response generation, which improves accuracy and contextual relevance when answers depend on large or frequently changing document collections. Prompt engineering relies on carefully crafted inputs alone, so it is limited to what the model learned during training and cannot supply information that appeared after that point. For applications that require dynamic, up-to-date data and high factual precision, RAG is generally the stronger option. When the model already has the necessary knowledge and the task is mainly about format, tone, or instructions, prompt engineering is usually sufficient and simpler to maintain.

Connection

The two techniques are complementary rather than competing. RAG retrieves relevant documents from external knowledge sources and inserts them into the generation process. Prompt engineering then determines how that retrieved material is presented to the model: where it appears in the prompt, how it is labeled, and what the model is told to do with it. Used together, they improve performance in tasks such as question answering and content creation.
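As an illustration of how the two fit together, the sketch below assembles a prompt from passages that a retriever has already returned. The function name `build_grounded_prompt`, the instruction wording, and the `[n]` citation format are assumptions made for this example, not part of any particular library.

```python
def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and a question into one prompt string."""
    # Number each passage so the model can cite it as [1], [2], ...
    numbered = "\n".join(
        f"[{i}] {passage.strip()}" for i, passage in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


if __name__ == "__main__":
    print(build_grounded_prompt(
        "What does RAG add to a language model?",
        ["RAG retrieves documents at query time.", "Retrieved text is added to the prompt."],
    ))
```

The retrieval step decides which passages arrive here; the prompt wording decides how the model is asked to use them, which is the prompt engineering half of the pipeline.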

Key Terms

Prompt Design

Prompt engineering centers on crafting precise, context-rich prompts so that a language model produces relevant and coherent outputs. In a RAG system, prompt design also covers how retrieved, up-to-date document text is placed into the prompt so the model can draw on it during generation.
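A minimal sketch of one common prompt design, the zero-shot versus few-shot template, is shown below. The helper `few_shot_prompt` and the `Input:`/`Output:` labels are illustrative choices for this example; real prompts often use task-specific labels.

```python
def few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], query: str
) -> str:
    """Build a zero-shot prompt (no examples) or a few-shot prompt (with examples)."""
    parts = [instruction.strip()]
    # Each worked example shows the model the expected input/output pattern.
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(few_shot_prompt(
        "Classify the sentiment as positive or negative.",
        [("I loved it", "positive"), ("Awful service", "negative")],
        "Great film",
    ))
```

Passing an empty `examples` list yields the zero-shot form of the same prompt, which makes it easy to compare the two designs on the same task.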

Retrieval Mechanism

Retrieval Augmented Generation (RAG) adds an external retrieval mechanism that sources relevant information from large datasets or knowledge bases, whereas prompt engineering on its own relies solely on the knowledge the model acquired during pre-training. RAG fetches contextually pertinent documents at inference time, which improves factual consistency and reduces hallucinations in generated text.
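The retrieval step can be sketched with a toy retriever that ranks documents by cosine similarity over word counts. This is a simplification chosen to keep the example dependency-free; production systems more commonly use learned embeddings and a vector index. The names `tokenize`, `cosine`, and `retrieve` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents most similar to the query, best first."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the query.
    return [doc for score, doc in scored[:k] if score > 0]


if __name__ == "__main__":
    docs = [
        "The Eiffel Tower is in Paris.",
        "Python is a programming language.",
        "Paris is the capital of France.",
    ]
    print(retrieve("capital of France", docs, k=1))
```

Because the ranking happens at query time, updating the document list changes the answers without retraining or re-prompting the model.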

Context Augmentation

Prompt engineering optimizes model inputs to improve response relevance, while retrieval-augmented generation (RAG) improves outputs by adding content from external knowledge bases to the model's context at query time. This context augmentation extends the information available to the model beyond its training data, which can make answers more accurate and specific than adjusting a static prompt alone.
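Because a model's context window is finite, augmented context usually has to fit a budget. The sketch below keeps ranked passages until a character limit is reached. Counting characters rather than tokens is a simplifying assumption, and `pack_context` is a hypothetical helper.

```python
def pack_context(passages: list[str], max_chars: int) -> list[str]:
    """Keep passages in ranked order until the character budget is spent."""
    kept: list[str] = []
    used = 0
    for passage in passages:
        if used + len(passage) > max_chars:
            # Stop at the first passage that does not fit, so the kept
            # passages are always a prefix of the ranked list.
            break
        kept.append(passage)
        used += len(passage)
    return kept


if __name__ == "__main__":
    ranked = ["Most relevant passage.", "Second passage.", "Third passage."]
    print(pack_context(ranked, max_chars=40))
```

Stopping at the first passage that does not fit is one design choice among several; an alternative is to skip oversized passages and keep filling with shorter ones, at the cost of no longer preserving a strict rank prefix.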

