Edge AI processes data close to where it is generated, on local gateways or edge servers rather than in a distant cloud. This reduces latency and improves real-time decision-making compared with traditional AI models that rely on cloud computing. AI at the endpoint runs models directly on the device that produces the data, enabling faster responses, better privacy, and lower bandwidth usage. This article compares the two approaches and the capabilities each brings to different industries.

Why it is important

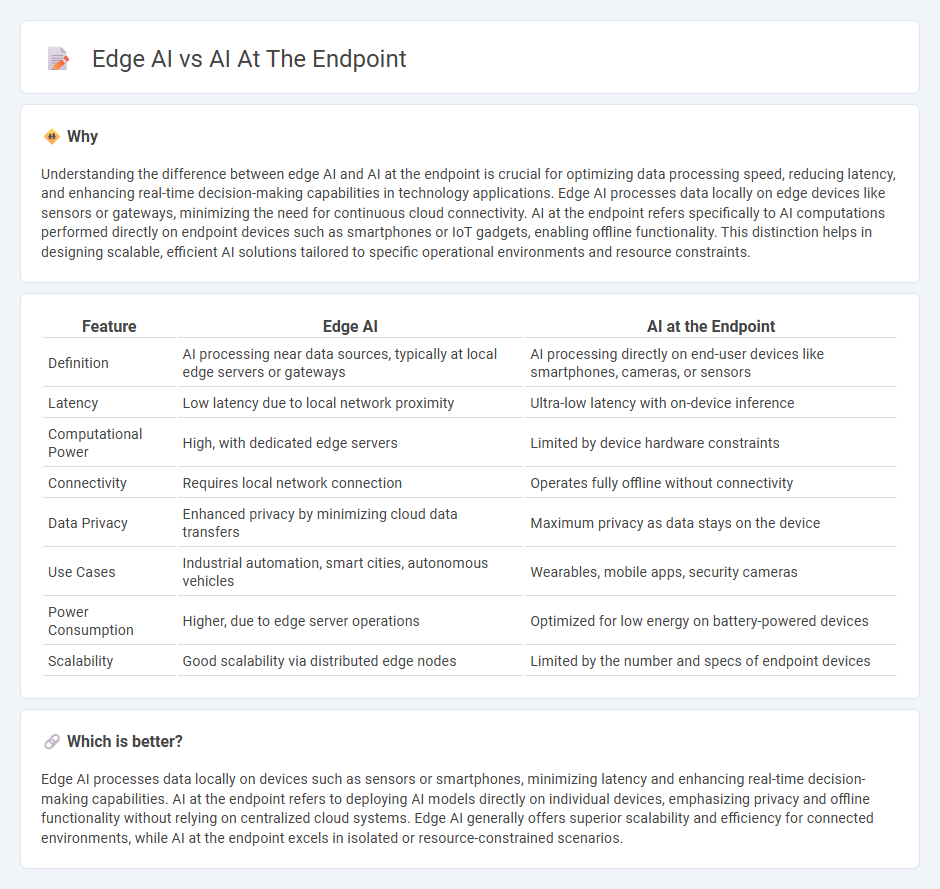

Understanding the difference between edge AI and AI at the endpoint is crucial for optimizing processing speed, reducing latency, and enabling real-time decisions in technology applications. Edge AI processes data on local infrastructure such as gateways or on-premises edge servers that sit close to sensors and other devices, minimizing the need for continuous cloud connectivity. AI at the endpoint refers specifically to AI computations performed directly on endpoint devices such as smartphones or IoT devices, which also allows them to work offline. This distinction helps in designing scalable, efficient AI solutions tailored to specific operational environments and resource constraints.

Comparison Table

| Feature | Edge AI | AI at the Endpoint |
|---|---|---|
| Definition | AI processing near data sources, typically on local edge servers or gateways | AI processing directly on end-user devices such as smartphones, cameras, or sensors |
| Latency | Low latency due to local network proximity | Ultra-low latency with on-device inference |
| Computational Power | High, with dedicated edge servers | Limited by device hardware constraints |
| Connectivity | Requires a local network connection | Can operate fully offline |
| Data Privacy | Enhanced privacy by minimizing cloud data transfers | Strongest privacy, as data stays on the device |
| Use Cases | Industrial automation, smart cities, autonomous vehicles | Wearables, mobile apps, security cameras |
| Power Consumption | Higher, due to edge server operations | Optimized for low energy use on battery-powered devices |
| Scalability | Good scalability via distributed edge nodes | Limited by the number and hardware specs of endpoint devices |

Which is better?

Neither approach is universally better. Edge AI runs models on gateways or edge servers located near sensors and smartphones, minimizing latency compared with the cloud while offering more compute than a single device. AI at the endpoint deploys models directly on individual devices, emphasizing privacy and offline functionality without relying on centralized cloud systems. Edge AI generally offers better scalability and efficiency in connected environments, while AI at the endpoint excels in isolated or resource-constrained scenarios.

Connection

Edge AI processes data close to its source rather than relying on centralized cloud servers, enabling real-time analytics and reducing latency. AI at the endpoint deploys AI algorithms directly on devices such as smartphones, sensors, or cameras, enabling immediate decisions and enhanced privacy. Together they form a decentralized architecture, with endpoints handling lightweight inference and edge servers handling heavier workloads, that improves efficiency, responsiveness, and security in applications such as autonomous vehicles, smart manufacturing, and IoT systems.
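One common way to combine the two tiers is a confidence-based cascade: the endpoint answers when its small model is confident and escalates to the edge otherwise, falling back to its own answer when the edge cannot be reached. The Python sketch below is illustrative only. The toy models, the `cascade_infer` function, the `threshold` value, and the tier labels are hypothetical and do not come from any specific framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A "model" here is any function mapping an input to (label, confidence).
# In a real system the endpoint model would wrap an on-device runtime and the
# edge model would wrap a network call; both are hypothetical stand-ins here.
Model = Callable[[List[float]], Tuple[str, float]]


@dataclass
class CascadeResult:
    label: str
    confidence: float
    tier: str  # "endpoint" or "edge"


def cascade_infer(
    sample: List[float],
    endpoint_model: Model,
    edge_model: Optional[Model],
    threshold: float = 0.8,
) -> CascadeResult:
    """Try the on-device model first; escalate to the edge tier only when the
    local confidence is below `threshold` and an edge tier is available."""
    label, conf = endpoint_model(sample)
    if conf >= threshold or edge_model is None:
        # Confident enough, or running offline: keep the on-device answer.
        return CascadeResult(label, conf, "endpoint")
    try:
        edge_label, edge_conf = edge_model(sample)
    except ConnectionError:
        # Edge tier unreachable: fall back to the local answer.
        return CascadeResult(label, conf, "endpoint")
    return CascadeResult(edge_label, edge_conf, "edge")


# Toy models for illustration only.
def tiny_model(x: List[float]) -> Tuple[str, float]:
    # Confidence grows as the mean moves away from the 0.5 decision boundary.
    m = sum(x) / len(x)
    return ("high" if m > 0.5 else "low", abs(m - 0.5) * 2)


def big_model(x: List[float]) -> Tuple[str, float]:
    m = sum(x) / len(x)
    return ("high" if m > 0.5 else "low", 0.99)


def unreachable_edge(x: List[float]) -> Tuple[str, float]:
    raise ConnectionError("edge server not reachable")


if __name__ == "__main__":
    print(cascade_infer([0.95, 1.0], tiny_model, big_model))        # handled on the device
    print(cascade_infer([0.5, 0.6], tiny_model, big_model))         # escalated to the edge
    print(cascade_infer([0.5, 0.6], tiny_model, unreachable_edge))  # offline fallback
```

The threshold trades accuracy against network traffic: raising it sends more samples to the edge tier, while lowering it keeps more decisions (and data) on the device.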

Key Terms

Inference Location

AI at the endpoint performs inference directly on devices such as smartphones, sensors, or cameras, reducing latency and supporting real-time decisions. Edge AI extends this concept by using nearby edge servers or gateways for more complex computations, splitting the workload between the device and the network edge. Where inference runs therefore shapes an application's performance, privacy, and scalability.
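Choosing an inference location can be framed as a simple feasibility check: run on the endpoint if the model fits in device memory and meets the latency budget, otherwise use the edge if the model fits there and the network round trip plus compute time still meets the budget. The sketch below assumes that framing; the `Tier` and `Workload` classes, `choose_location`, and all hardware figures are hypothetical, and real placement decisions also weigh power, cost, and privacy policy.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    memory_mb: float  # memory available for model weights and activations
    gflops: float     # sustained throughput in GFLOP/s (illustrative figure)


@dataclass
class Workload:
    model_memory_mb: float
    gflop_per_inference: float
    latency_budget_ms: float


def compute_ms(w: Workload, t: Tier) -> float:
    # GFLOP / (GFLOP/s) gives seconds; multiply by 1000 for milliseconds.
    return w.gflop_per_inference / t.gflops * 1000


def choose_location(w: Workload, endpoint: Tier, edge: Tier, rtt_ms: float) -> str:
    """Prefer the endpoint (no network hop, data stays on the device);
    otherwise use the edge if the model fits and the round trip plus
    compute time still meets the latency budget."""
    if (w.model_memory_mb <= endpoint.memory_mb
            and compute_ms(w, endpoint) <= w.latency_budget_ms):
        return "endpoint"
    if (w.model_memory_mb <= edge.memory_mb
            and rtt_ms + compute_ms(w, edge) <= w.latency_budget_ms):
        return "edge"
    # Neither tier meets the constraints: shrink the model or relax the budget.
    return "unplaced"


if __name__ == "__main__":
    # Hypothetical hardware and workloads.
    phone = Tier("phone", memory_mb=512, gflops=50)
    edge_server = Tier("edge server", memory_mb=16384, gflops=2000)
    small_model = Workload(model_memory_mb=20, gflop_per_inference=0.5, latency_budget_ms=30)
    large_model = Workload(model_memory_mb=2000, gflop_per_inference=20, latency_budget_ms=50)
    print(choose_location(small_model, phone, edge_server, rtt_ms=5))  # endpoint
    print(choose_location(large_model, phone, edge_server, rtt_ms=5))  # edge
```

With these made-up numbers, the small model runs on the phone in about 10 ms, while the large model does not fit in the phone's memory and is placed on the edge server (5 ms round trip plus about 10 ms of compute).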

Latency

Edge AI processes data on nearby gateways or edge servers, significantly reducing latency compared with traditional cloud-based AI because data travels a much shorter network path. AI at the endpoint runs directly on end-user devices, typically giving the fastest response times because it avoids network transmission entirely and does not depend on connectivity. This latency gap matters most for real-time applications and decision-making in AI systems.
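The latency difference can be made concrete with a back-of-the-envelope model: remote inference pays a network round trip plus the time to upload the input, while on-device inference pays only its own compute time. The sketch below assumes that simplified model (it ignores queuing, result download, and serialization), and every number in it is a hypothetical example rather than a measurement.

```python
def transfer_ms(payload_kb: float, uplink_mbps: float) -> float:
    # payload_kb * 8 gives kilobits; 1 Mbit/s equals 1 kilobit per millisecond.
    return payload_kb * 8 / uplink_mbps


def endpoint_latency_ms(inference_ms: float) -> float:
    # On-device inference: no network terms at all.
    return inference_ms


def remote_latency_ms(inference_ms: float, rtt_ms: float,
                      payload_kb: float, uplink_mbps: float) -> float:
    # Edge or cloud inference: round trip + upload time + inference time.
    return rtt_ms + transfer_ms(payload_kb, uplink_mbps) + inference_ms


if __name__ == "__main__":
    frame_kb = 100.0  # hypothetical size of one camera frame
    scenarios = {
        # Slower device chip, but no network hop.
        "endpoint": endpoint_latency_ms(12.0),
        # Local network: 5 ms round trip, 100 Mbps uplink, fast edge GPU.
        "edge": remote_latency_ms(5.0, rtt_ms=5.0, payload_kb=frame_kb, uplink_mbps=100.0),
        # Wide-area network: 60 ms round trip, 20 Mbps uplink.
        "cloud": remote_latency_ms(5.0, rtt_ms=60.0, payload_kb=frame_kb, uplink_mbps=20.0),
    }
    for name, ms in scenarios.items():
        print(f"{name}: {ms:.1f} ms")
    # endpoint: 12.0 ms
    # edge: 18.0 ms
    # cloud: 105.0 ms
```

The ordering depends on the inputs: a much slower device chip could make edge inference faster than on-device inference, which is why the device's compute budget matters as much as network distance.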

Data Privacy

Edge AI keeps sensitive data within the local network, processing it on nearby gateways or servers instead of sending it to the cloud, which reduces exposure to breaches in transit and in centralized storage. AI at the endpoint keeps data on the device itself, delivering real-time analytics and decisions without depending on cloud connectivity, which shortens response times and gives users tighter control over their data. Both approaches are used to protect data privacy across many industries.
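A common pattern behind these privacy and bandwidth benefits is to analyze raw data on the device and transmit only derived events. The sketch below illustrates the idea with a simple z-score rule standing in for a real on-device model; the `detect_events` and `build_uplink_payload` functions, the event fields, and the sample readings are all hypothetical.

```python
import json
from statistics import mean, pstdev
from typing import Dict, List


def detect_events(readings: List[float], window: int = 5,
                  z_threshold: float = 3.0) -> List[Dict]:
    """On-device step: flag readings that deviate sharply from the previous
    `window` readings (a simple z-score rule standing in for a real model)."""
    events = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            continue  # flat history: z-score undefined, so skip this reading
        z = (readings[i] - mu) / sigma
        if abs(z) > z_threshold:
            # Only the position and score leave the device, not the raw value.
            events.append({"index": i, "z": round(z, 2)})
    return events


def build_uplink_payload(readings: List[float]) -> str:
    # What would be sent to an edge server or the cloud: events only.
    return json.dumps(detect_events(readings))


if __name__ == "__main__":
    readings = [10.0, 10.1, 9.9, 10.0, 10.1, 10.0, 25.0, 10.0, 9.9, 10.1]
    raw = json.dumps(readings)
    payload = build_uplink_payload(readings)
    print(payload)
    print(f"raw: {len(raw.encode())} bytes, uplink: {len(payload.encode())} bytes")
```

Only the spike at index 6 produces an event, so the uplink carries a short summary instead of the full sensor stream; over long recordings the savings grow with the ratio of normal readings to events.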

dowidth.com
