Edge AI processes data locally on devices such as smartphones or IoT sensors, reducing latency and enabling real-time decision-making. Serverless AI runs on cloud computing through scalable, event-driven functions that minimize infrastructure management and allocate resources dynamically. Explore the advantages and use cases of edge AI versus serverless AI to optimize your technology strategy.

Why it is important

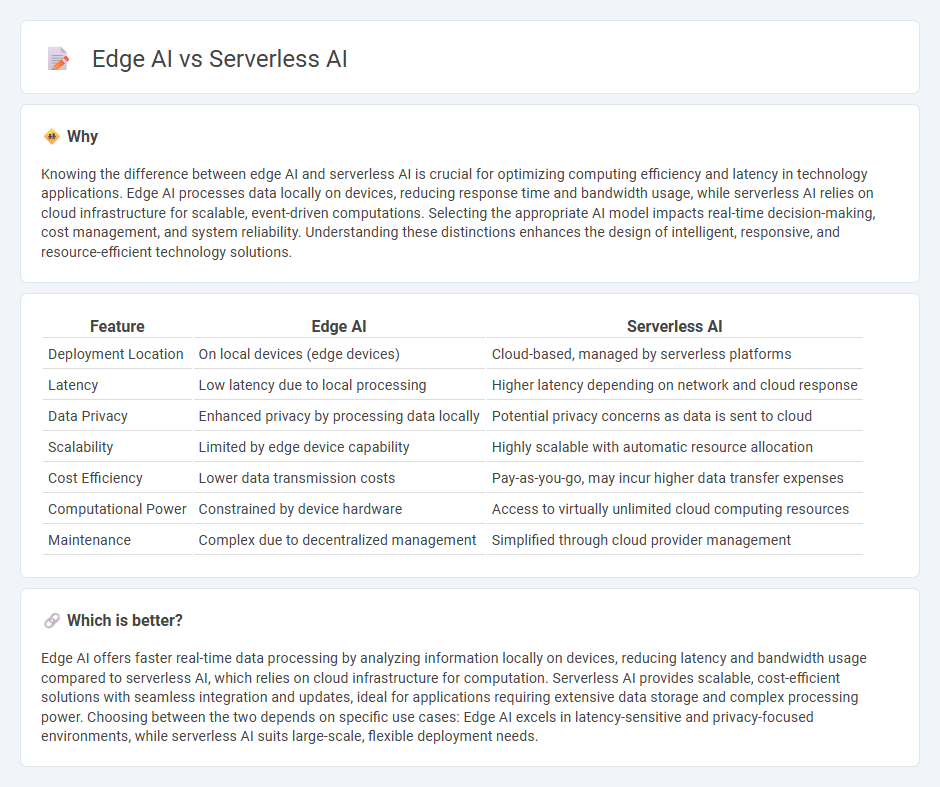

Knowing the difference between edge AI and serverless AI is crucial for optimizing computing efficiency and latency in technology applications. Edge AI processes data locally on devices, reducing response time and bandwidth usage, while serverless AI relies on cloud infrastructure for scalable, event-driven computation. Choosing the appropriate deployment model affects real-time decision-making, cost management, and system reliability. Understanding these distinctions helps in designing intelligent, responsive, and resource-efficient solutions.

Comparison Table

| Feature | Edge AI | Serverless AI |
|---|---|---|
| Deployment Location | On local devices (edge devices) | Cloud-based, managed by serverless platforms |
| Latency | Low latency due to local processing | Higher latency depending on network and cloud response |
| Data Privacy | Enhanced privacy by processing data locally | Potential privacy concerns as data is sent to the cloud |
| Scalability | Limited by edge device capability | Highly scalable with automatic resource allocation |
| Cost Efficiency | Lower data transmission costs | Pay-as-you-go; may incur higher data transfer expenses |
| Computational Power | Constrained by device hardware | Access to virtually unlimited cloud computing resources |
| Maintenance | Complex due to decentralized management | Simplified through cloud provider management |
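
To make the cost row concrete, here is a minimal sketch contrasting the two billing shapes. It assumes a serverless platform that charges per GB-second of execution, per request, and per GB of payload, and an edge fleet whose cost is hardware amortized over its lifetime plus upkeep. All names and prices (`ServerlessPricing`, `serverless_monthly_cost`, `edge_monthly_cost`) are hypothetical; real pricing models vary by provider.

```python
from dataclasses import dataclass


@dataclass
class ServerlessPricing:
    # Hypothetical placeholder rates; real prices vary by provider and region.
    price_per_gb_second: float    # compute charge per GB-second of execution
    price_per_request: float      # flat charge per invocation
    price_per_gb_transfer: float  # charge per GB of payload moved to the cloud


def serverless_monthly_cost(requests: int, memory_gb: float, seconds_per_request: float,
                            payload_mb: float, pricing: ServerlessPricing) -> float:
    """Pay-as-you-go: cost scales linearly with usage and drops to zero when idle."""
    compute = requests * memory_gb * seconds_per_request * pricing.price_per_gb_second
    invocations = requests * pricing.price_per_request
    transfer = requests * (payload_mb / 1024) * pricing.price_per_gb_transfer
    return compute + invocations + transfer


def edge_monthly_cost(devices: int, device_price: float, lifetime_months: int,
                      upkeep_per_device: float) -> float:
    """Edge: hardware amortized over its lifetime plus per-device upkeep; no payload transfer."""
    return devices * (device_price / lifetime_months + upkeep_per_device)
```

In this model the serverless cost grows with request volume and payload size, while the edge cost stays flat regardless of traffic, which is the trade-off the table summarizes.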

Which is better?

Edge AI offers faster real-time processing by analyzing data locally on devices, which reduces latency and bandwidth usage compared with serverless AI, which relies on cloud infrastructure for computation. Serverless AI provides scalable, pay-per-use solutions that are easy to integrate and update centrally, making it a good fit for applications that need extensive data storage and heavy processing power. The choice depends on the use case: edge AI excels in latency-sensitive and privacy-focused environments, while serverless AI suits large-scale, flexible deployments.
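
The criteria above can be condensed into a toy decision rule. This is a sketch under simplifying assumptions: the `Workload` fields and the `choose_deployment` function are hypothetical, and only latency, privacy, and model size are considered.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    latency_budget_ms: float    # maximum acceptable response time
    network_rtt_ms: float       # typical round trip to the cloud region
    data_must_stay_local: bool  # privacy or regulatory constraint on raw data
    model_fits_on_device: bool  # model size and compute fit the device hardware


def choose_deployment(w: Workload) -> str:
    """Toy rule of thumb; real decisions also weigh cost, fleet size, and update cadence."""
    needs_local = w.data_must_stay_local or w.network_rtt_ms >= w.latency_budget_ms
    if needs_local:
        # Privacy or latency points to the edge; if the model is too large for the
        # device, split the work (e.g. preprocess locally, run heavy steps in the cloud).
        return "edge" if w.model_fits_on_device else "hybrid"
    # No hard local constraint: favor elastic, managed cloud capacity.
    return "serverless"
```

For example, a 50 ms budget with an 80 ms network round trip cannot be met remotely, so the rule returns `"edge"`; relax the budget to 500 ms and it returns `"serverless"`.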

Connection

Edge AI processes data locally on devices, reducing latency and bandwidth usage, while serverless AI uses cloud infrastructure to allocate computational resources dynamically without server management. Together, they form a hybrid ecosystem in which edge AI handles real-time, on-device analytics and serverless AI supports scalable backend model training and complex processing. This integration optimizes AI deployment by balancing immediacy and flexibility across decentralized and cloud environments.
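
One common way to realize this hybrid split is a confidence-threshold cascade: a small model answers on the device, and only uncertain inputs are forwarded to a larger model behind a serverless endpoint. The sketch below uses hypothetical stand-in models (`tiny_edge_model`, `large_cloud_model`) and an arbitrary threshold of 0.8; in a real system the cloud call would be a network request.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def cascade_infer(x: float,
                  edge_model: Callable[[float], Prediction],
                  cloud_model: Callable[[float], Prediction],
                  threshold: float = 0.8) -> Tuple[str, str]:
    """Answer on-device when the edge model is confident; otherwise defer to the cloud."""
    label, confidence = edge_model(x)
    if confidence >= threshold:
        return label, "edge"
    # In a real system this would be a call to a serverless inference endpoint.
    label, _ = cloud_model(x)
    return label, "cloud"


def tiny_edge_model(x: float) -> Prediction:
    # Hypothetical stand-in: confident only far from the 0.5 decision boundary.
    return ("high" if x > 0.5 else "low"), abs(x - 0.5) * 2


def large_cloud_model(x: float) -> Prediction:
    # Hypothetical stand-in for a bigger model hosted behind a serverless function.
    return ("high" if x > 0.5 else "low"), 0.99
```

With these stubs, `cascade_infer(0.95, tiny_edge_model, large_cloud_model)` is answered on the device, while an input near the boundary such as `0.55` is deferred to the cloud. Raising `threshold` sends more traffic to the cloud, trading latency and bandwidth for the larger model's answers.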

Key Terms

Cloud Functions

Serverless AI leverages Cloud Functions to dynamically scale AI workloads without managing infrastructure, optimizing costs and efficiency by executing code only in response to events. Edge AI processes data locally on devices, reducing latency and bandwidth usage by running AI models near the data source, which is crucial for real-time applications. Explore the benefits and use cases of Cloud Functions in serverless AI to enhance your AI deployment strategies.

On-device Processing

Serverless AI relies on cloud infrastructure to execute machine learning tasks, minimizing the need for local hardware and reducing operational costs. Edge AI instead emphasizes on-device processing, analyzing data directly on the device to achieve low latency and stronger data privacy. On-device processing improves real-time decision-making, which is crucial in applications such as autonomous vehicles, healthcare devices, and IoT sensors. Explore further to understand how these AI paradigms redefine computational efficiency and user experience.
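
The privacy and bandwidth benefits of on-device processing come from keeping raw data local and transmitting only results. A minimal sketch, assuming a hypothetical wearable that reports an event only when the average of a window of temperature readings exceeds a threshold; `process_on_device` and the threshold value are illustrative, not taken from a specific device.

```python
from statistics import mean
from typing import Dict, List, Optional


def process_on_device(window: List[float], threshold: float) -> Optional[Dict[str, float]]:
    """Analyze raw sensor readings locally and return only a compact event, if any.

    The raw window never leaves the device; when the (hypothetical) alert
    condition is not met, nothing is transmitted at all.
    """
    avg = mean(window)
    if avg <= threshold:
        return None
    return {"mean": round(avg, 2), "peak": max(window)}
```

`process_on_device([36.5, 37.0, 39.0, 39.5], 37.5)` returns `{"mean": 38.0, "peak": 39.5}`, a few bytes instead of the raw stream; below the threshold it returns `None` and nothing is sent.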

Latency

Serverless AI processes data remotely in the cloud, which can introduce higher latency due to network transmission times. Edge AI executes inference locally on devices like IoT sensors or smartphones, significantly reducing latency and enabling real-time responses. Explore the benefits and use cases of serverless AI versus edge AI to optimize latency in your applications.
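
A back-of-the-envelope latency model makes the trade-off explicit. This sketch assumes serverless latency is the network round trip plus cloud inference time plus an average cold-start penalty (the extra delay when a request lands on a freshly started function instance), and that edge latency is local inference alone. The function names and all numbers are hypothetical.

```python
def serverless_latency_ms(rtt_ms: float, cloud_inference_ms: float,
                          cold_start_ms: float, cold_start_rate: float) -> float:
    """Expected response time of a remote call.

    Network round trip + cloud inference + the average cold-start penalty
    (cold_start_rate is the assumed fraction of requests hitting a cold instance).
    """
    return rtt_ms + cloud_inference_ms + cold_start_rate * cold_start_ms


def edge_latency_ms(device_inference_ms: float) -> float:
    """Local inference has no network hop, though device hardware is usually slower."""
    return device_inference_ms
```

With a 60 ms round trip, 10 ms of cloud inference, and a 500 ms cold start on 2% of requests, `serverless_latency_ms(60, 10, 500, 0.02)` gives about 80 ms on average, while a device needing 35 ms per inference stays at 35 ms. Averages hide the tail: requests that hit a cold start wait more than 500 ms.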

dowidth.com
