Retrieval-Augmented Generation (RAG) improves language model outputs by retrieving relevant passages from an external knowledge base and supplying them to the model during generation. Few-shot learning lets a model perform a new task from a handful of examples, without extensive retraining. The sections below compare the two approaches, where each one fits, and how they relate.

Why it is important

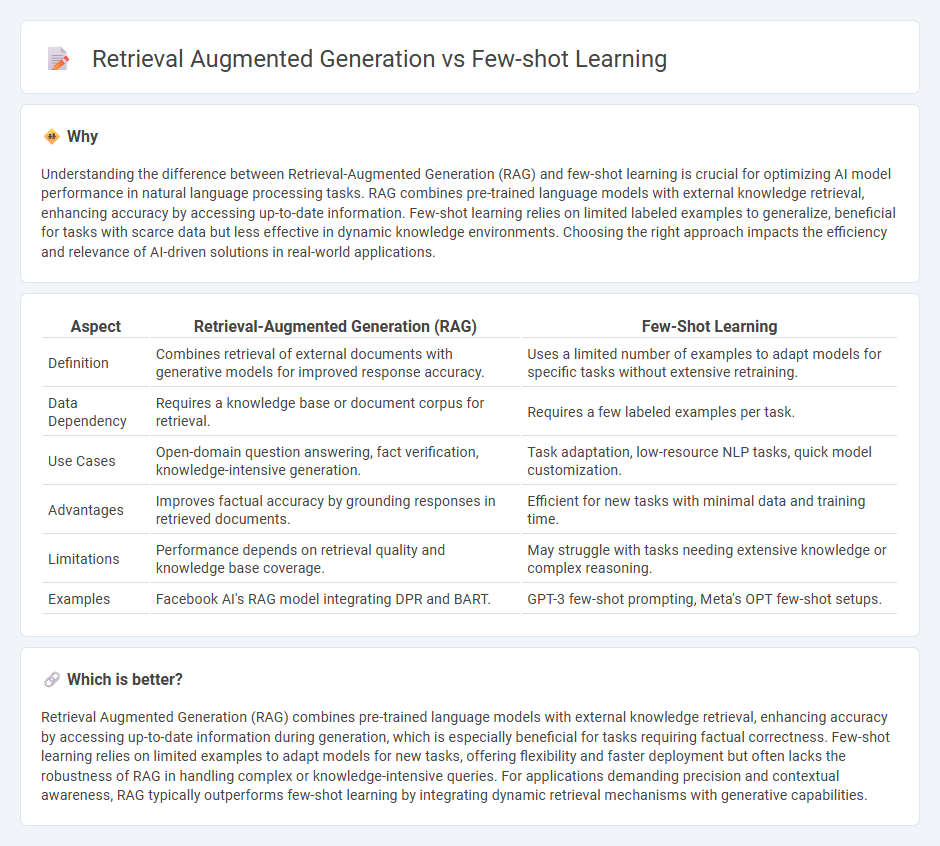

Understanding the difference between Retrieval-Augmented Generation (RAG) and few-shot learning helps when choosing how to adapt a language model to a natural language processing task. RAG pairs a pre-trained language model with external knowledge retrieval, so answers can draw on information that is newer or more specific than the model's training data. Few-shot learning generalizes from a small number of labeled examples, which suits tasks where data is scarce, but it is less effective when the underlying knowledge changes frequently. The choice between them affects both the efficiency and the relevance of AI-driven solutions in real-world applications.

Comparison Table

| Aspect | Retrieval-Augmented Generation (RAG) | Few-Shot Learning |
|---|---|---|
| Definition | Combines retrieval of external documents with generative models for improved response accuracy. | Uses a limited number of examples to adapt models for specific tasks without extensive retraining. |
| Data Dependency | Requires a knowledge base or document corpus for retrieval. | Requires a few labeled examples per task. |
| Use Cases | Open-domain question answering, fact verification, knowledge-intensive generation. | Task adaptation, low-resource NLP tasks, quick model customization. |
| Advantages | Improves factual accuracy by grounding responses in retrieved documents. | Efficient for new tasks with minimal data and training time. |
| Limitations | Performance depends on retrieval quality and knowledge base coverage. | May struggle with tasks needing extensive knowledge or complex reasoning. |
| Examples | Facebook AI's RAG model integrating DPR and BART. | GPT-3 few-shot prompting, Meta's OPT few-shot setups. |

Which is better?

Neither approach is better in every case; the right choice depends on the task. RAG combines a pre-trained language model with external knowledge retrieval, giving it access to up-to-date information during generation, which is especially useful when factual correctness matters. Few-shot learning adapts a model to a new task from a few examples, which offers flexibility and faster deployment, but it is often less robust than RAG on complex or knowledge-intensive queries. For applications that demand factual precision and awareness of current context, RAG typically has the advantage because the generator works from retrieved evidence rather than from its training data alone.

Connection

Retrieval-augmented generation (RAG) grounds a language model's output in passages retrieved from an external knowledge base. Few-shot learning lets a model generalize from a few examples and adapt to a new task with minimal training. The two are complementary rather than mutually exclusive: a single prompt can carry both retrieved passages and a few demonstrations, so retrieval supplies the facts while the examples show the expected task and answer format. This combination is useful in low-resource scenarios where neither labeled data nor model retraining is readily available.
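One way the two techniques can share a prompt is sketched below. This is a minimal illustration, not a reference implementation: the function name `build_combined_prompt`, the prompt layout, and the sample passages and examples are all assumptions made for this sketch, and the passages are passed in directly rather than retrieved.

```python
from typing import List, Tuple


def build_combined_prompt(
    passages: List[str],
    examples: List[Tuple[str, str]],
    question: str,
) -> str:
    """Place retrieved passages and few-shot demonstrations in one prompt.

    Hypothetical layout: instruction, context block, worked Q/A pairs,
    then the new question with an open answer slot.
    """
    parts = [
        "Answer using only the context. Follow the format of the examples.",
        "",
        "Context:",
    ]
    # Retrieved passages supply the facts.
    parts.extend(f"- {p}" for p in passages)
    parts.append("")
    # Demonstrations show the expected answer format.
    for q, a in examples:
        parts.append(f"Q: {q}")
        parts.append(f"A: {a}")
        parts.append("")
    parts.append(f"Q: {question}")
    parts.append("A:")
    return "\n".join(parts)


combined_prompt = build_combined_prompt(
    passages=["The return window for online orders is 30 days from delivery."],
    examples=[("Can gift cards be exchanged for cash?", "No.")],
    question="How long is the return window?",
)
print(combined_prompt)
```

The resulting string would then be sent to whichever language model is in use; that call is left out because it depends on the provider.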

Key Terms

Prompt Engineering

In few-shot prompting, a small number of labeled examples are placed directly in the prompt so the language model can infer the task without retraining. Results depend heavily on how the prompt is constructed: which examples are chosen, how they are formatted, and how the new input is presented. Retrieval-augmented generation (RAG) combines document retrieval with a generative model, and prompt engineering matters there too, both in phrasing the search query so it matches relevant data and in presenting the retrieved passages to the model.
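As a rough sketch of few-shot prompt construction, the snippet below assembles an instruction, a few labeled demonstrations, and a new input into one prompt string. The function name `build_few_shot_prompt`, the `Input:`/`Label:` layout, and the sentiment examples are assumptions chosen for illustration; real prompts vary by model and task.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble instruction, labeled demonstrations, and the new input."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The final label is left open for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Label:")
    return "\n".join(lines)


demo_examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
few_shot_prompt = build_few_shot_prompt(
    "Classify the sentiment of each input.",
    demo_examples,
    "Setup was quick and painless.",
)
print(few_shot_prompt)
```

Keeping every demonstration in the same format as the final, unanswered input makes the pattern easy for the model to continue.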

External Knowledge Base

Few-shot learning relies on a few labeled examples plus the knowledge already stored in a pretrained model's parameters, so it has no direct access to information newer than the model's training data. Retrieval-augmented generation (RAG) adds an external knowledge base and fetches relevant documents at query time, which grounds responses in current or domain-specific data. This is the main way RAG extends contextual understanding beyond what few-shot prompting alone can provide.
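The retrieval step can be illustrated with a toy example. The sketch below ranks documents by bag-of-words cosine similarity to the query; this is a deliberately simple stand-in, not the method of any particular RAG system (the original RAG model, for instance, uses dense DPR embeddings). The function names and the three-document knowledge base are assumptions made for this example.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents most similar to the query, best first."""
    q_vec = Counter(tokenize(query))
    scored = [(cosine(q_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the query.
    return [doc for score, doc in scored[:k] if score > 0]


# Hypothetical knowledge base for illustration only.
knowledge_base = [
    "The return window for online orders is 30 days from delivery.",
    "Standard shipping takes three to five business days.",
    "Gift cards cannot be exchanged for cash.",
]
user_query = "How many days do I have to return an online order?"
top_passages = retrieve(user_query, knowledge_base, k=1)
print(top_passages)
```

In a full pipeline, the returned passages would be inserted into the prompt ahead of the user's question so the model can answer from them.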

Contextual Adaptation

Few-shot learning adapts a language model to a new task quickly by generalizing from a few labeled examples supplied as context. Retrieval-augmented generation adapts to context in a different way: it retrieves information from an external knowledge source for each query, so the output reflects material relevant to that query. In short, few-shot examples adapt the model to the task, while retrieval adapts it to the facts.

