Liquid neural networks are continuous-time recurrent models whose neuron time constants change with the input, so their dynamics keep adapting to incoming data even after training. Traditional recurrent neural networks (RNNs) process sequential data in discrete time steps, with dynamics that are fixed once training ends. Liquid neural networks are well suited to applications that require real-time adaptability, such as robotics and autonomous systems, and can outperform RNNs in complex, evolving environments.

Why it is important

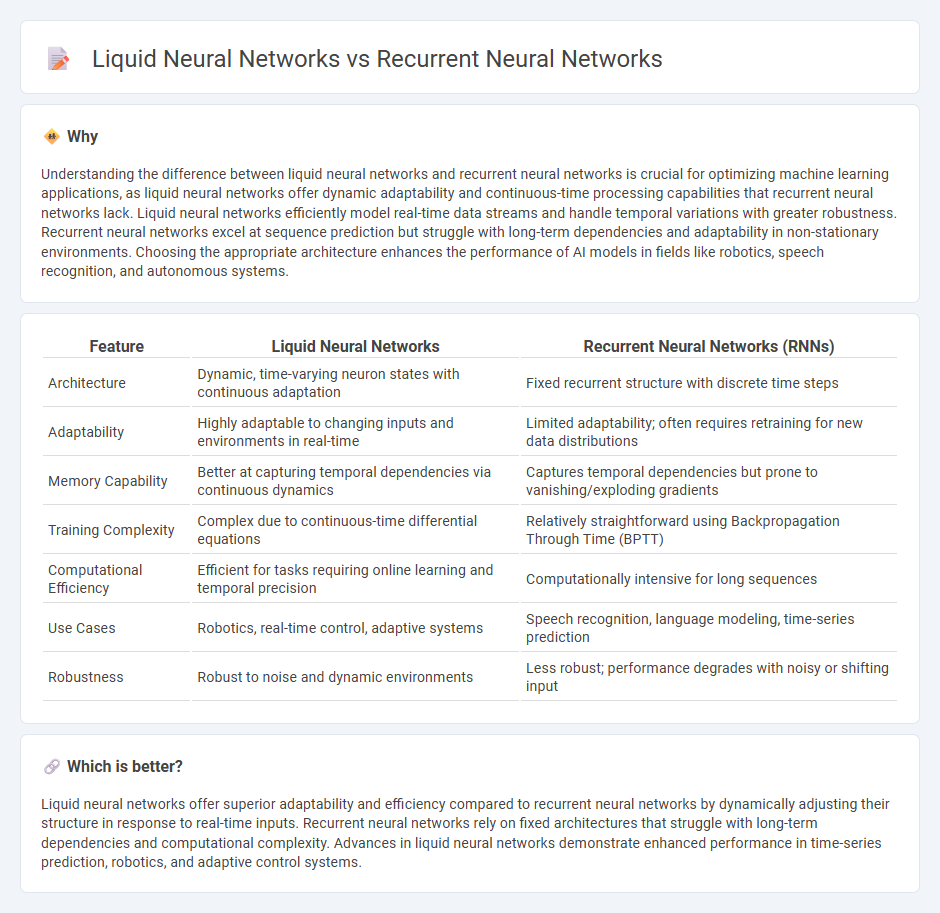

Understanding the difference between liquid neural networks and recurrent neural networks is crucial for optimizing machine learning applications, as liquid neural networks offer dynamic adaptability and continuous-time processing capabilities that recurrent neural networks lack. Liquid neural networks efficiently model real-time data streams and handle temporal variations with greater robustness. Recurrent neural networks excel at sequence prediction but struggle with long-term dependencies and adaptability in non-stationary environments. Choosing the appropriate architecture enhances the performance of AI models in fields like robotics, speech recognition, and autonomous systems.

Comparison Table

| Feature | Liquid Neural Networks | Recurrent Neural Networks (RNNs) |
|---|---|---|
| Architecture | Dynamic, time-varying neuron states with continuous adaptation | Fixed recurrent structure with discrete time steps |
| Adaptability | Highly adaptable to changing inputs and environments in real-time | Limited adaptability; often requires retraining for new data distributions |
| Memory Capability | Better at capturing temporal dependencies via continuous dynamics | Captures temporal dependencies but prone to vanishing/exploding gradients |
| Training Complexity | Complex due to continuous-time differential equations | Relatively straightforward using Backpropagation Through Time (BPTT) |
| Computational Efficiency | Efficient for tasks requiring online learning and temporal precision | Computationally intensive for long sequences |
| Use Cases | Robotics, real-time control, adaptive systems | Speech recognition, language modeling, time-series prediction |
| Robustness | Robust to noise and dynamic environments | Less robust; performance degrades with noisy or shifting input |

Which is better?

Liquid neural networks can offer better adaptability and efficiency than recurrent neural networks because their internal dynamics adjust in response to real-time inputs. Standard recurrent neural networks apply the same fixed update at every step, struggle with long-term dependencies, and become computationally expensive on long sequences. Advances in liquid neural networks have shown strong performance in time-series prediction, robotics, and adaptive control systems, while RNNs remain a simpler choice for well-understood, stationary sequence tasks.

Connection

Liquid neural networks and recurrent neural networks (RNNs) both process sequential data by maintaining state information over time, which enables temporal pattern recognition. Liquid neural networks extend traditional RNNs with continuous-time dynamics and input-dependent adaptation, allowing more robust and flexible behavior in changing environments. In effect, they keep the temporal memory of an RNN but describe the state update with a differential equation rather than a fixed discrete step, which helps with real-time processing.
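The relationship between the two update rules can be sketched in a few lines of plain Python. This is a minimal scalar illustration, not a reference implementation: it assumes the liquid time-constant form dh/dt = -(1/tau + f) * h + f * A with a sigmoid gate f, stepped with a semi-implicit Euler update. The function names, weights, and sample values are made up for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rnn_step(h, x, w_h, w_x, b):
    """One discrete step of a scalar vanilla RNN: h' = tanh(w_h*h + w_x*x + b)."""
    return math.tanh(w_h * h + w_x * x + b)

def ltc_step(h, x, dt, tau, A, w_h, w_x, b):
    """One semi-implicit Euler step of a scalar liquid time-constant cell.

    Assumed continuous-time model: dh/dt = -(1/tau + f) * h + f * A,
    where f = sigmoid(w_h*h + w_x*x + b) is an input-dependent gate.
    """
    f = sigmoid(w_h * h + w_x * x + b)
    return (h + dt * f * A) / (1.0 + dt * (1.0 / tau + f))

# Irregularly sampled inputs: (value, time elapsed since the previous sample).
samples = [(0.5, 0.1), (1.0, 0.4), (-0.3, 0.05), (0.8, 1.0)]

h_rnn, h_ltc = 0.0, 0.0
for x, dt in samples:
    # The RNN update has no notion of elapsed time; the LTC update uses dt.
    h_rnn = rnn_step(h_rnn, x, w_h=0.9, w_x=1.0, b=0.0)
    h_ltc = ltc_step(h_ltc, x, dt, tau=1.0, A=1.0, w_h=0.9, w_x=1.0, b=0.0)
    print(f"dt={dt:<4} rnn={h_rnn:+.4f} ltc={h_ltc:+.4f}")
```

Both cells carry a hidden state forward, but only the liquid cell takes the elapsed time `dt` into account, which is why it handles irregularly sampled streams more naturally.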

Key Terms

Sequence Modeling

Recurrent neural networks (RNNs) excel in sequence modeling by maintaining internal states that capture temporal dependencies, enabling tasks like speech recognition and language translation. Liquid neural networks, inspired by biological neural dynamics, offer improved adaptability and robustness in processing time-varying inputs through continuous-time recurrent structures.

Memory Dynamics

Recurrent neural networks (RNNs) maintain memory through fixed feedback loops. This lets them handle sequential data, but they often struggle with long-term dependencies because gradients vanish as they are propagated back through many time steps. Liquid neural networks use adaptable continuous-time dynamics, which offer more flexibility and more robust memory retention in dynamic environments.
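The vanishing-gradient effect mentioned above can be seen in a toy calculation. The sketch below assumes a scalar RNN of the form h_t = tanh(w_h * h_{t-1} + x_t), with a made-up weight and constant input, and multiplies the per-step derivatives to get the sensitivity of the final state to the initial state.

```python
import math

def gradient_through_time(w_h, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w_h*h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w_h * h + x)
        # Chain rule: d h_t / d h_{t-1} = w_h * (1 - tanh^2(...)) = w_h * (1 - h_t^2)
        grad *= w_h * (1.0 - h * h)
    return grad

inputs = [0.5] * 50
for steps in (1, 10, 50):
    g = gradient_through_time(0.9, inputs[:steps])
    print(f"steps={steps:>2}  d h_T / d h_0 = {g:.3e}")
```

With |w_h| below 1, each factor has magnitude at most |w_h|, so the product shrinks at least geometrically with sequence length. That is the mechanism behind the long-term dependency problem.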

Adaptivity

Recurrent neural networks (RNNs) process sequences by maintaining hidden states, but because their weights are fixed after training they often adapt poorly to changing inputs or environments. Liquid neural networks use a continuous-time dynamic system with learnable time constants and feedback, so the speed at which each neuron responds shifts with the incoming signal, enabling real-time adjustment to non-stationary data.
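A small calculation makes the "liquid" time constant concrete. Assuming the liquid time-constant form dh/dt = -(1/tau + f) * h + f * A, the effective time constant of the neuron is tau / (1 + tau * f). The sketch below simplifies the gate f so that it depends only on the input; the function name and parameter values are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def effective_time_constant(x, tau=1.0, w_x=2.0, b=0.0):
    """tau_sys = tau / (1 + tau * f(x)) for the gate f(x) = sigmoid(w_x*x + b).

    Simplifying assumption: the gate ignores the hidden state.
    """
    f = sigmoid(w_x * x + b)
    return tau / (1.0 + tau * f)

for x in (-3.0, 0.0, 3.0):
    print(f"input={x:+.1f}  effective time constant={effective_time_constant(x):.3f}")
```

A stronger input opens the gate and shortens the effective time constant, so the neuron reacts faster. A weak input leaves it close to the base value tau. No weights change at run time; the adaptation comes entirely from the input-dependent dynamics.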

dowidth.com
