Multimodal AI combines several data types, such as text, images, and audio, so that a model can draw on more context when interpreting inputs and making decisions in complex scenarios. Federated learning trains a shared model across many distributed devices without collecting their raw data in one place. This helps preserve privacy while still letting every participant benefit from the combined training. This article compares the two approaches and how they relate.

Why it is important

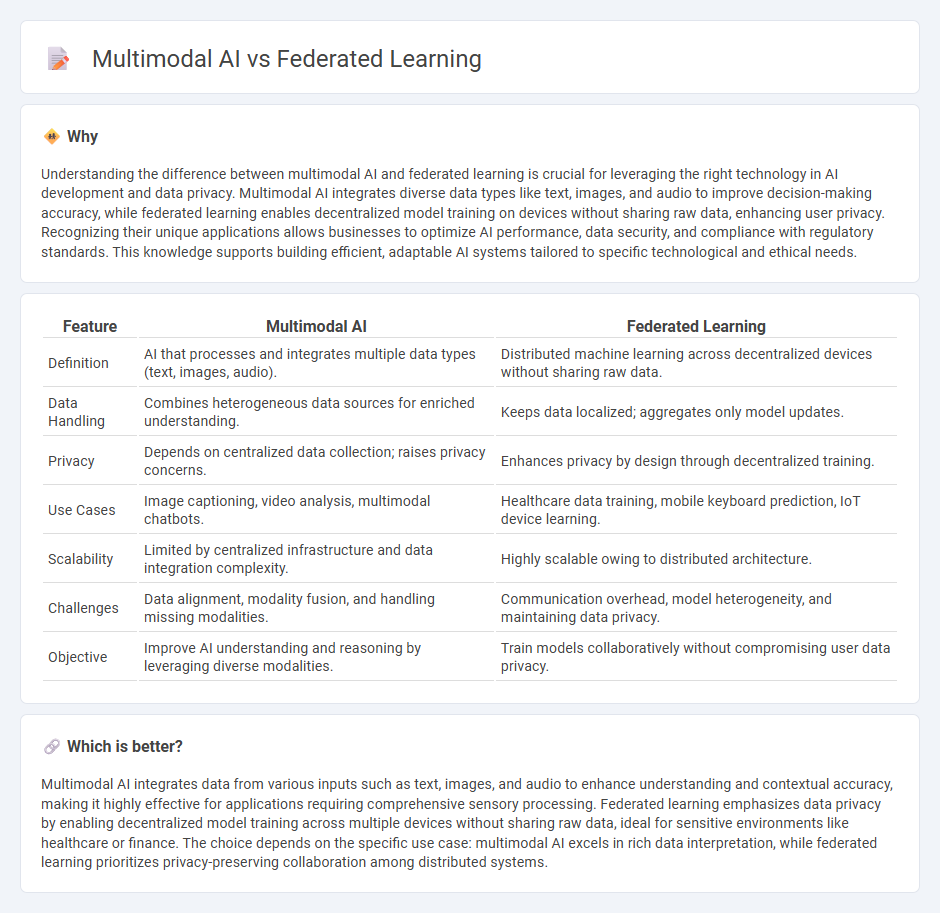

Knowing how multimodal AI and federated learning differ helps teams choose the right technique for a given AI system and its data-privacy requirements. Multimodal AI combines data types such as text, images, and audio to make decisions more accurate. Federated learning trains models on devices without sharing raw data, which strengthens user privacy. Understanding where each one applies lets organizations balance model performance, data security, and regulatory compliance. It also helps them build efficient, adaptable AI systems that fit their technical and ethical constraints.

Comparison Table

| Feature | Multimodal AI | Federated Learning |
|---|---|---|
| Definition | AI that processes and integrates multiple data types (text, images, audio). | Distributed machine learning across decentralized devices without sharing raw data. |
| Data Handling | Combines heterogeneous data sources for richer understanding. | Keeps raw data local; only model updates are sent for aggregation. |
| Privacy | Usually relies on centralized data collection, which raises privacy concerns. | Improves privacy by design because raw data stays on devices, although model updates can still leak information. |
| Use Cases | Image captioning, video analysis, multimodal chatbots. | Training on healthcare data, mobile keyboard prediction, learning on IoT devices. |
| Scalability | Limited by centralized infrastructure and the complexity of integrating data across modalities. | Scales to large numbers of devices because training is distributed. |
| Challenges | Data alignment, modality fusion, and handling missing modalities. | Communication overhead, model heterogeneity, and maintaining data privacy. |
| Objective | Improve AI understanding and reasoning by leveraging diverse modalities. | Train models collaboratively without compromising user data privacy. |

Which is better?

Neither is strictly better, because they solve different problems. Multimodal AI integrates text, images, audio, and other inputs to improve understanding and contextual accuracy. That makes it effective for applications that must interpret several kinds of sensory input at once. Federated learning focuses on data privacy: it trains models across many devices without sharing raw data, which suits sensitive domains such as healthcare and finance. The right choice depends on the use case. Multimodal AI excels at interpreting rich data, while federated learning prioritizes privacy-preserving collaboration among distributed systems.

Connection

Multimodal AI processes and integrates data from multiple sources, such as text, images, and audio, to improve machine understanding. Doing so typically requires large amounts of potentially sensitive data and substantial computational resources. Federated learning addresses both concerns. It trains models on edge devices without sharing raw data, which preserves user privacy and spreads the computation across devices while the shared model still learns from the multimodal data they hold. Together, the two enable personalized AI applications that draw on diverse data modalities through privacy-preserving collaborative learning.
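As a rough illustration of how the two ideas meet, the sketch below assumes that each client trains a model with separate per-modality parameters and that some clients lack a modality (for example, a device with no camera). One simple aggregation option, assumed here rather than taken from any particular system, averages each parameter only over the clients that hold it, weighted by their sample counts. The parameter names (`text.encoder`, `image.encoder`), the `aggregate` function, and all values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical model format: parameter name -> flat list of weights.
Params = Dict[str, List[float]]


def aggregate(client_updates: List[Tuple[Params, int]]) -> Params:
    """Average each parameter over the clients that hold it, weighted by sample count."""
    sums: Dict[str, List[float]] = {}
    weight_totals: Dict[str, int] = defaultdict(int)
    for params, n_samples in client_updates:
        for name, values in params.items():
            if name not in sums:
                sums[name] = [0.0] * len(values)
            elif len(sums[name]) != len(values):
                raise ValueError(f"shape mismatch for {name!r}")
            for i, v in enumerate(values):
                sums[name][i] += v * n_samples
            # Only clients that actually have this parameter count toward its average.
            weight_totals[name] += n_samples
    return {name: [s / weight_totals[name] for s in total]
            for name, total in sums.items()}


# Hypothetical clients: one has text and image data, the other only text.
updates = [
    ({"text.encoder": [1.0, 2.0], "image.encoder": [0.0, 4.0]}, 10),
    ({"text.encoder": [3.0, 0.0]}, 30),
]
print(aggregate(updates))
# prints {'text.encoder': [2.5, 0.5], 'image.encoder': [0.0, 4.0]}
```

Weighting by sample count mirrors standard federated averaging. Keeping a separate total per parameter stops a text-only client from pulling the image parameters toward zero.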

Key Terms

Decentralization

Federated learning enables decentralized AI by letting multiple devices train a model collaboratively without sharing raw data. This preserves privacy and removes the need for any single party to hold all the data. In the common setup, a central server still coordinates training by aggregating the devices' model updates. Multimodal AI integrates diverse data types, such as text, images, and audio, and often requires centralized processing to combine information across modalities effectively.
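The collaborative training described above can be sketched with federated averaging (FedAvg), one common aggregation scheme. This is a minimal sketch under stated assumptions: a toy one-parameter linear model, plain gradient descent on each client, and hypothetical client datasets. The function names `local_train` and `federated_round` are illustrative. Real systems use far larger models and more elaborate training.

```python
# Minimal FedAvg-style sketch: toy model y ≈ w * x, hypothetical client data.


def local_train(w: float, data, lr: float = 0.01, epochs: int = 20) -> float:
    """Gradient descent on one client's private (x, y) pairs; returns the new weight."""
    for _ in range(epochs):
        # Gradient of the mean squared error (1/n) * sum((w*x - y)^2) w.r.t. w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w: float, clients) -> float:
    """Each client trains locally; the server averages weights by sample count."""
    results = [(local_train(global_w, data), len(data)) for data in clients]
    total = sum(n for _, n in results)
    # Only (weight, sample count) pairs reach the server, never the raw data.
    return sum(w * n for w, n in results) / total


# Hypothetical private datasets; all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, clients)
print(round(w, 3))  # prints 3.0
```

The server only ever sees each client's trained weight and sample count, yet the global weight converges to the value that fits all clients' data.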

Data Fusion

Federated learning performs a decentralized form of data fusion. It aggregates model updates from many edge devices without sharing raw data, which improves privacy and scalability. Multimodal AI fuses heterogeneous data types such as text, images, and audio at one of two levels. Feature-level fusion combines representations before a single model sees them. Decision-level fusion combines the outputs of separate per-modality models. Either approach can improve contextual understanding and model performance.
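The two multimodal fusion levels can be contrasted in a few lines. This toy sketch assumes hypothetical precomputed feature vectors for each modality. Simple linear scorers stand in for real encoders and classifiers. `early_fusion` and `late_fusion` are illustrative names, not a library API.

```python
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    if len(a) != len(b):
        raise ValueError("length mismatch")
    return sum(x * y for x, y in zip(a, b))


def early_fusion(features: Dict[str, Vector], joint_weights: Vector) -> float:
    """Feature-level fusion: concatenate all modalities, then apply one scorer."""
    # Sort modality names so the concatenation order is deterministic.
    joint = [v for name in sorted(features) for v in features[name]]
    return dot(joint, joint_weights)


def late_fusion(features: Dict[str, Vector], scorers: Dict[str, Vector]) -> float:
    """Decision-level fusion: score each modality separately, then average."""
    scores = [dot(vec, scorers[name]) for name, vec in features.items()]
    return sum(scores) / len(scores)


# Hypothetical per-modality embeddings for one input.
features = {"text": [1.0, 0.0], "image": [0.5, 0.5], "audio": [0.2]}

# Joint weights follow sorted order: audio (1 value), image (2), text (2).
print(early_fusion(features, [0.1, 0.2, 0.3, 0.4, 0.5]))

scorers = {"text": [1.0, -1.0], "image": [2.0, 0.0], "audio": [1.0]}
print(late_fusion(features, scorers))
```

`late_fusion` averages only over the modalities present in `features`, so it still works when one is missing. Missing modalities are one of the challenges listed for multimodal AI. Early fusion, by contrast, would need a placeholder for the absent features to keep the joint vector the right length.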

Privacy

Federated learning improves privacy by training models on local devices. Raw data never leaves the user's environment, which reduces its exposure to data breaches. Multimodal AI integrates diverse data types, such as text, images, and audio, to improve understanding. However, it often requires centralized data aggregation, which poses higher privacy risks.
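Keeping raw data on the device reduces exposure, but the transmitted updates are still derived from that data. This toy sketch assumes a linear model with a bias term, squared-error loss, and a client that sends gradients computed on a single private example. All values and the helper names `client_gradients` and `reconstruct_input` are hypothetical. In this narrow case the server can recover the example exactly, which is one reason "maintaining data privacy" still appears among federated learning's challenges.

```python
# Toy single-example gradient leakage: linear model w . x + b, squared error.


def client_gradients(w, b, x, y):
    """Gradients of (w . x + b - y)^2 with respect to w and b."""
    residual = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [2 * residual * xi for xi in x]  # equals grad_b * x
    grad_b = 2 * residual
    return grad_w, grad_b


def reconstruct_input(grad_w, grad_b):
    """Server side: recover x from the gradients alone (needs grad_b != 0)."""
    if grad_b == 0:
        raise ValueError("zero residual: nothing to recover from")
    return [g / grad_b for g in grad_w]


# Hypothetical global model and one private example on the device.
w, b = [0.1, -0.2, 0.3], 0.5
private_x, private_y = [4.0, 1.0, 2.0], 1.0

grad_w, grad_b = client_gradients(w, b, private_x, private_y)  # sent to server
recovered = reconstruct_input(grad_w, grad_b)
print([round(v, 6) for v in recovered])  # prints [4.0, 1.0, 2.0]
```

If a client batches many examples into one update, this ratio yields only a weighted average of the inputs rather than any single one. That removes this exact shortcut, but batching alone does not guarantee privacy.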

dowidth.com
