Edge intelligence processes data locally on devices to reduce latency, improve privacy, and enable real-time decision-making. Traditional machine learning deployments, by contrast, rely heavily on centralized cloud servers for data analysis. Local processing matters most in autonomous vehicles, smart cities, and IoT devices, where responses must be immediate. Comparing the benefits and use cases of the two approaches shows how each is likely to shape future technology.

Why it is important

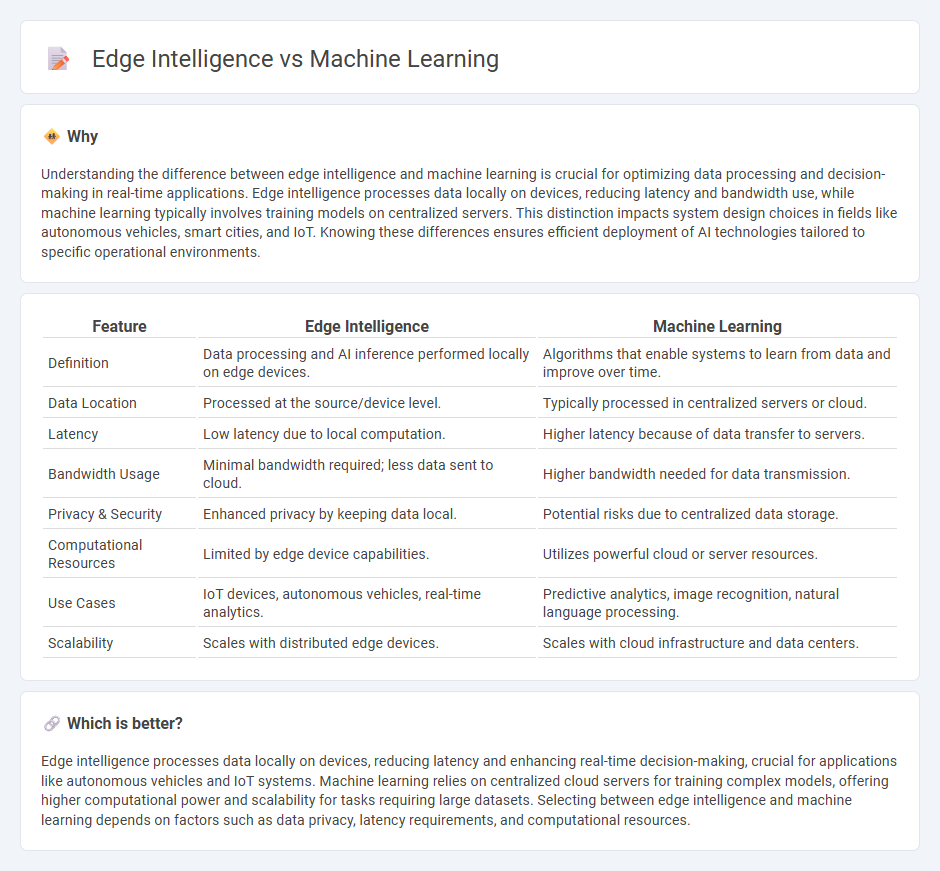

Understanding the difference between edge intelligence and machine learning is crucial for optimizing data processing and decision-making in real-time applications. Edge intelligence processes data locally on devices, reducing latency and bandwidth use, while machine learning typically involves training models on centralized servers. This distinction impacts system design choices in fields like autonomous vehicles, smart cities, and IoT. Knowing these differences ensures efficient deployment of AI technologies tailored to specific operational environments.

Comparison Table

| Feature | Edge Intelligence | Machine Learning |
|---|---|---|
| Definition | Data processing and AI inference performed locally on edge devices. | Algorithms that enable systems to learn from data and improve over time. |
| Data Location | Processed at the source/device level. | Typically processed in centralized servers or cloud. |
| Latency | Low latency due to local computation. | Higher latency because of data transfer to servers. |
| Bandwidth Usage | Minimal bandwidth required; less data sent to cloud. | Higher bandwidth needed for data transmission. |
| Privacy & Security | Enhanced privacy by keeping data local. | Potential risks due to centralized data storage. |
| Computational Resources | Limited by edge device capabilities. | Utilizes powerful cloud or server resources. |
| Use Cases | IoT devices, autonomous vehicles, real-time analytics. | Predictive analytics, image recognition, natural language processing. |
| Scalability | Scales with distributed edge devices. | Scales with cloud infrastructure and data centers. |

Which is better?

Neither approach is better in every case. Edge intelligence processes data locally on devices, which reduces latency and supports real-time decision-making in applications such as autonomous vehicles and IoT systems. Cloud-based machine learning trains complex models on centralized servers, which offer more computational power and scale better for tasks that need large datasets. The choice depends on data privacy constraints, latency requirements, and the computational resources available on the device.
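The latency trade-off can be made concrete with a small budget check. The sketch below is illustrative only: the `Deployment` class, the `choose_deployment` helper, and all timing figures are assumptions made up for this example, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical per-request timing figures, in milliseconds."""
    name: str
    network_round_trip_ms: float  # 0 when inference runs on the device itself
    inference_ms: float

    def total_latency_ms(self) -> float:
        return self.network_round_trip_ms + self.inference_ms


def choose_deployment(options, latency_budget_ms):
    """Return the options that fit the latency budget, fastest first."""
    fitting = [o for o in options if o.total_latency_ms() <= latency_budget_ms]
    return sorted(fitting, key=lambda o: o.total_latency_ms())


# Assumed numbers: the small on-device model is slower per inference but pays
# no network cost; the cloud model is faster per inference but adds a round trip.
edge = Deployment("edge", network_round_trip_ms=0.0, inference_ms=25.0)
cloud = Deployment("cloud", network_round_trip_ms=80.0, inference_ms=5.0)

candidates = choose_deployment([edge, cloud], latency_budget_ms=50.0)
print([c.name for c in candidates])
```

With a 50 ms budget only the edge option qualifies under these assumed figures. Relaxing the budget to 100 ms admits both, at which point privacy and compute limits would decide.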

Connection

Edge intelligence integrates machine learning algorithms directly on edge devices, enabling real-time data processing and decision-making without relying on cloud connectivity. This proximity to data sources reduces latency, enhances privacy, and optimizes bandwidth usage by minimizing data transmission to centralized servers. Implementing machine learning models at the edge supports applications in autonomous vehicles, smart cities, and IoT systems where timely and efficient data analysis is crucial.

Key Terms

Model Training

Model training in machine learning typically occurs on centralized cloud servers with vast computational resources, which makes it practical to process large datasets and complex algorithms. Edge intelligence can shift part of this training to decentralized edge devices, for example through federated learning, where each device trains on its own data and shares only model updates. This keeps raw data on the device, which improves privacy and reduces the volume of data sent to the cloud, at the cost of working within limited local compute.
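One common way to split training between devices and a server is federated averaging. The following is a minimal sketch under stated assumptions: a one-parameter model `y = w * x`, two hypothetical devices with made-up data, and helper names (`local_update`, `federated_average`) invented for this example. Real systems add secure aggregation, client sampling, and much larger models.

```python
def local_update(weight, data, lr=0.1):
    """Run one pass of gradient descent for y = w * x on a device's own data."""
    w = weight
    for x, y in data:
        grad = 2 * (w * x - y) * x  # derivative of squared error (w*x - y)^2
        w -= lr * grad
    return w


def federated_average(local_weights, sample_counts):
    """Combine device weights, weighting each by its local sample count."""
    total = sum(sample_counts)
    return sum(w * n for w, n in zip(local_weights, sample_counts)) / total


# Hypothetical private datasets that never leave their devices; both follow y = 2x.
device_data = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.0, 2.0), (3.0, 6.0), (0.5, 1.0)],
]

global_w = 0.0
for _ in range(20):
    # Each device starts from the shared weight and trains locally.
    local = [local_update(global_w, d) for d in device_data]
    # Only the updated weights, not the raw data, are sent for aggregation.
    global_w = federated_average(local, [len(d) for d in device_data])

print(round(global_w, 3))
```

The shared weight converges toward 2, the slope underlying both datasets, even though the server only ever sees per-device weights.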

Inference

Machine learning inference means passing data through a trained model to generate predictions or insights. It is typically performed on centralized cloud servers with high computational resources. Edge intelligence brings inference closer to the data source by deploying lightweight models on edge devices, often after compressing them with techniques such as quantization or pruning. This reduces latency and bandwidth usage and supports real-time decision-making, though the smaller models may give up some accuracy.
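One widely used way to make a model lightweight enough for an edge device is to store its weights as 8-bit integers instead of 32-bit floats. The sketch below shows symmetric per-tensor quantization in plain Python. The function names and the sample weights are assumptions for illustration; production toolchains apply the same idea with calibration and hardware-specific kernels.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding means each restored weight is off by at most half a scale step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error <= scale / 2 + 1e-12)
```

Each weight now needs one byte instead of four, and the reconstruction error stays within half a quantization step. That bounded error is the accuracy cost paid for the smaller footprint.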

On-device Processing

On-device processing executes machine learning algorithms directly on hardware such as smartphones and IoT sensors, which enables real-time analysis with reduced latency and better privacy. Edge intelligence extends this concept beyond a single device by placing AI capabilities across the network edge, including gateways and local servers, to support distributed computing and reduce reliance on centralized cloud infrastructure.
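The bandwidth saving from on-device processing can be sketched with a simple filter that sends a summary plus any unusual readings instead of the full stream. Everything here is hypothetical: the `summarize_on_device` helper, the threshold, and the sensor values are assumptions chosen for illustration.

```python
def summarize_on_device(readings, threshold):
    """Build a compact payload: summary statistics plus out-of-range readings."""
    alerts = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": sum(readings) / len(readings),
        "alerts": alerts,
    }


# Hypothetical temperature readings collected by one sensor.
readings = [20.1, 20.3, 19.8, 35.2, 20.0, 20.2]
payload = summarize_on_device(readings, threshold=30.0)

# Two summary numbers (count, mean) plus each alert, instead of every reading.
values_sent = 2 + len(payload["alerts"])
print(payload["alerts"], f"{values_sent} values sent instead of {len(readings)}")
```

Here the device uploads three numbers rather than six. The ratio improves as the sampling rate grows, since the summary size stays fixed while the raw stream does not.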

