Generative adversarial networks (GANs) use a two-network architecture. A generator creates data samples, and a discriminator judges whether each sample is real or generated; the discriminator's feedback drives the generator to improve iteratively. Energy-based models (EBMs) define a scalar energy function over data configurations and are trained to assign lower energy to real data, which lets them represent complex distributions flexibly. This article compares the mechanisms and applications of GANs and EBMs and their roles in advancing artificial intelligence.

Why it is important

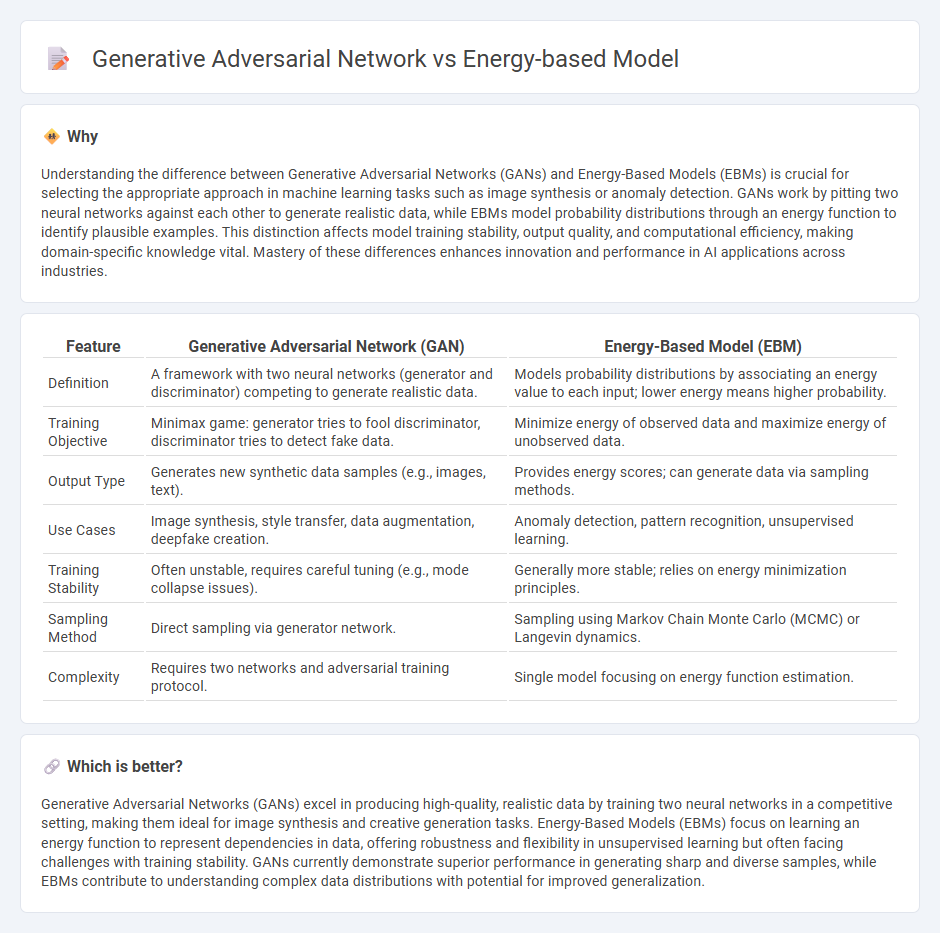

Understanding the difference between Generative Adversarial Networks (GANs) and Energy-Based Models (EBMs) helps in choosing the right approach for machine learning tasks such as image synthesis or anomaly detection. GANs pit two neural networks against each other to generate realistic data, while EBMs model a probability distribution through an energy function that scores how plausible each example is. The choice affects training stability, output quality, and computational cost, so knowing these trade-offs is important when applying either model to a specific domain.

Comparison Table

| Feature | Generative Adversarial Network (GAN) | Energy-Based Model (EBM) |
|---|---|---|
| Definition | A framework in which two neural networks (a generator and a discriminator) compete so that the generator learns to produce realistic data. | Models a probability distribution by assigning a scalar energy to each input; lower energy means higher (unnormalized) probability. |
| Training Objective | Minimax game: the generator tries to fool the discriminator, and the discriminator tries to detect generated data. | Lower the energy of observed data and raise the energy of negative samples, which are typically drawn from the model itself. |
| Output Type | Generates new synthetic data samples (e.g., images, text). | Produces energy scores for inputs; new data can be generated with sampling methods. |
| Use Cases | Image synthesis, style transfer, data augmentation, deepfake creation. | Anomaly detection, pattern recognition, unsupervised learning. |
| Training Stability | Often unstable; requires careful tuning and can suffer from mode collapse. | Avoids the adversarial game, but training can still be unstable because it depends on the quality of MCMC samples. |
| Sampling Method | Direct sampling: one forward pass through the generator network. | Iterative sampling with Markov chain Monte Carlo (MCMC) methods such as Langevin dynamics. |
| Complexity | Requires two networks and an adversarial training procedure. | A single network that estimates the energy function, though training usually runs MCMC sampling in its inner loop. |
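The EBM side of the "Training Objective" row can be sketched in a few lines of plain Python. This is a minimal illustration under strong simplifying assumptions: a hypothetical one-parameter energy E_mu(x) = 0.5 * (x - mu)**2, negative samples drawn with unadjusted Langevin dynamics started from noise, and plain gradient descent on the negative log-likelihood. The helper names `langevin_chain` and `train_toy_ebm` are made up for this example; real EBMs use a neural network for the energy, but the positive/negative structure of the update is the same.

```python
import math
import random

# Toy EBM (illustrative assumption): E_mu(x) = 0.5 * (x - mu)**2,
# so p_mu(x) is proportional to exp(-E_mu(x)), a unit-variance Gaussian centered at mu.

def langevin_chain(mu, rng, steps=100, step_size=0.1):
    """Run one unadjusted Langevin chain targeting p_mu and return its final state."""
    x = rng.gauss(0.0, 1.0)  # start from noise, as in short-run MCMC
    for _ in range(steps):
        grad_x = x - mu  # dE/dx
        x = x - step_size * grad_x + math.sqrt(2.0 * step_size) * rng.gauss(0.0, 1.0)
    return x

def train_toy_ebm(data, rng, mu=0.0, lr=0.1, iters=100, n_neg=32):
    """Fit mu with the contrastive maximum-likelihood gradient."""
    for _ in range(iters):
        negatives = [langevin_chain(mu, rng) for _ in range(n_neg)]
        # dE/dmu = -(x - mu). The likelihood gradient lowers the energy of
        # observed data (positive term) and raises the energy of model samples
        # (negative term).
        grad_pos = sum(-(x - mu) for x in data) / len(data)
        grad_neg = sum(-(x - mu) for x in negatives) / len(negatives)
        mu -= lr * (grad_pos - grad_neg)
    return mu

rng = random.Random(0)
data = [rng.gauss(3.0, 1.0) for _ in range(500)]
mu_hat = train_toy_ebm(data, rng)
print(round(mu_hat, 2))  # expected to land near the data mean (about 3)
```

Because the negatives come from the current model, the update reduces to mu -= lr * (mean(negatives) - mean(data)), which stops moving mu once model samples and data agree on average. The table's stability and complexity caveats show up here too: every parameter update pays for a batch of MCMC chains, and poorly mixed chains give biased gradients.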

Which is better?

Neither model is better in every setting. Generative Adversarial Networks (GANs) excel at producing high-quality, realistic data by training two neural networks in a competitive setting, which makes them well suited to image synthesis and creative generation tasks. Energy-Based Models (EBMs) learn an energy function that represents dependencies in the data, offering robustness and flexibility in unsupervised learning, but they are often hard to train stably and slow to sample from. GANs have generally produced sharper and more diverse samples at a much lower sampling cost, while EBMs provide an explicit (unnormalized) score for any input, which helps in modeling complex data distributions and may improve generalization.

Connection

Generative Adversarial Networks (GANs) and Energy-Based Models (EBMs) are connected in how they learn a data distribution: both optimize an objective that separates real data from generated or unlikely samples. In a GAN, the generator and discriminator play a minimax game; when the discriminator is optimal, the original GAN objective amounts to minimizing the Jensen-Shannon divergence between the data distribution and the generator's distribution. EBMs define an energy function over data configurations and are trained to assign lower energy to real data and higher energy to generated or unlikely samples. Both frameworks therefore rely on contrasting real data with synthetic samples, adversarially in GANs and through contrastive training in EBMs, to improve generative performance and data representation in unsupervised settings.
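The two objectives can be written side by side. The first line is the standard GAN minimax value function; the second gives the optimal discriminator for a fixed generator and the resulting Jensen-Shannon form; the last two define an EBM density through its energy and the gradient of its negative log-likelihood. The notation (p_data for the data distribution, p_g for the generator's distribution, p_z for the noise prior, Z(θ) for the partition function) is chosen for this sketch.

```latex
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}, \qquad V(D^*_G, G) = -\log 4 + 2\,\mathrm{JSD}\big(p_{\text{data}} \,\|\, p_g\big)

p_\theta(x) = \frac{\exp\big(-E_\theta(x)\big)}{Z(\theta)}, \qquad Z(\theta) = \int \exp\big(-E_\theta(x)\big)\,dx

\nabla_\theta \big[-\log p_\theta(x)\big] = \nabla_\theta E_\theta(x) - \mathbb{E}_{x' \sim p_\theta}\big[\nabla_\theta E_\theta(x')\big]
```

In the last line, the first term lowers the energy of a real example and the second raises the energy of samples drawn from the model; that expectation is what requires MCMC. The GAN discriminator makes an analogous real-versus-generated comparison, but its generated samples come from a separate generator network rather than from the model's own energy landscape.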

Key Terms

Energy Function

Energy-Based Models (EBMs) use an energy function to assign a scalar energy to each data configuration; learning lowers the energy of observed data and raises it for unobserved data. Generative Adversarial Networks (GANs) do not define an explicit energy function. Instead, a generator and a discriminator play a zero-sum game through which the generator implicitly learns the data distribution. As a result, an EBM can score any input directly, whereas a GAN's main product is its ability to generate samples.
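To make "lower energy means higher probability" concrete, the sketch below converts energies into Boltzmann probabilities p(x) = exp(-E(x)) / Z over a small, hypothetical set of candidates; the quadratic `energy` function and the `boltzmann_probs` helper are illustrative assumptions. With five candidates the partition function Z is just a sum, whereas for high-dimensional continuous data it is an intractable integral, which is why EBMs fall back on MCMC.

```python
import math

def boltzmann_probs(energies, temperature=1.0):
    """Turn energies into probabilities p_i = exp(-E_i / T) / Z."""
    scaled = [-e / temperature for e in energies]
    m = max(scaled)  # subtract the max before exponentiating for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    z = sum(weights)  # partition function, up to the constant factor exp(m)
    return [w / z for w in weights]

# Hypothetical energy function: a quadratic bowl with its minimum at x = 2.
def energy(x):
    return (x - 2.0) ** 2

candidates = [0.0, 1.0, 2.0, 3.0, 4.0]
probs = boltzmann_probs([energy(x) for x in candidates])
for x, p in zip(candidates, probs):
    print(f"x={x:.1f}  E={energy(x):.1f}  p={p:.3f}")
```

The `temperature` parameter is an optional addition here: raising it flattens the distribution, and lowering it concentrates probability on the minimum-energy candidate.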

Discriminator

Energy-based models have no separate discriminator; the energy function plays a similar role by assigning low energy to real samples and higher energy to fake ones, capturing the underlying data distribution through energies rather than explicit, normalized probability densities. Energy-based GANs make this link explicit by using an energy function as the discriminator. In a standard generative adversarial network (GAN), the discriminator is a binary classifier that distinguishes real from generated data, and its gradients guide the generator toward more realistic samples. How well the discriminator is balanced against the generator strongly affects training dynamics and final sample quality.
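The GAN discriminator's role as a binary classifier can be expressed through its losses. This is a minimal sketch assuming the discriminator outputs raw logits (pre-sigmoid scores); the function names are hypothetical, and the generator loss shown is the widely used non-saturating variant, which maximizes log D(G(z)) instead of minimizing log(1 - D(G(z))).

```python
import math

def log_sigmoid(t):
    """Numerically stable log(sigmoid(t))."""
    return -math.log1p(math.exp(-t)) if t >= 0 else t - math.log1p(math.exp(t))

def discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = sum(-log_sigmoid(t) for t in real_logits) / len(real_logits)
    # log(1 - sigmoid(t)) equals log_sigmoid(-t)
    fake_term = sum(-log_sigmoid(-t) for t in fake_logits) / len(fake_logits)
    return real_term + fake_term

def generator_loss_nonsaturating(fake_logits):
    """Generator wants D(G(z)) near 1, i.e. it minimizes -log D(G(z))."""
    return sum(-log_sigmoid(t) for t in fake_logits) / len(fake_logits)

confident = discriminator_loss(real_logits=[4.0, 3.5], fake_logits=[-4.0, -3.0])
confused = discriminator_loss(real_logits=[0.0, 0.0], fake_logits=[0.0, 0.0])
print(f"confident D loss: {confident:.3f}, confused D loss: {confused:.3f}")
```

When the discriminator cannot tell real from fake (all logits 0), its loss equals 2 log 2, about 1.386; a confident, correct discriminator drives it toward 0. That is also when the non-saturating generator loss is largest and still provides a useful gradient, whereas log(1 - D(G(z))) would flatten out.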

Sampling

Energy-Based Models (EBMs) assign low energy to real data, so generating a sample means finding low-energy regions, typically with MCMC methods such as Langevin dynamics (noisy gradient descent on the energy). This is computationally intensive and usually requires careful tuning of the step size and number of steps to produce high-quality samples. Generative Adversarial Networks (GANs) train a generator to produce data that fools the discriminator, so sampling is direct: one forward pass per sample. This makes sample generation much faster, at the risk of mode collapse, where the generator covers only part of the data distribution.
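The cost gap between the two sampling strategies shows up even in one dimension. In this sketch the `generator` is a hand-written affine map standing in for a trained GAN generator, and the EBM is assumed to have learned the matching quadratic energy for the target N(3, 1); both are illustrative assumptions, not trained models. Each GAN-style sample costs one forward pass, while each Langevin sample costs `steps` gradient evaluations.

```python
import math
import random

MU, SIGMA = 3.0, 1.0  # hypothetical target distribution: N(3, 1)

def generator(z):
    """Stand-in for a trained GAN generator: one forward pass maps noise to a sample."""
    return MU + SIGMA * z

def energy_grad(x):
    """Gradient of the matching energy E(x) = (x - MU)**2 / (2 * SIGMA**2)."""
    return (x - MU) / SIGMA**2

def langevin_sample(rng, steps=200, step_size=0.05):
    """Unadjusted Langevin dynamics: noisy gradient descent on the energy."""
    x = rng.gauss(0.0, 1.0)
    for _ in range(steps):
        x += -step_size * energy_grad(x) + math.sqrt(2.0 * step_size) * rng.gauss(0.0, 1.0)
    return x, steps  # the sample and the number of gradient evaluations it used

def mean(xs):
    return sum(xs) / len(xs)

rng = random.Random(0)
n = 2000
gan_samples = [generator(rng.gauss(0.0, 1.0)) for _ in range(n)]  # one pass each
ebm_runs = [langevin_sample(rng) for _ in range(n)]
ebm_samples = [x for x, _ in ebm_runs]
grad_evals = sum(k for _, k in ebm_runs)

print(f"GAN-style mean {mean(gan_samples):.2f} using {n} forward passes")
print(f"Langevin mean  {mean(ebm_samples):.2f} using {grad_evals} gradient evaluations")
```

Both sample sets should have means close to 3, but the Langevin sampler spends 200 gradient evaluations per sample. Because this is the unadjusted Langevin algorithm, its finite step size also adds a small bias (here a stationary variance slightly above 1); the Metropolis-adjusted variant (MALA) removes that bias at extra cost.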

dowidth.com
