Algorithmic bias auditing systematically examines AI systems to detect and measure unfair biases in their decision-making. Fairness-aware machine learning develops models that incorporate fairness constraints to prevent biased outcomes. This article compares the two approaches and shows how they work together to support equitable AI development.

Why it is important

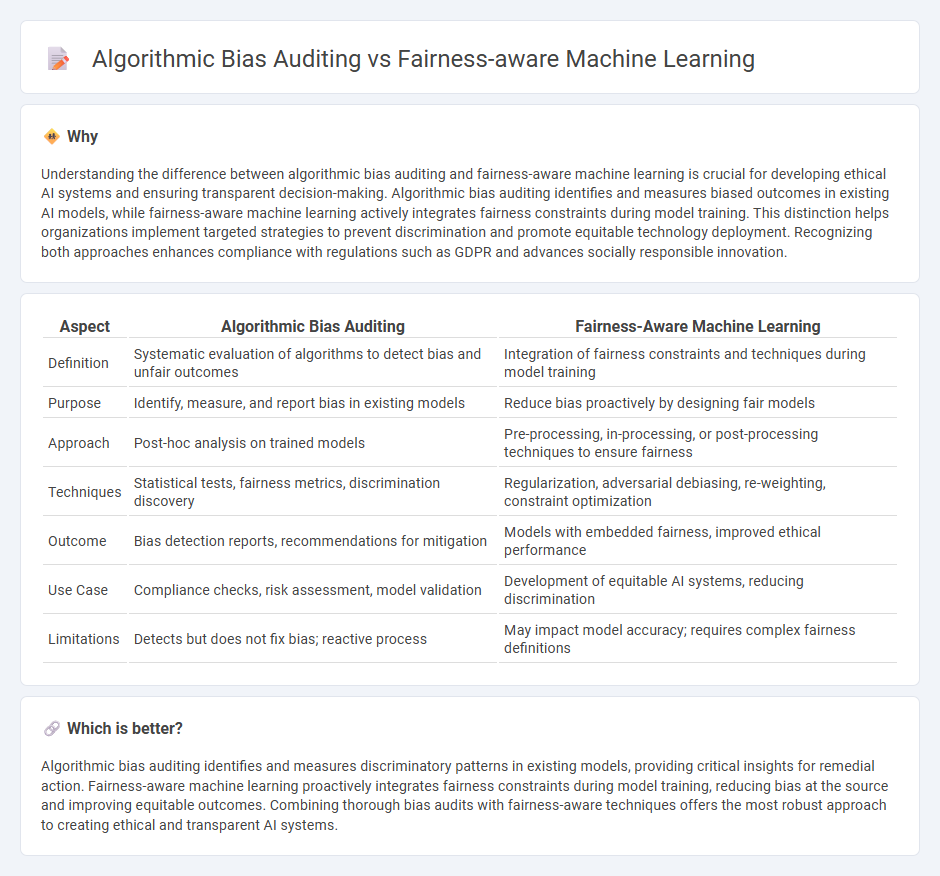

Understanding the difference between algorithmic bias auditing and fairness-aware machine learning is crucial for developing ethical AI systems and ensuring transparent decision-making. Algorithmic bias auditing identifies and measures biased outcomes in existing AI models, while fairness-aware machine learning actively builds fairness constraints into model development. This distinction helps organizations choose targeted strategies to prevent discrimination and promote equitable technology deployment. Applying both approaches can also support compliance with regulations such as the GDPR and advance socially responsible innovation.

Comparison Table

| Aspect | Algorithmic Bias Auditing | Fairness-Aware Machine Learning |
|---|---|---|
| Definition | Systematic evaluation of algorithms to detect bias and unfair outcomes | Integration of fairness constraints and techniques into model development |
| Purpose | Identify, measure, and report bias in existing models | Reduce bias proactively by designing fair models |
| Approach | Post-hoc analysis of trained or deployed models | Pre-processing, in-processing, or post-processing techniques to promote fairness |
| Techniques | Statistical tests, fairness metrics, discrimination discovery | Regularization, adversarial debiasing, re-weighting, constrained optimization |
| Outcome | Bias detection reports, recommendations for mitigation | Models with fairness built in and reduced disparities on the chosen fairness metrics |
| Use Case | Compliance checks, risk assessment, model validation | Development of equitable AI systems, reducing discrimination |
| Limitations | Detects but does not fix bias; reactive process | May reduce predictive accuracy; requires choosing among competing fairness definitions |
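Re-weighting, one of the pre-processing techniques in the table, can be illustrated with a classic reweighing scheme (associated with Kamiran and Calders). It assigns each training example the weight P(group) · P(label) / P(group, label), so that group membership and label are statistically independent in the weighted data. The sketch below is a minimal, hand-written illustration that assumes a single categorical protected attribute and binary 0/1 labels. The function names and data are hypothetical and are not any library's API.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Hypothetical helper: assumes one categorical protected attribute
    and binary 0/1 labels.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        # Count expected in this (group, label) cell if group and label were independent...
        expected = group_counts[g] * label_counts[y] / n
        # ...divided by the count actually observed in that cell.
        weights.append(expected / joint_counts[(g, y)])
    return weights


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group (hypothetical helper)."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]  # unweighted positive rates: 0.75 for "a", 0.25 for "b"
weights = reweighing_weights(groups, labels)
print(weights)  # the under-represented cells ("a", 0) and ("b", 1) get weight 2.0
print(weighted_positive_rate(groups, labels, weights, "a"))
print(weighted_positive_rate(groups, labels, weights, "b"))
```

After reweighing, both groups have the same weighted positive rate (0.5 up to floating-point rounding). In practice the weights would be passed to a learner that accepts per-example weights, such as the `sample_weight` argument of `fit` in many scikit-learn estimators.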

Which is better?

Algorithmic bias auditing identifies and measures discriminatory patterns in existing models, providing critical insights for remedial action. Fairness-aware machine learning proactively integrates fairness constraints during model training, reducing bias at the source and improving equitable outcomes. Combining thorough bias audits with fairness-aware techniques offers the most robust approach to creating ethical and transparent AI systems.

Connection

Algorithmic bias auditing systematically evaluates machine learning models to identify and measure disparities affecting protected groups, which supports ethical outcomes. Fairness-aware machine learning integrates bias mitigation techniques during model development and directly addresses issues highlighted by audits. Together, these processes form a feedback loop: audits surface disparities, mitigation addresses them, and follow-up audits check whether the mitigation worked. This loop enhances transparency and promotes equitable AI systems.
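The audit, mitigate, and re-audit cycle can be sketched in a few lines. This is a hypothetical illustration, not a prescribed procedure. It audits the gap in selection rates between groups under one shared score threshold. It then applies one simple post-processing mitigation, choosing a per-group threshold so every group is selected at the same target rate, and finally re-audits. The scores, group names, and helper functions are all made up for illustration.

```python
def selection_rate(scores, threshold):
    """Share of scores at or above the decision threshold."""
    return sum(s >= threshold for s in scores) / len(scores)


def audit(scores_by_group, thresholds):
    """Audit step: per-group selection rates and the largest gap between them."""
    rates = {g: selection_rate(s, thresholds[g]) for g, s in scores_by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


def per_group_thresholds(scores_by_group, target_rate):
    """Mitigation step (post-processing): pick each group's threshold so that
    roughly target_rate of that group is selected. Tied scores can push a
    group's selection rate above the target."""
    thresholds = {}
    for g, scores in scores_by_group.items():
        ranked = sorted(scores, reverse=True)
        k = min(len(ranked), max(1, round(target_rate * len(ranked))))
        thresholds[g] = ranked[k - 1]  # the k-th highest score becomes the cut-off
    return thresholds


scores_by_group = {
    "a": [0.9, 0.8, 0.7, 0.6, 0.3],
    "b": [0.65, 0.55, 0.45, 0.35, 0.2],
}

# 1. Audit the model as deployed, with one shared threshold of 0.5.
gap_before, rates_before = audit(scores_by_group, {"a": 0.5, "b": 0.5})

# 2. Mitigate: keep the overall selection rate (0.6 here) but equalize it across groups.
thresholds = per_group_thresholds(scores_by_group, target_rate=0.6)

# 3. Re-audit to check whether the mitigation closed the gap.
gap_after, rates_after = audit(scores_by_group, thresholds)
print(rates_before, gap_before)
print(rates_after, gap_after)
```

Group-specific thresholds are only one of the post-processing options mentioned in the comparison table. Whether they are appropriate depends on the application and its legal context.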

Key Terms

Fairness Metrics (e.g., demographic parity, equal opportunity)

Fairness-aware machine learning integrates fairness constraints directly into model training using metrics such as demographic parity, which requires equal positive-prediction (selection) rates across groups, and equal opportunity, which requires equal true positive rates across groups. Algorithmic bias auditing evaluates trained or deployed models by measuring gaps in these same metrics, so that detected biases can then be mitigated. The two approaches therefore share a vocabulary of metrics but apply it at different stages of the AI lifecycle.
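Both metrics can be computed directly from predictions. The sketch below assumes binary 0/1 labels and predictions and compares two groups. It reports each metric as a difference (group `a` minus group `b`), so 0 means parity. The function names follow common usage but are hand-written here, not imported from a library. Sign conventions vary between tools, so check which group is subtracted from which.

```python
def _mean(values):
    """Average of a list, or NaN when the list is empty."""
    return sum(values) / len(values) if values else float("nan")


def demographic_parity_difference(y_pred, groups, a, b):
    """P(Y_hat = 1 | group a) - P(Y_hat = 1 | group b): gap in selection rates."""
    def selection_rate(group):
        return _mean([p for p, g in zip(y_pred, groups) if g == group])
    return selection_rate(a) - selection_rate(b)


def equal_opportunity_difference(y_true, y_pred, groups, a, b):
    """P(Y_hat = 1 | Y = 1, group a) - P(Y_hat = 1 | Y = 1, group b): gap in true positive rates."""
    def true_positive_rate(group):
        return _mean([p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1])
    return true_positive_rate(a) - true_positive_rate(b)


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(demographic_parity_difference(y_pred, groups, "a", "b"))         # 0.75 - 0.25 = 0.5
print(equal_opportunity_difference(y_true, y_pred, groups, "a", "b"))  # 1.0 - 0.5 = 0.5
```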

Disparate Impact Analysis

Fairness-aware machine learning uses algorithms designed to minimize discrimination during model development, while algorithmic bias auditing evaluates existing models to identify discriminatory impacts. Disparate impact analysis quantifies the extent to which decisions disproportionately affect protected groups, commonly as a ratio of favorable-outcome (selection) rates between a protected group and a reference group. It serves as a key metric both for proactive fairness adjustments and for retrospective bias assessments.
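A common way to compute disparate impact is to divide the protected group's selection rate by a reference group's selection rate. A ratio of 1 means equal rates. Ratios below 0.8 are often flagged under the "four-fifths rule" from US employment-selection guidance, but that threshold is a screening heuristic rather than a universal standard. The minimal sketch below assumes binary 0/1 predictions. The function names and the `threshold` default are illustrative choices, not a library API.

```python
def disparate_impact_ratio(y_pred, groups, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    def selection_rate(group):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        if not preds:
            raise ValueError(f"no examples for group {group!r}")
        return sum(preds) / len(preds)

    reference_rate = selection_rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has a selection rate of 0; the ratio is undefined")
    return selection_rate(protected) / reference_rate


def flags_disparate_impact(ratio, threshold=0.8):
    """Four-fifths rule of thumb: flag ratios below the threshold (0.8 by default)."""
    return ratio < threshold


y_pred = [1, 1, 0, 0, 0, 1, 1, 1, 1, 0]
groups = ["protected"] * 5 + ["reference"] * 5

ratio = disparate_impact_ratio(y_pred, groups, "protected", "reference")
print(ratio, flags_disparate_impact(ratio))  # 0.4 / 0.8 = 0.5, so it is flagged: 0.5 True
```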

Bias Detection Tools

Fairness-aware machine learning integrates bias mitigation techniques directly into model training to reduce discrimination. Algorithmic bias auditing, by contrast, identifies and measures biases after training or deployment using bias detection tools such as AIF360 and Fairlearn, both of which also include mitigation algorithms. These tools report quantitative metrics such as disparate impact, equal opportunity difference, and demographic parity to evaluate model fairness across protected groups.
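Many of the metrics such tools report start from a disaggregated view: a metric computed separately for each group, plus a summary of the gap between groups. The sketch below computes that kind of report by hand for any number of groups, assuming binary 0/1 labels and predictions. It is not the API of AIF360 or Fairlearn, which provide their own, more complete implementations. The function name and data are hypothetical.

```python
import math
from collections import defaultdict


def group_report(y_true, y_pred, groups):
    """Per-group selection rate and true positive rate, plus the largest
    between-group gap for each metric. Assumes 0/1 labels and predictions."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "positives": 0, "true_pos": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += p
        c["positives"] += t
        c["true_pos"] += t * p  # 1 only when the label and the prediction are both positive

    report = {}
    for g, c in counts.items():
        report[g] = {
            "selection_rate": c["selected"] / c["n"],
            # TPR is undefined for a group with no positive labels.
            "tpr": c["true_pos"] / c["positives"] if c["positives"] else math.nan,
        }

    gaps = {}
    for metric in ("selection_rate", "tpr"):
        values = [r[metric] for r in report.values() if not math.isnan(r[metric])]
        gaps[metric] = max(values) - min(values) if values else math.nan
    return report, gaps


y_true = [1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
groups = ["a"] * 4 + ["b"] * 4 + ["c"] * 4

report, gaps = group_report(y_true, y_pred, groups)
for group, metrics in report.items():
    print(group, metrics)
print("largest gaps:", gaps)
```

Reporting the largest gap across all groups, rather than a single pairwise difference, is one simple way to summarize fairness when a protected attribute has more than two values.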

dowidth.com
