Diffusion models generate data by learning to reverse a gradual noising process, turning random noise into samples through many small denoising steps; they are best known for image synthesis. Bayesian Neural Networks apply Bayesian inference to network parameters, so each prediction comes with an estimate of its uncertainty, which supports decision-making when data are scarce or noisy. The sections below compare the two approaches and where each is used.

Why it is important

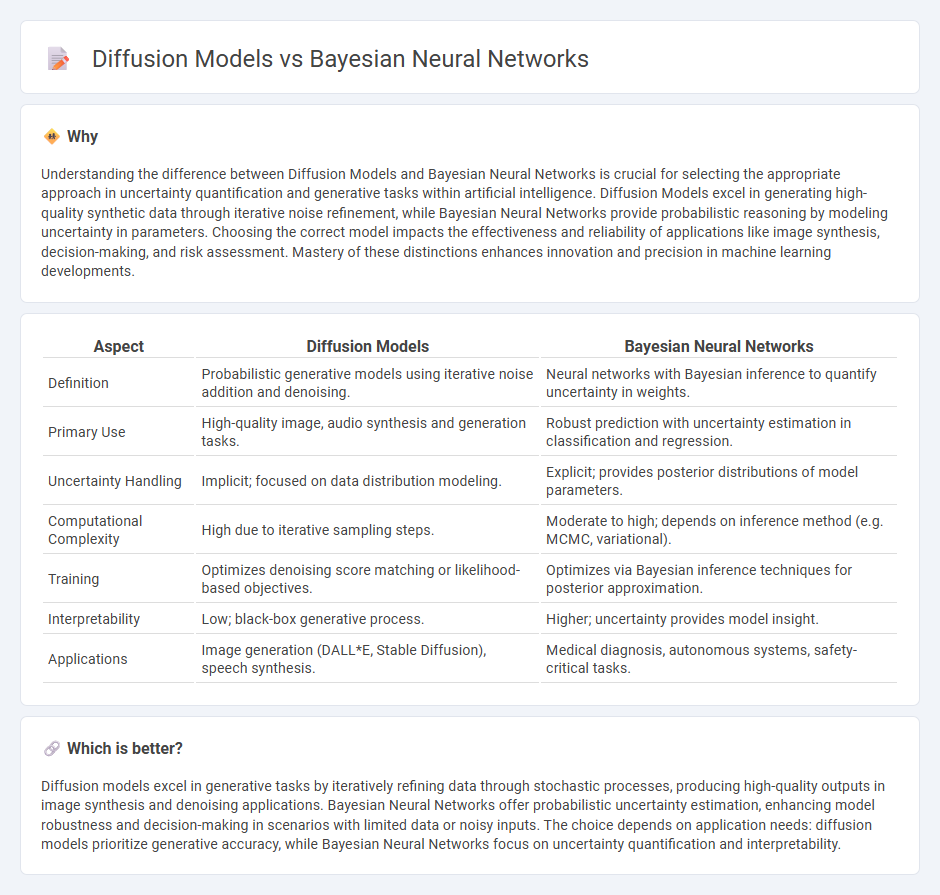

Knowing how Diffusion Models and Bayesian Neural Networks differ helps in choosing between them, because they solve different problems: the first is a generative method, the second a way to quantify uncertainty in predictions. Diffusion Models produce high-quality synthetic data through iterative denoising, while Bayesian Neural Networks reason probabilistically by modeling uncertainty in their parameters. The choice affects how effective and reliable an application is, whether that application is image synthesis, decision-making, or risk assessment.

Comparison Table

| Aspect | Diffusion Models | Bayesian Neural Networks |
|---|---|---|
| Definition | Probabilistic generative models that learn to reverse an iterative noise-adding process. | Neural networks that use Bayesian inference to quantify uncertainty in their weights. |
| Primary Use | High-quality image and audio synthesis and other generation tasks. | Robust prediction with uncertainty estimation in classification and regression. |
| Uncertainty Handling | Implicit; focused on modeling the data distribution. | Explicit; provides posterior distributions over model parameters. |
| Computational Complexity | High, due to iterative sampling steps. | Moderate to high; depends on the inference method (e.g., MCMC, variational inference). |
| Training | Optimizes denoising score matching or likelihood-based objectives. | Approximates the posterior with Bayesian inference techniques. |
| Interpretability | Low; black-box generative process. | Higher; uncertainty estimates give insight into model confidence. |
| Applications | Image generation (DALL·E 2, Stable Diffusion), speech synthesis. | Medical diagnosis, autonomous systems, safety-critical tasks. |

Which is better?

Neither is better in general, because the two address different goals. Diffusion models excel at generative tasks: they refine data iteratively through a stochastic process and produce high-quality outputs in image synthesis and denoising. Bayesian Neural Networks provide probabilistic uncertainty estimates, which makes predictions more robust when data are limited or inputs are noisy. The choice depends on the application: diffusion models when sample quality matters most, Bayesian Neural Networks when uncertainty quantification and interpretability matter most.

Connection

Diffusion models and Bayesian Neural Networks are connected by a shared reliance on probabilistic modeling, although they place the randomness in different parts of the pipeline. Diffusion models use stochastic differential equations (or their discrete-time counterparts) to transform noise into structured data step by step, so the randomness lives in the samples. Bayesian Neural Networks put probability distributions over weights, so the randomness lives in the parameters and carries through to the predictions. The two can in principle be combined, for example by attaching principled uncertainty estimates to a generative model, with the aim of making generative tasks more robust.
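As a sketch of the stochastic differential equation view mentioned above, the following uses the notation common in the score-based diffusion literature. The symbols $f$, $g$, $w$, $\bar{w}$, and $p_t$ do not appear in this article and are introduced here only for illustration.

$$
\mathrm{d}x = f(x, t)\,\mathrm{d}t + g(t)\,\mathrm{d}w \qquad \text{(forward: data to noise)}
$$

$$
\mathrm{d}x = \left[ f(x, t) - g(t)^2\,\nabla_x \log p_t(x) \right]\mathrm{d}t + g(t)\,\mathrm{d}\bar{w} \qquad \text{(reverse: noise to data)}
$$

Here $p_t$ is the distribution of the noised data at time $t$, $w$ is a standard Wiener process, and $\bar{w}$ is a Wiener process running backward in time. The score $\nabla_x \log p_t(x)$ is not known in practice; it is the quantity a diffusion model's network is trained to approximate.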

Key Terms

Uncertainty Quantification

Bayesian Neural Networks (BNNs) quantify uncertainty by learning a posterior distribution over weights, which lets them report how confident a prediction is when data are variable or samples are few. Diffusion models are stochastic in a different sense: each run of the sampling process draws fresh noise, so the spread of generated samples reflects the variability of the data distribution in high-dimensional spaces rather than uncertainty about the model's parameters.
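To make the idea of a posterior over weights concrete, here is a minimal sketch that assumes the smallest possible "network": a single weight `w` in `y = w * x + noise`, with a Gaussian prior and Gaussian noise so that the posterior has a closed form. This is a stand-in for illustration, not a full BNN; the function names and the default precisions are hypothetical choices, and real BNNs replace the closed form with MCMC or variational inference.

```python
import math
import random


def posterior_over_weight(xs, ys, prior_precision=1.0, noise_precision=25.0):
    """Closed-form Gaussian posterior for w in y = w * x + noise.

    Assumes prior w ~ N(0, 1 / prior_precision) and Gaussian observation
    noise with variance 1 / noise_precision. Returns (mean, variance).
    """
    precision = prior_precision + noise_precision * sum(x * x for x in xs)
    mean = noise_precision * sum(x * y for x, y in zip(xs, ys)) / precision
    return mean, 1.0 / precision


def predictive(x_new, w_mean, w_var, noise_precision=25.0):
    """Predictive mean and variance at x_new.

    The variance has two parts: observation noise (1 / noise_precision)
    and weight uncertainty (x_new^2 * w_var).
    """
    return w_mean * x_new, 1.0 / noise_precision + x_new ** 2 * w_var


def monte_carlo_epistemic(x_new, w_mean, w_var, rng, num_samples=5000):
    """Estimate the weight-uncertainty part of the prediction by sampling.

    This mirrors what a BNN does when no closed form exists: draw weights
    from the (approximate) posterior and look at the spread of predictions.
    """
    preds = [rng.gauss(w_mean, math.sqrt(w_var)) * x_new for _ in range(num_samples)]
    mean = sum(preds) / len(preds)
    var = sum((p - mean) ** 2 for p in preds) / len(preds)
    return mean, var
```

Fitting the same model on a handful of points and then on a few hundred should shrink the posterior variance, and the predictive variance should grow as `x_new` moves away from zero. Both effects are the kind of behavior the paragraph above attributes to BNNs.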

Probabilistic Modeling

Bayesian Neural Networks (BNNs) place probability distributions over weights instead of fixing each weight to a single value, so their predictions are distributions too and can support risk-aware decisions. Diffusion models are probabilistic by construction: they define a stochastic process that corrupts data with noise and learn to reverse it, generating data by iteratively refining noisy samples, which works well for high-dimensional generative modeling.
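Written out, "distributions over weights" amounts to Bayes' rule applied to the weights, followed by averaging predictions over the result. The symbols below ($w$ for weights, $\mathcal{D}$ for training data, $x^*$ and $y^*$ for a new input and its output, $q$ for an approximate posterior, $S$ for the number of samples) are notation introduced here for illustration.

$$
p(w \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid w)\, p(w)}{p(\mathcal{D})}
$$

$$
p(y^* \mid x^*, \mathcal{D}) = \int p(y^* \mid x^*, w)\, p(w \mid \mathcal{D})\, \mathrm{d}w \;\approx\; \frac{1}{S} \sum_{s=1}^{S} p(y^* \mid x^*, w_s), \qquad w_s \sim q(w)
$$

The integral is intractable for realistic networks, which is why the posterior is usually approximated, with $q(w)$ standing for either MCMC samples or a fitted variational distribution.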

Generative Processes

Bayesian Neural Networks are not generative models of data in the usual sense. What they generate is a distribution of predictions: sampling weights from the posterior and running the network yields a range of plausible outputs for the same input. Diffusion models generate the data itself, refining noise into coherent samples step by step with a reverse stochastic differential equation or its discrete-time equivalent, and they are strongest in high-fidelity image synthesis and the generation of temporal data such as audio.
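The loop of "refine noise into samples" can be sketched in one dimension. The example below makes a strong simplifying assumption: the data are Gaussian, so the score of every noised distribution is known exactly and no neural network is needed. In a real diffusion model that score is learned. The function names, the linear noise schedule, and its parameters are hypothetical choices for illustration.

```python
import math
import random


def make_schedule(num_steps=200, beta_min=1e-4, beta_max=0.05):
    """Linear noise schedule: per-step betas and cumulative products of (1 - beta)."""
    betas = [beta_min + (beta_max - beta_min) * t / (num_steps - 1)
             for t in range(num_steps)]
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def forward_noise(x0, t, alpha_bars, rng):
    """Forward process in closed form: jump from clean x0 to the noised value at step t."""
    a = alpha_bars[t]
    return math.sqrt(a) * x0 + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)


def gaussian_score(x, t, alpha_bars, data_mean, data_std):
    """Exact score of the noised marginal, valid only for N(data_mean, data_std^2) data."""
    a = alpha_bars[t]
    mean_t = math.sqrt(a) * data_mean
    var_t = a * data_std ** 2 + (1.0 - a)
    return -(x - mean_t) / var_t


def reverse_sample(betas, alpha_bars, data_mean, data_std, rng):
    """Start from pure noise and apply one denoising update per step, last step first."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(len(betas))):
        score = gaussian_score(x, t, alpha_bars, data_mean, data_std)
        # Drift toward higher-density regions, then rescale.
        x = (x + betas[t] * score) / math.sqrt(1.0 - betas[t])
        if t > 0:
            # Re-inject a little noise on every step except the final one.
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)
    return x
```

Calling `reverse_sample` many times with, say, `data_mean=3.0` and `data_std=0.5` should give samples whose mean and spread are close to those values, even though every run starts from standard normal noise. Replacing `gaussian_score` with a trained network is, roughly, what separates this toy from a practical diffusion model.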

Source and External Links

What is a Bayesian Neural Network? - Databricks - Bayesian Neural Networks (BNNs) extend standard neural networks by treating model parameters as probability distributions, enabling uncertainty estimation and helping control overfitting through Bayesian inference.

A Beginner's Guide to the Bayesian Neural Network - Coursera - In a Bayesian neural network, each weight is represented as a distribution rather than a fixed value, allowing the model to express confidence in its predictions and learn robustly even from small or noisy datasets.

Tutorial 1: Bayesian Neural Networks with Pyro - Bayesian neural networks use probabilistic modeling of weights (e.g., Gaussian priors) and likelihoods (e.g., Gaussian for outputs), enabling the network to estimate prediction uncertainty through variational inference or similar approximation methods.

dowidth.com
