Retrieval-Augmented Generation (RAG) improves a language model's accuracy and contextual relevance by retrieving material from external knowledge bases at generation time. Ensemble learning combines multiple machine learning models so that the strengths of different algorithms offset each other's weaknesses, improving predictive performance and robustness. The sections below compare the two techniques, their benefits, and their applications.

Why it is important

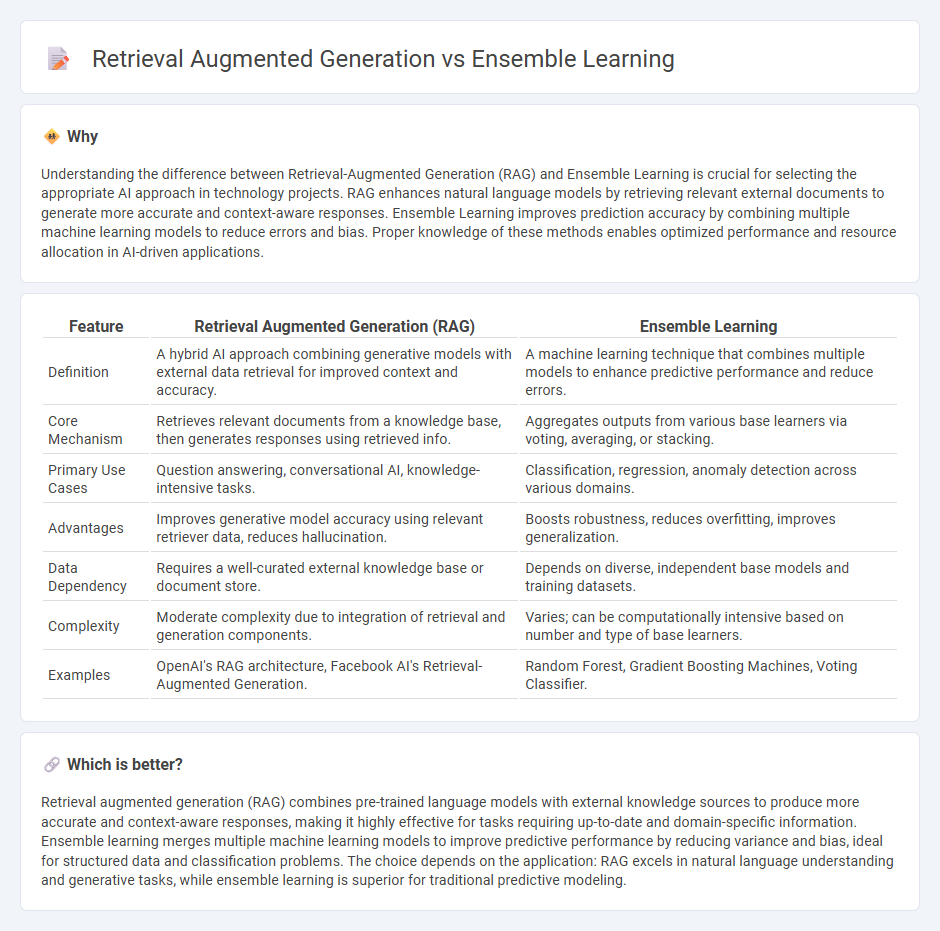

Understanding the difference between Retrieval-Augmented Generation (RAG) and Ensemble Learning is crucial for selecting the appropriate AI approach in technology projects. RAG enhances natural language models by retrieving relevant external documents to generate more accurate and context-aware responses. Ensemble Learning improves prediction accuracy by combining multiple machine learning models to reduce errors and bias. Proper knowledge of these methods enables optimized performance and resource allocation in AI-driven applications.

Comparison Table

| Feature | Retrieval-Augmented Generation (RAG) | Ensemble Learning |
|---|---|---|
| Definition | A hybrid AI approach combining generative models with external data retrieval for improved context and accuracy. | A machine learning technique that combines multiple models to enhance predictive performance and reduce errors. |
| Core Mechanism | Retrieves relevant documents from a knowledge base, then generates a response conditioned on the retrieved text. | Aggregates outputs from various base learners via voting, averaging, or stacking. |
| Primary Use Cases | Question answering, conversational AI, knowledge-intensive tasks. | Classification, regression, anomaly detection across various domains. |
| Advantages | Improves generative model accuracy by grounding output in retrieved data; reduces hallucination. | Boosts robustness, reduces overfitting, improves generalization. |
| Data Dependency | Requires a well-curated external knowledge base or document store. | Depends on diverse, independent base models and training datasets. |
| Complexity | Moderate; retrieval and generation components must be integrated and kept in sync. | Varies; can be computationally intensive depending on the number and type of base learners. |
| Examples | Facebook AI's Retrieval-Augmented Generation model (Lewis et al., 2020). | Random Forest, Gradient Boosting Machines, Voting Classifier. |
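The voting and averaging mechanisms named in the Core Mechanism row can be illustrated with a minimal Python sketch. The labels, probabilities, and function names below are hypothetical and chosen only for illustration; real ensembles would obtain these values from trained base models.

```python
from collections import Counter
from statistics import mean


def majority_vote(labels):
    """Hard voting: return the label predicted by the most base models."""
    return Counter(labels).most_common(1)[0][0]


def average_probabilities(prob_rows):
    """Soft voting: average class probabilities across models, class by class."""
    return [mean(column) for column in zip(*prob_rows)]


# Hypothetical outputs from three base classifiers for a single input.
hard_predictions = ["spam", "ham", "spam"]
soft_predictions = [
    [0.9, 0.1],  # model A: P(spam), P(ham)
    [0.6, 0.4],  # model B
    [0.3, 0.7],  # model C
]

voted_label = majority_vote(hard_predictions)
averaged = average_probabilities(soft_predictions)
print(voted_label, averaged)
```

Hard voting discards each model's confidence, while averaging keeps it, so the two can disagree when one model is very confident and the others are only mildly opposed.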

Which is better?

Retrieval-Augmented Generation (RAG) combines pre-trained language models with external knowledge sources to produce more accurate and context-aware responses, making it highly effective for tasks requiring up-to-date and domain-specific information. Ensemble learning merges multiple machine learning models to improve predictive performance by reducing variance and bias, which makes it well suited to structured data and classification problems. Neither is better in general, because they address different problems: RAG excels in natural language understanding and generative tasks, while ensemble learning is the stronger choice for traditional predictive modeling.

Connection

The two techniques are complementary rather than mutually exclusive. RAG integrates external knowledge sources during text generation, and ensemble learning combines multiple models to boost predictive performance and robustness. A RAG system can apply ensemble ideas at either stage: it can merge the ranked results of several retrievers (for example, a keyword-based one and an embedding-based one) before generation, or aggregate the answers of several generators afterwards.
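As one possible illustration of aggregating outputs from several retrieval models, the sketch below uses reciprocal rank fusion (RRF). RRF is a technique swapped in here for illustration; the source does not name a specific fusion method. The document IDs, the two retrievers, and the constant `k=60` (a commonly used default) are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (k + rank)) over the lists that contain it,
    with rank starting at 1. The value k=60 is an assumed default.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from two retrievers over the same corpus.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
embedding_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_hits, embedding_hits])
print(fused)
```

Because RRF uses only ranks, it does not require the retrievers' raw scores to be on comparable scales, which is one reason it is a convenient choice for combining heterogeneous retrievers.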

Key Terms

Model Aggregation

Ensemble learning improves model performance by combining predictions from multiple models. Bagging trains models on resampled data and mainly reduces variance, boosting fits models sequentially to correct earlier errors and mainly reduces bias, and stacking trains a meta-model on the base models' outputs. Retrieval-Augmented Generation (RAG) aggregates in a different sense: it pairs information retrieval with a generative model and pulls relevant data into the context at generation time, rather than relying solely on pre-trained weights. In short, ensembles aggregate model predictions, while RAG aggregates evidence for a single model.
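Bagging, the simplest of the aggregation techniques mentioned above, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the base learner is a one-feature threshold rule ("stump"), the dataset is made up, and the names `fit_stump`, `fit_bagged_stumps`, and `predict` are hypothetical.

```python
import random
from collections import Counter


def fit_stump(samples):
    """Fit a threshold rule on (x, label) pairs: predict 1 when x >= threshold."""
    best_threshold, best_errors = None, None
    for threshold, _ in samples:
        errors = sum((x >= threshold) != bool(y) for x, y in samples)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def fit_bagged_stumps(data, n_models=25, seed=0):
    """Train each stump on a bootstrap sample (len(data) draws with replacement)."""
    rng = random.Random(seed)
    return [fit_stump(rng.choices(data, k=len(data))) for _ in range(n_models)]


def predict(thresholds, x):
    """Aggregate the stumps' predictions for x by majority vote."""
    votes = Counter(int(x >= t) for t in thresholds)
    return votes.most_common(1)[0][0]


# Made-up one-dimensional dataset: small x values are class 0, large are class 1.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]

model = fit_bagged_stumps(data)
print(predict(model, 0.0), predict(model, 5.0))
```

Each stump sees a slightly different resample, so individual thresholds vary; the vote smooths out that variation, which is the variance-reduction effect bagging is used for.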

Knowledge Retrieval

Ensemble learning combines multiple machine learning models to improve prediction accuracy, while Retrieval-Augmented Generation (RAG) integrates external knowledge sources directly into the text generation process, enhancing the relevance and factuality of responses. For knowledge retrieval, RAG queries indexed databases or document stores at request time and incorporates the matching passages into the model's input. An ensemble of predictive models has no equivalent step: its members know only what was present in their training data, so new or corrected facts require retraining rather than an index update.
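The retrieve-then-generate flow can be sketched with a toy retriever. Everything below is an assumption made for illustration: the three-document corpus, the bag-of-words cosine similarity (production systems typically use a search index or vector store), and the prompt wording. The generation step itself is left out; the resulting prompt would be passed to a language model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two term-count vectors stored as Counters."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return up to top_k documents most similar to the query, skipping zero matches."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
              for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Place the retrieved passages ahead of the question for the generator."""
    context = "\n".join(f"- {p}" for p in passages)
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


# Hypothetical document store.
documents = [
    "Bagging trains models on bootstrap samples and averages them.",
    "RAG retrieves documents and passes them to a generator.",
    "Boosting fits models sequentially on previous errors.",
]

query = "How does RAG use retrieved documents?"
passages = retrieve(query, documents)
prompt = build_prompt(query, passages)
print(prompt)
```

Updating what the system knows only requires changing `documents`; no model weights are touched, which is the practical difference from retraining an ensemble.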

Contextual Generation

In contextual generation, ensemble learning contributes robustness: aggregating the outputs of several models makes the final result less sensitive to any single model's mistakes. Retrieval-Augmented Generation (RAG) contributes context: by retrieving relevant information from external knowledge bases during text generation, it lets a generative model produce content tailored to the query and grounded in current sources.

Source and External Links

What is ensemble learning? - IBM - Ensemble learning aggregates two or more base models (like regression models or neural networks) to improve prediction accuracy beyond that of any single model alone, often converting weak learners into strong ones.

Ensemble Learning: Boost Accuracy with Multiple Models - Ensemble learning trains multiple models to solve a problem and combines their predictions, using methods such as bagging, boosting, and stacking, to enhance overall performance in classification, regression, and clustering tasks.

Ensemble learning - Wikipedia - Ensemble learning combines multiple weak or diverse base learners to form a single model with improved predictive performance and reduced variance, by leveraging their combined strengths despite individual weaknesses.

dowidth.com
