Edge AI processes data locally on devices, enabling real-time decision-making without relying on cloud connectivity. Fog computing extends cloud services toward the network edge. It distributes compute and storage across intermediate nodes such as gateways and local servers, which supports larger-scale processing than a single device can handle. The sections below compare where each approach processes data, how the two differ in latency and scalability, and how they work together.

Why it is important

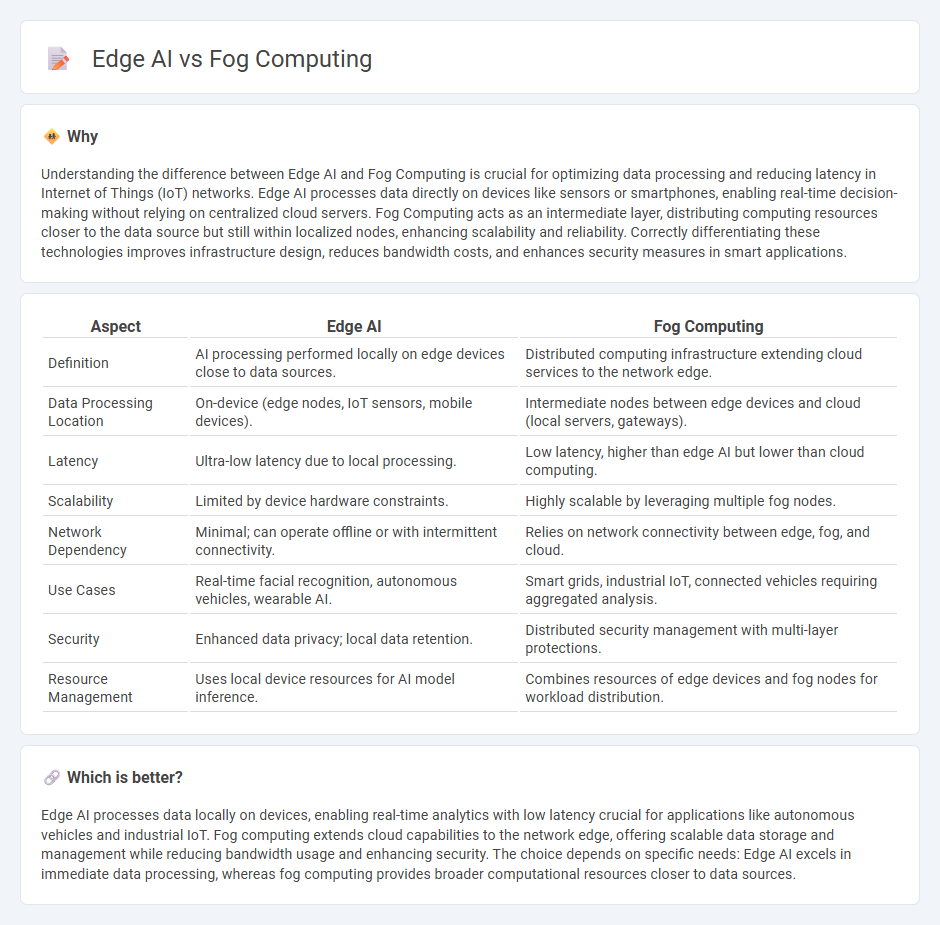

Understanding the difference between Edge AI and fog computing is crucial for optimizing data processing and reducing latency in Internet of Things (IoT) networks. Edge AI processes data directly on devices such as sensors or smartphones, enabling real-time decisions without relying on centralized cloud servers. Fog computing adds an intermediate layer: compute and storage run on local nodes such as gateways and on-premises servers, which sit between the devices and the cloud and improve scalability and reliability. Distinguishing the two helps teams decide where each workload should run, which in turn shapes infrastructure design, bandwidth costs, and security in smart applications.

Comparison Table

| Aspect | Edge AI | Fog Computing |
|---|---|---|
| Definition | AI processing performed locally on edge devices close to data sources. | Distributed computing infrastructure extending cloud services to the network edge. |
| Data Processing Location | On-device (edge nodes, IoT sensors, mobile devices). | Intermediate nodes between edge devices and cloud (local servers, gateways). |
| Latency | Ultra-low latency due to local processing. | Low latency, higher than edge AI but lower than cloud computing. |
| Scalability | Limited by device hardware constraints. | Highly scalable by leveraging multiple fog nodes. |
| Network Dependency | Minimal; can operate offline or with intermittent connectivity. | Relies on network connectivity between edge, fog, and cloud. |
| Use Cases | Real-time facial recognition, autonomous vehicles, wearable AI. | Smart grids, industrial IoT, connected vehicles requiring aggregated analysis. |
| Security | Enhanced data privacy; local data retention. | Distributed security management with multi-layer protections. |
| Resource Management | Uses local device resources for AI model inference. | Combines resources of edge devices and fog nodes for workload distribution. |

Which is better?

Neither is universally better. Edge AI processes data on the device itself, enabling real-time analytics with the low latency that applications like autonomous vehicles and industrial IoT require. Fog computing extends cloud capabilities to the network edge, offering scalable storage and compute that can aggregate data from many devices while reducing bandwidth usage and enhancing security. The choice depends on the workload: Edge AI excels at immediate, per-device decisions, whereas fog computing provides more computational resources close to the data sources for tasks that exceed a single device's capacity or need data from several devices.
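As a rough illustration of how that choice might be made concrete, here is a minimal Python sketch of one possible placement heuristic. It is not a standard algorithm: the `Workload` and `Device` types, the `choose_tier` function, and all latency and capacity constants are hypothetical values picked for the example, loosely echoing the latency ranges discussed in this article.

```python
from dataclasses import dataclass

# Hypothetical figures for illustration only; real values depend on
# hardware, network conditions, and model size.
EDGE_INFERENCE_MS = 8        # on-device inference time
FOG_ROUND_TRIP_MS = 30       # device -> fog node -> device, including processing
CLOUD_ROUND_TRIP_MS = 120    # device -> cloud -> device, including processing
FOG_NODE_MEMORY_MB = 8_000   # memory available for models on one fog node


@dataclass
class Workload:
    latency_budget_ms: float  # maximum acceptable response time
    model_memory_mb: float    # memory the model needs to run
    needs_aggregation: bool   # does it need data from several devices?


@dataclass
class Device:
    free_memory_mb: float     # memory available on the device
    online: bool              # is a network link currently available?


def choose_tier(w: Workload, d: Device) -> str:
    """Return 'edge', 'fog', 'cloud', or 'unavailable' (illustrative heuristic)."""
    # Prefer the device: lowest latency, works offline, data stays local.
    if (not w.needs_aggregation
            and w.model_memory_mb <= d.free_memory_mb
            and w.latency_budget_ms >= EDGE_INFERENCE_MS):
        return "edge"
    # Every other tier needs a network link.
    if not d.online:
        return "unavailable"
    # Fog: one hop away, sees data from many devices, but has finite capacity.
    if (w.model_memory_mb <= FOG_NODE_MEMORY_MB
            and w.latency_budget_ms >= FOG_ROUND_TRIP_MS):
        return "fog"
    # Cloud: practically unlimited capacity, but the slowest round trip.
    if w.latency_budget_ms >= CLOUD_ROUND_TRIP_MS:
        return "cloud"
    return "unavailable"


# Usage: a small offline model, a multi-device analysis, and a very large model.
print(choose_tier(Workload(10, 50, False), Device(512, online=False)))     # edge
print(choose_tier(Workload(100, 200, True), Device(512, online=True)))     # fog
print(choose_tier(Workload(500, 20_000, True), Device(512, online=True)))  # cloud
```

The order of the checks encodes the preference described above: run on the device when the model fits and no cross-device data is needed, fall back to a fog node when connectivity allows, and use the cloud only when the latency budget tolerates its longer round trip.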

Connection

Edge AI processes data locally on devices or edge nodes, reducing latency and bandwidth usage by minimizing data transmitted to central servers. Fog computing extends cloud capabilities by providing intermediate processing, storage, and networking closer to the data source, enhancing real-time analytics and decision-making. Together, edge AI and fog computing create a distributed architecture that optimizes performance, scalability, and efficiency for IoT and smart applications.
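This division of labour can be sketched as a two-stage pipeline. In the minimal Python example below, a fixed threshold stands in for an on-device AI model (an assumption made for brevity), each device forwards only the readings it flags, and a fog node summarises those events per site before anything is sent to the cloud. The function names, fields, and scores are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def edge_filter(readings, threshold):
    """On-device step: forward only readings that look anomalous.

    A fixed threshold stands in for an on-device AI model (assumption).
    """
    return [r for r in readings if r["score"] > threshold]


def fog_aggregate(events):
    """Fog-node step: combine events from many devices into one summary per site."""
    by_site = defaultdict(list)
    for event in events:
        by_site[event["site"]].append(event["score"])
    return {site: {"count": len(scores), "mean_score": mean(scores)}
            for site, scores in by_site.items()}


# Hypothetical anomaly scores from three cameras at two sites. Each device
# would filter its own readings; they share one list here for brevity.
readings = [
    {"device": "cam-1", "site": "A", "score": 0.20},
    {"device": "cam-1", "site": "A", "score": 0.95},
    {"device": "cam-2", "site": "A", "score": 0.91},
    {"device": "cam-3", "site": "B", "score": 0.10},
    {"device": "cam-3", "site": "B", "score": 0.97},
]

events = edge_filter(readings, threshold=0.9)  # sent from devices to the fog node
summary = fog_aggregate(events)                # sent from the fog node to the cloud
print(f"{len(readings)} readings -> {len(events)} events -> {len(summary)} summaries")
```

Only three of the five raw readings leave the devices, and the cloud receives one summary per site; this reduction in upstream traffic is the bandwidth saving the combined architecture is meant to provide.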

Key Terms

Data Processing Location

Fog computing processes data in a decentralized network of intermediate nodes between the cloud and edge devices, distributing computational tasks to reduce latency and bandwidth usage. Edge AI performs data processing directly on the local devices where data is generated, enabling real-time decision-making with minimal reliance on external networks. The processing location determines the main constraint: on-device processing is bounded by the device's compute, memory, and power budget, while fog processing adds a network hop but can pool resources across many devices.

Latency

Fog computing processes data close to the network edge, but each request still crosses at least one network hop to a fog node and may pass through several nodes, so end-to-end latency is typically in the range of roughly 10 to 50 milliseconds. Edge AI processes data on the device itself and avoids that round trip, so latency is bounded mainly by on-device inference time and can fall under 10 milliseconds, which is crucial for real-time applications such as autonomous vehicles and industrial automation. These figures are indicative only; actual latency depends on network conditions, node load, and model size.
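The gap comes mostly from the network hop. A back-of-the-envelope Python model, assuming fog latency is two one-way network delays plus queueing and inference time on the fog node, shows how a faster fog accelerator can still lose to slower on-device inference. The function names and all numbers below are hypothetical.

```python
def edge_latency_ms(inference_ms: float) -> float:
    """On-device processing: no network hop, only inference time."""
    return inference_ms


def fog_latency_ms(one_way_ms: float, inference_ms: float, queue_ms: float = 0.0) -> float:
    """Fog processing: uplink + queueing + inference on the fog node + downlink."""
    return 2 * one_way_ms + queue_ms + inference_ms


BUDGET_MS = 20.0  # hypothetical response deadline, e.g. for a control loop

# Hypothetical figures: a modest on-device accelerator vs. a faster fog GPU
# that sits one LAN hop away and is shared with other devices.
edge = edge_latency_ms(inference_ms=8.0)
fog = fog_latency_ms(one_way_ms=5.0, inference_ms=3.0, queue_ms=10.0)

print(f"edge: {edge} ms, fog: {fog} ms")        # edge: 8.0 ms, fog: 23.0 ms
print("edge meets budget:", edge <= BUDGET_MS)  # True
print("fog meets budget:", fog <= BUDGET_MS)    # False
```

Under these assumed figures the edge path takes 8.0 ms and the fog path 23.0 ms, inside the 10 to 50 ms range given above; reducing network delay or queueing at the fog node narrows the gap.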

Intelligence Deployment

Fog computing distributes intelligence across multiple intermediate nodes between cloud data centers and edge devices, enabling real-time data processing closer to source devices and reducing latency. Edge AI deploys artificial intelligence models directly on edge devices such as sensors, cameras, or smartphones, allowing immediate decision-making without cloud dependency. The two deployment models therefore trade off latency, scalability, and data privacy differently across IoT applications.
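Spreading work across several fog nodes is what lets fog computing scale beyond a single device. One simple way to do this is greedy least-loaded placement with the largest tasks first (the longest-processing-time heuristic), sketched below in Python with a heap. This is a generic scheduling heuristic, not the scheduler of any particular fog platform; the task names, costs, and node names are hypothetical.

```python
import heapq


def assign_tasks(tasks, nodes):
    """Greedy least-loaded placement of tasks onto fog nodes.

    tasks: list of (task_id, cost) pairs, cost in arbitrary work units.
    nodes: list of fog node names.
    Returns (placement, loads): task ids per node and total cost per node.
    """
    heap = [(0, name) for name in nodes]  # (current load, node name)
    heapq.heapify(heap)
    placement = {name: [] for name in nodes}
    loads = {name: 0 for name in nodes}
    # Placing the largest tasks first (the LPT rule) usually balances better.
    for task_id, cost in sorted(tasks, key=lambda t: t[1], reverse=True):
        load, name = heapq.heappop(heap)  # node with the smallest load so far
        placement[name].append(task_id)
        loads[name] = load + cost
        heapq.heappush(heap, (loads[name], name))
    return placement, loads


tasks = [("video-analytics", 8), ("sensor-fusion", 5), ("anomaly-detect", 4),
         ("log-compress", 3), ("dashboard", 2)]
placement, loads = assign_tasks(tasks, ["fog-1", "fog-2"])
print(placement)
print(loads)  # {'fog-1': 11, 'fog-2': 11}
```

With these inputs both nodes end up with 11 units of work. A production scheduler would likely also need to consider network distance, data locality, and node failures, which this sketch ignores.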

dowidth.com