Generative fill uses AI to synthesize new content in an image, guided by the surrounding context and patterns, while image completion fills missing or corrupted parts of an existing image with realistic detail. Both can be built on deep learning models such as GANs, autoencoders, or diffusion models, but they serve distinct purposes: creative editing in the first case, restoration in the second. The sections below compare the two techniques and their applications.

Why it is important

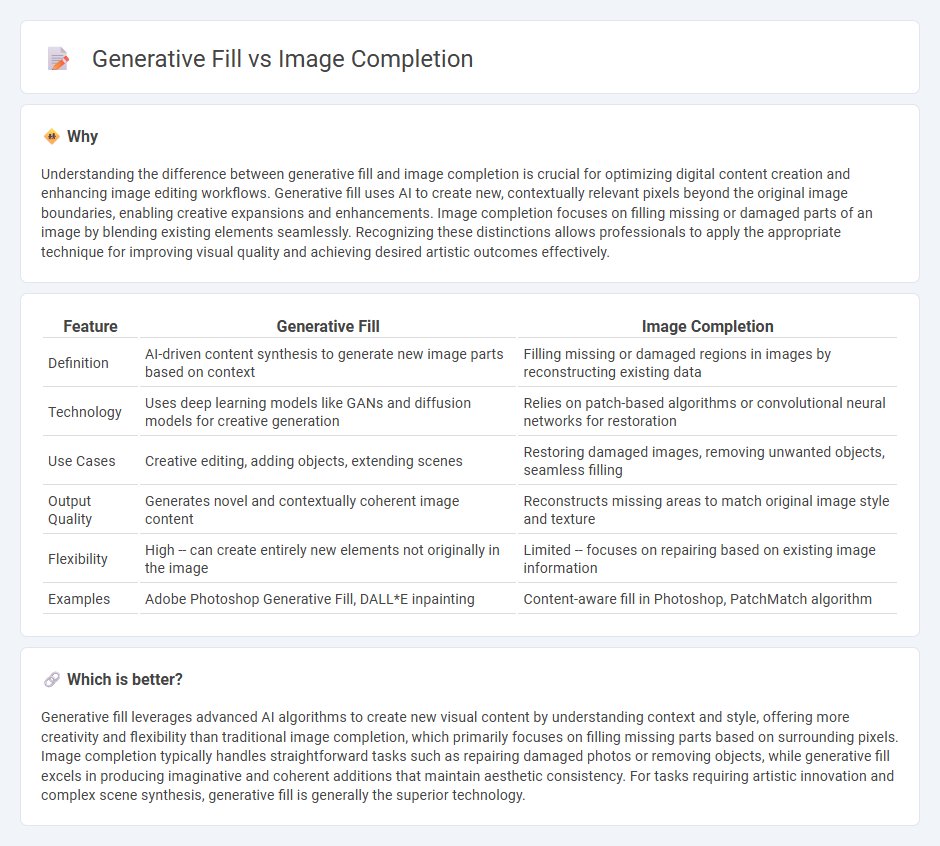

Knowing the difference between generative fill and image completion helps you pick the right tool for a given editing task. Generative fill uses AI to create new, contextually relevant pixels, either inside a selected region or beyond the original image boundaries, which enables creative additions and scene extensions. Image completion fills missing or damaged parts of an image by blending in content consistent with the existing elements. Applying the appropriate technique improves visual quality and makes the intended artistic outcome easier to reach.

Comparison Table

| Feature | Generative Fill | Image Completion |
|---|---|---|
| Definition | AI-driven content synthesis to generate new image parts based on context | Filling missing or damaged regions in images by reconstructing existing data |
| Technology | Uses deep learning models like GANs and diffusion models for creative generation | Relies on patch-based algorithms or convolutional neural networks for restoration |
| Use Cases | Creative editing, adding objects, extending scenes | Restoring damaged images, removing unwanted objects, seamless filling |
| Output Quality | Generates novel and contextually coherent image content | Reconstructs missing areas to match original image style and texture |
| Flexibility | High: can create entirely new elements not originally in the image | Limited: focuses on repairing based on existing image information |
| Examples | Adobe Photoshop Generative Fill, DALL·E inpainting | Content-aware fill in Photoshop, PatchMatch algorithm |

Which is better?

Generative fill synthesizes new visual content from learned context and style, which gives it more creative range than traditional image completion, where missing parts are filled mainly from the surrounding pixels. Image completion typically handles well-bounded tasks such as repairing damaged photos or removing objects, while generative fill excels at imaginative, coherent additions that stay aesthetically consistent with the scene. For tasks that require artistic innovation or complex scene synthesis, generative fill is generally the better choice.

Connection

Generative fill and image completion address the same underlying problem: predicting plausible content for missing or occluded parts of an image. Modern implementations of both rely on deep generative models, such as generative adversarial networks (GANs), diffusion models, or transformer-based models, which analyze context and semantic information to produce visually coherent results. Used together, they support photo editing, augmented reality, and content creation by enabling seamless restoration and modification of images.

Key Terms

Inpainting

Image completion and generative fill are both forms of inpainting: restoring or replacing missing or damaged areas of an image. Image completion often relies on patch-based methods and texture synthesis to fill gaps seamlessly, while generative fill uses deep learning models, particularly GANs and diffusion models, to produce more realistic and context-aware results.
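To make the patch-based side of inpainting concrete, here is a minimal sketch in Python. It fills each missing pixel by copying the known pixel whose neighborhood matches best. The function name `patch_fill`, the `radius` parameter, and the list-of-lists image format are assumptions made for illustration; this is a brute-force toy, not the PatchMatch algorithm or any tool's actual implementation.

```python
def patch_fill(image, mask, radius=1):
    """Fill masked pixels by copying the center of the best-matching known patch.

    image: list of rows of numbers; mask: same shape, 1 marks a missing pixel.
    Returns a new image; the inputs are left unchanged.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    known = [[mask[y][x] == 0 for x in range(w)] for y in range(h)]
    offsets = [(dy, dx)
               for dy in range(-radius, radius + 1)
               for dx in range(-radius, radius + 1)
               if (dy, dx) != (0, 0)]

    def cost(ty, tx, sy, sx):
        """Mean squared difference over neighbors that are known around both pixels."""
        total, count = 0.0, 0
        for dy, dx in offsets:
            ay, ax, by, bx = ty + dy, tx + dx, sy + dy, sx + dx
            if (0 <= ay < h and 0 <= ax < w and 0 <= by < h and 0 <= bx < w
                    and known[ay][ax] and known[by][bx]):
                total += (out[ay][ax] - out[by][bx]) ** 2
                count += 1
        return total / count if count else float("inf")

    holes = [(y, x) for y in range(h) for x in range(w) if not known[y][x]]
    sources = [(y, x) for y in range(h) for x in range(w) if known[y][x]]
    for ty, tx in holes:
        # Brute-force search over every originally known pixel.
        sy, sx = min(sources, key=lambda s: cost(ty, tx, s[0], s[1]))
        out[ty][tx] = out[sy][sx]
        known[ty][tx] = True  # filled pixels help match later holes
    return out


# Vertical stripes with a two-pixel hole in the middle row.
stripes = [[0, 9, 0, 9, 0, 9] for _ in range(5)]
damaged = [row[:] for row in stripes]
mask = [[0] * 6 for _ in range(5)]
for x in (2, 3):
    damaged[2][x] = -1  # placeholder value, never read by the fill
    mask[2][x] = 1

filled = patch_fill(damaged, mask)
print(filled[2])  # [0, 9, 0, 9, 0, 9]
```

Because the fill only ever copies pixels that already exist in the image, it can continue a texture but cannot invent an object that was never there, which is the limitation generative models remove.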

Diffusion Models

Diffusion-based image completion iteratively refines the missing areas, starting from noise and conditioning each step on the surrounding pixels, until the fill is visually coherent. Generative fill builds on the same diffusion techniques but goes beyond completion: it creatively extends images by synthesizing plausible context and objects guided by the learned data distribution.
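The shape of that iterative loop can be sketched without a trained model. The Python example below is only a structural illustration: the hole starts as random noise, each step applies a refinement function, and the known pixels are put back after every step. The `smooth` function is a stand-in (plain neighborhood averaging) for the denoising network a real diffusion model would use, and all names and parameters here are assumptions for illustration.

```python
import random


def smooth(img):
    """Stand-in 'denoiser': replace each pixel with the mean of its 3x3 neighborhood."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[ny][nx]
                    for ny in range(max(0, y - 1), min(h, y + 2))
                    for nx in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def iterative_fill(image, mask, steps=50, seed=0):
    """Refine masked pixels step by step while keeping known pixels fixed.

    mask uses 1 for pixels to fill and 0 for pixels to keep.
    """
    rng = random.Random(seed)
    # Start the masked region from noise; keep known pixels as they are.
    current = [[rng.uniform(0.0, 1.0) if m else float(v)
                for v, m in zip(img_row, mask_row)]
               for img_row, mask_row in zip(image, mask)]
    for _ in range(steps):
        proposal = smooth(current)
        # Only masked pixels take the refined value; known pixels are re-imposed.
        current = [[p if m else float(v)
                    for v, p, m in zip(img_row, prop_row, mask_row)]
                   for img_row, prop_row, mask_row in zip(image, proposal, mask)]
    return current


image = [[0.5] * 3 for _ in range(3)]
mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
result = iterative_fill(image, mask)
```

In this toy case the center pixel is pulled toward the value of its neighbors with each step. A real diffusion model replaces `smooth` with a learned denoiser, which is what allows it to produce new structure rather than a blur of the surroundings.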

Masking

Both techniques start from a mask that marks the region to be filled. Image completion fills the masked region from the surrounding pixels so that the result stays visually coherent with the rest of the image. Generative fill instead interprets the masked area in context and generates entirely new content there, often adding detail that was never in the original image.
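Whichever method produces the fill, the mask typically decides which pixels of the final image come from the original and which come from the generated content. A minimal sketch of that compositing step in Python follows; the function name `composite` and the convention that 1 means "replace" are assumptions for illustration, since tools differ on mask polarity.

```python
def composite(original, generated, mask):
    """Per pixel: keep the original where mask is 0, take generated where mask is 1."""
    return [
        [m * g + (1 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[10, 10, 10],
            [10, 0, 10],
            [10, 10, 10]]
generated = [[99, 99, 99],
             [99, 42, 99],
             [99, 99, 99]]
mask = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]

result = composite(original, generated, mask)
print(result)  # [[10, 10, 10], [10, 42, 10], [10, 10, 10]]
```

The same formula works with fractional mask values between 0 and 1, which blends the two sources near the mask edge and softens the seam.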


dowidth.com
