Diffusion models generate data by gradually adding noise to training examples and learning to reverse that corruption step by step, while score-based models estimate the gradient of the log-density (the score) of noise-perturbed data and use it to guide sampling. Both frameworks are central to modern generative modeling, particularly in image synthesis and denoising. This article compares their mechanisms and applications.

Why it is important

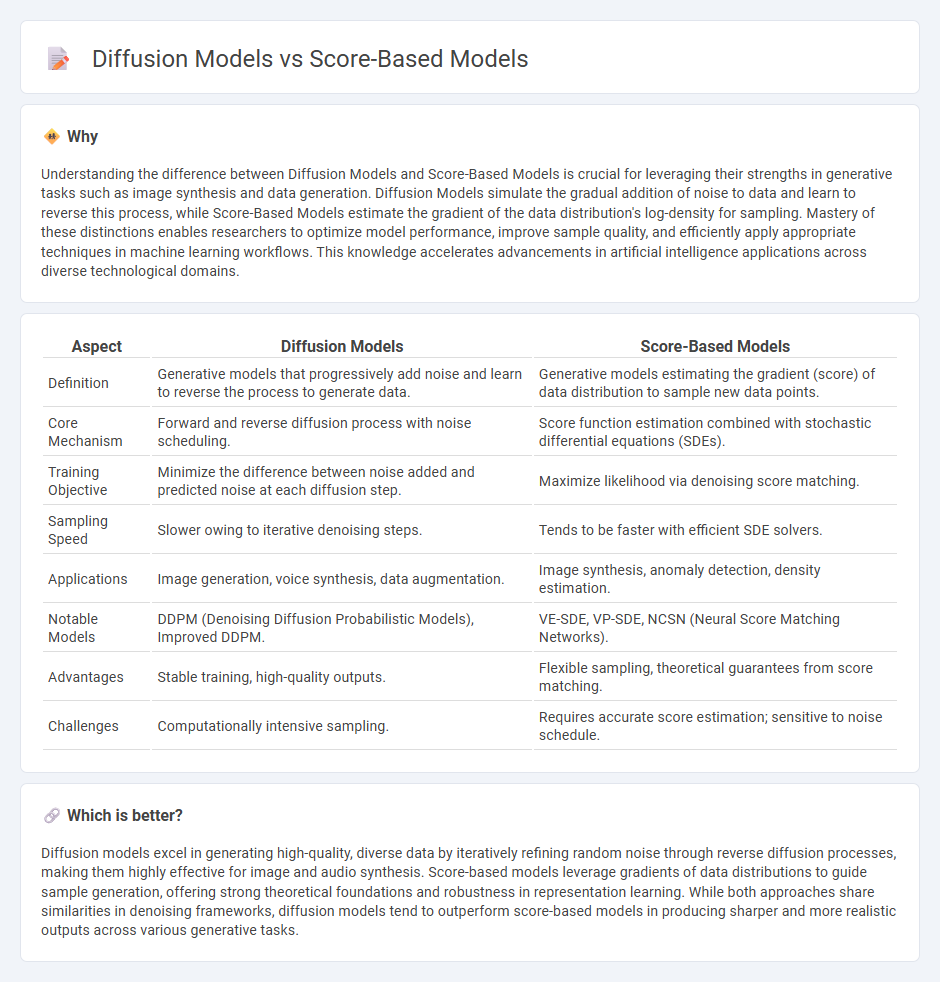

Understanding the difference between diffusion models and score-based models helps in choosing and tuning a method for generative tasks such as image synthesis. Diffusion models define a gradual noising of the data and learn to reverse it, while score-based models estimate the gradient of the data distribution's log-density and sample by following that gradient. Knowing which quantity a model learns (predicted noise or the score) and how it samples makes it easier to reason about training objectives, sample quality, and sampling cost.

Comparison Table

| Aspect | Diffusion Models | Score-Based Models |
|---|---|---|
| Definition | Generative models that progressively add noise to data and learn to reverse the process to generate new data. | Generative models that estimate the gradient (score) of the log-density of the data distribution and use it to sample new data points. |
| Core Mechanism | Forward and reverse diffusion process with a noise schedule. | Score function estimation combined with Langevin dynamics or stochastic differential equations (SDEs). |
| Training Objective | Minimize the difference between the noise added and the noise predicted at each diffusion step. | Minimize a denoising score matching loss, a tractable surrogate for the Fisher divergence between the model score and the data score. |
| Sampling Speed | Slow in the basic formulation, owing to many iterative denoising steps. | Also iterative; speed depends on the SDE or ODE solver, and efficient solvers can reduce the number of steps. |
| Applications | Image generation, voice synthesis, data augmentation. | Image synthesis, anomaly detection, density estimation. |
| Notable Models | DDPM (Denoising Diffusion Probabilistic Models), Improved DDPM. | NCSN (Noise Conditional Score Network), VE-SDE, VP-SDE. |
| Advantages | Stable training, high-quality outputs. | Flexible sampling, theoretical grounding in score matching. |
| Challenges | Computationally intensive sampling. | Requires accurate score estimation; sensitive to the noise schedule. |

Which is better?

Diffusion models generate high-quality, diverse data by iteratively refining random noise through a learned reverse diffusion process, which has made them effective for image and audio synthesis. Score-based models use gradients of the data log-density to guide sample generation and rest on the theory of score matching. Because the two approaches share the same denoising framework, neither is better in general: sample sharpness and realism depend mainly on the network architecture, noise schedule, and sampler rather than on which formulation is used.

Connection

Diffusion models and score-based models are connected through stochastic differential equations: both can be described as learning to reverse a process that gradually turns data into noise. A score-based model estimates the gradient of the log-density of the perturbed data, known as the score function, directly; a diffusion model's noise prediction is a rescaled estimate of the same quantity. In the SDE view, DDPM corresponds to a discretization of the VP-SDE and NCSN to a discretization of the VE-SDE, so techniques developed for one family usually transfer to the other.
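The link between noise prediction and the score can be written out in a few lines. The sketch below assumes the DDPM-style forward process with a cumulative signal level; the symbols (`\bar{\alpha}_t`, `\epsilon_\theta`, `s_\theta`) are notation introduced here for illustration and do not appear in the article.

```latex
% Assumed DDPM-style forward process with cumulative signal level \bar{\alpha}_t
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

% Score of the Gaussian perturbation kernel q(x_t \mid x_0)
\nabla_{x_t} \log q(x_t \mid x_0)
  = -\frac{x_t - \sqrt{\bar{\alpha}_t}\, x_0}{1 - \bar{\alpha}_t}
  = -\frac{\epsilon}{\sqrt{1 - \bar{\alpha}_t}}

% Hence a noise predictor \epsilon_\theta induces a score estimate s_\theta
s_\theta(x_t, t) \approx -\frac{\epsilon_\theta(x_t, t)}{\sqrt{1 - \bar{\alpha}_t}}
```

Under these assumptions, training a network to predict the added noise and training it to match the score differ only by a time-dependent scaling.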

Key Terms

Stochastic Differential Equations (SDEs)

Score-based models use stochastic differential equations (SDEs) to describe how data is continuously perturbed into noise. Generation integrates the corresponding reverse-time SDE, whose drift depends on the score, the gradient of the log-density of the perturbed data. Diffusion models can be read the same way: a forward process gradually corrupts the data and a learned reverse process reconstructs it, which gives a tractable route to high-quality generative modeling.
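As a rough illustration of reverse-time SDE sampling, the sketch below runs an Euler-Maruyama integration on a one-dimensional toy problem. Everything in it is an assumption made for the example: the constant noise rate `BETA`, the Gaussian data distribution `N(MU, SIGMA0^2)`, and the use of the exact analytic score in place of a trained network.

```python
import math
import random

BETA = 1.0               # constant noise rate (assumed; real schedules vary with t)
MU, SIGMA0 = 3.0, 0.5    # toy 1-D data distribution N(MU, SIGMA0^2) (assumed)

def marginal(t):
    """Mean and variance of x_t under dx = -0.5*BETA*x dt + sqrt(BETA) dW."""
    decay = math.exp(-BETA * t)
    mean = MU * math.sqrt(decay)
    var = SIGMA0 ** 2 * decay + (1.0 - decay)
    return mean, var

def score(x, t):
    """Exact score of the Gaussian marginal; a trained network would replace this."""
    mean, var = marginal(t)
    return -(x - mean) / var

def reverse_sde_sample(rng, t_end=10.0, num_steps=400):
    """Integrate the reverse-time SDE from t_end down to 0 with Euler-Maruyama."""
    dt = t_end / num_steps
    x = rng.gauss(0.0, 1.0)  # start from the (approximate) noise prior
    for i in range(num_steps):
        t = t_end - i * dt
        # Reverse drift: f(x, t) - g(t)^2 * score(x, t)
        drift = -0.5 * BETA * x - BETA * score(x, t)
        x = x - drift * dt + math.sqrt(BETA * dt) * rng.gauss(0.0, 1.0)
    return x

rng = random.Random(0)
samples = [reverse_sde_sample(rng) for _ in range(1000)]
sample_mean = sum(samples) / len(samples)
sample_std = math.sqrt(sum((s - sample_mean) ** 2 for s in samples) / len(samples))
print(f"mean={sample_mean:.3f} std={sample_std:.3f}")
```

With the exact score, the sample mean and standard deviation should land close to `MU` and `SIGMA0`; with a learned score, the gap reflects both estimation and discretization error.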

Noise Perturbation

Score-based models estimate the gradient of the log-density of data corrupted at several noise levels and use it to denoise samples iteratively, effectively reversing the corruption. Diffusion models add Gaussian noise to data over many steps in a forward process and learn a parameterized reverse process that removes it, which lets them generate complex data starting from pure noise.
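The forward noising step can be sketched in a few lines. The example below assumes a DDPM-style linear variance schedule; the function names and the schedule endpoints (`beta_start`, `beta_end`) are illustrative choices, not values taken from the article.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (endpoint values are assumed)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ q(x_t | x_0) in closed form; returns (x_t, eps)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    x_t = [signal * xi + noise * ei for xi, ei in zip(x0, eps)]
    return x_t, eps

rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
x0 = [1.0, -2.0, 0.5]
x_early, _ = noise_sample(x0, 0, alpha_bars, rng)          # barely perturbed
x_late, eps_late = noise_sample(x0, 999, alpha_bars, rng)  # almost pure noise
```

The returned `eps` is what a noise-prediction network would be trained to recover from `x_t` and `t`.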

Likelihood Estimation

Score-based models do not model the likelihood directly: they learn the gradient of the log probability density, which does not require the normalizing constant and supports sampling via Langevin dynamics. In the SDE formulation, likelihoods can still be computed through the associated probability flow ODE. Diffusion models approach likelihood estimation by fixing a forward noising process and learning a reverse denoising process, optimizing a variational bound on the data likelihood.
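The Langevin dynamics mentioned above can be sketched on a toy target. The example assumes a one-dimensional Gaussian target whose score is known in closed form; `TARGET_MU`, `TARGET_SIGMA`, the step size, and the number of steps are all illustrative choices, and a real score-based model would substitute a learned score network for `score`.

```python
import math
import random

TARGET_MU, TARGET_SIGMA = 2.0, 1.0  # toy target density N(2, 1) (assumed)

def score(x):
    """Gradient of log N(x; TARGET_MU, TARGET_SIGMA^2) with respect to x."""
    return -(x - TARGET_MU) / TARGET_SIGMA ** 2

def langevin_sample(rng, step_size=0.05, num_steps=300):
    """Unadjusted Langevin dynamics: x <- x + (step/2) * score(x) + sqrt(step) * z."""
    x = rng.gauss(0.0, 1.0)  # arbitrary starting point
    for _ in range(num_steps):
        noise = rng.gauss(0.0, 1.0)
        x = x + 0.5 * step_size * score(x) + math.sqrt(step_size) * noise
    return x

rng = random.Random(0)
chains = [langevin_sample(rng) for _ in range(1000)]
chain_mean = sum(chains) / len(chains)
chain_std = math.sqrt(sum((c - chain_mean) ** 2 for c in chains) / len(chains))
print(f"mean={chain_mean:.3f} std={chain_std:.3f}")
```

Only the score is needed, never the normalized density, which is why this sampler pairs naturally with score matching. The finite step size introduces a small bias, so the sample statistics match the target only approximately.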

Source and External Links

Generative Modeling by Estimating Gradients of the Data Distribution - Score-based models involve learning the score function, defined as the gradient of the log density of data, which allows generation without normalizing constants in the density model and enables training by minimizing Fisher divergence using score matching techniques without adversarial optimization.

Diffusion and Score-Based Generative Models - YouTube - Score-based generative models use flexible neural nets to directly learn data score functions enabling high-quality sample generation that often outperforms other approaches, with benefits including principled sampling and accurate probability evaluation.

Score-Based Diffusion Models | Fan Pu Zeng - Score matching trains neural networks to learn score functions by minimizing Fisher divergence, addressing challenges in likelihood-based or adversarial methods, and allowing efficient sampling from complex data distributions.

dowidth.com
