Generative Adversarial Networks (GANs) pair two models, a generator and a discriminator, and train them against each other so that the generator learns to produce realistic synthetic data. They are best known for image generation and data augmentation. Transformers use self-attention to process all positions of a sequence in parallel, which made them the dominant architecture for natural language processing tasks such as machine translation and text generation. This article compares the two architectures and where each is the better fit.

Why it is important

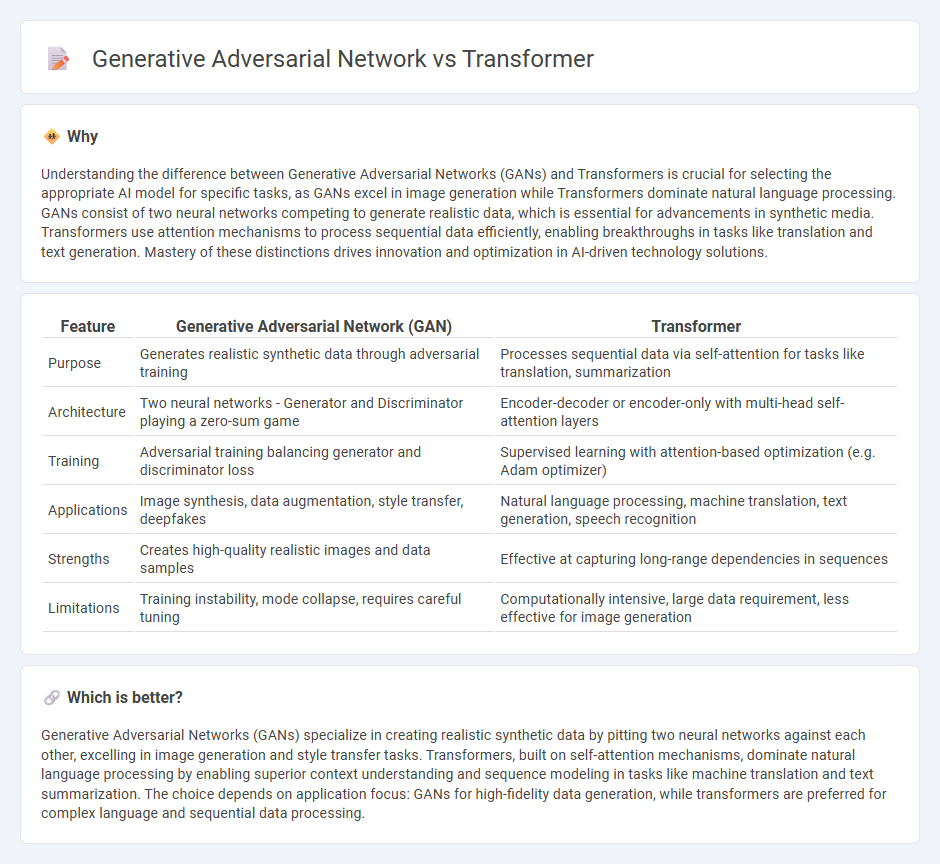

Knowing how GANs and Transformers differ helps in choosing the right model for a task. A GAN trains two neural networks in competition to generate realistic data, which makes it a natural choice for image synthesis and other synthetic media. A Transformer uses attention to relate every element of a sequence to every other element, which is why it leads in tasks such as translation and text generation. Picking the wrong architecture usually costs training time and output quality.

Comparison Table

| Feature | Generative Adversarial Network (GAN) | Transformer |
|---|---|---|
| Purpose | Generates realistic synthetic data through adversarial training | Processes sequential data via self-attention for tasks such as translation and summarization |
| Architecture | Two neural networks, a generator and a discriminator, playing a zero-sum game | Encoder-decoder, encoder-only, or decoder-only stacks of multi-head self-attention layers |
| Training | Adversarial training that balances generator and discriminator losses | Supervised or self-supervised learning with gradient-based optimizers such as Adam |
| Applications | Image synthesis, data augmentation, style transfer, deepfakes | Natural language processing, machine translation, text generation, speech recognition |
| Strengths | Creates high-quality, realistic images and data samples | Captures long-range dependencies in sequences effectively |
| Limitations | Training instability, mode collapse, requires careful tuning | Computationally intensive (self-attention cost grows quadratically with sequence length), large data requirements |

Which is better?

Neither architecture is better in general; the choice depends on the application. GANs specialize in creating realistic synthetic data by pitting two neural networks against each other, and they excel at image generation and style transfer. Transformers, built on self-attention, lead in natural language processing because they model context across a whole sequence, as in machine translation and text summarization. Choose a GAN for high-fidelity data generation and a Transformer for language and other sequential data.

Connection

GANs and Transformers are not competing solutions to the same problem. A GAN is a training framework built on competition between two networks, while a Transformer is a network architecture built on self-attention. Both have shaped deep learning research on generative models for image synthesis, text generation, and multimodal tasks. Because they address different layers of the design, they can be combined: attention layers or Transformer blocks can serve as the generator or discriminator inside a GAN, with the aim of producing more coherent, higher-quality output.

Key Terms

Attention mechanism

Transformers use self-attention to weigh the importance of every input token relative to every other token, which lets them model long-range dependencies in a sequence efficiently. The GAN framework does not itself include attention; it relies on adversarial training between the generator and discriminator to produce realistic outputs, although attention layers can be added to either network. This difference is a large part of why Transformers lead in natural language processing while GANs are associated mainly with image generation.
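To make the weighting of tokens concrete, here is a minimal sketch of single-head scaled dot-product self-attention in dependency-free Python. It is an illustration under simplifying assumptions, not a production implementation: the token embeddings are made-up numbers, and they are used directly as queries, keys, and values, whereas a real Transformer applies learned projection matrices first.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for a single head.

    queries, keys: one d_k-dimensional vector per token.
    values: one vector per token.
    Returns (outputs, weights), with one row per query token.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy input: three tokens with 2-dimensional embeddings (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Simplification: embeddings serve as Q, K, and V (no learned projections).
outputs, weights = self_attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights {[round(w, 3) for w in row]}")
```

Each row of `weights` sums to 1, so every output vector is a blend of all tokens in the sequence, weighted by how well their keys match the query.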

Generator-discriminator

A GAN is built from two models trained together: the generator creates synthetic data, and the discriminator evaluates whether a given sample is real or generated. The discriminator's feedback is the generator's training signal, so as the discriminator gets better at spotting fakes, the generator is pushed to produce increasingly convincing samples. Transformers have no such adversary. In sequence-to-sequence tasks they are trained to predict the target tokens directly, using self-attention to produce contextually rich outputs.
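The generator-discriminator dynamic can be written as two loss functions. The sketch below is dependency-free Python and rests on stated assumptions: the discriminator outputs a probability that a sample is real, the generator loss is the commonly used non-saturating variant (chosen here for illustration, not taken from the text above), and all probability values are made up.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples are labeled 1, generated samples 0.

    d_real: discriminator probabilities for real samples.
    d_fake: discriminator probabilities for generated samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss.

    The loss is low when the discriminator scores generated samples as real.
    """
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]
early_fakes = [0.1, 0.2]  # early in training: fakes are easy to spot
later_fakes = [0.6, 0.7]  # later: the generator has become more convincing

print("generator loss, early:", round(generator_loss(early_fakes), 3))
print("generator loss, later:", round(generator_loss(later_fakes), 3))
print("discriminator loss, early:", round(discriminator_loss(d_real, early_fakes), 3))
print("discriminator loss, later:", round(discriminator_loss(d_real, later_fakes), 3))
```

As the fakes become more convincing, the generator's loss falls and the discriminator's loss rises, which is the zero-sum tension that adversarial training has to keep in balance.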

Sequence modeling

Transformers excel at sequence modeling because self-attention captures long-range dependencies and contextual relationships directly, which makes them well suited to natural language processing tasks such as machine translation and text generation. GANs, while powerful at generating high-fidelity images, are less effective for sequence modeling. Text is made of discrete tokens, and sampling a discrete token is not differentiable, so it is hard to pass the discriminator's gradient back to the generator.
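The long-range claim can be checked with a small experiment. In the sketch below (dependency-free Python with made-up vectors, no learned projections, and no positional information), the last token of a 50-token sequence has a query that matches the key at position 0. Attention depends on how well the query and key match, not on how far apart the positions are.

```python
import math

def attention_weights(query, keys):
    """Softmax of scaled dot products between one query and all keys."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k)
              for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up sequence of 50 tokens: position 0 carries a distinctive key,
# and every other position shares an unrelated key.
seq_len = 50
keys = [[4.0, 0.0]] + [[0.0, 1.0]] * (seq_len - 1)

# The final token's query is aligned with the key at position 0.
query_last = [4.0, 0.0]

weights = attention_weights(query_last, keys)
most_attended = max(range(seq_len), key=lambda i: weights[i])

print("most attended position:", most_attended)
print("weight on position 0:", round(weights[0], 4))
```

The final token places nearly all of its attention on position 0 even though 49 tokens separate them, because a single attention step connects every pair of positions.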
