Liquid neural networks adapt dynamically to time-varying data by continuously updating their internal states, unlike feedforward neural networks which process inputs through fixed layers in a single pass. This adaptability allows liquid neural networks to excel in tasks involving temporal dependencies and real-time processing. Explore how these advanced architectures revolutionize machine learning applications.

Why it is important

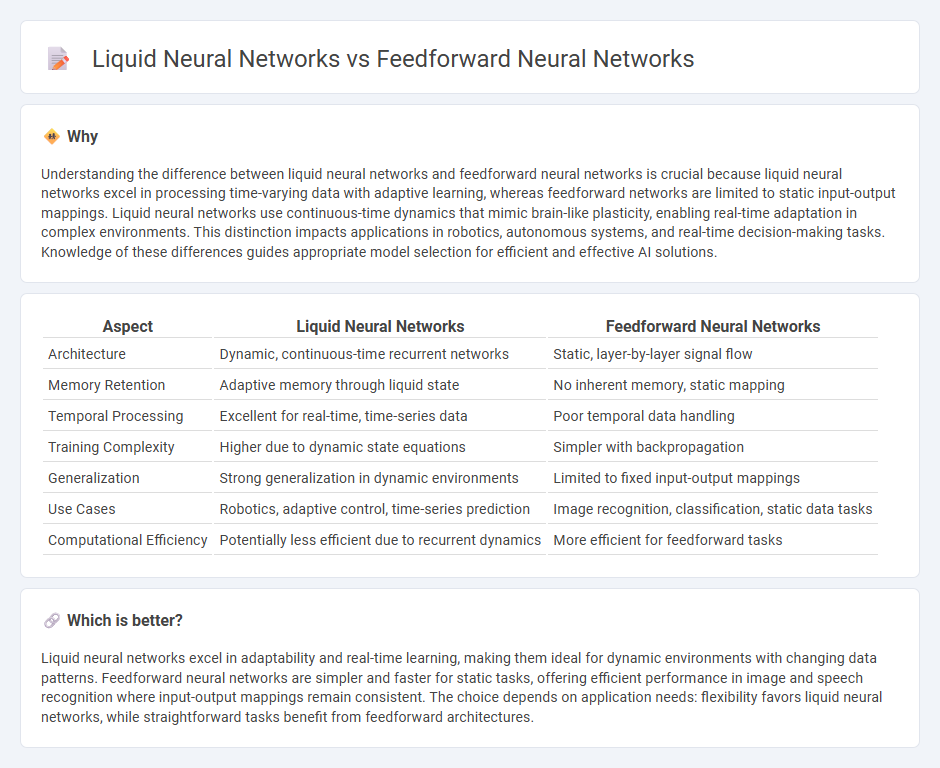

Understanding the difference between liquid neural networks and feedforward neural networks is crucial because liquid neural networks excel in processing time-varying data with adaptive learning, whereas feedforward networks are limited to static input-output mappings. Liquid neural networks use continuous-time dynamics that mimic brain-like plasticity, enabling real-time adaptation in complex environments. This distinction impacts applications in robotics, autonomous systems, and real-time decision-making tasks. Knowledge of these differences guides appropriate model selection for efficient and effective AI solutions.

Comparison Table

| Aspect | Liquid Neural Networks | Feedforward Neural Networks |

|---|---|---|

| Architecture | Dynamic, continuous-time recurrent networks | Static, layer-by-layer signal flow |

| Memory Retention | Adaptive memory through liquid state | No inherent memory, static mapping |

| Temporal Processing | Excellent for real-time, time-series data | Poor temporal data handling |

| Training Complexity | Higher due to dynamic state equations | Simpler with backpropagation |

| Generalization | Strong generalization in dynamic environments | Limited to fixed input-output mappings |

| Use Cases | Robotics, adaptive control, time-series prediction | Image recognition, classification, static data tasks |

| Computational Efficiency | Potentially less efficient due to recurrent dynamics | More efficient for feedforward tasks |

Which is better?

Liquid neural networks excel in adaptability and real-time learning, making them ideal for dynamic environments with changing data patterns. Feedforward neural networks are simpler and faster for static tasks, offering efficient performance in image and speech recognition where input-output mappings remain consistent. The choice depends on application needs: flexibility favors liquid neural networks, while straightforward tasks benefit from feedforward architectures.

Connection

Liquid neural networks and feedforward neural networks are interconnected through their foundational use of artificial neurons to process data and learn patterns. While feedforward neural networks transmit information in one direction from input to output layers, liquid neural networks incorporate dynamic, time-sensitive components that enable adaptability and continuous learning. This connection highlights the evolution from static, layered architectures to more flexible, temporally aware models in advanced machine learning.

Key Terms

Architecture

Feedforward neural networks follow a fixed architecture where information flows in one direction from input to output layers without feedback loops, primarily designed for static data processing. Liquid neural networks incorporate adaptable, recurrent structures inspired by neural plasticity, enabling dynamic reconfiguration and improved handling of time-varying data and continuous learning. Explore the architectural innovations that distinguish liquid neural networks from traditional feedforward models for deeper insights.

Temporal Dynamics

Feedforward neural networks process data in a unidirectional flow without internal memory, limiting their ability to capture temporal dynamics. Liquid neural networks, inspired by biological neural circuits, utilize continuous-time dynamics and adaptable internal states to effectively model time-varying data and temporal dependencies. Explore the advantages of liquid neural networks for handling complex temporal patterns and their applications in real-time systems.

Adaptability

Feedforward neural networks process information in a fixed, unidirectional flow, limiting their ability to adapt dynamically to new or changing inputs without retraining. Liquid neural networks feature dynamic connections and adaptable internal states that enable real-time learning and flexible responses to evolving data patterns. Explore how liquid neural networks enhance adaptability beyond traditional feedforward architectures.

Source and External Links

Feed Forward Neural Network Definition - A feedforward neural network is a simple type of artificial neural network where information moves in only one direction through the input, hidden, and output layers; it is trained using backpropagation and activation functions like sigmoid, tanh, or ReLU to learn complex patterns.

Feedforward Neural Network - This type of network processes data sequentially from input neurons through one or more hidden layers to an output layer, adjusting weights to minimize prediction error and using activation functions to introduce non-linearity for modeling complex data.

Understanding Feedforward Neural Networks (FNNs) - FNNs are used in AI for tasks like classification and regression by processing input data forward through layers without loops or memory of past inputs; they are fundamental in applications such as credit scoring by analyzing individual inputs independently.

dowidth.com

dowidth.com