Generative Adversarial Networks (GANs) focus on creating realistic data samples by pitting a generator against a discriminator in a competitive learning process, commonly used in image synthesis and enhancement. Contrastive Learning, on the other hand, emphasizes learning representations by comparing and contrasting data pairs to improve tasks like clustering and classification without labeled data. Explore how these advanced machine learning techniques transform data generation and representation learning in modern AI applications.

Why it is important

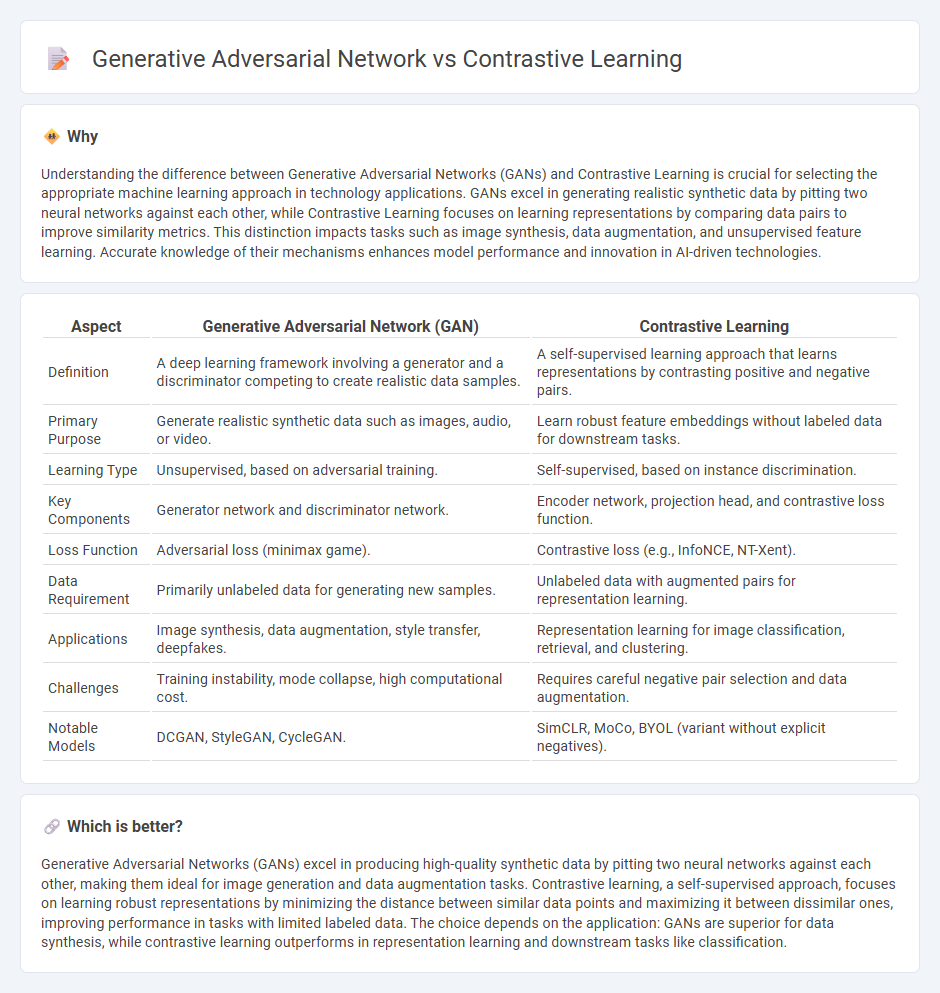

Understanding the difference between Generative Adversarial Networks (GANs) and Contrastive Learning is crucial for selecting the appropriate machine learning approach in technology applications. GANs excel in generating realistic synthetic data by pitting two neural networks against each other, while Contrastive Learning focuses on learning representations by comparing data pairs to improve similarity metrics. This distinction impacts tasks such as image synthesis, data augmentation, and unsupervised feature learning. Accurate knowledge of their mechanisms enhances model performance and innovation in AI-driven technologies.

Comparison Table

| Aspect | Generative Adversarial Network (GAN) | Contrastive Learning |

|---|---|---|

| Definition | A deep learning framework involving a generator and a discriminator competing to create realistic data samples. | A self-supervised learning approach that learns representations by contrasting positive and negative pairs. |

| Primary Purpose | Generate realistic synthetic data such as images, audio, or video. | Learn robust feature embeddings without labeled data for downstream tasks. |

| Learning Type | Unsupervised, based on adversarial training. | Self-supervised, based on instance discrimination. |

| Key Components | Generator network and discriminator network. | Encoder network, projection head, and contrastive loss function. |

| Loss Function | Adversarial loss (minimax game). | Contrastive loss (e.g., InfoNCE, NT-Xent). |

| Data Requirement | Primarily unlabeled data for generating new samples. | Unlabeled data with augmented pairs for representation learning. |

| Applications | Image synthesis, data augmentation, style transfer, deepfakes. | Representation learning for image classification, retrieval, and clustering. |

| Challenges | Training instability, mode collapse, high computational cost. | Requires careful negative pair selection and data augmentation. |

| Notable Models | DCGAN, StyleGAN, CycleGAN. | SimCLR, MoCo, BYOL (variant without explicit negatives). |

Which is better?

Generative Adversarial Networks (GANs) excel in producing high-quality synthetic data by pitting two neural networks against each other, making them ideal for image generation and data augmentation tasks. Contrastive learning, a self-supervised approach, focuses on learning robust representations by minimizing the distance between similar data points and maximizing it between dissimilar ones, improving performance in tasks with limited labeled data. The choice depends on the application: GANs are superior for data synthesis, while contrastive learning outperforms in representation learning and downstream tasks like classification.

Connection

Generative Adversarial Networks (GANs) and contrastive learning are connected through their shared goal of improving representation learning by leveraging both generative and discriminative approaches. GANs use a generator and a discriminator in a minimax game to produce realistic data samples, while contrastive learning optimizes an encoder by pulling together similar sample pairs and pushing apart dissimilar ones in the latent space. Combining these techniques enhances unsupervised learning by enabling models to generate high-quality synthetic data and learn robust, discriminative feature embeddings simultaneously.

Key Terms

Representation Learning

Contrastive learning emphasizes learning robust representations by maximizing agreement between differently augmented views of the same data, enabling efficient feature extraction without labeled data. Generative Adversarial Networks (GANs) focus on generating realistic data samples through a competitive process between a generator and a discriminator, which can also lead to useful latent representations. Explore in-depth analysis to understand the trade-offs and applications of both methods in representation learning.

Discriminator

Contrastive learning emphasizes distinguishing between similar and dissimilar data points by learning representations through comparison, whereas the discriminator in generative adversarial networks (GANs) focuses on differentiating real data from synthetic data produced by the generator. In GANs, the discriminator's primary role is to improve the generator's output quality by providing feedback on its authenticity, leveraging a binary classification task. Explore further to understand how the discriminator's architecture and training dynamics impact both contrastive learning and GAN performance.

Data Generation

Contrastive learning optimizes representation by distinguishing between similar and dissimilar data pairs, enhancing the model's ability to understand data structure without explicit data generation. Generative Adversarial Networks (GANs) actively generate new data samples by pitting a generator against a discriminator, enabling realistic data synthesis for applications like image and video creation. Explore the nuances and applications of these techniques to understand their impact on data generation.

Source and External Links

Full Guide to Contrastive Learning - Contrastive learning is a method that learns meaningful data representations by contrasting positive pairs (similar instances) against negative pairs (dissimilar instances), effectively mapping similar data close together and pushing apart different data in the latent space, widely applied in vision and NLP tasks using data augmentation and encoder networks for self-supervised learning.

Contrastive Representation Learning - Contrastive learning aims to create embedding spaces where similar samples are close and dissimilar samples are far apart, functioning in both supervised and unsupervised contexts and serving as a powerful self-supervised learning technique.

The Beginner's Guide to Contrastive Learning - This technique improves visual recognition by training models to minimize distance between an anchor and positive samples and maximize distance from negative samples, simulating human-like learning to distinguish between data distributions effectively.

dowidth.com

dowidth.com