Retrieval Augmented Generation (RAG) enhances language models by retrieving external knowledge at inference time, so that responses can be grounded in large collections of unstructured data. Zero-Shot Learning lets a model perform tasks or recognize classes for which it saw no labeled examples during training, by generalizing from semantic relationships it has already learned. This article compares the two approaches and their applications.

Why it is important

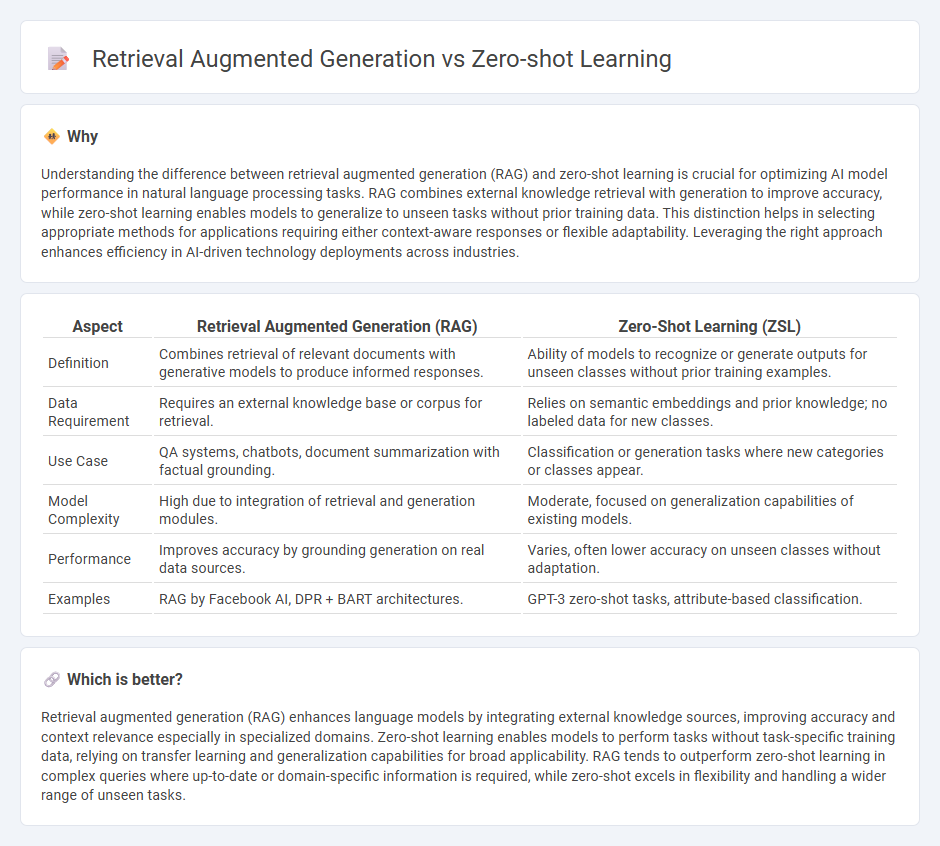

The difference between retrieval augmented generation (RAG) and zero-shot learning matters when choosing how to build a natural language processing system. RAG combines external knowledge retrieval with generation to improve factual accuracy, while zero-shot learning lets a model generalize to unseen tasks without task-specific training data. RAG suits applications that need context-aware, grounded responses; zero-shot learning suits applications that need flexibility across tasks. Matching the approach to the requirement can avoid unnecessary retrieval infrastructure or fine-tuning effort.

Comparison Table

| Aspect | Retrieval Augmented Generation (RAG) | Zero-Shot Learning (ZSL) |
|---|---|---|
| Definition | Combines retrieval of relevant documents with generative models to produce informed responses. | Ability of models to recognize or generate outputs for unseen classes without prior training examples. |
| Data Requirement | Requires an external knowledge base or corpus for retrieval. | Relies on semantic embeddings and prior knowledge; no labeled data for new classes. |
| Use Case | QA systems, chatbots, document summarization with factual grounding. | Classification or generation tasks where new categories or classes appear. |
| Model Complexity | High due to integration of retrieval and generation modules. | Moderate, focused on generalization capabilities of existing models. |
| Performance | Improves accuracy by grounding generation on real data sources. | Varies, often lower accuracy on unseen classes without adaptation. |
| Examples | RAG by Facebook AI, DPR + BART architectures. | GPT-3 zero-shot tasks, attribute-based classification. |
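
The attribute-based classification mentioned in the table can be illustrated with a minimal sketch. It assumes that some upstream model, trained only on seen classes, predicts attribute scores for an input; here those scores are simply passed in by hand. The attribute names, the class signatures, and the function `zero_shot_classify` are all hypothetical and chosen for illustration only.

```python
import math

# Hypothetical attribute signatures: [has_stripes, has_hooves, lives_in_water].
# A class can be added here without any labeled training examples for it.
CLASS_ATTRIBUTES = {
    "zebra": [1.0, 1.0, 0.0],
    "dolphin": [0.0, 0.0, 1.0],
    "horse": [0.0, 1.0, 0.0],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_classify(predicted_attributes, class_attributes=CLASS_ATTRIBUTES):
    """Return the class whose attribute signature is most similar to the input."""
    return max(
        class_attributes,
        key=lambda name: cosine(predicted_attributes, class_attributes[name]),
    )


# Attribute scores as an (assumed) upstream attribute predictor might emit them.
print(zero_shot_classify([0.9, 0.8, 0.1]))
print(zero_shot_classify([0.05, 0.1, 0.95]))
```

The classifier never sees examples of the target classes; it only compares predicted attributes against class descriptions, which is the auxiliary information that bridges seen and unseen classes.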

Which is better?

Retrieval augmented generation (RAG) enhances language models by integrating external knowledge sources, improving accuracy and context relevance especially in specialized domains. Zero-shot learning enables models to perform tasks without task-specific training data, relying on transfer learning and generalization capabilities for broad applicability. RAG tends to outperform zero-shot learning in complex queries where up-to-date or domain-specific information is required, while zero-shot excels in flexibility and handling a wider range of unseen tasks.

Connection

Retrieval augmented generation (RAG) enhances natural language processing by integrating external knowledge retrieval into the generative model, enabling more accurate and context-aware responses. Zero-shot learning complements RAG by allowing models to generalize and generate relevant outputs for unseen tasks or data without explicit prior training. Together, they improve AI systems' adaptability and knowledge utilization by combining retrieval of pertinent information with the ability to reason about novel queries.

Key Terms

Transfer Learning

Zero-shot learning leverages transfer learning by applying knowledge from related tasks to recognize unseen classes without additional training data, which is what allows the model to generalize. Retrieval augmented generation (RAG) also builds on transfer learning, since its generator is a pre-trained model, but adds external knowledge retrieval that fetches relevant information at query time to improve context-aware text generation. In both paradigms the pre-trained model supplies general capability; they differ in whether new knowledge is looked up or inferred.

Knowledge Retrieval

Zero-shot learning relies on knowledge already stored in the parameters of a pre-trained model: there is no task-specific training and no external lookup, so whatever the model "retrieves" comes from its learned semantic understanding. Retrieval augmented generation (RAG) combines a neural language model with an external knowledge base and fetches pertinent data at query time to improve response accuracy and contextual relevance. The two can be combined, for example by giving retrieved passages as context to a model that is prompted zero-shot.
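
The retrieval step in RAG can be sketched in a few lines. The example below is a toy under stated assumptions: it scores documents by bag-of-words cosine similarity, whereas systems such as DPR use learned dense embeddings. The function names `tokenize` and `retrieve` and the sample corpus are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, corpus, k=2):
    """Return up to k documents with non-zero similarity to the query, best first."""
    query_vector = Counter(tokenize(query))
    scored = [(cosine(query_vector, Counter(tokenize(doc))), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


corpus = [
    "RAG combines a retriever with a generator.",
    "Zero-shot learning generalizes to unseen classes.",
    "Bananas are rich in potassium.",
]
print(retrieve("What does a retriever do in RAG?", corpus, k=1))
```

In a full pipeline the returned passages would be placed in the generator's prompt; a zero-shot model skips this step entirely and answers from its parameters alone.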

Prompt Engineering

Zero-shot learning with pre-trained language models depends on the prompt: since there is no task-specific training data, a carefully worded instruction is what tells the model which task to perform. In Retrieval Augmented Generation (RAG), the prompt has an additional job. It must present the retrieved passages alongside the generation instructions so that the model grounds its answer in them, which improves relevance and factual accuracy. Prompt design therefore affects both zero-shot performance and the effectiveness of retrieval augmentation.
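
The contrast between the two prompt styles can be shown with a small sketch. The templates and the function names `zero_shot_prompt` and `rag_prompt` are illustrative assumptions, not a standard format; the wording of a real prompt would need tuning for the model in use.

```python
def zero_shot_prompt(task, text, labels):
    """Build a prompt that describes the task without giving any solved examples."""
    label_list = ", ".join(labels)
    return (
        f"Task: {task}\n"
        f"Allowed labels: {label_list}\n"
        f"Text: {text}\n"
        "Answer with exactly one label."
    )


def rag_prompt(question, passages):
    """Build a prompt that puts retrieved passages in front of the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered context passages. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


print(zero_shot_prompt("Classify sentiment", "I love it", ["positive", "negative"]))
print()
print(rag_prompt("Who introduced RAG?", ["RAG was introduced by Facebook AI."]))
```

The zero-shot template carries only an instruction and the input, while the RAG template adds retrieval cues (numbered passages) and an instruction to stay within them.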

