Diffusion models and GANs are two leading approaches in generative AI. Diffusion models create high-quality images by gradually removing noise, while GANs train a generator network against a discriminator network. Diffusion models are generally more stable to train and produce more diverse outputs, whereas GANs are prone to mode collapse. Explore further to understand the strengths and applications of each in modern AI development.

Why it is important

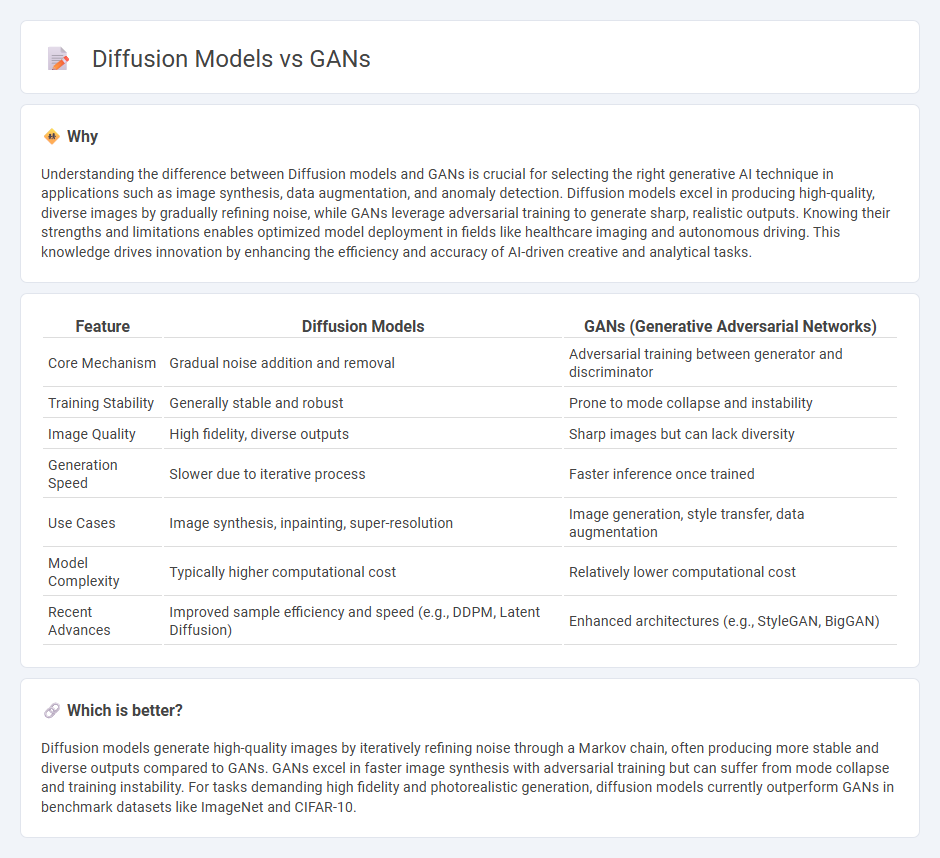

Understanding the difference between diffusion models and GANs is crucial for choosing the right generative technique for applications such as image synthesis, data augmentation, and anomaly detection. Diffusion models excel at producing high-quality, diverse images by gradually refining noise, while GANs use adversarial training to generate sharp, realistic outputs quickly. Knowing these strengths and limitations, such as sampling speed versus output diversity, helps teams deploy the right model in fields like medical imaging and autonomous driving.

Comparison Table

| Feature | Diffusion Models | GANs (Generative Adversarial Networks) |
|---|---|---|
| Core Mechanism | Gradual noise addition (forward process) and learned noise removal (reverse process) | Adversarial training between a generator and a discriminator |
| Training Stability | Generally stable | Prone to mode collapse and unstable training |
| Image Quality | High fidelity, diverse outputs | Sharp images, but often less diverse |
| Generation Speed | Slower: sampling requires many iterative denoising steps | Faster: one generator forward pass per sample |
| Use Cases | Image synthesis, inpainting, super-resolution | Image generation, style transfer, data augmentation |
| Computational Cost | Typically higher, especially at sampling time | Typically lower at inference |
| Notable Models and Advances | DDPM; faster samplers (e.g., DDIM); Latent Diffusion | Improved architectures (e.g., StyleGAN, BigGAN) |

Which is better?

Diffusion models generate high-quality images by iteratively refining noise through a Markov chain of denoising steps, and they are generally more stable to train and produce more diverse outputs than GANs. GANs synthesize images much faster, in a single forward pass, but adversarial training can suffer from mode collapse and instability. For tasks demanding high fidelity and photorealism, diffusion models have matched or surpassed GANs on standard benchmarks such as ImageNet and CIFAR-10 (measured by metrics like FID), though at a higher sampling cost.
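The iterative refinement described above can be sketched as DDPM-style ancestral sampling on a single scalar. This is a minimal sketch, not a production sampler: it assumes T = 1000 steps with a linear noise schedule from 1e-4 to 0.02 (the settings of the original DDPM paper), and it replaces the trained network with a hypothetical "oracle" that knows every training example equals 1.5, so the exact noise can be computed in closed form. The helper names (`linear_betas`, `alpha_bars_from`, `ddpm_sample`, `make_point_mass_oracle`) are illustrative.

```python
import math
import random

def linear_betas(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; index 0 corresponds to diffusion step t = 1."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]

def alpha_bars_from(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def ddpm_sample(eps_model, betas, rng):
    """Ancestral DDPM sampling of one scalar: start from noise, run T reverse steps."""
    alpha_bars = alpha_bars_from(betas)
    x = rng.gauss(0.0, 1.0)                          # x_T ~ N(0, 1)
    for t in reversed(range(len(betas))):             # one model call per step
        alpha_t = 1.0 - betas[t]
        eps_hat = eps_model(x, t)                     # predicted noise in x_t
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(alpha_t)
        z = rng.gauss(0.0, 1.0) if t > 0 else 0.0     # no fresh noise on the last step
        x = mean + math.sqrt(betas[t]) * z            # variance choice sigma_t^2 = beta_t
    return x

def make_point_mass_oracle(target, betas):
    """HYPOTHETICAL stand-in for a trained network: if every training example
    equals `target`, the noise in x_t can be recovered exactly."""
    alpha_bars = alpha_bars_from(betas)
    def eps_model(x_t, t):
        ab = alpha_bars[t]
        return (x_t - math.sqrt(ab) * target) / math.sqrt(1.0 - ab)
    return eps_model

betas = linear_betas()
sample = ddpm_sample(make_point_mass_oracle(1.5, betas), betas, random.Random(0))
print(round(sample, 6))  # 1.5
```

The loop calls the model once per step, so this sampler needs 1000 network evaluations per sample, whereas a GAN generator needs one; that gap is the "Generation Speed" row in the comparison table.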

Connection

Diffusion models and Generative Adversarial Networks (GANs) both belong to the family of generative models in artificial intelligence, designed to create realistic synthetic data by learning the underlying data distribution. GANs rely on a competitive training process between a generator and a discriminator to produce high-fidelity outputs, while diffusion models generate data through iterative denoising steps that reverse a gradual noise-corruption process. Both approaches have advanced image synthesis and representation learning by learning to generate complex, high-dimensional data such as natural images.
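For reference, the two training objectives can be written side by side. The first is the minimax value function of the original GAN formulation, where D(x) is the discriminator's probability that x is real and G(z) maps a noise vector z to a sample. The second is the simplified DDPM noise-prediction loss (Ho et al., 2020), where ε_θ is the denoising network and ᾱ_t is the product of (1 − β_s) for s = 1..t under a chosen noise schedule β_1, ..., β_T. These are the standard textbook forms, shown as a summary rather than the exact losses any particular model uses.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

L_{\text{simple}}(\theta) =
  \mathbb{E}_{t,\, x_0,\, \epsilon \sim \mathcal{N}(0, I)}
  \left[\left\lVert \epsilon - \epsilon_\theta\!\left(\sqrt{\bar\alpha_t}\, x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon,\; t\right)\right\rVert^2\right]
```

The structural difference is visible in the formulas: the GAN objective is a two-player game, while the diffusion loss is an ordinary regression problem, which is one reason diffusion training tends to be more stable.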

Key Terms

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, that compete to produce realistic synthetic data; they are often used in image synthesis and video generation. GANs can generate high-resolution images quickly, in a single forward pass, but they can suffer from training instability and mode collapse, which limits the diversity of their outputs. Explore GAN architectures and applications further to understand their impact on creative AI technologies.
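In practice, the generator-versus-discriminator competition reduces to two binary cross-entropy losses. The sketch below computes them in plain Python from discriminator output probabilities for a hypothetical batch, without any real network; the generator loss uses the "non-saturating" form (maximize log D(G(z))) that the original GAN paper recommends over minimizing log(1 − D(G(z))). Function names are illustrative.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """D is rewarded for outputting ~1 on real data and ~0 on generated data."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D labels its samples as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Discriminator outputs (probability of "real") for hypothetical batches
confident_d = discriminator_loss([0.99, 0.98], [0.01, 0.02])
confused_d = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(round(confused_d, 4))              # 1.3863
print(round(generator_loss([0.01]), 4))  # 4.6052
```

A confident discriminator drives its own loss toward zero while the generator's loss grows; when the discriminator is maximally confused (0.5 everywhere), its loss equals log 4, the value at the theoretical equilibrium of the minimax game.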

Denoising Diffusion Probabilistic Models (DDPMs)

Denoising Diffusion Probabilistic Models (DDPMs) generate high-quality, diverse images by iteratively refining noise through a Markov chain; compared with GANs, they train more stably and are less prone to mode collapse. DDPMs pair a fixed forward diffusion process, which gradually adds Gaussian noise to data, with a learned reverse denoising process that recovers the signal step by step; the number of sampling steps trades generation quality against speed. Explore advanced architectures and training strategies to better understand the latest advances in diffusion-based image synthesis.
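The forward diffusion process has a convenient closed form: a noisy sample at any step t can be drawn directly from the clean data as x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The sketch below computes a linear noise schedule (assuming the DDPM paper's defaults of T = 1000 and β from 1e-4 to 0.02) and applies the forward process to a toy three-value "data" vector; the helper names and the data are hypothetical.

```python
import math
import random

def linear_betas(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; index 0 corresponds to diffusion step t = 1."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]

def alpha_bars_from(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the fraction of signal kept."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def q_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    ab = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    return x_t, eps  # eps is the regression target for the denoising network

betas = linear_betas()
alpha_bars = alpha_bars_from(betas)
rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]                              # toy "data" vector (hypothetical)
x_early, _ = q_sample(x0, 0, alpha_bars, rng)      # barely noised
x_late, eps = q_sample(x0, 999, alpha_bars, rng)   # almost pure noise
print(round(alpha_bars[0], 4), alpha_bars[-1] < 1e-3)  # 0.9999 True
```

Because x_t can be sampled for any t without simulating the whole chain, training can pick random steps and ask the network to predict ε from x_t, which is the simplified DDPM training objective.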

Latent Space

GANs use a latent space in which the generator network maps random vectors to realistic data samples, learning the data distribution through adversarial training. Standard diffusion models start from pure noise with the same dimensions as the data and refine it through iterative denoising steps; latent diffusion models (for example, Stable Diffusion) instead run the denoising process in the compressed latent space of a pretrained autoencoder, which makes high-resolution generation cheaper. Explore the mechanics and applications of latent spaces in GANs and diffusion models to deepen your understanding of these generative techniques.
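Latent diffusion models run their denoising steps on an autoencoder's compressed latent rather than on pixels, so each step processes far fewer values. The arithmetic below assumes a spatial downsampling factor of 8 and 4 latent channels, settings commonly associated with Stable Diffusion's autoencoder; treat them as illustrative assumptions, and `latent_shape` as a hypothetical helper.

```python
import math

def latent_shape(height, width, downsample=8, latent_channels=4):
    """Latent shape for a HYPOTHETICAL autoencoder with the given settings."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (height // downsample, width // downsample, latent_channels)

pixel_shape = (512, 512, 3)        # RGB image
z_shape = latent_shape(512, 512)   # compressed latent
ratio = math.prod(pixel_shape) / math.prod(z_shape)
print(z_shape, math.prod(pixel_shape), math.prod(z_shape), ratio)
# (64, 64, 4) 786432 16384 48.0
```

Under these assumptions, every denoising step works on 48 times fewer values than pixel-space diffusion at the same resolution.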

dowidth.com