Self-supervised learning leverages unlabeled data by creating surrogate (pretext) tasks, enabling models to learn rich, general-purpose representations without manual annotations. Weakly supervised learning uses limited or noisy labels, such as incomplete or coarse annotations, to train models when fully labeled data is scarce or expensive to obtain. This article compares the two paradigms and the settings where each is applied.

Why it is important

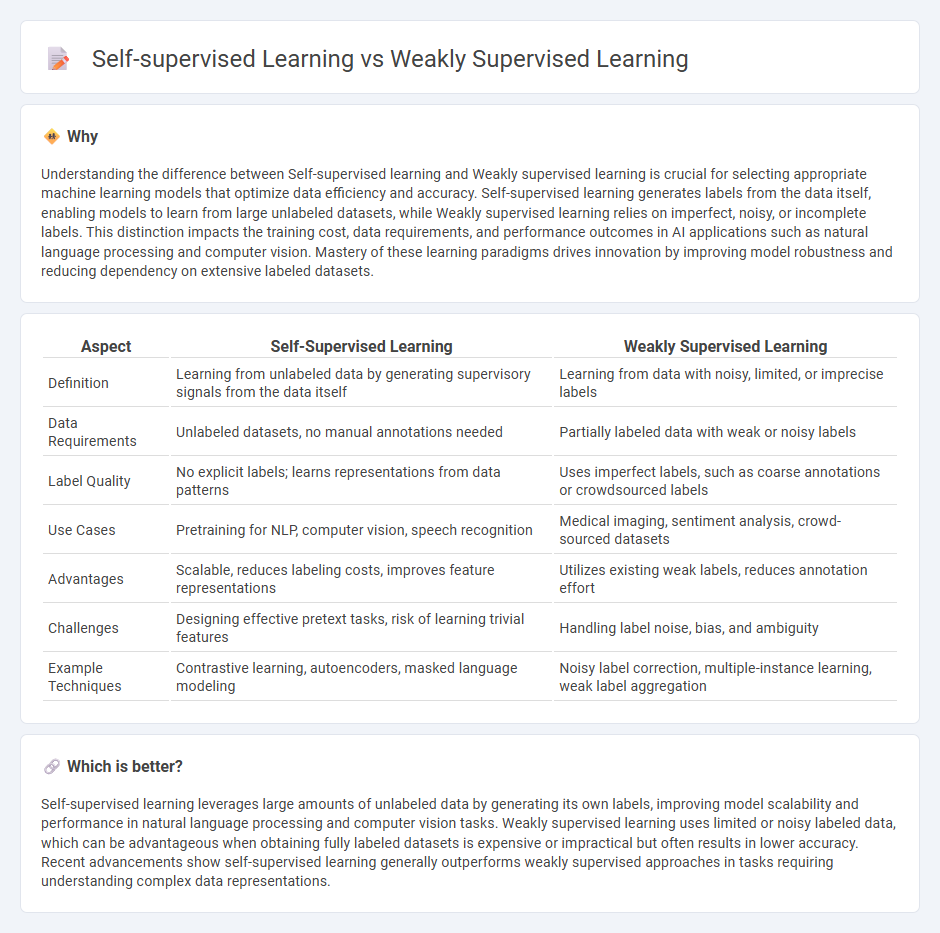

Understanding the difference between self-supervised and weakly supervised learning helps practitioners choose a training approach that balances data efficiency and accuracy. Self-supervised learning generates labels from the data itself, so models can learn from large unlabeled datasets, while weakly supervised learning relies on imperfect, noisy, or incomplete labels. The choice affects training cost, data requirements, and final performance in applications such as natural language processing and computer vision. Both paradigms reduce dependence on large, carefully labeled datasets, which lowers the cost of building models and can improve their robustness.

Comparison Table

| Aspect | Self-Supervised Learning | Weakly Supervised Learning |
|---|---|---|
| Definition | Learning from unlabeled data by generating supervisory signals from the data itself | Learning from data with noisy, limited, or imprecise labels |
| Data Requirements | Unlabeled datasets, no manual annotations needed | Partially labeled data with weak or noisy labels |
| Label Quality | No explicit labels; learns representations from data patterns | Uses imperfect labels, such as coarse annotations or crowdsourced labels |
| Use Cases | Pretraining for NLP, computer vision, speech recognition | Medical imaging, sentiment analysis, crowdsourced datasets |
| Advantages | Scalable, reduces labeling costs, improves feature representations | Utilizes existing weak labels, reduces annotation effort |
| Challenges | Designing effective pretext tasks, risk of learning trivial features | Handling label noise, bias, and ambiguity |
| Example Techniques | Contrastive learning, autoencoders, masked language modeling | Noisy label correction, multiple-instance learning, weak label aggregation |
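As a sketch of the contrastive learning entry in the table, the function below computes a simplified, one-directional InfoNCE loss in plain Python. It assumes each example has already been encoded twice (two augmented views) into the hypothetical `anchors` and `positives` lists; the encoder and the augmentations are not shown, and the temperature of 0.1 is an illustrative choice, not a recommended setting.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, temperature=0.1):
    """Average one-directional InfoNCE loss over a batch.

    anchors[i] and positives[i] embed two views of the same example;
    every positives[j] with j != i serves as a negative for anchors[i].
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(logit - m) for logit in logits))
        # Cross-entropy where the matching view is the correct "class".
        total += log_denominator - logits[i]
    return total / len(anchors)


# Usage: matched views should score a lower loss than mismatched ones.
views_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = info_nce(views_a, [[0.9, 0.1], [0.1, 0.9]])
swapped = info_nce(views_a, [[0.1, 0.9], [0.9, 0.1]])
print(aligned < swapped)  # True
```

The pretext "label" here is simply the index of the matching view, so no annotation is needed. Full implementations such as SimCLR typically use both views as anchors and compute the loss with vectorized tensor operations; this version only illustrates the objective.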

Which is better?

Self-supervised learning exploits large amounts of unlabeled data by generating its own labels, which lets it scale well and has driven strong results in natural language processing and computer vision. Weakly supervised learning uses limited or noisy labels, which is advantageous when fully labeled datasets are expensive or impractical to obtain, although noisy supervision typically yields lower accuracy than clean, complete labels. Many recent results favor self-supervised pretraining for learning complex, general-purpose representations, but the better choice depends on the task and the quality of the available weak labels, and the two are frequently combined.

Connection

Self-supervised learning derives supervisory signals (pseudo-labels) from the input data itself, while weakly supervised learning trains on imperfect or noisy labels. The two are complementary: a model is often pretrained with a self-supervised objective and then adapted to a specific task using weak labels. Both reduce reliance on large, fully annotated datasets, which improves scalability in applications such as natural language processing and computer vision, and both aim to extract meaningful representations from less annotated data.
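One way to see how the two paradigms fit together is a minimal sketch in which embeddings from a self-supervised encoder are reused with weak labels. The embeddings below are hypothetical stand-ins for encoder output, a weak label of `None` means no weak source produced a label, and the nearest-centroid classifier is a deliberately simple substitute for the linear probe or fine-tuning step used in practice.

```python
def fit_centroids(embeddings, weak_labels):
    """Average the embeddings for each weak label, skipping abstentions (None)."""
    sums, counts = {}, {}
    for vec, label in zip(embeddings, weak_labels):
        if label is None:
            continue  # unlabeled by every weak source: ignored here
        if label not in sums:
            sums[label] = [0.0] * len(vec)
            counts[label] = 0
        sums[label] = [s + x for s, x in zip(sums[label], vec)]
        counts[label] += 1
    return {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}


def predict(centroids, vec):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, vec))
    return min(centroids, key=lambda lab: dist(centroids[lab]))


# Hypothetical embeddings from a pretrained (self-supervised) encoder.
embeddings = [[0.9, 0.1], [1.0, 0.0], [0.1, 0.9], [0.0, 1.0], [0.5, 0.5]]
weak_labels = [1, 1, 0, 0, None]  # noisy/partial labels from weak sources
centroids = fit_centroids(embeddings, weak_labels)
print(predict(centroids, [0.8, 0.2]))  # 1
```

The self-supervised stage supplies the representation; the weak labels only have to separate classes in that space, which is why relatively few or imperfect labels can suffice.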

Key Terms

Labeled Data

Weakly supervised learning trains on limited labeled data, often with noisy or imprecise labels, while self-supervised learning generates labels automatically from unlabeled data through pretext tasks, reducing dependence on manual annotation. In weakly supervised learning, the available labels, however imperfect, guide training directly; self-supervised learning instead relies on the intrinsic structure of the data to learn representations. Both approaches aim to reduce how much labeled data a machine learning system requires.
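To make "generating labels automatically" concrete, the sketch below turns an unlabeled token sequence into a masked-language-modeling training pair: the hidden tokens become the targets. The `[MASK]` token and the 15% default rate follow the BERT convention; unlike BERT, this simplified version always substitutes `[MASK]` rather than sometimes keeping or randomizing the selected token, and whitespace tokenization is an assumption for illustration.

```python
import random

MASK = "[MASK]"


def make_masked_example(tokens, mask_prob=0.15, seed=None):
    """Turn an unlabeled token sequence into an (inputs, targets) pair.

    inputs:  copy of tokens with some positions replaced by MASK
    targets: dict mapping each masked position to its original token,
             i.e. the label generated from the data itself
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            targets[i] = tok
            inputs[i] = MASK
    return inputs, targets


# Usage on raw, unannotated text (whitespace tokenization is an assumption).
tokens = "self supervised models learn from raw text".split()
inputs, targets = make_masked_example(tokens, mask_prob=0.3, seed=0)
print(inputs)
print(targets)
```

A model trained to recover `targets` from `inputs` learns about word usage and context without any human-written label.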

Pretext Task

Pretext tasks are central to self-supervised learning: they create supervisory signals from the data itself, for example by predicting how an image was rotated or by solving jigsaw puzzles built from image patches, so the model learns useful features without human labels. In weakly supervised learning, the limited or noisy labels themselves provide the training signal, so a pretext task is optional; when the two are combined, a pretext task is typically used for pretraining before the model is trained on weak labels. The design of the pretext task largely determines how useful the learned representations are, which is a major source of performance differences between methods.
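The rotation-prediction pretext task can be sketched without any imaging library by treating an image as a 2D list of pixel values (an assumption made to keep the example self-contained). Each image yields four training examples whose labels, 0 to 3 for 0°, 90°, 180°, and 270° clockwise, come for free from the transformation applied; the classifier that learns to predict those labels is not shown.

```python
def rotate90(image):
    """Rotate a 2D grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def rotation_pretext(image):
    """Return four (rotated_image, label) pairs; label k means k * 90 degrees."""
    examples = []
    current = [list(row) for row in image]  # copy so the input is untouched
    for k in range(4):
        examples.append((current, k))
        current = rotate90(current)
    return examples


# Usage: a tiny 2x2 "image" produces four self-labeled training examples.
image = [[1, 2], [3, 4]]
pairs = rotation_pretext(image)
print([label for _, label in pairs])  # [0, 1, 2, 3]
```

To predict the rotation correctly, a model has to recognize object orientation and shape, which is why this cheap label can still yield useful visual features.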

Annotation Efficiency

Weakly supervised learning uses limited or imprecise annotations, such as noisy labels or coarse-grained tags, which significantly reduces labeling cost and effort compared with fully supervised approaches. Self-supervised learning creates supervisory signals from the data itself, so pretraining requires no manual labeling at all; annotations are typically needed only for a smaller downstream fine-tuning or evaluation set. Both approaches change how annotation budgets are spent and can shorten the path from raw data to a trained model.
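A common way to obtain weak labels cheaply is to write heuristic labeling functions and combine their votes, the approach popularized by Snorkel. The sketch below uses hypothetical keyword rules for sentiment and a plain majority vote with abstentions; Snorkel itself learns how much to trust each source rather than counting votes equally, so treat this as a baseline illustration, not its API.

```python
from collections import Counter

ABSTAIN = None
POS, NEG = 1, 0


# Hypothetical labeling functions: cheap, noisy heuristics, not ground truth.
def lf_contains_great(text):
    return POS if "great" in text.lower() else ABSTAIN


def lf_contains_awful(text):
    return NEG if "awful" in text.lower() else ABSTAIN


def lf_exclamation(text):
    return POS if text.endswith("!") else ABSTAIN


LABELING_FUNCTIONS = [lf_contains_great, lf_contains_awful, lf_exclamation]


def weak_label(text, lfs=LABELING_FUNCTIONS):
    """Majority vote over non-abstaining labeling functions; ties abstain."""
    votes = [v for v in (lf(text) for lf in lfs) if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # conflicting sources with equal support
    return ranked[0][0]


print(weak_label("What a great movie!"))  # 1
print(weak_label("An awful plot."))  # 0
```

Running a few such functions over an unlabeled corpus produces a large, noisy training set in minutes; the remaining manual effort goes into writing and checking the rules rather than labeling individual examples.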

Source and External Links

Weak supervision - Wikipedia - Weak supervision, also known as semi-supervised learning, is a machine learning paradigm that uses a small amount of human-labeled data combined with a large amount of unlabeled data to train models, useful when labeled data is expensive or hard to acquire.

An Introduction to Weakly Supervised Learning - Paperspace blog - Weakly supervised learning includes incomplete, inexact, or incorrect supervision, where models learn from limited or noisy labeled data combined with large unlabeled datasets, featuring methods like active learning, semi-supervised learning, and transfer learning.

Essential Guide to Weak Supervision - Snorkel AI - Weak supervision leverages scalable but noisy sources of labels, combining multiple weak signals via labeling functions to create large training sets more quickly than manual labeling, with methods to automatically learn confidence in different sources.

dowidth.com
