Neural Radiance Fields (NeRF) use a neural network to represent a scene as a continuous volumetric function of density and view-dependent color, so photorealistic images can be rendered from camera viewpoints that were never photographed. Polygonal modeling constructs 3D objects from vertices, edges, and faces; it provides precise control over shape but often requires extensive manual effort, and complex lighting effects have to be added through separate materials and shading. This article compares the two approaches and their practical applications.

Why it is important

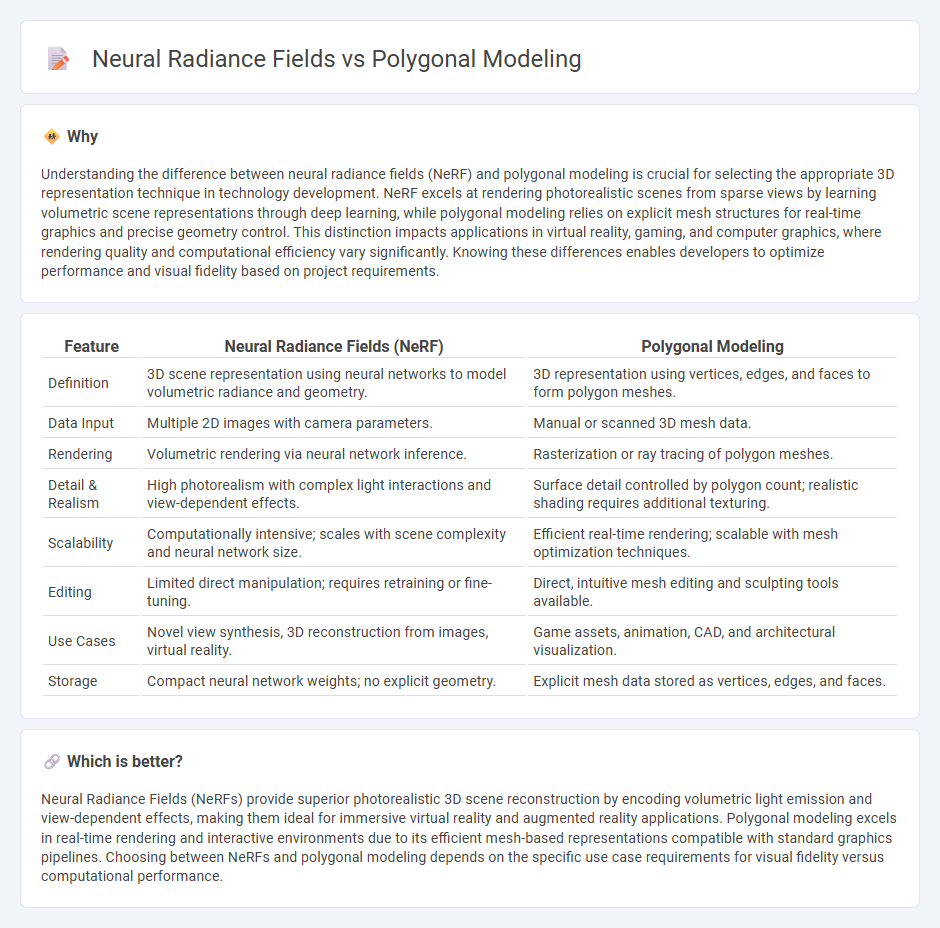

Understanding the difference between neural radiance fields (NeRF) and polygonal modeling is crucial for selecting the appropriate 3D representation for a project. NeRF renders photorealistic novel views by learning a volumetric scene representation from a set of 2D images with known camera parameters, while polygonal modeling relies on explicit mesh structures that suit real-time graphics and precise geometry control. This distinction matters in virtual reality, gaming, and computer graphics, where the two approaches trade rendering quality against computational cost in different ways. Knowing these differences lets developers balance performance and visual fidelity against project requirements.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Polygonal Modeling |
|---|---|---|
| Definition | 3D scene representation using neural networks to model volumetric radiance and geometry. | 3D representation using vertices, edges, and faces to form polygon meshes. |
| Data Input | Multiple 2D images with camera parameters. | Manual or scanned 3D mesh data. |
| Rendering | Volumetric rendering via neural network inference. | Rasterization or ray tracing of polygon meshes. |
| Detail & Realism | High photorealism with complex light interactions and view-dependent effects. | Surface detail controlled by polygon count; realistic shading requires additional texturing. |
| Scalability | Computationally intensive; scales with scene complexity and neural network size. | Efficient real-time rendering; scalable with mesh optimization techniques. |
| Editing | Limited direct manipulation; requires retraining or fine-tuning. | Direct, intuitive mesh editing and sculpting tools available. |
| Use Cases | Novel view synthesis, 3D reconstruction from images, virtual reality. | Game assets, animation, CAD, and architectural visualization. |
| Storage | Compact neural network weights; no explicit geometry. | Explicit mesh data stored as vertices, edges, and faces. |
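
The Storage row can be made concrete with a back-of-the-envelope estimate. The sketch below is illustrative only: the layer widths and mesh sizes are hypothetical values rather than measurements from any particular model or asset, and `mlp_param_count` and `mesh_bytes` are helper names introduced here. It assumes a small fully connected network that maps a 5D input (position plus viewing direction) to 4 outputs (color plus density), and an indexed triangle mesh that stores only positions and face indices.

```python
def mlp_param_count(layer_widths):
    """Count the weights and biases of a fully connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_widths, layer_widths[1:]))


def mesh_bytes(num_vertices, num_triangles, bytes_per_float=4, bytes_per_index=4):
    """Raw size of an indexed triangle mesh: xyz per vertex, 3 indices per face."""
    return num_vertices * 3 * bytes_per_float + num_triangles * 3 * bytes_per_index


# Hypothetical network: 5 inputs, four hidden layers of 256 units, 4 outputs.
hypothetical_widths = [5, 256, 256, 256, 256, 4]
network_bytes = mlp_param_count(hypothetical_widths) * 4  # 32-bit floats

# Hypothetical mesh: 100,000 vertices and 200,000 triangles.
asset_bytes = mesh_bytes(100_000, 200_000)

print(f"network weights: {network_bytes / 1e6:.2f} MB")
print(f"mesh geometry:   {asset_bytes / 1e6:.2f} MB")
```

Under these assumptions the network size is fixed by its architecture, whereas the mesh size grows with vertex and face counts. Real assets also carry normals, UVs, and textures, and real NeRF variants may store additional feature grids, so the comparison is only indicative.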

Which is better?

Neither approach is better in every case. Neural Radiance Fields (NeRFs) can reconstruct scenes from photographs with high photorealism because they encode volumetric density and view-dependent radiance, which suits novel view synthesis for virtual reality and augmented reality content. Polygonal modeling excels in real-time rendering and interactive environments because its mesh-based representations are efficient and compatible with standard graphics pipelines. The choice depends on how a given use case weighs visual fidelity against computational performance and editability.

Connection

Neural radiance fields (NeRF) encode a 3D scene by optimizing a neural network until its volume-rendered images match the input photographs, while polygonal modeling represents objects as meshes of polygons connected at shared vertices. Recent work combines NeRF's continuous volumetric representation with explicit mesh geometry, for example by extracting a mesh from a trained radiance field, to improve 3D reconstruction accuracy. Such hybrids serve augmented reality, computer graphics, and photorealistic rendering by drawing on the strengths of both volumetric and surface-based 3D modeling.

Key Terms

Mesh (Polygonal Modeling)

Polygonal modeling structures 3D objects using vertices, edges, and faces to create meshes, offering precise control over shape and topology that is essential for animation and real-time rendering. Neural Radiance Fields (NeRF) represent scenes with continuous volumetric functions, excelling in photorealistic view synthesis but producing no explicit mesh that traditional geometry tools can manipulate. Polygonal meshes therefore remain pivotal for workflows that require editable, explicit 3D representations.
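
As a minimal sketch of what "explicit" means here, the following Python snippet stores a mesh as a vertex list plus faces that index into it. The `Mesh` class and its `edges` method are hypothetical names used for illustration and are not taken from any particular modeling package.

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    vertices: list  # list of (x, y, z) tuples
    faces: list     # list of tuples of vertex indices

    def edges(self):
        """Return the set of undirected edges implied by the faces."""
        result = set()
        for face in self.faces:
            for i in range(len(face)):
                a, b = face[i], face[(i + 1) % len(face)]
                result.add((min(a, b), max(a, b)))
        return result


# A tetrahedron: 4 vertices and 4 triangular faces.
tetra = Mesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    faces=[(0, 2, 1), (0, 1, 3), (1, 2, 3), (0, 3, 2)],
)

# Direct editing: moving one vertex changes the shape immediately,
# with no retraining step.
tetra.vertices[3] = (0.0, 0.0, 2.0)

# Topology check: V - E + F equals 2 for a closed, sphere-like mesh.
euler = len(tetra.vertices) - len(tetra.edges()) + len(tetra.faces)
```

Because the geometry is stored as plain data, an edit is a single assignment and the topology can be inspected directly, which is the kind of control the paragraph above describes.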

Voxels (Neural Radiance Fields)

Polygonal modeling relies on vertices, edges, and faces to create 3D objects defined by discrete geometric shapes, whereas Neural Radiance Fields (NeRFs) represent scenes as continuous volumetric data, assigning a density and a view-dependent color to every point in space. The original NeRF formulation stores this function in neural network weights rather than in a voxel grid, although some variants store densities and radiance features in voxel grids to speed up training and rendering. In both cases, encoding volumetric density and radiance allows realistic lighting and semi-transparent effects that are harder to capture with polygonal surfaces alone.
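
The way densities and radiance turn into a pixel can be sketched with the standard volume rendering quadrature used in NeRF-style methods: each sample along a camera ray contributes its color, weighted by its own opacity and by the fraction of light that survived the samples in front of it. The function name `render_ray` and the sample values are assumptions made for this illustration; in a real system the densities and colors would come from the network or voxel grid.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one ray, ordered front to back.

    densities: non-negative volume density (sigma) at each sample
    colors:    (r, g, b) radiance at each sample
    deltas:    distance between consecutive samples
    Returns the pixel color and the remaining transmittance.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# Hypothetical ray: two empty samples followed by a dense red one.
pixel, remaining = render_ray(
    densities=[0.0, 0.0, 1000.0],
    colors=[(0.0, 0.0, 1.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[0.1, 0.1, 0.1],
)
```

In this example the empty samples contribute nothing and the dense sample dominates, so the pixel comes out red. Lower densities would let samples farther along the ray show through, which is how semi-transparent effects arise.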

Differentiable Rendering

Differentiable rendering bridges the gap between polygonal modeling and neural radiance fields (NeRFs) by enabling gradient-based optimization in 3D reconstruction and scene editing. NeRF training depends on it: the volume rendering step is differentiable, so the image reconstruction error can be propagated back to the scene parameters. Explicit meshes can likewise be optimized through differentiable renderers, which provide gradients with respect to geometry and textures while keeping the mesh directly editable.
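
The idea can be illustrated with a deliberately tiny example: a single grayscale "pixel" produced by one volumetric sample over a black background, fitted to a target value by gradient descent. This is a toy sketch under stated assumptions, not how any production renderer is implemented; the function names, learning rate, and starting values are all hypothetical, and the gradients are derived by hand instead of by an automatic differentiation library.

```python
import math


def render(sigma, color, delta=1.0, background=0.0):
    """Render one grayscale pixel from a single sample over a background."""
    alpha = 1.0 - math.exp(-sigma * delta)
    return alpha * color + (1.0 - alpha) * background


def loss_and_grads(sigma, color, target, delta=1.0, background=0.0):
    """Squared error and its analytic gradients w.r.t. sigma and color."""
    residual = render(sigma, color, delta, background) - target
    d_pixel_d_color = 1.0 - math.exp(-sigma * delta)
    d_pixel_d_sigma = (color - background) * delta * math.exp(-sigma * delta)
    return (residual ** 2,
            2.0 * residual * d_pixel_d_sigma,
            2.0 * residual * d_pixel_d_color)


def fit(target, sigma=1.0, color=0.5, lr=0.5, steps=200):
    """Plain gradient descent on the scene parameters."""
    for _ in range(steps):
        _, grad_sigma, grad_color = loss_and_grads(sigma, color, target)
        sigma -= lr * grad_sigma
        color -= lr * grad_color
    return sigma, color


fitted_sigma, fitted_color = fit(target=0.6)
fitted_pixel = render(fitted_sigma, fitted_color)
```

The same loop structure applies when the parameters are network weights or mesh vertex positions: the only requirement is that the rendering function provides gradients from the pixel error back to those parameters.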


dowidth.com