Graph Neural Networks (GNNs) process data structured as graphs, using the relationships between nodes to support tasks such as node classification and link prediction. Autoencoders learn compact data representations by encoding inputs into lower-dimensional embeddings and reconstructing them, and are widely used for dimensionality reduction and anomaly detection. The sections below compare the two model families, their typical architectures, and their applications.

Why it is important

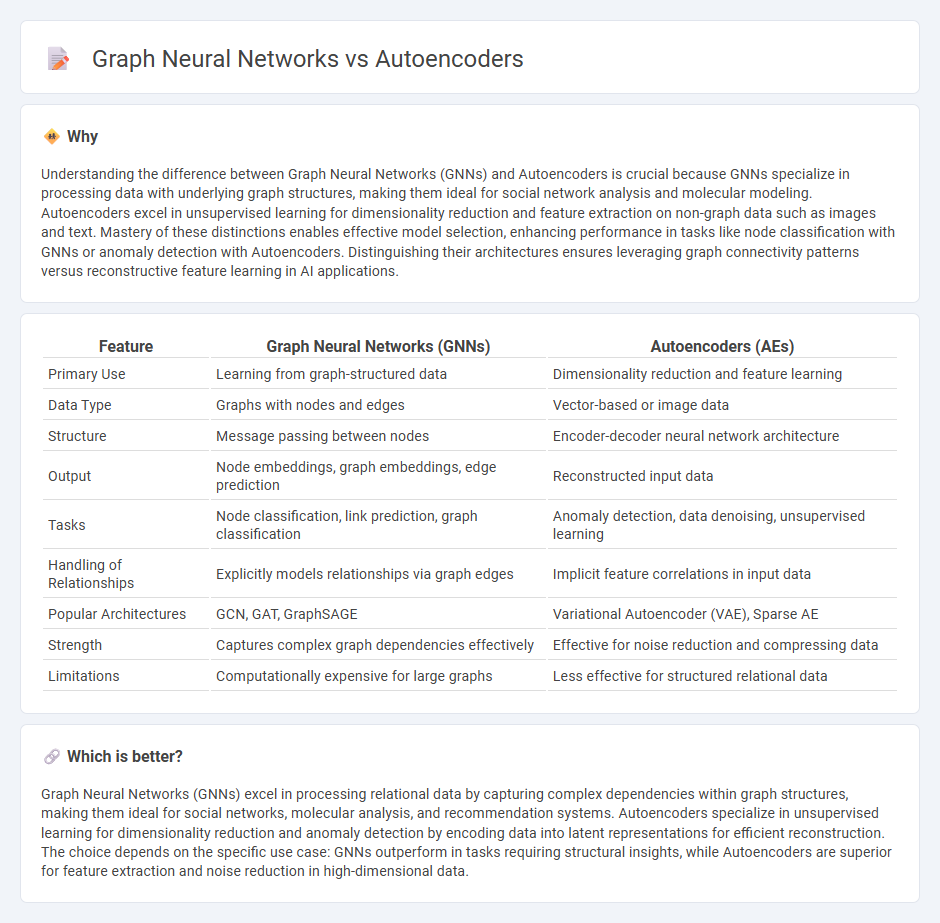

Understanding the difference between Graph Neural Networks (GNNs) and Autoencoders matters because the two address different problems. GNNs specialize in data with an underlying graph structure, which makes them a natural fit for social network analysis and molecular modeling. Autoencoders are unsupervised models for dimensionality reduction and feature extraction, typically applied to non-graph data such as images and text. Knowing the distinction supports sound model selection: GNNs for tasks like node classification, where graph connectivity carries the signal, and Autoencoders for tasks like anomaly detection, where reconstruction-based feature learning is the goal.

Comparison Table

| Feature | Graph Neural Networks (GNNs) | Autoencoders (AEs) |
|---|---|---|
| Primary Use | Learning from graph-structured data | Dimensionality reduction and feature learning |
| Data Type | Graphs with nodes and edges | Vector-based or image data |
| Structure | Message passing between nodes | Encoder-decoder neural network architecture |
| Output | Node embeddings, graph embeddings, edge predictions | Reconstructed input data |
| Tasks | Node classification, link prediction, graph classification | Anomaly detection, data denoising, unsupervised learning |
| Handling of Relationships | Explicitly models relationships via graph edges | Implicit feature correlations in input data |
| Popular Architectures | GCN, GAT, GraphSAGE | Variational Autoencoder (VAE), Sparse AE |
| Strength | Captures complex graph dependencies effectively | Effective for noise reduction and compressing data |
| Limitations | Computationally expensive for large graphs | Less effective for structured relational data |

Which is better?

Neither model is better in general; the choice depends on the data and the task. Graph Neural Networks (GNNs) process relational data by capturing dependencies within graph structures, which suits social networks, molecular analysis, and recommendation systems. Autoencoders are unsupervised models that encode data into latent representations and reconstruct it, which suits dimensionality reduction and anomaly detection. GNNs are the stronger choice when the task depends on structural information, while Autoencoders are the stronger choice for feature extraction and noise reduction in high-dimensional, non-relational data.

Connection

Graph neural networks (GNNs) and autoencoders are connected through their shared capability to learn efficient data representations; GNNs capture structured relationships in graph data while autoencoders encode and decode input features to uncover underlying patterns. Combining GNNs with autoencoders enables the extraction of low-dimensional embeddings that preserve graph topology and node attributes, enhancing tasks like node classification and link prediction. This integration leverages the strengths of both models to improve performance in complex networked data analysis.
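One common way to combine the two is a graph autoencoder, in which a GNN acts as the encoder and a simple decoder scores candidate edges from the resulting node embeddings. The sketch below shows only the decoder side, assuming an inner-product decoder with a sigmoid. The embeddings in `z` are hypothetical hand-picked values standing in for the output of a trained GNN encoder, and `edge_probability` is an illustrative name, not a library function.

```python
import math

def edge_probability(z_u, z_v):
    """Inner-product decoder: sigmoid of the dot product of two node embeddings."""
    score = sum(a * b for a, b in zip(z_u, z_v))
    return 1.0 / (1.0 + math.exp(-score))

# Hypothetical 2-d embeddings, as if produced by a GNN encoder (assumed values).
z = {
    "a": [2.0, 0.0],
    "b": [1.5, 0.5],
    "c": [-2.0, 0.0],
}

# Nodes with similar embeddings get a high edge score, dissimilar ones a low score.
p_ab = edge_probability(z["a"], z["b"])  # dot product 3.0, probability close to 1
p_ac = edge_probability(z["a"], z["c"])  # dot product -4.0, probability close to 0
```

In a full model, the encoder would be trained so that these scores match the observed edges, which is what makes the embeddings useful for link prediction.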

Key Terms

Latent Representation

Autoencoders learn compact latent representations by encoding input data into lower-dimensional vectors and reconstructing it from them, and are primarily suited to grid-like or vectorized data. Graph Neural Networks (GNNs) use the structural information in graph-structured data to generate latent embeddings that capture node relationships and graph topology.
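As a minimal sketch of the encode-then-reconstruct idea, the example below trains a tiny linear autoencoder with a single latent dimension. Everything here is an assumption made for illustration: the tied-weight design (the decoder reuses the encoder weights), the toy data, the learning rate, and the function names. Real autoencoders use nonlinear layers and a deep learning framework.

```python
def encode(w, x):
    """Encoder: project a 2-d input onto a single latent coordinate."""
    return w[0] * x[0] + w[1] * x[1]

def decode(w, z):
    """Decoder (tied weights): map the latent coordinate back to 2-d."""
    return [z * w[0], z * w[1]]

def mean_loss(w, data):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x in data:
        x_hat = decode(w, encode(w, x))
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)

def train(w, data, lr=0.02, steps=500):
    """Plain gradient descent on the mean reconstruction error."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0, 0.0]
        for x in data:
            z = encode(w, x)
            x_hat = decode(w, z)
            r = [x_hat[0] - x[0], x_hat[1] - x[1]]  # reconstruction residual
            rw = r[0] * w[0] + r[1] * w[1]
            for j in range(2):
                # Gradient of ||x_hat - x||^2 with respect to w[j].
                grad[j] += 2.0 * (z * r[j] + rw * x[j]) / len(data)
        w = [w[j] - lr * grad[j] for j in range(2)]
    return w

# Hypothetical data: 2-d points on one line, so one latent dimension is enough.
data = [[0.6 * t, 0.8 * t] for t in (-2.0, -1.0, 1.0, 2.0)]
w0 = [1.0, 0.0]
w = train(w0, data)
loss_before = mean_loss(w0, data)
loss_after = mean_loss(w, data)
```

Because the toy data has only one degree of freedom, the single latent value `encode(w, x)` is enough to reconstruct each point once training has aligned `w` with the data direction.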

Node Embedding

Autoencoders can produce node embeddings when adapted to graphs (graph autoencoders): they are trained without labels to reconstruct the graph, so the latent representations preserve node features and graph structure. Graph Neural Networks (GNNs) use message passing to aggregate information from each node's neighbors, producing embeddings that carry structural and semantic context for downstream tasks.
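The message-passing idea can be sketched in a few lines. The example below performs one round of mean aggregation over each node's own features and those of its neighbors. It is a simplified illustration with no learned weights or nonlinearity, which architectures such as GCN or GraphSAGE add on top of the aggregation step. The toy graph and the name `mean_aggregate` are assumptions for illustration.

```python
def mean_aggregate(features, neighbors):
    """One round of mean-aggregation message passing (no learned weights)."""
    updated = {}
    for node, own in features.items():
        # Collect the node's own vector plus the vectors of its neighbors.
        messages = [own] + [features[n] for n in neighbors[node]]
        dim = len(own)
        updated[node] = [
            sum(m[i] for m in messages) / len(messages) for i in range(dim)
        ]
    return updated

# Hypothetical toy graph: a path a - b - c with 2-d node features.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}

embeddings = mean_aggregate(features, neighbors)
# embeddings["a"] is the average of a's and b's features: [0.5, 0.5]
```

Applying the function repeatedly lets information travel further: after two rounds, the embedding of `a` also reflects the features of `c`, which is two hops away.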

Reconstruction

Autoencoders are trained to reconstruct their input from a learned latent representation, which makes them effective for dimensionality reduction and anomaly detection: inputs that reconstruct poorly are likely to be unusual. Graph neural networks (GNNs) are not reconstructive by themselves; when used as the encoder of a graph autoencoder, they support reconstruction of graph structure and node features from embeddings that capture relational dependencies, which is useful for tasks like link prediction and node classification.
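The two reconstruction targets can be written side by side. The notation below is an assumption introduced for illustration: $f$ is the encoder, $g$ the decoder, $X$ the node feature matrix, $A$ the adjacency matrix, and $\sigma$ the logistic sigmoid. The graph case assumes the common setup of a GNN encoder with an inner-product decoder.

$$
\mathcal{L}_{\text{AE}}(x) = \lVert x - g(f(x)) \rVert_2^2
$$

$$
Z = \mathrm{GNN}(X, A), \qquad \hat{A} = \sigma\left(Z Z^\top\right)
$$

In the first case the model is penalized for differences between the input $x$ and its reconstruction. In the second, the reconstructed adjacency $\hat{A}$ is compared with the observed $A$, so the embeddings $Z$ are pushed to encode which nodes are connected.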


dowidth.com
