Diffusion models and Variational Autoencoders (VAEs) are prominent generative models in the field of artificial intelligence used for creating high-quality synthetic data. Diffusion models excel in gradually refining noisy inputs to produce realistic images, while VAEs leverage probabilistic encoding and decoding to generate diverse outputs with efficient latent space representation. Explore the key differences and applications of these models to enhance your understanding of advanced generative techniques.

Why it is important

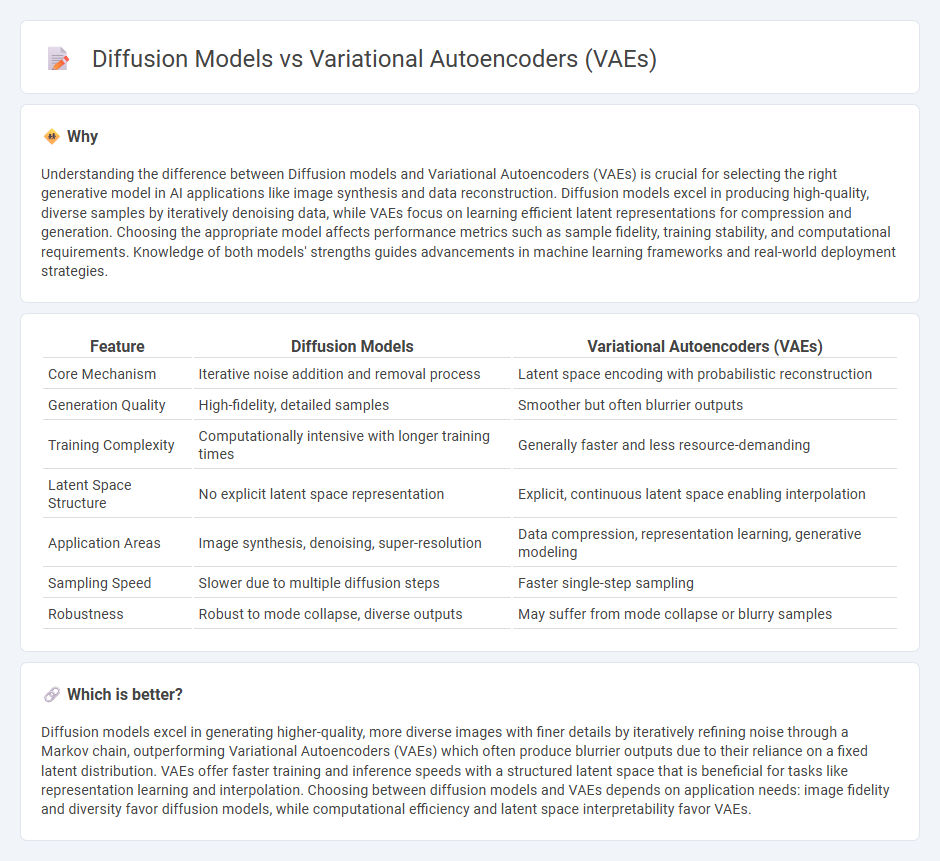

Understanding the difference between Diffusion models and Variational Autoencoders (VAEs) is crucial for selecting the right generative model in AI applications like image synthesis and data reconstruction. Diffusion models excel in producing high-quality, diverse samples by iteratively denoising data, while VAEs focus on learning efficient latent representations for compression and generation. Choosing the appropriate model affects performance metrics such as sample fidelity, training stability, and computational requirements. Knowledge of both models' strengths guides advancements in machine learning frameworks and real-world deployment strategies.

Comparison Table

| Feature | Diffusion Models | Variational Autoencoders (VAEs) |

|---|---|---|

| Core Mechanism | Iterative noise addition and removal process | Latent space encoding with probabilistic reconstruction |

| Generation Quality | High-fidelity, detailed samples | Smoother but often blurrier outputs |

| Training Complexity | Computationally intensive with longer training times | Generally faster and less resource-demanding |

| Latent Space Structure | No explicit latent space representation | Explicit, continuous latent space enabling interpolation |

| Application Areas | Image synthesis, denoising, super-resolution | Data compression, representation learning, generative modeling |

| Sampling Speed | Slower due to multiple diffusion steps | Faster single-step sampling |

| Robustness | Robust to mode collapse, diverse outputs | May suffer from mode collapse or blurry samples |

Which is better?

Diffusion models excel in generating higher-quality, more diverse images with finer details by iteratively refining noise through a Markov chain, outperforming Variational Autoencoders (VAEs) which often produce blurrier outputs due to their reliance on a fixed latent distribution. VAEs offer faster training and inference speeds with a structured latent space that is beneficial for tasks like representation learning and interpolation. Choosing between diffusion models and VAEs depends on application needs: image fidelity and diversity favor diffusion models, while computational efficiency and latent space interpretability favor VAEs.

Connection

Diffusion models and Variational Autoencoders (VAEs) both serve as generative frameworks in machine learning, utilizing latent variable representations to model complex data distributions. VAEs rely on encoding input data into a latent space and decoding it back to generate samples, whereas diffusion models iteratively refine data through a series of noising and denoising steps to produce high-fidelity samples. Both approaches leverage probabilistic frameworks to capture data variability, making them foundational for advancements in image synthesis and representation learning.

Key Terms

Latent Space

Variational Autoencoders (VAEs) encode data into a continuous latent space defined by probabilistic distributions, enabling efficient sampling and interpolation but sometimes producing blurrier outputs. Diffusion models operate through iterative denoising processes without explicit latent representations, allowing them to generate high-fidelity samples at the cost of increased computational resources. Explore the nuances of latent spaces and generation mechanisms to understand the strengths of VAEs and diffusion models.

Probabilistic Sampling

Variational Autoencoders (VAEs) generate data by encoding inputs into a latent space and sampling from a learned probability distribution, optimizing the evidence lower bound to balance reconstruction accuracy and latent regularization. Diffusion models iteratively transform noise into data by reversing a gradual noising process through a learned denoising probabilistic model, enabling high-quality sample generation from complex distributions. Explore deeper insights into how these probabilistic sampling frameworks shape generative modeling by diving into latest research on VAEs and diffusion models.

Image Generation

Variational Autoencoders (VAEs) utilize probabilistic encoding and decoding to generate images by learning latent representations, but often struggle with producing sharp, high-fidelity outputs. Diffusion models iteratively refine noise through a gradual denoising process, achieving superior image quality and diversity by modeling complex data distributions more effectively. Explore further to understand how these differences impact practical applications in image synthesis.

Source and External Links

Variational Autoencoders (VAEs) - Dataforest - Variational Autoencoders are generative models that learn probabilistic latent space representations to generate new data points similar to the training data, using an encoder-decoder architecture where inputs map to distributions rather than fixed points, enabling controlled and diverse outputs.

What is a Variational Autoencoder? - IBM - VAEs use deep neural networks to encode inputs into continuous latent probability distributions and decode samples from these to generate data variations, with the key innovation of the reparameterization trick enabling efficient training of these probabilistic models.

What is Variational Autoencoders (VAEs)? - Kanerika - VAEs encode input data into a lower-dimensional latent space with added randomness and decode back to reconstruct inputs, allowing new data generation, useful in image synthesis, anomaly detection, and data compression by learning efficient unsupervised representations.

dowidth.com

dowidth.com