Federated learning trains a shared model across multiple devices without moving their raw data to a central server, which limits exposure of sensitive data. Transfer learning adapts a model pre-trained on one domain or task to a related one, reducing the amount of labeled data needed. The sections below compare the two approaches and where each applies.

Why it is important

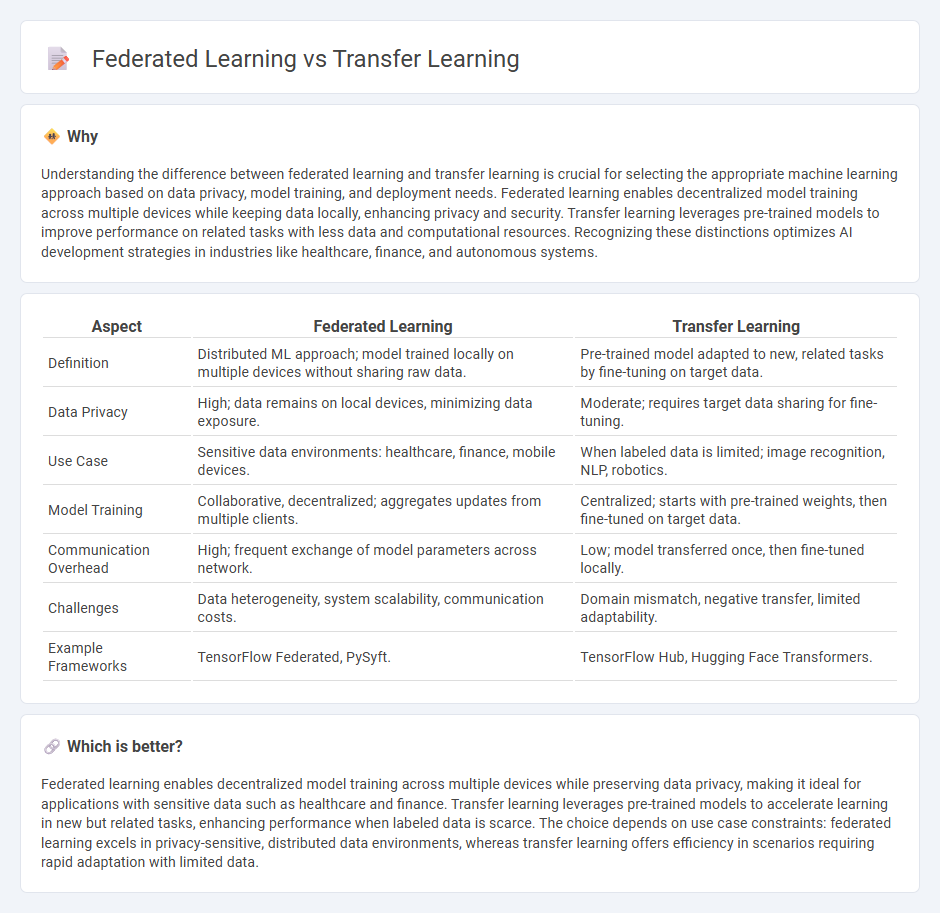

Understanding the difference between federated learning and transfer learning helps in selecting a machine learning approach that fits a project's data privacy, training, and deployment constraints. Federated learning trains a model across multiple devices while keeping data local. Transfer learning reuses pre-trained models to improve performance on related tasks with less data and compute. The distinction matters in industries such as healthcare, finance, and autonomous systems.

Comparison Table

| Aspect | Federated Learning | Transfer Learning |
|---|---|---|
| Definition | Distributed ML approach; model trained locally on multiple devices without sharing raw data. | Pre-trained model adapted to new, related tasks by fine-tuning on target data. |
| Data Privacy | High; data remains on local devices, minimizing data exposure. | Moderate; target data is usually gathered wherever fine-tuning runs. |
| Use Case | Sensitive data environments: healthcare, finance, mobile devices. | When labeled data is limited; image recognition, NLP, robotics. |
| Model Training | Collaborative, decentralized; aggregates updates from multiple clients. | Centralized; starts with pre-trained weights, then fine-tuned on target data. |
| Communication Overhead | High; frequent exchange of model parameters across the network. | Low; model transferred once, then fine-tuned locally. |
| Challenges | Data heterogeneity, system scalability, communication costs. | Domain mismatch, negative transfer, limited adaptability. |
| Example Frameworks | TensorFlow Federated, PySyft. | TensorFlow Hub, Hugging Face Transformers. |
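
To make the Communication Overhead row concrete, here is a rough back-of-the-envelope calculation. It assumes every participating client downloads the full model and uploads a full-size update each round, with no compression. The function names and all numbers are hypothetical and chosen only for illustration.

```python
def federated_traffic_bytes(num_params, clients_per_round, rounds, bytes_per_param=4):
    """Total bytes exchanged if each selected client downloads the global
    model and uploads a full-size update once per round (no compression)."""
    model_bytes = num_params * bytes_per_param
    return 2 * model_bytes * clients_per_round * rounds


def transfer_learning_traffic_bytes(num_params, bytes_per_param=4):
    """Bytes needed to download the pre-trained model once."""
    return num_params * bytes_per_param


# Hypothetical setup: 1M parameters stored as 32-bit floats.
params = 1_000_000
fl = federated_traffic_bytes(params, clients_per_round=10, rounds=100)
tl = transfer_learning_traffic_bytes(params)

print(fl / 1e9, "GB exchanged during federated training")  # 8.0 GB
print(tl / 1e6, "MB for a one-time model download")        # 4.0 MB
```

Under these assumptions, federated traffic grows with both the number of rounds and the number of clients, while the transfer learning cost is a single download.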

Which is better?

Federated learning enables decentralized model training across multiple devices while preserving data privacy, making it ideal for applications with sensitive data such as healthcare and finance. Transfer learning leverages pre-trained models to accelerate learning in new but related tasks, enhancing performance when labeled data is scarce. The choice depends on use case constraints: federated learning excels in privacy-sensitive, distributed data environments, whereas transfer learning offers efficiency in scenarios requiring rapid adaptation with limited data.

Connection

Federated learning and transfer learning both let a model benefit from knowledge beyond what a single dataset provides, but they address different constraints. Federated learning lets multiple devices collaboratively train a model without sharing raw data, while transfer learning applies a pre-trained model to a new, related task to speed up adaptation. Only federated learning distributes training across decentralized data sources. The two can also be combined, for example by using a pre-trained model as the starting point for federated training.

Key Terms

Model Reuse (Transfer Learning)

Transfer learning enables model reuse: a model pre-trained on a large dataset is adapted to a new, related task, which improves performance and reduces training time, particularly when labeled data is scarce. Federated learning, in contrast, is about where training happens. It trains across multiple devices while keeping data local, and does not by itself involve reusing a pre-trained model.
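
One common form of model reuse is to freeze the pre-trained part and train only a small new "head" on the target task. The sketch below illustrates that idea in plain Python. The `pretrained_features` function is a hypothetical stand-in for the frozen layers of a real pre-trained network, and the twelve-point dataset is made up for the example.

```python
import math


def pretrained_features(x):
    """Hypothetical frozen feature extractor (stand-in for pre-trained layers).
    It is never updated during training on the target task."""
    return [x, x * x]


def train_head(samples, lr=0.5, epochs=500):
    """Fit a logistic-regression head on top of the frozen features.
    Only the head weights and bias change."""
    head = [0.0, 0.0]
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad = [0.0, 0.0]
        grad_bias = 0.0
        for x, label in samples:
            feats = pretrained_features(x)
            z = sum(w * f for w, f in zip(head, feats)) + bias
            pred = 1.0 / (1.0 + math.exp(-z))
            err = pred - label
            for i, f in enumerate(feats):
                grad[i] += err * f / n
            grad_bias += err / n
        head = [w - lr * g for w, g in zip(head, grad)]
        bias -= lr * grad_bias
    return head, bias


def predict(head, bias, x):
    z = sum(w * f for w, f in zip(head, pretrained_features(x))) + bias
    return 1 if z > 0 else 0


# Small labeled target set: the label is 1 when |x| > 1, else 0.
xs = [-2.0, -1.6, -1.3, -0.7, -0.4, -0.1, 0.2, 0.5, 0.8, 1.2, 1.5, 1.9]
target_data = [(x, 1 if abs(x) > 1 else 0) for x in xs]

head, bias = train_head(target_data)
print(predict(head, bias, 0.0), predict(head, bias, 2.5))
```

The target task is not linearly separable in `x` alone, but it is in the reused features, which is why a handful of labeled examples is enough to train the head here.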

Data Privacy (Federated Learning)

Federated learning enhances data privacy by training models directly on decentralized devices, so raw data stays in the local environment and only model updates are sent out. Transfer learning, by contrast, typically fine-tunes on target data gathered in one place. Keeping data local reduces the risk of data breaches and can help with compliance with privacy regulations such as GDPR and HIPAA, although model updates can still reveal some information about the data behind them, so federated learning alone does not guarantee compliance.
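
As a minimal illustration of what leaves the device, the sketch below builds the message a client might send to the server. The `ClientUpdate` schema, the `heart_rate` field, and the one-parameter model (a running estimate of a mean) are all assumptions made for this example, not part of any specific framework.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ClientUpdate:
    """The only message the client sends (hypothetical schema)."""
    weight_delta: list  # change in model parameters after local training
    num_samples: int    # number of local records that contributed


def build_update(private_records, global_mean, lr=0.5):
    """Take one gradient step on a single-parameter model that estimates
    the mean of a private numeric field. The records are read locally and
    are not included in the returned message."""
    values = [r["heart_rate"] for r in private_records]
    local_mean = sum(values) / len(values)
    # Gradient of the mean of 0.5 * (global_mean - v)^2 over local values v.
    grad = global_mean - local_mean
    return ClientUpdate(weight_delta=[-lr * grad], num_samples=len(values))


# Made-up local records that stay on the device.
records = [
    {"patient": "A", "heart_rate": 60.0},
    {"patient": "B", "heart_rate": 80.0},
]

payload = asdict(build_update(records, global_mean=0.0))
print(payload)  # {'weight_delta': [35.0], 'num_samples': 2}
```

The payload carries a parameter change and a sample count, with no patient identifiers or individual readings.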

Decentralized Training (Federated Learning)

Decentralized training in federated learning lets multiple edge devices collaboratively train a shared model without exchanging raw data. Each device trains on its own data and sends model updates to be aggregated into the shared model. This avoids moving raw data but requires repeated exchange of model parameters, so communication cost is one of its main challenges. Transfer learning, in contrast, adapts a pre-trained model to a new task in one place, typically on centrally held target data.
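
The train-locally-then-aggregate loop can be sketched with federated averaging, where the server averages client weights in proportion to each client's sample count. This is a simplified, dependency-free sketch, not the API of any framework. The three clients, their synthetic data drawn from `y = 3x + 1`, and all hyperparameters are assumptions for illustration.

```python
import random


def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of gradient descent on one client's private data.
    Model: y = w * x + b. Only the updated weights are returned."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def make_client_data(rng, n, x_low, x_high):
    """Synthetic private dataset from y = 3x + 1 with small noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(x_low, x_high)
        data.append((x, 3 * x + 1 + rng.gauss(0, 0.05)))
    return data


def run_federated_training(rounds=200, seed=0):
    rng = random.Random(seed)
    # Each client sees a different slice of the input range (heterogeneous data).
    clients = [
        make_client_data(rng, 20, -1.0, 0.0),
        make_client_data(rng, 50, 0.0, 1.0),
        make_client_data(rng, 30, -1.0, 1.0),
    ]
    sizes = [len(d) for d in clients]
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    w, b = run_federated_training()
    print(f"learned w={w:.2f}, b={b:.2f}")
```

The server only ever sees weight vectors and sample counts. Real deployments add client sampling, secure aggregation, and handling for dropped clients, none of which is shown here.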
