Retrieval-augmented generation (RAG) combines pre-trained language models with external knowledge sources, retrieving relevant documents during generation to improve response accuracy. Transfer learning reuses the knowledge in large pre-trained models, adapting them to specific tasks through fine-tuning, which often requires less labeled data than training from scratch. This article compares the two approaches, where each applies, and how they work together in modern AI systems.

Why it is important

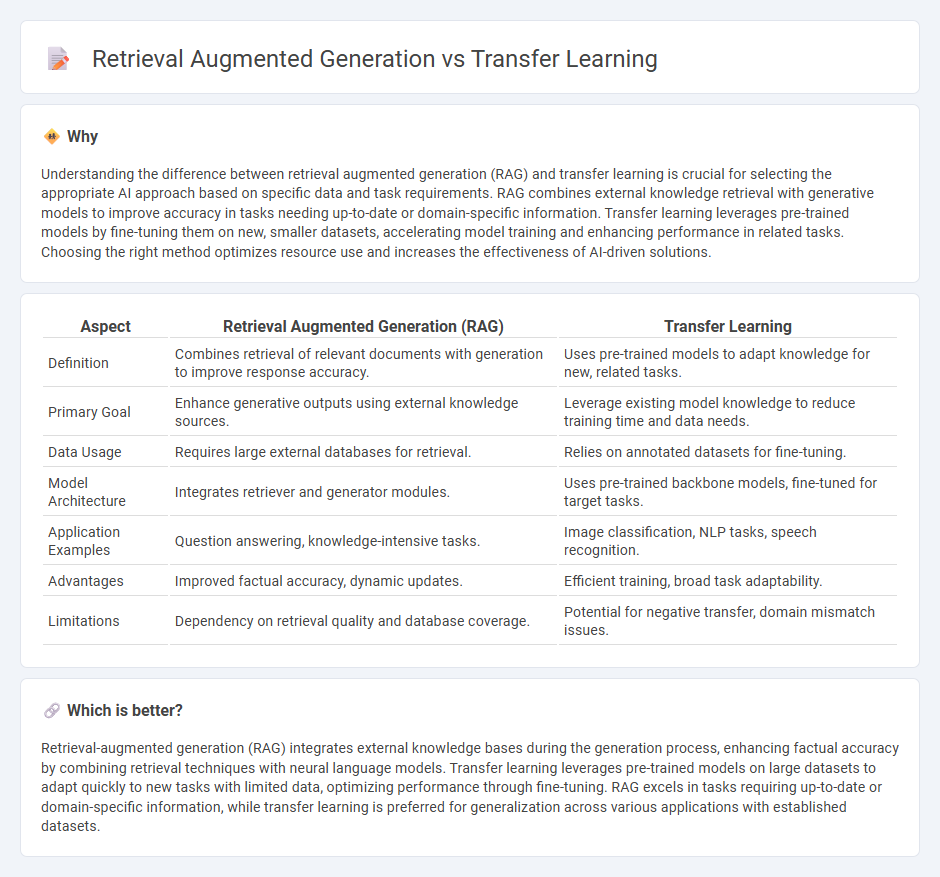

Understanding the difference between retrieval-augmented generation (RAG) and transfer learning is crucial for selecting the appropriate AI approach for a given set of data and task requirements. RAG pairs external knowledge retrieval with a generative model to improve accuracy in tasks that need up-to-date or domain-specific information. Transfer learning fine-tunes a pre-trained model on a new, smaller dataset, which shortens training and improves performance on related tasks. Choosing the right method makes better use of resources and increases the effectiveness of AI-driven solutions.
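The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the bag-of-words cosine scoring stands in for the learned embeddings a real retriever would typically use, the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Assemble what a generator would receive: retrieved context plus the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical external knowledge source.
docs = [
    "The return window for online orders is 30 days.",
    "Gift cards cannot be refunded.",
    "Our office is closed on public holidays.",
]
question = "How many days is the return window?"
prompt = build_prompt(question, docs, k=1)
print(prompt)
```

The generator itself is unchanged here; only the prompt it receives depends on the retrieved documents.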

Comparison Table

| Aspect | Retrieval Augmented Generation (RAG) | Transfer Learning |
|---|---|---|
| Definition | Combines retrieval of relevant documents with generation to improve response accuracy. | Uses pre-trained models to adapt knowledge for new, related tasks. |
| Primary Goal | Enhance generative outputs using external knowledge sources. | Leverage existing model knowledge to reduce training time and data needs. |
| Data Usage | Requires large external databases for retrieval. | Relies on annotated datasets for fine-tuning. |
| Model Architecture | Integrates retriever and generator modules. | Uses pre-trained backbone models, fine-tuned for target tasks. |
| Application Examples | Question answering, knowledge-intensive tasks. | Image classification, NLP tasks, speech recognition. |
| Advantages | Improved factual accuracy, dynamic updates. | Efficient training, broad task adaptability. |
| Limitations | Dependency on retrieval quality and database coverage. | Potential for negative transfer, domain mismatch issues. |

Which is better?

Neither approach is better in general; the choice depends on the task. Retrieval-augmented generation (RAG) consults external knowledge bases during generation, improving factual accuracy by combining retrieval with a neural language model. Transfer learning takes a model pre-trained on a large dataset and fine-tunes it, so it adapts quickly to a new task with limited data. RAG excels in tasks requiring up-to-date or domain-specific information, while transfer learning is preferred when a labeled dataset for the target task already exists and the goal is to generalize across related applications.

Connection

The two approaches are complementary. Retrieval-augmented generation (RAG) integrates external knowledge bases during text generation, improving accuracy and relevance. Transfer learning supplies the models RAG is built on: the retriever and generator are typically models pre-trained on large datasets, which can then be adapted to a specific task with limited labeled data. Together, they combine knowledge retrieval with learned representations for robust, context-aware AI applications.

Key Terms

Pre-trained Models

Transfer learning fine-tunes pre-trained models on specific tasks, allowing faster adaptation with less data while retaining knowledge learned from vast datasets. Retrieval-augmented generation (RAG) combines pre-trained language models with external retrieval systems, improving output accuracy by incorporating relevant, up-to-date information during generation.

Knowledge Base

In transfer learning, domain knowledge ends up encoded in the model's weights: a pre-trained model is fine-tuned on domain data, so changing what the model knows means training it again. Retrieval-augmented generation (RAG) instead keeps knowledge in an external knowledge base and retrieves relevant content from it during generation, so the knowledge base can be updated without retraining the model.
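As a rough illustration of why an external knowledge base allows dynamic updates, the sketch below adds a document to a toy store and shows the retrieval result changing with no model training involved. The `KnowledgeBase` class, its word-overlap scoring, and the sample documents are assumptions made for this example; real systems typically use a vector index.

```python
import re


def words(text):
    """Lowercased set of alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Hypothetical in-memory document store used only for illustration."""

    def __init__(self):
        self.documents = []

    def add(self, text):
        """Add a document; no model weights are touched."""
        self.documents.append(text)

    def search(self, query):
        """Return the document sharing the most words with the query, or None."""
        q = words(query)
        best, best_score = None, 0
        for doc in self.documents:
            score = len(q & words(doc))
            if score > best_score:
                best, best_score = doc, score
        return best


kb = KnowledgeBase()
kb.add("Version 1.0 supports CSV export.")
query = "Does the product support PDF export?"
before = kb.search(query)

# New information arrives: update the knowledge base, not the model.
kb.add("Version 2.0 adds PDF export.")
after = kb.search(query)

print("before update:", before)
print("after update: ", after)
```

With transfer learning alone, incorporating the same new fact would require another round of fine-tuning.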

Fine-tuning

Fine-tuning in transfer learning adapts a pre-trained model's weights to a specific downstream task using labeled data, building on the representations the model has already learned. In retrieval-augmented generation (RAG), fine-tuning is optional: the retriever, the generator, or both can be fine-tuned so that the retrieved passages are more relevant and the generator makes better use of them.
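One common form of transfer learning keeps the pre-trained backbone frozen and trains only a small new head on the labeled target data. The sketch below shows that idea in plain Python under loud assumptions: `FROZEN_WEIGHTS` is a made-up stand-in for weights learned on a large source dataset, the target dataset is a toy, and the head is a simple logistic regression. Full fine-tuning would also update the backbone weights, which this example deliberately does not do.

```python
import math

# Stand-in for a pre-trained backbone (assumed values, never updated below).
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def backbone(x):
    """Frozen feature extractor: a fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Fit a logistic-regression head on the frozen features with SGD."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = backbone(x)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = pred - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias


def predict(x, weights, bias):
    """Probability of class 1 for input x."""
    feats = backbone(x)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)


# Small labeled target dataset: label 1 when the first value exceeds the second.
target_data = [([2.0, 0.0], 1), ([0.0, 2.0], 0), ([1.5, 0.5], 1), ([0.5, 1.5], 0)]
weights, bias = train_head(target_data)

for x, y in target_data:
    print(x, "label:", y, "predicted:", round(predict(x, weights, bias), 3))
```

Only the two head weights and the bias are learned here, which is why four labeled examples are enough for this toy task.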


dowidth.com