Graph Neural Networks excel in processing complex graph-structured data by leveraging node and edge relationships, making them ideal for social networks and molecular analysis. Random Forests utilize ensemble learning with decision trees to handle tabular data effectively, offering robustness against overfitting and ease of interpretation. Discover more about the strengths and applications of these powerful machine learning models.

Why it is important

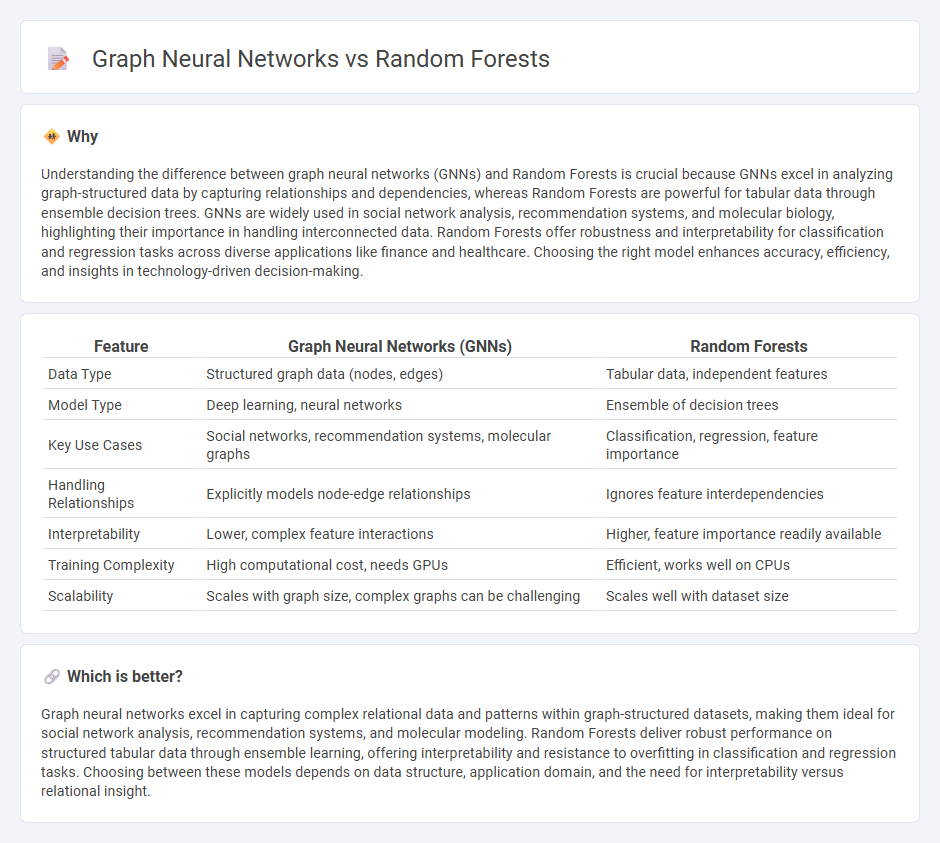

Understanding the difference between graph neural networks (GNNs) and Random Forests is crucial because GNNs excel in analyzing graph-structured data by capturing relationships and dependencies, whereas Random Forests are powerful for tabular data through ensemble decision trees. GNNs are widely used in social network analysis, recommendation systems, and molecular biology, highlighting their importance in handling interconnected data. Random Forests offer robustness and interpretability for classification and regression tasks across diverse applications like finance and healthcare. Choosing the right model enhances accuracy, efficiency, and insights in technology-driven decision-making.

Comparison Table

| Feature | Graph Neural Networks (GNNs) | Random Forests |

|---|---|---|

| Data Type | Structured graph data (nodes, edges) | Tabular data, independent features |

| Model Type | Deep learning, neural networks | Ensemble of decision trees |

| Key Use Cases | Social networks, recommendation systems, molecular graphs | Classification, regression, feature importance |

| Handling Relationships | Explicitly models node-edge relationships | Ignores feature interdependencies |

| Interpretability | Lower, complex feature interactions | Higher, feature importance readily available |

| Training Complexity | High computational cost, needs GPUs | Efficient, works well on CPUs |

| Scalability | Scales with graph size, complex graphs can be challenging | Scales well with dataset size |

Which is better?

Graph neural networks excel in capturing complex relational data and patterns within graph-structured datasets, making them ideal for social network analysis, recommendation systems, and molecular modeling. Random Forests deliver robust performance on structured tabular data through ensemble learning, offering interpretability and resistance to overfitting in classification and regression tasks. Choosing between these models depends on data structure, application domain, and the need for interpretability versus relational insight.

Connection

Graph neural networks leverage graph structures to capture relational data while Random Forests use ensemble learning on decision trees for classification and regression. Both methods enhance predictive analytics by modeling complex patterns; GNNs excel in graph-based data whereas Random Forests are favored for tabular datasets. Integrating graph neural networks with Random Forest algorithms can improve feature extraction and increase model interpretability in heterogeneous data environments.

Key Terms

Ensemble Learning

Random Forests leverage ensemble learning by combining multiple decision trees to improve prediction accuracy and control overfitting through bagging and feature randomness. Graph Neural Networks (GNNs) utilize ensemble methods less traditionally but can incorporate ensemble strategies by aggregating multiple GNN models to capture complex relational structures in graph data. Explore how ensemble learning techniques differ and complement Random Forests and GNNs for enhanced model performance in various applications.

Node Embeddings

Random Forests excel in tabular data classification by aggregating decision trees, but they lack inherent capabilities for capturing relational structures in graph data, limiting their effectiveness in node embedding tasks. Graph Neural Networks (GNNs) leverage node features and graph topology, enabling the generation of highly informative node embeddings that reflect both local and global graph properties. Explore advanced techniques in node embeddings to understand how GNNs outperform traditional methods in graph-based learning.

Decision Trees

Random Forests utilize an ensemble of decision trees to enhance classification and regression accuracy by aggregating multiple tree predictions, reducing overfitting inherent in single trees. Graph Neural Networks (GNNs) extend deep learning to graph-structured data, capturing complex node relationships that standard decision trees cannot model directly. Explore further insights on leveraging decision trees within graph-based frameworks for advanced machine learning applications.

Source and External Links

Random Forests - Random forests are ensemble learning methods that combine multiple decision trees, each trained on a random subset of data and features, improving prediction accuracy and robustness to noise over single trees.

Random Forest Classification with Scikit-Learn - In random forest classification, predictions are made by aggregating the votes from many individual decision trees, each built from different random samples of the data and feature set, and the majority class is chosen as the final prediction.

Random forest - Random forests work by growing a multitude of decision trees during training, with classification outputs determined by majority voting and regression outputs by averaging, significantly reducing overfitting compared to single decision trees.

dowidth.com

dowidth.com