Edge intelligence runs AI processing directly on edge devices, enabling real-time data analysis and decision-making without relying on cloud connectivity, which reduces latency and improves privacy. TinyML deploys machine learning models on ultra-low-power devices with limited computational resources, prioritizing efficiency for applications such as sensor networks and wearables.

Why it is important

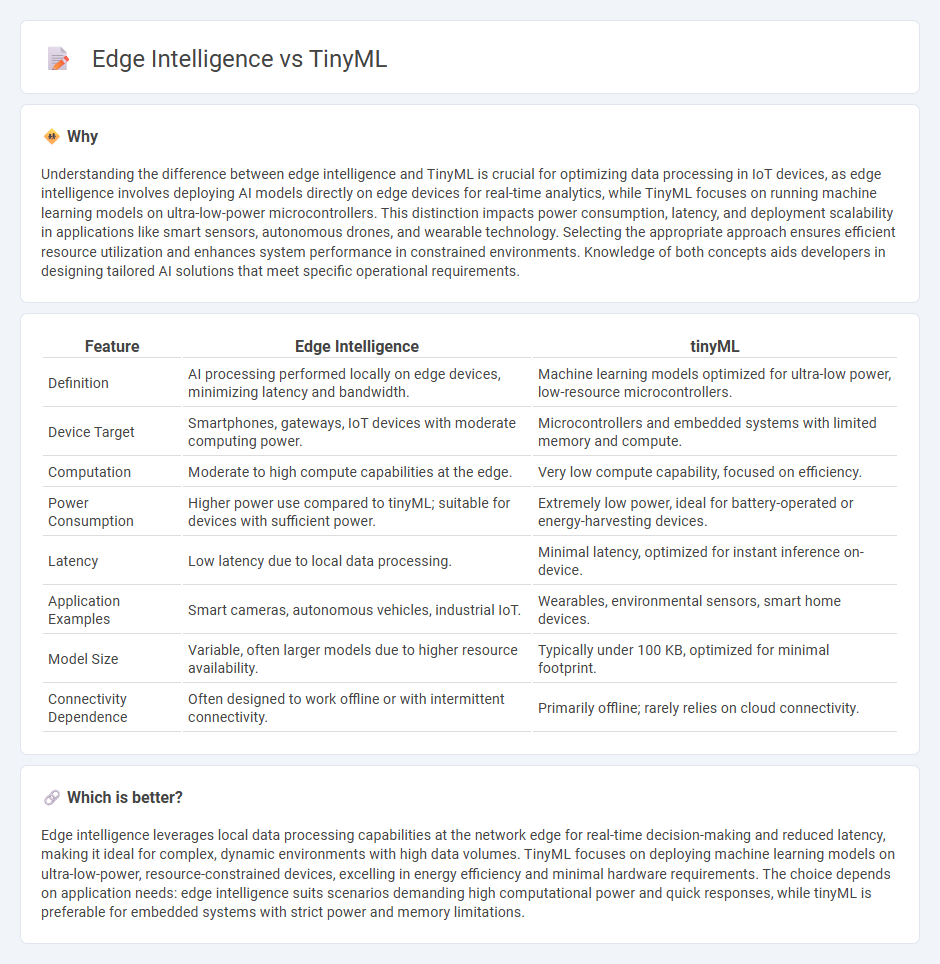

Understanding the difference between edge intelligence and TinyML matters when optimizing data processing in IoT devices. Edge intelligence deploys AI models directly on edge devices for real-time analytics, while TinyML runs machine learning models on ultra-low-power microcontrollers. The distinction affects power consumption, latency, and deployment scalability in applications such as smart sensors, autonomous drones, and wearable technology. Choosing the right approach keeps resource use efficient in constrained environments and lets developers design AI solutions that fit their specific operational requirements.

Comparison Table

| Feature | Edge Intelligence | TinyML |
|---|---|---|
| Definition | AI processing performed locally on edge devices, minimizing latency and bandwidth. | Machine learning models optimized for ultra-low power, low-resource microcontrollers. |
| Device Target | Smartphones, gateways, IoT devices with moderate computing power. | Microcontrollers and embedded systems with limited memory and compute. |
| Computation | Moderate to high compute capabilities at the edge. | Very low compute capability, focused on efficiency. |
| Power Consumption | Higher power use compared to TinyML; suitable for devices with sufficient power. | Extremely low power, ideal for battery-operated or energy-harvesting devices. |
| Latency | Low latency due to local data processing. | Minimal latency, optimized for instant inference on-device. |
| Application Examples | Smart cameras, autonomous vehicles, industrial IoT. | Wearables, environmental sensors, smart home devices. |
| Model Size | Variable, often larger models due to higher resource availability. | Typically under 100 KB, optimized for minimal footprint. |
| Connectivity Dependence | Often designed to work offline or with intermittent connectivity. | Primarily offline; rarely relies on cloud connectivity. |
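
To make the Model Size row concrete, here is a rough sketch of how weight storage scales with parameter count and numeric precision. The 60,000-parameter model is a hypothetical example, the helper names are illustrative, and the estimate covers weights only (not activations, code, or runtime overhead). The 100 KB default mirrors the figure in the table.

```python
def model_footprint_kb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in KiB (weights only)."""
    return num_params * bits_per_weight / 8 / 1024


def fits_budget(num_params: int, bits_per_weight: int, budget_kb: float = 100.0) -> bool:
    """Check whether the weights alone fit within a storage budget."""
    return model_footprint_kb(num_params, bits_per_weight) <= budget_kb


# Hypothetical model with 60,000 parameters.
fp32_kb = model_footprint_kb(60_000, 32)  # 32-bit float weights
int8_kb = model_footprint_kb(60_000, 8)   # 8-bit integer weights

print(f"float32: {fp32_kb:.1f} KiB, fits 100 KB budget: {fits_budget(60_000, 32)}")
print(f"int8:    {int8_kb:.1f} KiB, fits 100 KB budget: {fits_budget(60_000, 8)}")
```

Under these assumptions the same network exceeds the budget at 32-bit precision and fits at 8-bit, which is one reason reduced precision is common on microcontrollers.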

Which is better?

Edge intelligence processes data locally at the network edge for real-time decision-making and reduced latency, which suits complex, dynamic environments with high data volumes. TinyML deploys machine learning models on ultra-low-power, resource-constrained devices and excels in energy efficiency and minimal hardware requirements. Neither is better in general; the choice depends on application needs. Edge intelligence suits scenarios that demand high computational power and quick responses, while TinyML is preferable for embedded systems with strict power and memory limits.

Connection

Edge intelligence runs AI processing directly on devices, minimizing latency and improving data privacy by reducing dependence on cloud computing. TinyML extends this to the smallest hardware by compressing machine learning models so they run efficiently on low-power, resource-constrained edge devices such as sensors and microcontrollers. Together, edge intelligence and TinyML support real-time decision-making in applications ranging from IoT to autonomous systems.

Key Terms

Microcontrollers

TinyML uses machine learning models tailored for ultra-low-power microcontrollers, enabling real-time data processing on resource-constrained devices. Edge intelligence broadens this paradigm by running more complex AI algorithms on heterogeneous hardware at or near the data source, so its latency and privacy benefits are not limited to simple microcontrollers.

On-device processing

TinyML emphasizes ultra-low-power machine learning models optimized for microcontrollers, enabling real-time on-device processing with minimal energy consumption. Edge intelligence extends this concept with more advanced AI capabilities on edge devices, allowing complex data analysis close to the data source for better privacy and lower latency.
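
As a minimal sketch of what on-device processing can look like, the snippet below analyzes sensor readings locally and keeps only the unusual ones for transmission. The trailing-average detector is a stand-in for a real model, and the function name, window size, threshold, and readings are all illustrative assumptions.

```python
def detect_events(readings, window=4, threshold=3.0):
    """Return (index, value) pairs for readings that deviate from the
    mean of the previous `window` readings by more than `threshold`."""
    events = []
    for i in range(window, len(readings)):
        baseline = sum(readings[i - window:i]) / window
        if abs(readings[i] - baseline) > threshold:
            events.append((i, readings[i]))
    return events


# Hypothetical temperature samples collected on the device.
readings = [20.0, 20.1, 19.9, 20.0, 20.2, 27.5, 20.1, 20.0]
events = detect_events(readings)

# Only the flagged readings would need to leave the device.
print(f"{len(events)} of {len(readings)} readings flagged: {events}")
```

Because the decision is made locally, raw data stays on the device and only flagged events need a network link, which is where the latency and privacy benefits described above come from.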

Model optimization

TinyML emphasizes aggressive model optimization to deploy machine learning on low-power, resource-constrained devices, often using quantization and pruning to minimize memory footprint and latency. Edge intelligence combines model optimization with real-time data processing on edge devices, balancing computational power against energy consumption to stay responsive.
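
The two techniques named above can be sketched in plain Python. This is an illustrative, simplified version (per-tensor affine quantization to 8-bit integers and global magnitude pruning), not the procedure of any particular toolkit; the function names and sample weights are assumptions.

```python
def quantize_int8(weights):
    """Affine quantization of floats to integers in [-128, 127].
    Returns (quantized values, scale, zero_point)."""
    # Extend the range to include 0.0 so zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255
    if scale == 0.0:
        scale = 1.0  # all weights are zero; any scale works
    zero_point = round(-128 - w_min / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map 8-bit integers back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
quantized, scale, zero_point = quantize_int8(weights)
restored = dequantize(quantized, scale, zero_point)
pruned = prune_by_magnitude([0.9, -0.05, 0.4, 0.01], sparsity=0.5)

print("quantized:", quantized)
print("restored: ", [round(w, 3) for w in restored])
print("pruned:   ", pruned)
```

Quantization stores each weight in one byte instead of four at the cost of a small rounding error, while pruning produces zeros that can be skipped or compressed. Real deployments usually apply both with framework support and re-check accuracy afterwards.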

dowidth.com
