Neural Radiance Fields (NeRF) represent a 3D scene as a neural network that maps a position and viewing direction to color and density, and they synthesize novel views of complex scenes by rendering that learned field with fine detail. Classical volumetric rendering visualizes explicit 3D data such as voxel or density grids, and its computational cost grows with the resolution of the volume. The two are closely related rather than opposed: NeRF relies on volumetric rendering to turn its learned field into images. This article compares how each approach represents and renders 3D scenes.

Why it is important

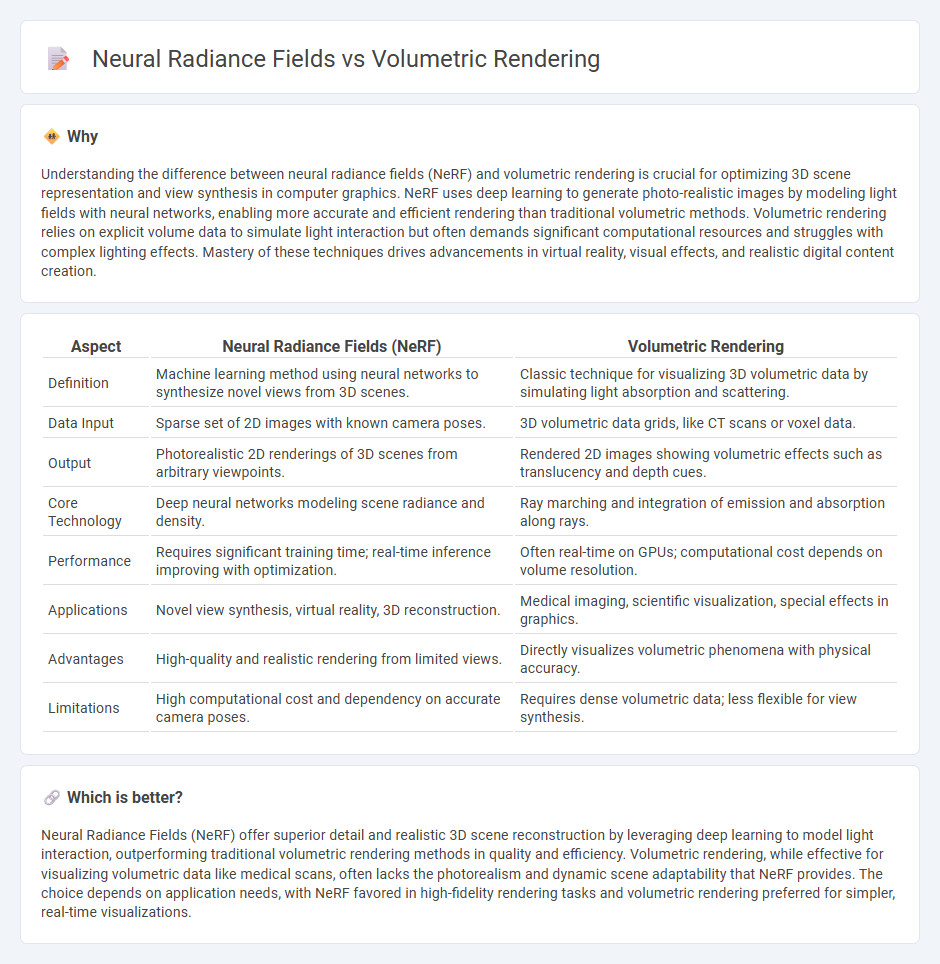

Understanding the difference between neural radiance fields (NeRF) and volumetric rendering is crucial for choosing a 3D scene representation and view synthesis method in computer graphics. NeRF uses deep learning to generate photorealistic images by modeling a scene's radiance and density with a neural network, which lets it reconstruct novel views from a sparse set of photographs with known camera poses. Classical volumetric rendering relies on explicit volume data to simulate light interaction; its memory and compute costs grow with volume resolution, and effects beyond simple emission and absorption, such as multiple scattering, add considerable cost. Mastery of these techniques drives advancements in virtual reality, visual effects, and realistic digital content creation.

Comparison Table

| Aspect | Neural Radiance Fields (NeRF) | Volumetric Rendering |
|---|---|---|
| Definition | Machine learning method using neural networks to synthesize novel views from 3D scenes. | Classic technique for visualizing 3D volumetric data by simulating light absorption and scattering. |
| Data Input | Sparse set of 2D images with known camera poses. | 3D volumetric data grids, like CT scans or voxel data. |
| Output | Photorealistic 2D renderings of 3D scenes from arbitrary viewpoints. | Rendered 2D images showing volumetric effects such as translucency and depth cues. |
| Core Technology | Deep neural networks modeling scene radiance and density. | Ray marching and integration of emission and absorption along rays. |
| Performance | Requires significant training time; real-time inference improving with optimization. | Often real-time on GPUs; computational cost depends on volume resolution. |
| Applications | Novel view synthesis, virtual reality, 3D reconstruction. | Medical imaging, scientific visualization, special effects in graphics. |
| Advantages | High-quality and realistic rendering from limited views. | Directly visualizes volumetric phenomena with physical accuracy. |
| Limitations | High computational cost and dependency on accurate camera poses. | Requires dense volumetric data; less flexible for view synthesis. |

Which is better?

Neither approach is better in every respect. Neural Radiance Fields (NeRF) offer detailed, photorealistic reconstruction of 3D scenes from photographs by using deep learning to model radiance and density, but they pay for it with long training times and heavy per-view computation. Classical volumetric rendering is the natural fit when dense volumetric data already exists, such as medical scans or simulation output, and it often runs in real time on GPUs, but it cannot by itself synthesize new views from images. The choice depends on application needs: NeRF is favored for high-fidelity view synthesis from images, and volumetric rendering is preferred for direct, interactive visualization of existing volume data.

Connection

Neural Radiance Fields (NeRF) employ volumetric rendering techniques to synthesize novel views of complex 3D scenes by modeling the volumetric density and color emitted along camera rays. Volumetric rendering enables NeRF to integrate radiance and opacity over continuous spatial domains, producing photorealistic images from sparse input views. This synergy between neural networks and volumetric integration advances 3D reconstruction and view synthesis in computer vision.
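The integration of radiance and opacity along a camera ray can be written compactly. The formulas below are the standard emission-absorption model as commonly stated for NeRF; the symbols are introduced here for illustration and do not appear in the text above. A ray is $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$ between near and far bounds $t_n$ and $t_f$, $\sigma$ is the density, $\mathbf{c}$ is the emitted color, and $T$ is the transmittance, the fraction of light that survives up to a given point. The second line is the discrete approximation over $N$ samples with spacing $\delta_i$.

```latex
C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt,
\qquad
T(t) = \exp\!\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)

\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - e^{-\sigma_i \delta_i}\right)\mathbf{c}_i,
\qquad
T_i = \exp\!\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)
```

The same sum applies whether $\sigma_i$ and $\mathbf{c}_i$ come from a voxel grid or from a neural network, which is why the two approaches share a renderer.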

Key Terms

Ray Marching

Volumetric rendering simulates light scattering and absorption through 3D media by sampling along rays. Ray marching steps through the volume data at intervals and accumulates color and opacity at each sample, which is crucial for realistic effects like fog or smoke. NeRF encodes a complex 3D scene as a continuous volumetric radiance field, and ray marching queries the neural network at sampled points along each ray to predict color and density, which are then composited into a pixel to produce photorealistic novel views.
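The stepping and accumulation described here can be sketched in a few lines of NumPy. This is a minimal illustration, not a production renderer: the function name `ray_march`, the nearest-voxel lookup, the fixed step size, and the toy red slab are all assumptions made for the example.

```python
import numpy as np

def ray_march(density, color, origin, direction, step=0.5, n_steps=64):
    """Front-to-back emission-absorption compositing through a voxel grid.

    density: (X, Y, Z) array of extinction coefficients per unit length
    color:   (X, Y, Z, 3) array of RGB emission
    """
    direction = direction / np.linalg.norm(direction)
    shape = np.array(density.shape)
    rgb = np.zeros(3)
    transmittance = 1.0  # fraction of light still reaching the camera
    for i in range(n_steps):
        # Sample at the midpoint of the i-th segment along the ray.
        p = origin + (i + 0.5) * step * direction
        idx = np.floor(p).astype(int)  # nearest-voxel lookup (assumption)
        if np.any(idx < 0) or np.any(idx >= shape):
            continue  # sample lies outside the volume
        sigma = density[tuple(idx)]
        alpha = 1.0 - np.exp(-sigma * step)  # opacity of this segment
        rgb += transmittance * alpha * color[tuple(idx)]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # early ray termination: nothing behind is visible
    return rgb, 1.0 - transmittance

# Toy volume (hypothetical data): a uniform red slab filling x in [4, 12).
density = np.zeros((16, 16, 16))
density[4:12] = 0.5
color = np.zeros((16, 16, 16, 3))
color[..., 0] = 1.0

rgb, opacity = ray_march(density, color,
                         origin=np.array([0.0, 8.0, 8.0]),
                         direction=np.array([1.0, 0.0, 0.0]))
```

In a NeRF-style renderer the two array lookups would be replaced by a network query at `p`; the compositing loop itself stays the same.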

Density Function

Classical volumetric rendering uses explicitly defined density functions, typically sampled on a grid or given by a procedural model, together with explicit models of material properties, to simulate light absorption and scattering within a volume. Neural Radiance Fields (NeRFs) encode the density function implicitly in the weights of a neural network, which yields a continuous scene representation that predicts volumetric density and radiance at any 3D coordinate. This difference in how density is stored shapes both rendering accuracy and efficiency: a grid is fast to look up but limited by its resolution, while a network can be evaluated anywhere but costs one inference per query.
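The contrast between an explicit and an implicit density function can be illustrated with a small sketch. Everything here is assumed for the example: `grid_density` uses trilinear interpolation as one common way to read a voxel lattice between samples, and `implicit_density` is a closed-form Gaussian blob standing in for a trained network, which this sketch does not include.

```python
import numpy as np

def grid_density(grid, p):
    """Explicit density: trilinear interpolation of values stored on a voxel lattice."""
    # Keep the query inside the lattice so the upper neighbors exist.
    p = np.clip(p, 0.0, np.array(grid.shape) - 1.000001)
    i0 = np.floor(p).astype(int)
    f = p - i0  # fractional position inside the cell
    value = 0.0
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((f[0] if dx else 1.0 - f[0]) *
                     (f[1] if dy else 1.0 - f[1]) *
                     (f[2] if dz else 1.0 - f[2]))
                value += w * grid[i0[0] + dx, i0[1] + dy, i0[2] + dz]
    return value

def implicit_density(p, center=np.array([4.0, 4.0, 4.0]), radius=2.0):
    """Implicit density: a continuous function of position (stand-in for a network)."""
    return float(np.exp(-np.sum((p - center) ** 2) / (2.0 * radius ** 2)))

# Build the explicit version by sampling the same blob on a coarse 9x9x9 lattice.
axis = np.arange(9, dtype=float)
X, Y, Z = np.meshgrid(axis, axis, axis, indexing="ij")
grid = np.exp(-((X - 4) ** 2 + (Y - 4) ** 2 + (Z - 4) ** 2) / (2.0 * 2.0 ** 2))

p = np.array([4.5, 4.5, 4.5])  # off-lattice query point
explicit = grid_density(grid, p)   # interpolated from stored samples
implicit = implicit_density(p)     # evaluated directly at p
```

At lattice points the two agree exactly; between them the interpolated value drifts from the underlying function, and the gap shrinks only as the grid gets finer and larger in memory.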

Neural Network Inference

Neural Radiance Fields (NeRF) use neural network inference to model volumetric density and color at continuous spatial coordinates, instead of storing them in the explicit voxel grids that classical volumetric rendering relies on. Inference queries the network with a spatial position and a viewing direction and returns a color and a density. The directional input enables view-dependent effects such as specular highlights, and the compact network weights typically need far less memory than a dense voxel grid of comparable detail. The trade-off is that every pixel requires many network evaluations along its ray, which is why inference speed dominates NeRF rendering cost.
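A toy version of this query can be sketched as follows. It is a sketch under stated assumptions, not the architecture of any particular NeRF implementation: the class name `TinyRadianceField`, the layer sizes, the frequency counts, the absence of bias terms, and the random untrained weights are all choices made for illustration. It shows only the shape of the inference step, with density depending on position and color depending on position and direction.

```python
import numpy as np

def positional_encoding(x, n_freqs):
    """Map each coordinate to [x, sin(2^k x), cos(2^k x)] for k < n_freqs."""
    feats = [x]
    for k in range(n_freqs):
        feats.append(np.sin((2.0 ** k) * x))
        feats.append(np.cos((2.0 ** k) * x))
    return np.concatenate(feats, axis=-1)

class TinyRadianceField:
    """Untrained toy MLP: (position, view direction) -> (rgb, density)."""

    def __init__(self, hidden=64, pos_freqs=6, dir_freqs=2, seed=0):
        rng = np.random.default_rng(seed)
        self.pos_freqs, self.dir_freqs = pos_freqs, dir_freqs
        pos_dim = 3 * (1 + 2 * pos_freqs)
        dir_dim = 3 * (1 + 2 * dir_freqs)
        # Random weights, no biases: placeholders for trained parameters.
        self.w1 = rng.normal(0.0, 0.1, (pos_dim, hidden))
        self.w2 = rng.normal(0.0, 0.1, (hidden, hidden))
        self.w_sigma = rng.normal(0.0, 0.1, (hidden, 1))
        self.w_rgb = rng.normal(0.0, 0.1, (hidden + dir_dim, 3))

    def __call__(self, positions, directions):
        h = np.maximum(positional_encoding(positions, self.pos_freqs) @ self.w1, 0.0)
        h = np.maximum(h @ self.w2, 0.0)
        # Density depends on position only; softplus keeps it non-negative.
        sigma = np.log1p(np.exp(h @ self.w_sigma))[..., 0]
        # Color also sees the encoded view direction (view-dependent effects).
        d = positional_encoding(directions, self.dir_freqs)
        logits = np.concatenate([h, d], axis=-1) @ self.w_rgb
        rgb = 1.0 / (1.0 + np.exp(-logits))  # sigmoid maps color into (0, 1)
        return rgb, sigma

    def n_params(self):
        return sum(w.size for w in (self.w1, self.w2, self.w_sigma, self.w_rgb))

field = TinyRadianceField()
positions = np.random.default_rng(1).uniform(-1.0, 1.0, (128, 3))
directions = np.tile(np.array([0.0, 0.0, 1.0]), (128, 1))
rgb, sigma = field(positions, directions)  # one batched query for 128 samples
```

The batched call is the important design point: all samples along a ray, and in practice across many rays, are pushed through the network together, and `field.n_params()` gives a rough sense of how small the stored representation is compared with a dense grid holding four values per voxel.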

Source and External Links

What Is Volume Rendering? - Autodesk - Volume rendering is a graphics technique for visualizing internal structures of 3D data sets by directly working with volumetric data, as opposed to only rendering surfaces, making it essential for semi-transparent materials and effects.

3D Visualization and Volume Rendering - The yt Project - Volume rendering in yt involves ray casting through 3D data with user-defined transfer functions, allowing visualization of both opaque and transparent structures, all controllable through a programmable scene setup.

Volume rendering - Wikipedia - Volume rendering techniques project a 2D image from a 3D sampled data set by mapping each data value to color and opacity, often using ray casting to sample and composite values along rays for each pixel.
